One-click shell script for deploying a single-node pseudo-distributed ZooKeeper, Hadoop and HBase cluster

ardh.1.0 installation guide

Note: the script's tgz package will be uploaded later. Feel free to leave a comment to discuss it with me.

I. Usage

1. By default the script installs three packages: zookeeper-3.4.13, hadoop-2.7.3 and hbase-1.2.6.

2. The script has been tested and installs correctly on CentOS 6.5 and CentOS 7.4/7.5.

3. The server must have a correct hostname entry in /etc/hosts: the hostname must not be mapped to the loopback address 127.0.0.1. For example:

[root@SHELL2 tgz]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.110.133 SHELL2

4. JDK 1.7 or later must already be installed on the server (JDK 1.8 is strongly recommended). For example:

[root@SHELL2 tgz]# java -version

java version "1.8.0_181"

Java(TM) SE Runtime Environment (build 1.8.0_181-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

5. The tar package can be extracted anywhere (command: tar -zxvf ardh.1.0.tgz).

6. As shipped, the script picks up JAVA_HOME from the system automatically, installs to /data/arbd by default, and installs ZooKeeper.

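7. To change any of these, edit the three parameters below. During installation the precedence is: input typed into the script > config file > value detected by the script.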

8. Edit the JAVA_HOME parameter in ardh-hbase/conf/jvm.conf (if left empty, the system JAVA_HOME environment variable is used).

[root@SHELL2 tgz]# cd ardh-hbase/

[root@SHELL2 ardh-hbase]# vi conf/jvm.conf

JAVA_HOME=

After editing, save and quit with :wq.

9. Edit the installation path parameter arbdzhDir in ardh-hbase/conf/env.conf (if left empty, the software is installed under /data/arbd).

[root@SHELL2 ardh-hbase]# vi conf/env.conf

# Installation path of the software: an absolute path without a trailing /

arbdzhDir=

# Whether to install ZooKeeper: true to install, false to skip

setupZK=true

After editing, save and quit with :wq.

10. To choose whether ZooKeeper is installed, set setupZK in ardh-hbase/conf/env.conf to true or false.

Edit it the same way as in item 9.

11. Run the script as root, and run it with source (command: source ./setup.sh).

[root@SHELL2 ardh-hbase]# su - root

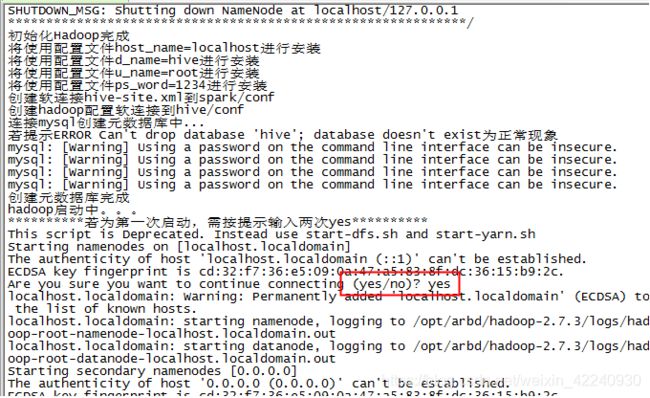

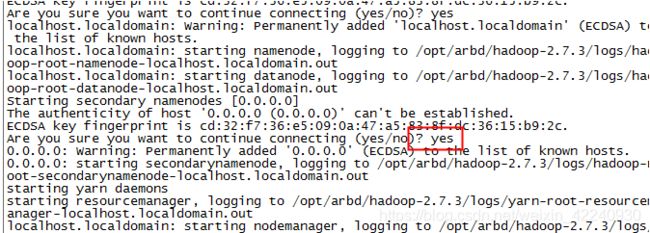

[root@SHELL2 ardh-hbase]# source ./setup.sh

12. From there, follow the script's prompts. Note that the first Hadoop installation asks you to type yes twice:

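13. As long as the installation path is unchanged, you can reinstall the whole stack by running ./bin/stop.sh in the installation directory to stop the cluster and then running setup.sh again. If you change the installation path, first remove the ZooKeeper, Hadoop and HBase environment variables from /etc/profile by hand, then run setup.sh.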

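14. If installation still fails after following the steps above, possible causes are:

(1) Misconfigured environment variables: check /etc/profile

(2) Malformed configuration files: check jvm.conf and env.conf

(3) The script was not run from the directory that contains setup.sh: change back to that directory

(4) Port 8080, 8088 or 2181 is already in use on the server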
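Items 3 and 14(4) can be checked before running setup.sh. The sketch below uses hypothetical helpers that are not part of ardh.1.0. `hostname_on_loopback` looks for the hostname on a `127.0.0.1` line of a hosts file. `port_listening` reads `netstat -tln` output and compares the port number exactly, which avoids the false positives a plain `grep 2181` can produce (port 21810, or a PID containing 2181). It assumes net-tools' column layout, with the local address in field 4 and the state in field 6.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helpers (not part of ardh.1.0).

# hostname_on_loopback HOSTS_FILE NAME
# Exit 0 if NAME is listed on a 127.0.0.1 line, i.e. requirement 3 is violated.
hostname_on_loopback() {
  awk -v n="$2" '
    $1 == "127.0.0.1" { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
    END { exit (found ? 0 : 1) }
  ' "$1"
}

# port_listening PORT
# Read `netstat -tln` output on stdin; exit 0 if a TCP socket in LISTEN
# state has exactly PORT as the port part of its local address (field 4).
port_listening() {
  awk -v p="$1" '
    $6 == "LISTEN" { n = split($4, a, ":"); if (a[n] == p) hit = 1 }
    END { exit (hit ? 0 : 1) }
  '
}
```

For example, `hostname_on_loopback /etc/hosts "$(hostname)" && echo "fix /etc/hosts first"`, or `for p in 2181 8080 8088; do netstat -tln | port_listening "$p" && echo "port $p is in use"; done`.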

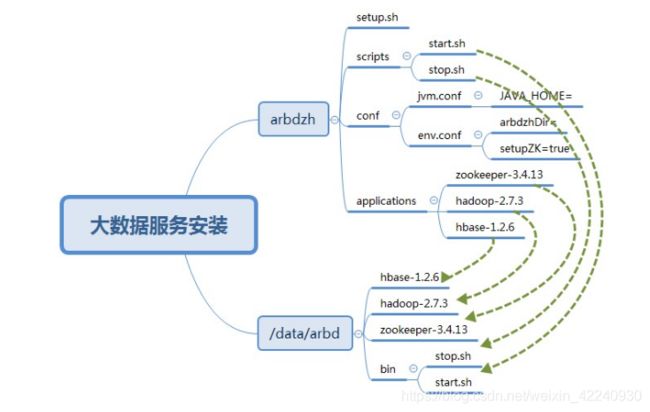
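Item 7's precedence rule (typed input > config file > auto-detected value) comes down to taking the first non-empty candidate. The following is a minimal sketch of that rule. `read_conf` and `resolve` are hypothetical helper names. `read_conf` also strips the carriage returns a config file edited on Windows may contain, as setup.sh does with `sed 's/\r//'`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers illustrating the precedence
# interactive input > config file > auto-detected default.

# read_conf FILE KEY: print the value of "KEY=value" from FILE, minus CRs.
read_conf() {
  awk -F'=' -v k="$2" '
    $1 == k { sub(/^[^=]*=/, ""); gsub(/\r/, ""); print; exit }
  ' "$1"
}

# resolve CANDIDATE...: print the first non-empty candidate.
resolve() {
  local v
  for v in "$@"; do
    if [ -n "$v" ]; then
      printf '%s\n' "$v"
      return 0
    fi
  done
  return 1
}
```

With the script's own names this would read `arbdzhDir=$(resolve "$read2" "$(read_conf ./conf/env.conf arbdzhDir)" /data/arbd)`, where `read2` holds what the user typed.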

II. Installation layout

III. Configuration

In the configuration below, the script replaces every variable prefixed with $ with the actual value for the current server.
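setup.sh itself patches each file in place with targeted `sed` commands (see the script below). If you keep the listings below as templates instead, substituting the `${arbdzhDir}` and `$hostname` placeholders can be sketched as follows. `render_template` is a hypothetical helper. It assumes the substituted values contain no `|`, `&` or `\`, because `sed` treats those specially in a replacement.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render a config template by replacing the
# ${arbdzhDir} and $hostname placeholders used in the listings.
# Assumes DIR and HOST contain no '|', '&' or '\' (special to sed).

# render_template TEMPLATE DIR HOST  -> rendered text on stdout
render_template() {
  sed -e 's|\${arbdzhDir}|'"$2"'|g' \
      -e 's|\$hostname|'"$3"'|g' \
      "$1"
}
```

Usage (the template file name is illustrative): `render_template zoo.cfg.tpl "$arbdzhDir" "$(hostname)" > zoo.cfg`.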

(I) ZooKeeper

1. zoo.cfg

autopurge.snapRetainCount=3

autopurge.purgeInterval=1

dataDir=${arbdzhDir}/zookeeper-3.4.13/data

dataLogDir=${arbdzhDir}/zookeeper-3.4.13/logs

server.1=$hostname:2888:3888

2. myid

1

3. zkEnv.sh (change where zookeeper.out is written)

if [ "x${ZOO_LOG_DIR}" = "x" ]

then

ZOO_LOG_DIR="."

fi

Change it to:

if [ "x${ZOO_LOG_DIR}" = "x" ]

then

ZOO_LOG_DIR="$ZOOKEEPER_PREFIX/logs"

fi

Note: create the data and logs directories.
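For a single node, the ZooKeeper pieces above can be prepared as in this sketch: the data and logs directories, a `myid` containing 1 inside `dataDir`, and the zoo.cfg keys. `init_zk_node` is hypothetical, and the prefix stands in for `${arbdzhDir}/zookeeper-3.4.13`. The listing above shows only the changed keys, so `tickTime`, `initLimit`, `syncLimit` and `clientPort` are assumed to keep the values from ZooKeeper's `zoo_sample.cfg`.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: lay out a single-node ZooKeeper under PREFIX.
# tickTime/initLimit/syncLimit/clientPort are assumed zoo_sample.cfg defaults.

# init_zk_node PREFIX HOST
init_zk_node() {
  local prefix=$1 host=$2
  # data holds snapshots and myid, logs holds transaction logs
  mkdir -p "$prefix/data" "$prefix/logs" "$prefix/conf"
  # myid must match the N in server.N
  echo 1 > "$prefix/data/myid"
  cat > "$prefix/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
clientPort=2181
dataDir=$prefix/data
dataLogDir=$prefix/logs
autopurge.snapRetainCount=3
autopurge.purgeInterval=1
server.1=$host:2888:3888
EOF
}
```

For example: `init_zk_node "$arbdzhDir/zookeeper-3.4.13" "$(hostname)"`.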

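(II) Hadoop

1. core-site.xml

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://$hostname:8020</value>
</property>
<property>
  <name>hadoop.tmp.dir</name>
  <value>${arbdzhDir}/hadoop-2.7.3/data/tmp</value>
</property>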
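Each `<value>` sits on the line after its `<name>`, so a value can be rewritten by matching the name and then editing the following line. This appears to be what setup.sh's `sed '/>name...'` commands do. The sketch below is a slightly more defensive version using a hypothetical `set_xml_value` and assuming GNU `sed -i`. It replaces only the `<value>...</value>` part, so indentation survives. It also assumes one element per line and a value without `|`, `&` or `\`.

```shell
#!/usr/bin/env bash
# Hypothetical helper; assumes GNU sed (-i without a suffix argument),
# one XML element per line, and a VALUE without '|', '&' or '\'.

# set_xml_value FILE NAME VALUE
# On the line after <name>NAME</name>, replace <value>...</value>.
set_xml_value() {
  sed -i '/<name>'"$2"'<\/name>/{n;s|<value>.*</value>|<value>'"$3"'</value>|}' "$1"
}
```

For example: `set_xml_value "$arbdzhDir/hadoop-2.7.3/etc/hadoop/core-site.xml" fs.defaultFS "hdfs://$(hostname):8020"`.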

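2. hadoop-env.sh

export JAVA_HOME=$JAVA_HOME

export HADOOP_CONF_DIR=${arbdzhDir}/hadoop-2.7.3/etc/hadoop/

3. hdfs-site.xml

<property>
  <name>dfs.replication</name>
  <value>1</value>
</property>
<property>
  <name>dfs.namenode.name.dir</name>
  <value>${arbdzhDir}/hadoop-2.7.3/data/namenode</value>
</property>
<property>
  <name>dfs.datanode.data.dir</name>
  <value>${arbdzhDir}/hadoop-2.7.3/data/datanode</value>
</property>
<property>
  <name>dfs.permissions</name>
  <value>false</value>
</property>
<property>
  <name>dfs.webhdfs.enabled</name>
  <value>true</value>
</property>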

4. mapred-env.sh

export JAVA_HOME=$JAVA_HOME

5. mapred-site.xml

<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>

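6. slaves

$hostname

7. yarn-env.sh

export JAVA_HOME=$JAVA_HOME

8. yarn-site.xml

<property>
  <name>yarn.resourcemanager.address</name>
  <value>$hostname:8032</value>
</property>
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>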

9. hadoop-daemon.sh

HADOOP_PID_DIR=${arbdzhDir}/hadoop-2.7.3/pids

10. yarn-daemon.sh

YARN_PID_DIR=${arbdzhDir}/hadoop-2.7.3/pids

(III) HBase

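1. hbase-env.sh

export JAVA_HOME=$JAVA_HOME

export HBASE_MANAGES_ZK=false

Note: HBASE_MANAGES_ZK controls whether HBase manages ZooKeeper itself. With the default value true, HBase tries to stop ZooKeeper when it shuts down and warns: no zookeeper to stop because no pid file /tmp/hbase-root-zookeeper.pid

See the official HBase documentation.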
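The `*-env.sh` settings above are plain `export VAR=value` lines. The stock hbase-env.sh ships lines such as `# export HBASE_MANAGES_ZK=true` commented out, so a helper that both uncomments and rewrites such a line is handy. The sketch below uses a hypothetical `set_export` and assumes GNU `sed -i -E`. It rewrites an existing, possibly commented-out, export line, or appends one if none exists.

```shell
#!/usr/bin/env bash
# Hypothetical helper; assumes GNU sed (-i, -E) and a VALUE without '|', '&' or '\'.

# set_export FILE VAR VALUE
# Rewrite an existing (possibly commented-out) "export VAR=..." line in
# FILE, or append "export VAR=VALUE" if there is none.
set_export() {
  local file=$1 var=$2 value=$3
  if grep -Eq "^[#[:space:]]*export[[:space:]]+${var}=" "$file"; then
    sed -i -E "s|^[#[:space:]]*export[[:space:]]+${var}=.*|export ${var}=${value}|" "$file"
  else
    printf 'export %s=%s\n' "$var" "$value" >> "$file"
  fi
}
```

For example: `set_export "$arbdzhDir/hbase-1.2.6/conf/hbase-env.sh" HBASE_MANAGES_ZK false`.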

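2. hbase-site.xml

<property>
  <name>hbase.rootdir</name>
  <value>hdfs://$hostname:8020/hbase</value>
</property>
<property>
  <name>hbase.cluster.distributed</name>
  <value>true</value>
</property>
<property>
  <name>hbase.zookeeper.quorum</name>
  <value>$hostname</value>
</property>
<property>
  <name>dfs.replication</name>
  <value>1</value>
</property>
<property>
  <name>hbase.zookeeper.property.clientPort</name>
  <value>2181</value>
</property>
<property>
  <name>hbase.regionserver.port</name>
  <value>60020</value>
</property>
<property>
  <name>hbase.master.port</name>
  <value>60000</value>
</property>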
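`hbase.rootdir` has to live on the same NameNode that `fs.defaultFS` in core-site.xml points to (here `hdfs://$hostname:8020`). The check below is hypothetical. It assumes the one-element-per-line layout shown above and an `fs.defaultFS` without a trailing slash, then reads both values and compares the prefix.

```shell
#!/usr/bin/env bash
# Hypothetical consistency check; assumes <value> is on the line after <name>.

# get_xml_value FILE NAME: print the <value> following <name>NAME</name>.
get_xml_value() {
  sed -n '/<name>'"$2"'<\/name>/{n;s|.*<value>\(.*\)</value>.*|\1|p}' "$1"
}

# rootdir_on_defaultfs CORE_SITE HBASE_SITE
# Exit 0 if hbase.rootdir starts with "<fs.defaultFS>/".
rootdir_on_defaultfs() {
  local fs root
  fs=$(get_xml_value "$1" fs.defaultFS)
  root=$(get_xml_value "$2" hbase.rootdir)
  # Stripping the prefix changes the string only if the prefix is present.
  [ -n "$fs" ] && [ "${root#"$fs"/}" != "$root" ]
}
```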

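3. regionservers

$hostname

4. hbase-env.sh

export HBASE_PID_DIR=${arbdzhDir}/hbase-1.2.6/pids

5. Make Hadoop's hdfs-site.xml available under hbase/conf

ln -sf ${arbdzhDir}/hadoop-2.7.3/etc/hadoop/hdfs-site.xml ${arbdzhDir}/hbase-1.2.6/conf/hdfs-site.xml

Note: the HBase reference guide says that if you have made HDFS client configuration changes on your Hadoop cluster (such as configuration directives for HDFS clients), as opposed to server-side configuration, you must make HBase see and use those changes. Linking hdfs-site.xml into HBase's conf directory, as above, is one of the methods it lists.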
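A symlink rather than a copy means later edits to Hadoop's hdfs-site.xml reach HBase without another copy step. The sketch below uses a hypothetical `link_hdfs_site`. It runs the same `ln -sf` as the script, but first checks that the source file exists and afterwards confirms where the link points.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the script's `ln -sf`, with two checks added.

# link_hdfs_site HADOOP_CONF_DIR HBASE_CONF_DIR
link_hdfs_site() {
  local src=$1/hdfs-site.xml dst=$2/hdfs-site.xml
  if [ ! -f "$src" ]; then
    echo "missing $src" >&2
    return 1
  fi
  ln -sf "$src" "$dst"
  # The link should point at the Hadoop file itself.
  [ "$(readlink "$dst")" = "$src" ]
}
```

For example: `link_hdfs_site "$arbdzhDir/hadoop-2.7.3/etc/hadoop" "$arbdzhDir/hbase-1.2.6/conf"`.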

IV. Full script source

#!/bin/bash

check_javahome(){

check_results=`java -version 2>&1`

if [[ $check_results =~ 'version "1.8' ]]

then

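echo "Current JDK version is 1.8: OK"

elif [[ $check_results =~ 'version "1.7' ]]

then

echo "Current JDK version is 1.7: OK"

else

echo "Please install JDK 1.8"

read -p "Press Enter to exit" aaa

clear

# break is meaningless outside a loop; return a failure status instead
return 1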

fi

if [ "$JAVA_HOME" = "" ]

then

echo "JAVA_HOME is not set. Configure it manually in /etc/profile."

read -p "Press Enter to exit" bbb

clear

exit 1

fi

if [ "$JAVA_HOME" != "" ]

then

JAVA_HOME=$JAVA_HOME

echo "JAVA_HOME:$JAVA_HOME"

fi

}

input_javahome(){

chmod 777 ./conf/jvm.conf

# The config file is parsed rather than sourced so that it does not clash with the system JAVA_HOME variable

#source ./conf/jvm.conf

JAVAHOME=`cat ./conf/jvm.conf | grep JAVA_HOME | awk -F'=' '{ print $2 }'`

if [ -z "$JAVAHOME" ]

then

echo "JAVA_HOME is not set in ./conf/jvm.conf"

echo "Default: JAVA_HOME=$JAVA_HOME"

check_javahome

JAVA_HOME=$JAVA_HOME

sleep 1

else

echo "Installing with the JAVA_HOME from jvm.conf: $JAVAHOME"

JAVA_HOME=$JAVAHOME

sleep 1

fi

echo "Enter and apply a new JAVA_HOME? (Ctrl+Backspace deletes)"

echo "Yes ==> type the new JAVA_HOME   No ==> just press Enter"

read read1

if [ "$read1" = "" ]

then

JAVA_HOME=$JAVA_HOME

else

while !([[ "$read1" =~ ^/ ]])

do

echo "Invalid path, please enter an absolute path"

read read1

done

JAVA_HOME=$read1

echo "Config file jvm.conf updated"

echo "Installing with the new JAVA_HOME=$JAVA_HOME"

sed -i 's#JAVA_HOME.*#JAVA_HOME='"$JAVA_HOME"'#g' ./conf/jvm.conf

fi

}

input_arbdzhDir(){

chmod 777 ./conf/env.conf

source ./conf/env.conf

# Strip carriage returns; CRLF line endings would otherwise break later steps

arbdzhDir=$(echo $arbdzhDir | sed 's/\r//')

if [ -z "$arbdzhDir" ]

then

echo $arbdzhDir

echo "Installation path is not set in ./conf/env.conf"

echo "Installing to the default path /data/arbd"

arbdzhDir=/data/arbd

sleep 1

else

echo "Reading config file env.conf"

echo "Installing with arbdzhDir=$arbdzhDir from the config file"

arbdzhDir=$(echo $arbdzhDir | sed 's/\r//')

sleep 1

fi

echo "Enter a new installation path? (Ctrl+Backspace deletes)"

echo "Yes ==> type an absolute path (no trailing /)   No ==> just press Enter"

read read2

if [ "$read2" = "" ]

then

arbdzhDir=$(echo $arbdzhDir | sed 's/\r//')

else

while !([[ "$read2" =~ ^/ ]])

do

echo "Invalid path, please enter an absolute path"

read read2

done

arbdzhDir=$read2

echo "Config file env.conf updated"

echo "Installing with the new path arbdzhDir=$arbdzhDir"

sed -i 's#arbdzhDir.*#arbdzhDir='"$arbdzhDir"'#g' ./conf/env.conf

fi

sleep 1

if [ -d "$arbdzhDir" ] ; then

echo "Directory $arbdzhDir exists."

else

echo "Directory $arbdzhDir does not exist, creating it..."

arbdzhDir=$(echo $arbdzhDir | sed 's/\r//')

mkdir -p $arbdzhDir

fi

arbdzhDir=$(echo $arbdzhDir | sed 's/\r//')

sleep 1

setupZK=$(echo $setupZK | sed 's/\r//')

if [ "$setupZK" = "true" ]

then

echo "setupZK=$setupZK in the config file: ZooKeeper will be installed"

else

echo "setupZK=$setupZK in the config file: ZooKeeper will not be installed"

fi

chmod 777 ./scripts/start.sh

chmod 777 ./scripts/stop.sh

sed -i 's#setupZK=.*#setupZK='"$setupZK"'#g' ./scripts/start.sh

sed -i 's#setupZK=.*#setupZK='"$setupZK"'#g' ./scripts/stop.sh

sleep 1

sed -i 's#arbdzhDir=.*#arbdzhDir='"$arbdzhDir"'#g' ./scripts/start.sh

sed -i 's#arbdzhDir=.*#arbdzhDir='"$arbdzhDir"'#g' ./scripts/stop.sh

sleep 1

# For each property below: find the <name> line, move to the next line (n) and replace it with the new <value> (assumes one element per line)
sed -i '/>hadoop.tmp.dir</{n;s|.*|<value>'"$arbdzhDir"'/hadoop-2.7.3/data/tmp</value>|}' ./applications/hadoop-2.7.3/etc/hadoop/core-site.xml

sed -i 's#HADOOP_CONF_DIR=.*#HADOOP_CONF_DIR='"$arbdzhDir"'/hadoop-2.7.3/etc/hadoop/#g' ./applications/hadoop-2.7.3/etc/hadoop/hadoop-env.sh

sed -i '/>dfs.namenode.name.dir</{n;s|.*|<value>'"$arbdzhDir"'/hadoop-2.7.3/data/namenode</value>|}' ./applications/hadoop-2.7.3/etc/hadoop/hdfs-site.xml

sleep 0.5

sed -i '/>dfs.datanode.data.dir</{n;s|.*|<value>'"$arbdzhDir"'/hadoop-2.7.3/data/datanode</value>|}' ./applications/hadoop-2.7.3/etc/hadoop/hdfs-site.xml

sed -i 's#dataDir=.*#dataDir='"$arbdzhDir"'/zookeeper-3.4.13/data#g' ./applications/zookeeper-3.4.13/conf/zoo.cfg

sleep 0.5

sed -i 's#dataLogDir=.*#dataLogDir='"$arbdzhDir"'/zookeeper-3.4.13/logs#g' ./applications/zookeeper-3.4.13/conf/zoo.cfg

sed -i 's#export HBASE_PID_DIR=.*#export HBASE_PID_DIR='"$arbdzhDir"'/hbase-1.2.6/pids#g' ./applications/hbase-1.2.6/conf/hbase-env.sh

sed -i 's#HADOOP_PID_DIR=.*#HADOOP_PID_DIR='"$arbdzhDir"'/hadoop-2.7.3/pids#' ./applications/hadoop-2.7.3/sbin/hadoop-daemon.sh

sed -i 's#YARN_PID_DIR=.*#YARN_PID_DIR='"$arbdzhDir"'/hadoop-2.7.3/pids#' ./applications/hadoop-2.7.3/sbin/yarn-daemon.sh

}

check_user(){

if [ "$EUID" == "0" ]

then

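echo "Current user is root"

else

echo "Please switch to the root user"

read -p "Press Enter to exit"

clear

# break is meaningless outside a loop; return a failure status instead
return 1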

fi

}

check_fwall(){

service iptables status 1>/dev/null 2>&1

if [[ $? -ne 0 && `firewall-cmd --state` != 'running' ]]; then

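echo "Firewall is already off"

else

read -p "The firewall is running. Press Enter to disable the firewall and SELinux" comde

echo "Disabling the firewall..."

echo "---- Messages such as 'xxx not (be) found' or 'No such file or directory' are expected here ----"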

/sbin/service iptables stop

/sbin/chkconfig iptables off

/sbin/service iptables status

systemctl stop firewalld.service

systemctl disable firewalld.service

firewall-cmd --state

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

echo "Firewall disabled-------------------------------------------------------"

fi

}

check_port(){

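# Match ":2181 " in the address columns so that a PID or a port such as 21810 does not trigger a false alarm
if netstat -tlpn | grep -q ':2181 '

then

read -p "A ZooKeeper process was detected. Stop or uninstall the existing ZooKeeper first (Ctrl+C to quit)" abc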

exit

fi

}

auth_load(){

read -p "Configure passwordless SSH login for this node? (y/n)" startnow

while !([ "$startnow" = "Y" ]||[ "$startnow" = "y" ]||[ "$startnow" = "N" ]||[ "$startnow" = "n" ])

do

echo "Invalid input, please enter y or n (either case)"

read startnow

done

if [ "$startnow" = "Y" ]||[ "$startnow" = "y" ]

then

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

fi

}

env_export(){

if grep -q '$HADOOP_HOME/bin' /etc/profile && grep -q '$HADOOP_HOME/sbin' /etc/profile && grep -q '$HBASE_HOME/bin' /etc/profile

then

echo 'Environment variables are already configured'

elif grep -q '$JAVA_HOME/bin:' /etc/profile

then

echo "Adding Hadoop environment variables..."

sed -i '/JAVA_HOME=/a\zrarbd-' /etc/profile

sleep 1

if [ "$setupZK" = "true" ]

then

echo "Adding HBase and ZooKeeper environment variables..."

sed -i 's#zrarbd-#HADOOP_HOME='"$arbdzhDir"'/hadoop-2.7.3\nHBASE_HOME='"$arbdzhDir"'/hbase-1.2.6\nZOOKEEPER_HOME='"$arbdzhDir"'/zookeeper-3.4.13#' /etc/profile

#sed -i 's#zrarbd-#HADOOP_HOME='"$arbdzhDir"'/hadoop-2.7.3#' /etc/profile

sleep 1

sed -i 's#$JAVA_HOME/bin:#$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin:$ZOOKEEPER_HOME/bin:#g' /etc/profile

sleep 1

sed -i '/$JAVA_HOME\/bin:/a\export HADOOP_HOME HBASE_HOME ZOOKEEPER_HOME' /etc/profile

sleep 0.5

source /etc/profile

echo "Environment variables applied"

else

echo "Adding HBase environment variables..."

sed -i 's#zrarbd-#HADOOP_HOME='"$arbdzhDir"'/hadoop-2.7.3\nHBASE_HOME='"$arbdzhDir"'/hbase-1.2.6#' /etc/profile

sleep 1

sed -i 's#$JAVA_HOME/bin:#$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin:#g' /etc/profile

sleep 1

sed -i '/$JAVA_HOME\/bin:/a\export HADOOP_HOME HBASE_HOME' /etc/profile

sleep 0.5

source /etc/profile

echo "Environment variables applied"

fi

elif grep -q ':$JAVA_HOME/bin' /etc/profile

then

echo "Adding Hadoop environment variables..."

sed -i '/JAVA_HOME=/a\zrarbd-' /etc/profile

sleep 1

if [ "$setupZK" = "true" ]

then

echo "Adding HBase and ZooKeeper environment variables..."

sed -i 's#zrarbd-#HADOOP_HOME='"$arbdzhDir"'/hadoop-2.7.3\nHBASE_HOME='"$arbdzhDir"'/hbase-1.2.6\nZOOKEEPER_HOME='"$arbdzhDir"'/zookeeper-3.4.13#' /etc/profile

#sed -i 's#zrarbd-#HADOOP_HOME='"$arbdzhDir"'/hadoop-2.7.3#' /etc/profile

sleep 1

sed -i 's#:$JAVA_HOME/bin#:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin:$ZOOKEEPER_HOME/bin#g' /etc/profile

sleep 1

sed -i '/:$JAVA_HOME\/bin/a\export HADOOP_HOME HBASE_HOME ZOOKEEPER_HOME' /etc/profile

sleep 1

source /etc/profile

echo "Environment variables applied"

else

echo "Adding HBase environment variables..."

sed -i 's#zrarbd-#HADOOP_HOME='"$arbdzhDir"'/hadoop-2.7.3\nHBASE_HOME='"$arbdzhDir"'/hbase-1.2.6#' /etc/profile

#sed -i 's#zrarbd-#HADOOP_HOME='"$arbdzhDir"'/hadoop-2.7.3#' /etc/profile

sleep 1

sed -i 's#:$JAVA_HOME/bin#:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin#g' /etc/profile

sleep 1

sed -i '/:$JAVA_HOME\/bin/a\export HADOOP_HOME HBASE_HOME' /etc/profile

sleep 1

source /etc/profile

echo "Environment variables applied"

fi

else

echo 'Error: configure JAVA_HOME and PATH correctly.'

fi

}

hadoop_format(){

if [ -d "$arbdzhDir/hadoop-2.7.3/data/datanode" ] ; then

echo "$arbdzhDir/hadoop-2.7.3/data/datanode already exists, clearing its contents"

rm -rf $arbdzhDir/hadoop-2.7.3/data/datanode/*

ls $arbdzhDir/hadoop-2.7.3/data/datanode/

fi

if [ -d "$arbdzhDir/hadoop-2.7.3/data/namenode" ] ; then

echo "$arbdzhDir/hadoop-2.7.3/data/namenode already exists, clearing its contents"

rm -rf $arbdzhDir/hadoop-2.7.3/data/namenode/*

ls $arbdzhDir/hadoop-2.7.3/data/namenode/

fi

rm -rf $arbdzhDir/hadoop-2.7.3/data/tmp/*

ls $arbdzhDir/hadoop-2.7.3/data/tmp/

sleep 1

echo "Temporary files deleted"

sleep 1

echo "Formatting the HDFS NameNode"

sleep 1

$arbdzhDir/hadoop-2.7.3/bin/hdfs namenode -format

echo "HDFS NameNode format finished"

sleep 1

}

zk_conf(){

echo "Configuring ZooKeeper..."

sed -i "s/server.1=.*/server.1=$(hostname):2888:3888/" $arbdzhDir/zookeeper-3.4.13/conf/zoo.cfg

}

hadoop_conf(){

echo "Configuring Hadoop..."

sed -i "/>fs.defaultFS</{n;s|.*|<value>hdfs://$(hostname):8020</value>|}" $arbdzhDir/hadoop-2.7.3/etc/hadoop/core-site.xml

sleep 0.5

sed -i "/>yarn.resourcemanager.address</{n;s|.*|<value>$(hostname):8032</value>|}" $arbdzhDir/hadoop-2.7.3/etc/hadoop/yarn-site.xml

sleep 0.5

sed -i "s/localhost/$(hostname)/" $arbdzhDir/hadoop-2.7.3/etc/hadoop/slaves

sleep 0.5

sed -i "s#^export JAVA_HOME=.*#export JAVA_HOME=$JAVA_HOME#" $arbdzhDir/hadoop-2.7.3/etc/hadoop/hadoop-env.sh

sleep 1

sed -i "s#^export JAVA_HOME=.*#export JAVA_HOME=$JAVA_HOME#" $arbdzhDir/hadoop-2.7.3/etc/hadoop/mapred-env.sh

sleep 1

sed -i "s#^export JAVA_HOME=.*#export JAVA_HOME=$JAVA_HOME#" $arbdzhDir/hadoop-2.7.3/etc/hadoop/yarn-env.sh

sleep 1

ln -sf $arbdzhDir/hadoop-2.7.3/etc/hadoop/hdfs-site.xml $arbdzhDir/hbase-1.2.6/conf/hdfs-site.xml

}

hbase_conf(){

arbdzhDir=$(echo $arbdzhDir | sed 's/\r//')

echo "Configuring HBase..."

sed -i "s/localhost/$(hostname)/" $arbdzhDir/hbase-1.2.6/conf/regionservers

sleep 0.5

sed -i "s!export JAVA_HOME=.*!export JAVA_HOME=${JAVA_HOME}!" $arbdzhDir/hbase-1.2.6/conf/hbase-env.sh

sleep 0.5

sed -i "/>hbase.rootdir</{n;s|.*|<value>hdfs://$(hostname):8020/hbase</value>|}" $arbdzhDir/hbase-1.2.6/conf/hbase-site.xml

sleep 0.5

sed -i "/>hbase.zookeeper.quorum</{n;s|.*|<value>$(hostname)</value>|}" $arbdzhDir/hbase-1.2.6/conf/hbase-site.xml

}

check_cp(){

arbdzhDir=$(echo $arbdzhDir | sed 's/\r//')

if [[ $(jps) =~ " NameNode" ]] || [[ $(jps) =~ "ResourceManager" ]] || [[ $(jps) =~ "HRegionServer" ]]

then

echo "Stopping the cluster..."

source $arbdzhDir/bin/stop.sh

fi

sleep 1

if [ -d "$arbdzhDir" ] ; then

echo "Directory $arbdzhDir exists."

else

echo "Directory $arbdzhDir does not exist, creating it..."

mkdir -p $arbdzhDir

fi

if [ -d "$arbdzhDir/bin" ] ; then

echo "Directory $arbdzhDir/bin exists."

else

echo "Directory $arbdzhDir/bin does not exist, creating it..."

arbdzhDir=$(echo $arbdzhDir | sed 's/\r//')

mkdir -p $arbdzhDir/bin

fi

if [ "$setupZK" = "true" ]

then

echo "Copying ZooKeeper to $arbdzhDir..."

\cp -rf ./applications/zookeeper-3.4.13 $arbdzhDir/

sleep 1

fi

echo "Copying Hadoop to $arbdzhDir..."

\cp -rf ./applications/hadoop-2.7.3 $arbdzhDir/

sleep 1

echo "Copying HBase to $arbdzhDir..."

\cp -rf ./applications/hbase-1.2.6 $arbdzhDir/

echo "Copying start.sh/stop.sh to $arbdzhDir/bin..."

\cp -rf ./scripts/start.sh $arbdzhDir/bin/

\cp -rf ./scripts/stop.sh $arbdzhDir/bin/

echo "Done"

}

run_test(){

arbdzhDir=$(echo $arbdzhDir | sed 's/\r//')

#sh zkCli.sh

echo "Testing HDFS (no errors means it works), please wait..."

$arbdzhDir/hadoop-2.7.3/bin/hdfs dfs -mkdir /testInput

sleep 1

$arbdzhDir/hadoop-2.7.3/bin/hdfs dfs -put $arbdzhDir/hadoop-2.7.3/README.txt /testInput

sleep 1

echo "Running a MapReduce test job, please wait..."

$arbdzhDir/hadoop-2.7.3/bin/yarn jar $arbdzhDir/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount /testInput /testOutput

sleep 1

echo "WordCount test result:"

$arbdzhDir/hadoop-2.7.3/bin/hdfs dfs -cat /testOutput/*

sleep 1

$arbdzhDir/hadoop-2.7.3/bin/hdfs dfs -rm -r /testInput

$arbdzhDir/hadoop-2.7.3/bin/hdfs dfs -rm -r /testOutput

sleep 1

echo "Starting the HBase test; no errors means it works"

echo "status

create 'testtable','colfaml'

list 'testtable'

put 'testtable','myrow-1','colfaml:q1','value-1'

scan 'testtable'

disable 'testtable'

drop 'testtable'" | $arbdzhDir/hbase-1.2.6/bin/hbase shell -n 2>&1

status2=$?

echo "The status was " $status2

if [ $status2 == 0 ]; then

echo "Functional test passed"

else

echo "Functional test failed"

fi

echo "Tests finished"

}

start_now(){

read -p "Keep the cluster running? (y/n)" startnow

while !([ "$startnow" = "Y" ]||[ "$startnow" = "y" ]||[ "$startnow" = "N" ]||[ "$startnow" = "n" ])

do

echo "Invalid input, please enter y or n (either case)"

read startnow

done

#if [ "$startnow" = "Y" ]||[ "$startnow" = "y" ]

#then

# source $arbdzhDir/bin/start.sh

#fi

if [ "$startnow" = "N" ]||[ "$startnow" = "n" ]

then

source $arbdzhDir/bin/stop.sh

fi

}

check_conf(){

echo "***************************** Please confirm before installing *****************************"

echo "*** 1. This script is being run with source (source ./setup.sh)"

echo "*** 2. The config files in the conf directory have been checked (input > config > auto-detection)"

echo "*** 3. The hostname is configured correctly (not mapped to the loopback address 127.0.0.1)"

echo "********************************************************************************"

read -p "Have you done all of the above? (y/n)" startnow

while !([ "$startnow" = "Y" ]||[ "$startnow" = "y" ]||[ "$startnow" = "N" ]||[ "$startnow" = "n" ])

do

echo "Invalid input, please enter y or n (either case)"

read startnow

done

if [ "$startnow" = "Y" ]||[ "$startnow" = "y" ]

then

clear

fi

if [ "$startnow" = "N" ]||[ "$startnow" = "n" ]

then

exit

fi

}

check_conf

check_user

input_javahome

input_arbdzhDir

check_fwall

auth_load

check_cp

if [ "$setupZK" = "true" ]

then

check_port

zk_conf

fi

hadoop_conf

hbase_conf

env_export

hadoop_format

$arbdzhDir/bin/start.sh

run_test

start_now
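Item 11 requires running setup.sh with `source` because the script runs `source /etc/profile` and sets variables that should remain in your current shell. There is a side effect. An `exit` inside a sourced script ends the shell that sourced it, while `return` only ends the sourced script. setup.sh has several `exit` calls, for example in `check_port` and `check_conf`. The self-contained demo below shows both effects using a temporary file and child `bash` processes. The file contents and the `DEMO_HOME` variable are illustrative only.

```shell
#!/usr/bin/env bash
# Hypothetical demo: sourced vs executed scripts, and return vs exit.

demo=$(mktemp)
printf 'DEMO_HOME=/data/arbd\n' > "$demo"

# Executed: the assignment happens in a child process and is lost.
bash "$demo"
echo "after bash:   DEMO_HOME=${DEMO_HOME:-unset}"

# Sourced: the assignment happens in this shell and persists.
. "$demo"
echo "after source: DEMO_HOME=${DEMO_HOME:-unset}"

# `return` ends only the sourced file; the sourcing shell carries on.
printf 'return 1\necho unreachable\n' > "$demo"
survived=$(bash -c '. "$1"; echo "still here (status $?)"' _ "$demo")

# `exit` in a sourced file terminates the shell that sourced it.
printf 'exit 1\necho unreachable\n' > "$demo"
killed=$(bash -c '. "$1"; echo "still here"' _ "$demo")

echo "return: ${survived}"
echo "exit:   ${killed:-<shell terminated>}"
rm -f "$demo"
```

In an interactive terminal, the `exit` case means the terminal session closes. This is why a sourced installer usually stops with `return` instead.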