TensorRT 5.1.5.0 in practice: exploring the do_inference process (Python)


I found some time to look into how do_inference is actually implemented in Python.

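When I first came across TensorRT, its home page said that TensorRT takes a model (and its weights) produced by some framework, optimizes it into an engine, and that running inference with this engine is faster.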

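Concretely, the flow is model->engine->results. Here I mainly want to study the code that builds and uses the engine, i.e. the do_inference step that many demos use. I studied it through the code of TensorRT's onnx_yolo-v3 demo.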

Strictly speaking, inference in this demo proceeds as follows:

```python
# Excerpt from the demo; image, input_image_path and output_shapes are defined earlier in it.
with get_engine(onnx_file_path, engine_file_path) as engine, engine.create_execution_context() as context:
    inputs, outputs, bindings, stream = common.allocate_buffers(engine)
    print('Running inference on image {}...'.format(input_image_path))
    inputs[0].host = image
    trt_outputs = common.do_inference(context, bindings=bindings, inputs=inputs, outputs=outputs, stream=stream)

trt_outputs = [output.reshape(shape) for output, shape in zip(trt_outputs, output_shapes)]
```
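do_inference hands back flat 1-D host arrays, which is why the last line reshapes each one using its entry in output_shapes. The stdlib-only sketch below shows what a row-major (C-order) reshape does to the element order; the helper name `reshape` is hypothetical and it builds nested lists rather than numpy arrays, but numpy's `ndarray.reshape` uses the same order by default.

```python
def reshape(flat, shape):
    """Split a flat sequence into nested lists in row-major (C) order.

    Assumes shape is non-empty and every dimension is positive.
    """
    total = 1
    for dim in shape:
        total *= dim
    if total != len(flat):
        raise ValueError("cannot reshape %d elements into %r" % (len(flat), shape))
    if len(shape) == 1:
        return list(flat)
    step = total // shape[0]  # number of elements in each slice along the first axis
    return [reshape(flat[i * step:(i + 1) * step], shape[1:]) for i in range(shape[0])]


# A toy flat output of 2*3*2 values, like the 1-D host buffer do_inference returns.
flat_output = list(range(12))
print(reshape(flat_output, (2, 3, 2)))
```

The innermost dimension varies fastest, so consecutive values in the flat buffer end up next to each other in the last axis.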

Outline

- get_engine
- engine.create_execution_context()
- common.allocate_buffers()
  - Outside the for loop
  - Inside the for loop
- common.do_inference()
  - Input parameters
    - context
    - bindings
    - inputs, outputs
    - stream
  - Operations performed
    - cuda.memcpy_htod_async()
    - context.execute_async
    - cuda.memcpy_dtoh_async
    - stream.synchronize()

get_engine

```python
import os

import tensorrt as trt

TRT_LOGGER = trt.Logger()


def get_engine(onnx_file_path, engine_file_path=""):
    def build_engine():
        with trt.Builder(TRT_LOGGER) as builder, builder.create_network() as network, trt.OnnxParser(network, TRT_LOGGER) as parser:
            builder.max_workspace_size = 1 << 30  # 1GB
            builder.max_batch_size = 1
            # Parse model file
            if not os.path.exists(onnx_file_path):
                print('ONNX file {} not found, please run yolov3_to_onnx.py first to generate it.'.format(onnx_file_path))
                exit(0)
            print('Loading ONNX file from path {}...'.format(onnx_file_path))
            with open(onnx_file_path, 'rb') as model:
                print('Beginning ONNX file parsing')
                parser.parse(model.read())
            print('Completed parsing of ONNX file')
            print('Building an engine from file {}; this may take a while...'.format(onnx_file_path))
            engine = builder.build_cuda_engine(network)
            print("Completed creating Engine")
            with open(engine_file_path, "wb") as f:
                f.write(engine.serialize())
            return engine

    if os.path.exists(engine_file_path):
        # If a serialized engine exists, use it instead of building an engine.
        print("Reading engine from file {}".format(engine_file_path))
        with open(engine_file_path, "rb") as f, trt.Runtime(TRT_LOGGER) as runtime:
            return runtime.deserialize_cuda_engine(f.read())
    else:
        return build_engine()
```

get_engine() checks whether a serialized engine file already exists. If it does, the engine is loaded directly with the deserialize_cuda_engine method of a trt.Runtime object:

```python
with open(engine_file_path, "rb") as f, trt.Runtime(TRT_LOGGER) as runtime:
    return runtime.deserialize_cuda_engine(f.read())
```

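If it does not exist, build_engine creates a builder, a network and a parser in turn. parser.parse() then parses the ONNX model into the network, builder.build_cuda_engine() builds an engine from the network, and finally engine.serialize() serializes the engine into a binary stream, which is written to the file at engine_file_path.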
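The control flow of get_engine() is a plain "load from cache, else build and cache" pattern. The sketch below reproduces only that flow in pure Python; `load_or_build` is a hypothetical helper, and its `build_fn`, `serialize` and `deserialize` arguments are stand-ins for build_engine(), engine.serialize() and runtime.deserialize_cuda_engine(), not TensorRT calls.

```python
import os


def load_or_build(cache_path, build_fn, serialize, deserialize):
    """Load an object from cache_path if the file exists; otherwise build it,
    write its serialized bytes to cache_path, and return it.

    Same control flow as get_engine(); build_fn, serialize and deserialize are
    hypothetical stand-ins for the TensorRT build/serialize/deserialize steps.
    """
    if os.path.exists(cache_path):
        # Fast path: reuse the previously serialized result.
        with open(cache_path, "rb") as f:
            return deserialize(f.read())
    # Slow path: build once, then store the binary form for the next run.
    obj = build_fn()
    with open(cache_path, "wb") as f:
        f.write(serialize(obj))
    return obj
```

The expensive build runs only on the first call; every later call just reads the file back, which is why the demo is much faster the second time it is run.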

engine.create_execution_context()

This is a prebuilt, closed-source function; its declaration in the C++ header NvInfer.h looks like this:

```cpp
//!
//! \brief Create an execution context.
//!
//! \see IExecutionContext.
//!
virtual IExecutionContext* createExecutionContext() = 0;
```

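It simply creates the context that is needed at runtime to execute inference. (In C++ the context must eventually be released with destroy(); in the Python demo, the with statement takes care of releasing it.)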
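The Python demo never calls destroy() explicitly; it opens the context in a with statement. The sketch below uses a hypothetical class (not a TensorRT type) to show that pattern: `__exit__` runs the cleanup when the block ends, even if the body raises.

```python
class ExecutionContextStub(object):
    """Hypothetical stand-in for an object that owns native resources."""

    def __init__(self, log):
        self.log = log
        self.log.append("create")

    def destroy(self):
        # In C++ this is where the native object would be freed.
        self.log.append("destroy")

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.destroy()
        return False  # do not swallow exceptions raised inside the block


log = []
with ExecutionContextStub(log) as ctx:
    log.append("infer")
print(log)
```

Because cleanup lives in `__exit__`, an exception during inference cannot leak the context, which is the main reason to prefer the with form over manual destroy() calls.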

common.allocate_buffers()

```python
inputs, outputs, bindings, stream = common.allocate_buffers(engine)
```

allocate_buffers allocates host and device memory for the inputs and outputs of the engine that was just created.

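```python
import pycuda.autoinit  # creates a CUDA context on import
import pycuda.driver as cuda
import tensorrt as trt

# HostDeviceMem is defined in common.py (shown further below).


def allocate_buffers(engine):
    inputs = []
    outputs = []
    bindings = []
    stream = cuda.Stream()
    for binding in engine:
        size = trt.volume(engine.get_binding_shape(binding)) * engine.max_batch_size
        dtype = trt.nptype(engine.get_binding_dtype(binding))
        # Page-locked host buffer and a device buffer of the same byte size.
        host_mem = cuda.pagelocked_empty(size, dtype)
        device_mem = cuda.mem_alloc(host_mem.nbytes)
        # Record the device address for this binding.
        bindings.append(int(device_mem))
        # Append to the appropriate list.
        if engine.binding_is_input(binding):
            inputs.append(HostDeviceMem(host_mem, device_mem))
        else:
            outputs.append(HostDeviceMem(host_mem, device_mem))
    return inputs, outputs, bindings, stream
```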

Outside the for loop

Outside the loop, the function creates a CUDA stream; everything else is about analyzing the inputs/outputs and allocating memory for them.

Inside the for loop

Iterating over engine yields the binding names. For this engine the four iterations yield the unicode strings u'000_net', u'082_convolutional', u'094_convolutional' and u'106_convolutional', which correspond to exactly one input and three outputs.

1. size = trt.volume(engine.get_binding_shape(binding)) * engine.max_batch_size

trt.volume computes the volume of an iterable, i.e. the product of its elements:

```python
def volume(iterable):
    vol = 1
    for elem in iterable:
        vol *= elem
    return vol if iterable else 0
```

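The debugger shows that engine.get_binding_shape(binding) is a Dims of (608, 608, 3) here. So trt.volume effectively flattens the image and counts its elements, and multiplying by engine.max_batch_size gives the total element count of the whole N×C×H×W batch. (Precision has not been taken into account yet, so this is a number of elements, not of bytes.)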
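Applying the same volume rule to the numbers above gives the element count of the input binding. This sketch uses a plain tuple in place of a TensorRT Dims object, which is an assumption made so that it runs without TensorRT.

```python
def volume(iterable):
    """Product of all dimensions, or 0 for an empty shape (same rule as trt.volume)."""
    vol = 1
    for elem in iterable:
        vol *= elem
    return vol if iterable else 0


binding_shape = (608, 608, 3)  # shape the debugger reported for 000_net
max_batch_size = 1             # builder.max_batch_size set in get_engine()
size = volume(binding_shape) * max_batch_size
print(size)  # 1108992 elements, not bytes yet
```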

2. dtype = trt.nptype(engine.get_binding_dtype(binding))

trt.nptype maps a TensorRT data type to the corresponding numpy type:

```python
# Excerpt from the tensorrt package: float32, float16, int8 and int32 are its DataType values.
def nptype(trt_type):
    import numpy as np
    if trt_type == float32:
        return np.float32
    elif trt_type == float16:
        return np.float16
    elif trt_type == int8:
        return np.int8
    elif trt_type == int32:
        return np.int32
    raise TypeError("Could not resolve TensorRT datatype to an equivalent numpy datatype.")
```

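It returns the element type because different precisions take different amounts of memory, so the type is needed to turn the element count into a byte count.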
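To see how much the element type matters, the sketch below looks up the byte size of each of the four types nptype() handles and multiplies it by the element count of the yolov3 input. Using the struct module instead of numpy is an assumption made so that it runs with the standard library only; the helper `nbytes` is hypothetical.

```python
import struct

# Bytes per element for the four types trt.nptype() handles.
# struct codes with '<' (standard sizes): f=float32, e=float16, b=int8, i=int32.
_STRUCT_CODES = {"float32": "f", "float16": "e", "int8": "b", "int32": "i"}
ITEMSIZE = {name: struct.calcsize("<" + code) for name, code in _STRUCT_CODES.items()}


def nbytes(num_elements, dtype_name):
    """Bytes needed to store num_elements values of the given type."""
    return num_elements * ITEMSIZE[dtype_name]


n = 608 * 608 * 3  # element count of the yolov3 input binding, batch size 1
for name in ("float32", "float16", "int8"):
    print(name, nbytes(n, name))
```

The same input needs half the memory in float16 and a quarter in int8, which is why the buffer size cannot be computed from the shape alone.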

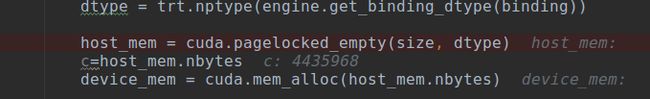

3. host_mem = cuda.pagelocked_empty(size, dtype)

Creates a page-locked (pinned) host buffer holding size elements of type dtype, i.e. size × (bytes per dtype element) bytes.

4. device_mem = cuda.mem_alloc(host_mem.nbytes)

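Allocates device memory of the same size; host_mem.nbytes is the resulting integer byte count. For example, in my TensorRT yolov3 run the batch size is 1 and the input has 1×608×608×3 = 1108992 elements; each float32 element takes 4 bytes, so the input buffer occupies 1108992 × 4 = 4435968 bytes.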

That is exactly the value the debugger showed.

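Then bindings.append(int(device_mem)) records the device address of each input/output buffer, as an integer, in the bindings list (in binding order); the list holds addresses, not buffer sizes.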

5. Sort the buffer pair into inputs or outputs:

```python
if engine.binding_is_input(binding):
    inputs.append(HostDeviceMem(host_mem, device_mem))
else:
    outputs.append(HostDeviceMem(host_mem, device_mem))
```

If the binding is an input, a corresponding HostDeviceMem object is appended to the inputs list; if it is an output, one is appended to the outputs list. HostDeviceMem looks like this:

```python
class HostDeviceMem(object):
    def __init__(self, host_mem, device_mem):
        self.host = host_mem
        self.device = device_mem

    def __str__(self):
        return "Host:\n" + str(self.host) + "\nDevice:\n" + str(self.device)

    def __repr__(self):
        return self.__str__()
```

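In summary, allocate_buffers allocates memory for the inputs and outputs. Each input/output gets a page-locked host buffer host_mem (a numpy ndarray) and a device buffer device_mem (a pycuda device allocation, not an ndarray), wrapped together in a HostDeviceMem object. The host buffers hold the input data and, after inference, the results.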
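The sketch below mirrors allocate_buffers without a GPU, to show how inputs, outputs and bindings fit together. Assumptions: bytearray stands in for cuda.pagelocked_empty, the hypothetical FakeDeviceAllocation (whose int() returns a made-up address) stands in for what cuda.mem_alloc returns, each binding is described by a hypothetical (name, shape, itemsize, is_input) tuple, and the three output shapes (255 channels at 19×19, 38×38 and 76×76) are my assumption for the 608×608 yolov3 model, not values from the debugger.

```python
class HostDeviceMem(object):
    """Pairs a host buffer with a device buffer, as in common.py."""

    def __init__(self, host_mem, device_mem):
        self.host = host_mem
        self.device = device_mem


class FakeDeviceAllocation(object):
    """Hypothetical stand-in for a device allocation; int() gives a fake address."""
    _next_addr = 0x1000

    def __init__(self, nbytes):
        self.nbytes = nbytes
        self.addr = FakeDeviceAllocation._next_addr
        FakeDeviceAllocation._next_addr += nbytes

    def __int__(self):
        return self.addr


def volume(shape):
    vol = 1
    for d in shape:
        vol *= d
    return vol if shape else 0


def allocate_buffers_sketch(binding_specs, max_batch_size):
    inputs, outputs, bindings = [], [], []
    for name, shape, itemsize, is_input in binding_specs:
        size = volume(shape) * max_batch_size
        host_mem = bytearray(size * itemsize)              # ~ cuda.pagelocked_empty
        device_mem = FakeDeviceAllocation(len(host_mem))   # ~ cuda.mem_alloc
        bindings.append(int(device_mem))                   # an address, not a size
        (inputs if is_input else outputs).append(HostDeviceMem(host_mem, device_mem))
    return inputs, outputs, bindings


SPECS = [
    ("000_net", (608, 608, 3), 4, True),
    ("082_convolutional", (255, 19, 19), 4, False),
    ("094_convolutional", (255, 38, 38), 4, False),
    ("106_convolutional", (255, 76, 76), 4, False),
]

inputs, outputs, bindings = allocate_buffers_sketch(SPECS, max_batch_size=1)
print([len(m.host) for m in inputs + outputs])
```

bindings ends up as one integer per binding, in the same order the engine iterates them, which is the form execute_async expects.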

common.do_inference()

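Apart from setting the host field of inputs[0] (the input host_mem obtained above), i.e. assigning the input image:

```python
inputs[0].host = image
```

all that remains is the call to do_inference:

```python
trt_outputs = common.do_inference(context, bindings=bindings, inputs=inputs, outputs=outputs, stream=stream)
```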

do_inference consists of just five statements:

```python
import pycuda.driver as cuda


def do_inference(context, bindings, inputs, outputs, stream, batch_size=1):
    # Transfer input data to the GPU.
    for inp in inputs:
        cuda.memcpy_htod_async(inp.device, inp.host, stream)
    # Run inference.
    context.execute_async(batch_size=batch_size, bindings=bindings, stream_handle=stream.handle)
    # Transfer predictions back from the GPU.
    for out in outputs:
        cuda.memcpy_dtoh_async(out.host, out.device, stream)
    # Synchronize the stream
    stream.synchronize()
    # Return only the host outputs.
    return [out.host for out in outputs]
```

Input parameters

context

context is produced by engine.create_execution_context(). create_execution_context is a closed-source (compiled) method of ICudaEngine (the IDE shows its stub in ICudaEngine.py); it creates an object of type IExecutionContext.

In Python this class is IExecutionContext; the stub the IDE generates for it looks like this:

```python
# Stub generated by the IDE for the compiled pybind11 class; not real source.
class IExecutionContext(__pybind11_builtins.pybind11_object):
    def execute(self, batch_size, bindings, p_int=None): # real signature unknown; restored from __doc__
        pass

    # As restored, the next signature is not valid Python (a non-default
    # parameter follows a default one), so it is shown commented out:
    # def execute_async(self, batch_size=1, bindings, p_int=None, *args, **kwargs): # real signature unknown; NOTE: unreliably restored from __doc__

    def __del__(self): # real signature unknown; restored from __doc__
        """ __del__(self: tensorrt.tensorrt.IExecutionContext) -> None """
        pass

    def __enter__(self, *args, **kwargs): # real signature unknown
        pass

    def __exit__(self, *args, **kwargs): # real signature unknown
        pass

    def __init__(self, *args, **kwargs): # real signature unknown
        pass

    debug_sync = property(lambda self: object(), lambda self, v: None, lambda self: None) # default
    device_memory = property(lambda self: object(), lambda self, v: None, lambda self: None) # default
    engine = property(lambda self: object(), lambda self, v: None, lambda self: None) # default
    name = property(lambda self: object(), lambda self, v: None, lambda self: None) # default
    profiler = property(lambda self: object(), lambda self, v: None, lambda self: None) # default
```

I also looked at the methods of the IExecutionContext class in the C++ header NvInfer.h:

```cpp
class IExecutionContext
{
public:
    // Synchronously execute inference on a batch
    virtual bool execute(int batchSize, void** bindings) = 0;

    // Asynchronously execute inference on a batch
    virtual bool enqueue(int batchSize, void** bindings, cudaStream_t stream, cudaEvent_t* inputConsumed) = 0;

    // Set the debug sync flag
    virtual void setDebugSync(bool sync) = 0;

    // Get the debug sync flag
    virtual bool getDebugSync() = 0;

    // Set the profiler
    virtual void setProfiler(IProfiler*) = 0;

    // Get the profiler
    virtual IProfiler* getProfiler() const = 0;

    // Get the associated engine
    virtual const ICudaEngine& getEngine() const = 0;

    // Destroy this object
    virtual void destroy() = 0;

protected:
    virtual ~IExecutionContext() {}

public:
    // Set the name of the execution context
    virtual void setName(const char* name) = 0;

    // Get the name of the execution context
    virtual const char* getName() const = 0;

    // Set the device memory used by the execution context
    virtual void setDeviceMemory(void* memory) = 0;
};
```

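So the two are broadly the same: context is the object that executes inference. In C++, inference is run with execute (synchronous) or enqueue (asynchronous); in Python, with execute and execute_async.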

bindings

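bindings holds, for each input/output, the device memory address as an integer (int(device_mem)), in binding order, as described above. TensorRT reads the inputs from and writes the outputs to these addresses.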

inputs,outputs

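inputs and outputs are lists of HostDeviceMem objects. For example, inputs[0].host was set to the preprocessed image in the previous step, while outputs holds three HostDeviceMem objects sized for the three outputs. Before inference runs, their host buffers hold no results yet (they showed zeros in my debugger, but pagelocked_empty does not guarantee initialized memory).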

stream

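stream is the stream created by cuda.Stream() in allocate_buffers (the IDE shows it in Stream.py). It does not come from TensorRT but from pycuda, and it is an essential part of working with CUDA: operations queued on a stream run in order, asynchronously with respect to the host.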

Operations performed


cuda.memcpy_htod_async()

The Python binding comes without a precise docstring, but the NVIDIA CUDA Library Documentation has a similar function: cuMemcpyHtoD.

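It copies data from host memory to device memory. dstDevice and srcHost are the base addresses of the destination and the source, and ByteCount is the number of bytes to copy.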

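So cuda.memcpy_htod_async copies the input data from host memory to device memory (i.e. hands it to the GPU). It is called with inp.device (the device allocation) and inp.host (the host array) taken from each HostDeviceMem, not with the HostDeviceMem object itself. Its asynchronous driver API counterpart is cuMemcpyHtoDAsync, which takes an extra stream argument; for such an async copy the host buffer should be page-locked, which is why allocate_buffers uses pagelocked_empty.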

context.execute_async

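As mentioned in the context section, this is the step that runs inference on the GPU. It is asynchronous: it enqueues the work on the stream passed as stream_handle=stream.handle and returns without waiting for it to finish.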

cuda.memcpy_dtoh_async

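Similarly to memcpy_htod_async above, the analogous driver API function is cuMemcpyDtoH: it copies the computed results from device (GPU) memory back to host memory, here asynchronously on the same stream.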

stream.synchronize()

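This is a pycuda method (the IDE located it in Context.py) and it is about synchronization: it blocks the host until every operation queued on the stream (the two copies and the inference) has finished. Because those three steps are all asynchronous, this call is what guarantees that out.host actually contains the results before they are returned.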

Finally, the function takes the host data from each HostDeviceMem in outputs and returns them as a list.
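Every call before stream.synchronize() only queues work. The sketch below models that ordering in pure Python: the hypothetical SimulatedStream runs queued operations in FIFO order on a single worker thread from concurrent.futures, and a hypothetical fake_network that doubles its input stands in for the engine. It is an analogy for the ordering guarantees, not how pycuda or TensorRT are implemented.

```python
from concurrent.futures import ThreadPoolExecutor


class SimulatedStream(object):
    """Pure-Python model of a CUDA stream: queued work runs in FIFO order on
    one worker thread, and synchronize() blocks until all of it has finished."""

    def __init__(self):
        self._worker = ThreadPoolExecutor(max_workers=1)
        self._pending = []

    def enqueue(self, fn, *args):
        # Returns immediately, like the *_async calls in do_inference.
        self._pending.append(self._worker.submit(fn, *args))

    def synchronize(self):
        # Wait for every queued operation; re-raises the first error, if any.
        for fut in self._pending:
            fut.result()
        self._pending = []

    def close(self):
        self._worker.shutdown(wait=True)


def fake_memcpy(dst, src):
    """Stand-in for a memcpy: overwrite dst with the contents of src."""
    dst[:] = src


def fake_network(d_input, d_output):
    """Hypothetical 'engine' that doubles every input value."""
    d_output[:] = [2 * x for x in d_input]


def do_inference_sim(host_input, stream):
    n = len(host_input)
    d_input, d_output, host_output = [0] * n, [0] * n, [0] * n
    stream.enqueue(fake_memcpy, d_input, host_input)      # ~ cuda.memcpy_htod_async
    stream.enqueue(fake_network, d_input, d_output)       # ~ context.execute_async
    stream.enqueue(fake_memcpy, host_output, d_output)    # ~ cuda.memcpy_dtoh_async
    stream.synchronize()                                  # ~ stream.synchronize()
    return host_output
```

Usage: `do_inference_sim([1, 2, 3], SimulatedStream())` returns `[2, 4, 6]`. Removing the synchronize() call would let the function return host_output while the worker may still be filling it, which is exactly the race that stream.synchronize() prevents in the real do_inference.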

Of course, this is just one way to implement do_inference in Python; the C++ version is messier, and I still need to study it further.