Training YOLOv2 on the VOC Dataset

Author: 木凌

Date: November 2016

Blog: http://blog.csdn.net/u014540717

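A while ago I was reading the YOLO source code, and then found that YOLOv2 had been released. The official site has not published a training procedure yet, so I am writing up how to train YOLOv2 here.

It is really the same as the previous method; only the commands have changed. I won't cover how to generate the .txt files, since there are plenty of guides for that online.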

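1 Modify the ./cfg/voc.data file

classes= 20

// change this to the path of your training-data .txt file

train = /home/pjreddie/data/voc/train.txt

// change this to the path of your validation-data .txt file

valid = /home/pjreddie/data/voc/2007_test.txt

names = data/voc.names

// change this to your model backup directory

backup = /home/pjreddie/backup/

2 Start training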
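Before launching a long run, it can be worth checking that the paths in ./cfg/voc.data actually exist. The following is only a minimal sketch: `parse_data_cfg` and `missing_paths` are hypothetical helper names of my own, not part of darknet, and the parsing rules (plain `key = value` lines, with `#`, `;` and `//` lines skipped) are an assumption chosen to cope with the annotated listing in this post.

```python
import os


def parse_data_cfg(text):
    """Parse 'key = value' lines into a dict, skipping blank and comment lines.

    Assumption: lines starting with '#', ';' or '//' are annotations.
    """
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";", "//")):
            continue
        if "=" not in line:
            continue  # ignore anything that is not a key/value pair
        key, value = line.split("=", 1)
        options[key.strip()] = value.strip()
    return options


def missing_paths(options, keys=("train", "valid", "names", "backup")):
    """Return the keys whose configured path does not exist on disk."""
    return [k for k in keys if k in options and not os.path.exists(options[k])]


if __name__ == "__main__":
    with open("./cfg/voc.data") as f:
        opts = parse_data_cfg(f.read())
    print("classes:", opts.get("classes"))
    print("missing paths:", missing_paths(opts))
```

Run it from the darknet directory so that relative paths such as data/voc.names resolve the same way they would for darknet itself.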

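Here I use the network with 22 convolutional layers, with batch=64 and subdivisions=8 (both set in ./cfg/yolo-voc.cfg). If you do not have enough memory and training fails, reduce the number of images processed per pass. As far as I can tell, darknet processes batch/subdivisions images at a time (8 here, which matches the 8 "Region Avg IOU" lines per 64-image iteration in the log), so either lower batch or raise subdivisions. Then start training with the command below.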
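To make the relationship between the two values concrete, here is a small sketch. It assumes that each iteration's batch is split evenly into `subdivisions` chunks that are processed one after another, which is my reading of darknet's behaviour and is consistent with the log in this post; `images_per_pass` is a hypothetical helper, not a darknet function.

```python
def images_per_pass(batch, subdivisions):
    """Images held in memory per forward/backward pass.

    Assumption: the batch is split evenly across subdivisions. This sketch
    insists on an exact split purely to keep the arithmetic unambiguous.
    """
    if batch % subdivisions != 0:
        raise ValueError("batch should be divisible by subdivisions")
    return batch // subdivisions


# Compare a few settings: the configuration used in this post first,
# then two ways of halving the per-pass memory footprint.
for batch, subdivisions in [(64, 8), (64, 16), (32, 8)]:
    print(f"batch={batch} subdivisions={subdivisions} "
          f"-> {images_per_pass(batch, subdivisions)} images per pass")
```

Under this assumption, raising subdivisions keeps the effective batch per weight update at 64 images while cutting memory use, whereas lowering batch changes both.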

./darknet detector train ./cfg/voc.data ./cfg/yolo-voc.cfg

The log looks like this:

nohup: ignoring input

layer filters size input output

0 conv 32 3 x 3 / 1 416 x 416 x 3 -> 416 x 416 x 32

1 max 2 x 2 / 2 416 x 416 x 32 -> 208 x 208 x 32

2 conv 64 3 x 3 / 1 208 x 208 x 32 -> 208 x 208 x 64

3 max 2 x 2 / 2 208 x 208 x 64 -> 104 x 104 x 64

4 conv 128 3 x 3 / 1 104 x 104 x 64 -> 104 x 104 x 128

5 conv 64 1 x 1 / 1 104 x 104 x 128 -> 104 x 104 x 64

6 conv 128 3 x 3 / 1 104 x 104 x 64 -> 104 x 104 x 128

7 max 2 x 2 / 2 104 x 104 x 128 -> 52 x 52 x 128

8 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256

9 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128

10 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256

11 max 2 x 2 / 2 52 x 52 x 256 -> 26 x 26 x 256

12 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512

13 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256

14 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512

15 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256

16 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512

17 max 2 x 2 / 2 26 x 26 x 512 -> 13 x 13 x 512

18 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024

19 conv 512 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 512

20 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024

21 conv 512 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 512

22 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024

23 conv 1024 3 x 3 / 1 13 x 13 x1024 -> 13 x 13 x1024

24 conv 1024 3 x 3 / 1 13 x 13 x1024 -> 13 x 13 x1024

25 route 16

26 reorg / 2 26 x 26 x 512 -> 13 x 13 x2048

27 route 26 24

28 conv 1024 3 x 3 / 1 13 x 13 x3072 -> 13 x 13 x1024

29 conv 125 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 125

30 detection

yolo-voc

Learning Rate: 0.0001, Momentum: 0.9, Decay: 0.0005

Loaded: 1.850000 seconds

Region Avg IOU: 0.358097, Class: 0.065954, Obj: 0.474037, No Obj: 0.508373, Avg Recall: 0.200000, count: 15

Region Avg IOU: 0.360629, Class: 0.065775, Obj: 0.466963, No Obj: 0.508891, Avg Recall: 0.178571, count: 28

Region Avg IOU: 0.328480, Class: 0.048047, Obj: 0.471501, No Obj: 0.509045, Avg Recall: 0.217391, count: 23

Region Avg IOU: 0.387740, Class: 0.060535, Obj: 0.495033, No Obj: 0.509017, Avg Recall: 0.333333, count: 12

Region Avg IOU: 0.284584, Class: 0.046532, Obj: 0.471151, No Obj: 0.508602, Avg Recall: 0.090909, count: 22

Region Avg IOU: 0.335542, Class: 0.049446, Obj: 0.520727, No Obj: 0.508899, Avg Recall: 0.150000, count: 20

Region Avg IOU: 0.366046, Class: 0.055863, Obj: 0.502995, No Obj: 0.509692, Avg Recall: 0.250000, count: 32

Region Avg IOU: 0.411998, Class: 0.050235, Obj: 0.497955, No Obj: 0.506873, Avg Recall: 0.375000, count: 16

1: 18.557627, 18.557627 avg, 0.000100 rate, 15.430000 seconds, 64 images

Loaded: 0.000000 seconds

Region Avg IOU: 0.394109, Class: 0.048967, Obj: 0.449230, No Obj: 0.454679, Avg Recall: 0.333333, count: 15

Region Avg IOU: 0.430337, Class: 0.044501, Obj: 0.475584, No Obj: 0.454697, Avg Recall: 0.250000, count: 16

Region Avg IOU: 0.318591, Class: 0.069988, Obj: 0.490419, No Obj: 0.454365, Avg Recall: 0.133333, count: 15

Region Avg IOU: 0.335521, Class: 0.060138, Obj: 0.408140, No Obj: 0.454221, Avg Recall: 0.277778, count: 18

Region Avg IOU: 0.360168, Class: 0.055241, Obj: 0.456031, No Obj: 0.455356, Avg Recall: 0.307692, count: 13

Region Avg IOU: 0.343406, Class: 0.056148, Obj: 0.439433, No Obj: 0.454594, Avg Recall: 0.187500, count: 16

Region Avg IOU: 0.349903, Class: 0.047826, Obj: 0.392414, No Obj: 0.454783, Avg Recall: 0.235294, count: 17

Region Avg IOU: 0.319748, Class: 0.059287, Obj: 0.456736, No Obj: 0.453497, Avg Recall: 0.217391, count: 23

2: 15.246732, 18.226538 avg, 0.000100 rate, 9.710000 seconds, 128 images

Loaded: 0.000000 seconds

3 Testing

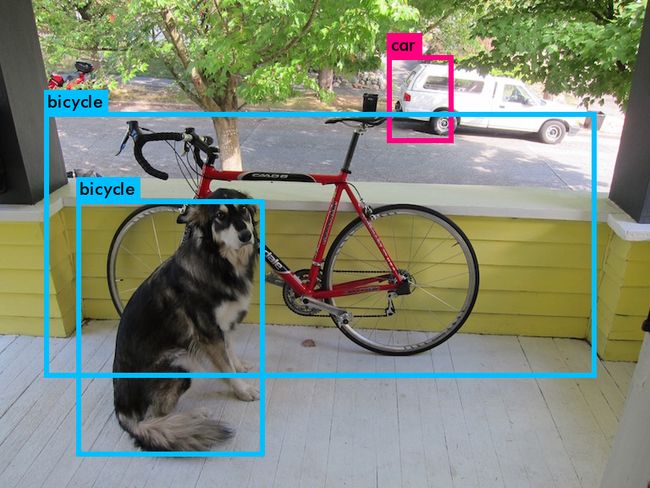

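I trained overnight and reached 6000 iterations, so let's test and see what the model looks like at that point.

./darknet detector test cfg/voc.data cfg/yolo-voc.cfg backup/yolo-voc_6000.weights data/dog.jpg

The model has not converged yet. I have uploaded the weights file to Baidu Netdisk (百度网盘) for anyone who needs it: yolo-voc_6000.weights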
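One way to judge whether training has converged is to follow the loss values in the training log. The sketch below pulls the per-iteration summary lines (the ones of the form `1: 18.557627, 18.557627 avg, ...`) out of saved log text. `parse_iterations` is a hypothetical helper of my own, and the regular expression assumes the line format shown in this post; it will not match values that are not plain decimal numbers.

```python
import re

# Matches per-iteration summary lines such as:
# "1: 18.557627, 18.557627 avg, 0.000100 rate, 15.430000 seconds, 64 images"
ITER_RE = re.compile(
    r"(\d+):\s*([\d.]+),\s*([\d.]+) avg,\s*([\d.]+) rate,"
    r"\s*([\d.]+) seconds,\s*(\d+) images"
)


def parse_iterations(log_text):
    """Return one dict per iteration summary line found in the log text."""
    rows = []
    for m in ITER_RE.finditer(log_text):
        rows.append({
            "iteration": int(m.group(1)),
            "loss": float(m.group(2)),
            "avg_loss": float(m.group(3)),
            "rate": float(m.group(4)),
            "seconds": float(m.group(5)),
            "images": int(m.group(6)),
        })
    return rows


if __name__ == "__main__":
    # The two summary lines from the log in this post.
    sample = (
        "1: 18.557627, 18.557627 avg, 0.000100 rate, 15.430000 seconds, 64 images\n"
        "2: 15.246732, 18.226538 avg, 0.000100 rate, 9.710000 seconds, 128 images\n"
    )
    for row in parse_iterations(sample):
        print(row["iteration"], row["loss"], row["avg_loss"])
```

In the two iterations shown in the log, the avg value is consistent with a running average that weights the previous average by 0.9 and the new loss by 0.1 (0.9 × 18.557627 + 0.1 × 15.246732 ≈ 18.226538), though I have only checked this against those two lines. Watching whether avg_loss is still falling is a rough indicator of whether more iterations are needed.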
