Video Encoding and Decoding Workflow

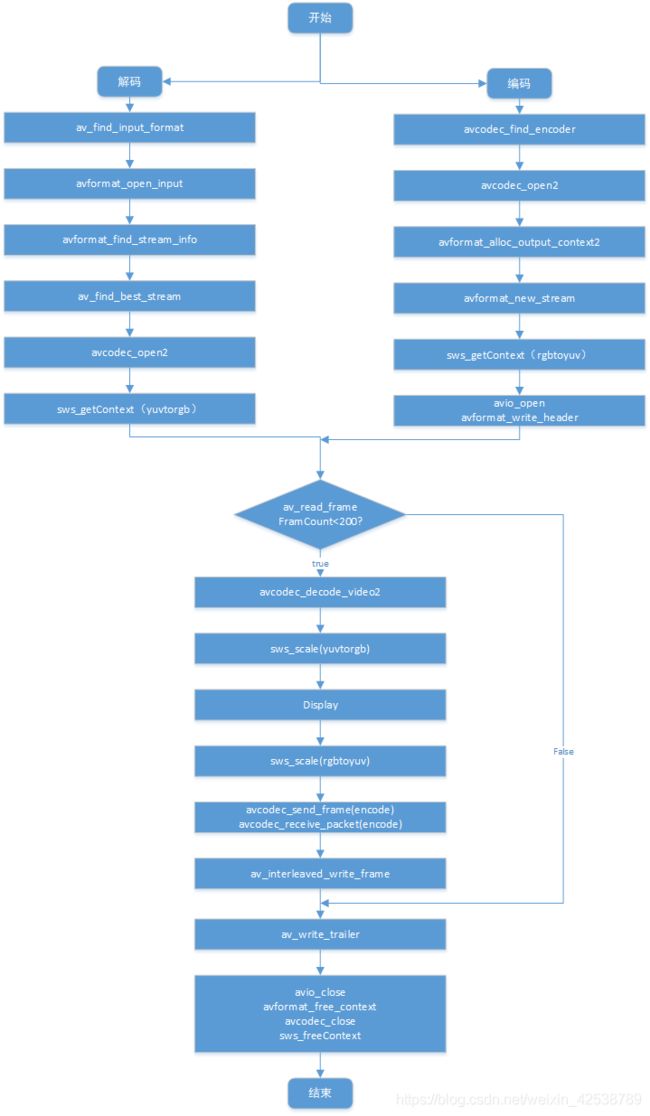

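This article walks through a video decode/encode pipeline. The program reads data from a camera (YUV, H264, or MJPEG format), decodes and displays it, then re-encodes it and saves it as an MP4 file. The steps covered are demuxing, decoding, pixel-format conversion, display, encoding, and muxing. The complete source code is included at the end. The development environment is Qt Creator 5.7.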

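Workflow

1 Read the camera video stream

2 Demux

3 Initialize the decoder

4 Initialize pixel-format conversion (YuvtoRgb)

5 Initialize pixel-format conversion (RgbtoYuv)

6 Initialize the encoder

7 Initialize the muxer

8 Decode -> YuvtoRgb -> display -> RgbtoYuv -> encode -> mux

9 Free resources

Program flowchart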

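1 Reading the camera video stream

// Allocate the demuxing (input format) context
ifmt_ctx = avformat_alloc_context();
// Look up the input format for the V4L2 capture device
AVInputFormat *ifmt = av_find_input_format("video4linux2");
// Open the input video stream
avformat_open_input(&ifmt_ctx, inputFilename, ifmt, &options);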

2 Demuxing

avformat_find_stream_info(ifmt_ctx, NULL);
// Find the index of the video stream in the input context (this also returns its decoder)
videoStreamIndex = av_find_best_stream(ifmt_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, &deCodec, 0);
// Get the input video stream
in_stream = ifmt_ctx->streams[videoStreamIndex];

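3 Initializing the decoder

// Get the decoder context of the video stream
// (AVStream::codec is deprecated in newer FFmpeg releases)
deCodecCtx = in_stream->codec;
// Open the decoder
avcodec_open2(deCodecCtx, deCodec, NULL);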

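4 Initializing pixel-format conversion (YuvtoRgb)

// Define the source and destination pixel formats
AVPixelFormat srcFormat = AV_PIX_FMT_YUV420P;
AVPixelFormat dstFormat = AV_PIX_FMT_RGB32;
// Conversion context (YuvtoRgb); the size stays the same, only the pixel format changes
swsContextYuvtoRgb = sws_getContext(videoWidth, videoHeight, srcFormat, videoWidth, videoHeight, dstFormat, flags, NULL, NULL, NULL);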
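To make the YuvtoRgb step less of a black box, here is a minimal per-pixel sketch of what such a conversion computes. It assumes BT.601 limited-range YUV and uses a common fixed-point approximation; the names `Rgb`, `clampToByte`, and `yuvToRgb` are illustrative only, and the coefficients libswscale actually applies depend on its colorspace settings.

```cpp
#include <algorithm>
#include <cstdint>

// One RGB pixel with 8 bits per channel (illustrative type, not an FFmpeg type).
struct Rgb {
    std::uint8_t r, g, b;
};

// Clamp an intermediate result to the 0..255 range of an 8-bit channel.
static std::uint8_t clampToByte(int value) {
    return static_cast<std::uint8_t>(std::min(255, std::max(0, value)));
}

// Convert one YUV sample to RGB.
// Assumption: BT.601, limited range (Y in 16..235, U and V in 16..240),
// with coefficients scaled by 256 so that only integer math is needed.
Rgb yuvToRgb(std::uint8_t y, std::uint8_t u, std::uint8_t v) {
    const int c = static_cast<int>(y) - 16;   // luma with the black offset removed
    const int d = static_cast<int>(u) - 128;  // blue-difference chroma, centered on 0
    const int e = static_cast<int>(v) - 128;  // red-difference chroma, centered on 0

    Rgb out;
    out.r = clampToByte((298 * c + 409 * e + 128) / 256);
    out.g = clampToByte((298 * c - 100 * d - 208 * e + 128) / 256);
    out.b = clampToByte((298 * c + 516 * d + 128) / 256);
    return out;
}
```

In the real pipeline `sws_scale` performs this work for whole frames, including the chroma upsampling that YUV420P requires, so a hand-written loop like this is useful mainly for understanding or for debugging a single pixel.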

5 Initializing pixel-format conversion (RgbtoYuv)

// Define the source and destination pixel formats
AVPixelFormat srcFormat = AV_PIX_FMT_RGB32;
AVPixelFormat dstFormat = AV_PIX_FMT_YUV420P;
// Conversion context (RgbtoYuv)
swsContextRgbtoYuv = sws_getContext(videoWidth, videoHeight, srcFormat,
                                    videoWidth, videoHeight, dstFormat,
                                    flags, NULL, NULL, NULL);
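Both conversion contexts read and write frame buffers whose size depends on the pixel format. The sketch below shows the arithmetic for the two formats used here, assuming tightly packed rows with no padding (alignment 1). The function names are illustrative; frames allocated by FFmpeg with a larger alignment can have padded rows and therefore larger buffers.

```cpp
#include <cstddef>

// Bytes needed for a tightly packed RGB32 image: 4 bytes per pixel.
std::size_t rgb32BufferSize(int width, int height) {
    return static_cast<std::size_t>(width) * static_cast<std::size_t>(height) * 4;
}

// Bytes needed for a tightly packed YUV420P image: one full-resolution Y plane
// plus two chroma planes subsampled by 2 in each direction (rounded up so that
// odd dimensions are still covered).
std::size_t yuv420pBufferSize(int width, int height) {
    const std::size_t luma = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    const std::size_t chromaWidth = static_cast<std::size_t>(width + 1) / 2;
    const std::size_t chromaHeight = static_cast<std::size_t>(height + 1) / 2;
    return luma + 2 * chromaWidth * chromaHeight;
}
```

For a 1920x1080 frame this gives 8294400 bytes for RGB32 and 3110400 bytes for YUV420P, which is why the encoder is fed YUV rather than RGB.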

6 Initializing the encoder

// Find the encoder
AVCodec *encodec = avcodec_find_encoder(AV_CODEC_ID_H264);
// Allocate the encoder context
enCodecCtx = avcodec_alloc_context3(encodec);
// Open the encoder
avcodec_open2(enCodecCtx, encodec, &param);

7 Initializing the muxer

// Create the output format context (the container is guessed from the file name)
avformat_alloc_output_context2(&ofmt_ctx, 0, 0, outputFilename);
// Add a video stream to the output format context
out_stream = avformat_new_stream(ofmt_ctx, NULL);
// Set the muxer parameters
out_stream->id = 0;
out_stream->codecpar->codec_tag = 0;
avcodec_parameters_from_context(out_stream->codecpar, enCodecCtx);

8 Decode -> YuvtoRgb -> display -> RgbtoYuv -> encode -> mux

while (true)
{
    if (av_read_frame(ifmt_ctx, avDePacket) >= 0) {
        // Check whether this packet belongs to the video stream
        int index = avDePacket->stream_index;
        in_stream = ifmt_ctx->streams[index];
        if (index == videoStreamIndex) {
            avcodec_decode_video2(deCodecCtx, avDeFrameYuv, &frameFinish, avDePacket);
            if (frameFinish)
            {
                // Pixel-format conversion YuvtoRgb
                sws_scale(swsContextYuvtoRgb, (const uint8_t *const *)avDeFrameYuv->data, avDeFrameYuv->linesize,
                          0, videoHeight, avDeFrameRgb->data, avDeFrameRgb->linesize);
                // Display
                QImage image((uchar *)buffer, videoWidth, videoHeight, QImage::Format_RGB32);
                // Pixel-format conversion RgbtoYuv
                int h = sws_scale(swsContextRgbtoYuv, (const uint8_t *const *)inputdata, inputlinesize, 0, videoHeight,
                                  avEnFrameYuv->data, avEnFrameYuv->linesize);
                // Send the frame to the encoder queue
                int ret = avcodec_send_frame(enCodecCtx, avEnFrameYuv);
                ret = avcodec_receive_packet(enCodecCtx, avEnPacket);
                av_interleaved_write_frame(ofmt_ctx, avEnPacket);
            }
        }
        // Release the packet data; the AVPacket object itself is reused by the next read
        av_packet_unref(avDePacket);
    }
}

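9 Freeing resources

// Write the trailer (finalizes the MP4 index)
av_write_trailer(ofmt_ctx);
// Close the output I/O
avio_close(ofmt_ctx->pb);
// Free the output format context
avformat_free_context(ofmt_ctx);
// Close the decoder first: deCodecCtx is in_stream->codec, which is owned by the
// input stream and is released together with the input context
avcodec_close(deCodecCtx);
avformat_close_input(&ifmt_ctx);
// Free the encoder context (this also closes the encoder)
avcodec_free_context(&enCodecCtx);
// Free the pixel-format conversion contexts
sws_freeContext(swsContextRgbtoYuv);
sws_freeContext(swsContextYuvtoRgb);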
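Manual cleanup sequences like this one are easy to get wrong, for example by freeing an object twice or in the wrong order. One option is to wrap each C-style handle in a `std::unique_ptr` with a custom deleter. The sketch below demonstrates the pattern with a hypothetical stand-in type, `FakeContext`, and hypothetical `fakeContextAlloc`/`fakeContextFree` functions, so that it compiles without FFmpeg. Adapting it to real FFmpeg handles is left as an assumption, because several FFmpeg free functions take a pointer to the pointer rather than the pointer itself.

```cpp
#include <memory>

// Hypothetical stand-in for a C-style context object with alloc/free functions.
struct FakeContext {
    int id;
};

// Counts how many contexts have been released, so the effect can be observed.
static int g_freedCount = 0;

FakeContext *fakeContextAlloc(int id) {
    return new FakeContext{id};
}

void fakeContextFree(FakeContext *ctx) {
    delete ctx;
    ++g_freedCount;
}

// Deleter that forwards to the C-style free function.
struct FakeContextDeleter {
    void operator()(FakeContext *ctx) const { fakeContextFree(ctx); }
};

using FakeContextPtr = std::unique_ptr<FakeContext, FakeContextDeleter>;

// Both contexts are released automatically when the function returns,
// in reverse order of construction (second, then first), on every exit path.
int useContexts() {
    FakeContextPtr first(fakeContextAlloc(1));
    FakeContextPtr second(fakeContextAlloc(2));
    return first->id + second->id;
}
```

The main benefit is that early returns on error no longer need their own cleanup code, which matters in initialization functions with many failure points.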

Complete source code:

Codec thread header file

//#define FFMPEG_MJPEG

//#define FFMPEG_H264

#define FFMPEG_YUV

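Enable exactly one of these three macros to match the camera's output format. Not all of the FFmpeg headers included below are strictly required.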

#ifndef FFMPEG_H
#define FFMPEG_H

//#define FFMPEG_MJPEG
//#define FFMPEG_H264
#define FFMPEG_YUV

#include <QThread>
#include <QImage>
#include <QWidget>
#include <QDebug>
#include <QTime>
#include <QString>

// FFmpeg headers
extern "C" {
#include "libavutil/opt.h"
#include "libavutil/time.h"
#include "libavutil/frame.h"
#include "libavutil/pixdesc.h"
#include "libavutil/avassert.h"
#include "libavutil/imgutils.h"
#include "libavutil/ffversion.h"
#include "libavcodec/avcodec.h"
#include "libswscale/swscale.h"
#include "libavdevice/avdevice.h"
#include "libavformat/avformat.h"
#include "libavfilter/avfilter.h"
#ifndef gcc45
#include "libavutil/hwcontext.h"
#endif
}

namespace Ui {
class ffmpeg;
}

class ffmpeg : public QThread
{
    Q_OBJECT

public:
    explicit ffmpeg(QWidget *parent = nullptr);
    ~ffmpeg();

protected:
    void run();

signals:
    // Emitted when a decoded image is ready
    void receiveImage(const QImage &image);

private:
    int lastMsec;
    int videoStreamIndex;           // Video stream index
    int videoWidth;                 // Video width
    int videoHeight;                // Video height
    int videoFps;                   // Video frame rate
    int frameFinish;                // Set when a complete frame has been decoded
    uint64_t framCount;             // Frame counter (used as pts)
    uint8_t *buffer;                // Buffer holding the decoded RGB image
    AVOutputFormat *ofmt = NULL;    // Output format
    AVPacket *avDePacket;           // Packet read for decoding
    AVPacket *avEnPacket;           // Packet produced by encoding
    AVFrame *avDeFrameYuv;          // Decoded frame (YUV)
    AVFrame *avDeFrameRgb;          // Decoded frame (RGB)
    AVFrame *avEnFrameYuv;          // Frame to encode (YUV)
    AVFrame *avEnFrameRgb;          // Frame to encode (RGB)
    AVFormatContext *ifmt_ctx;      // Input format context
    AVFormatContext *ofmt_ctx;      // Output format context
    AVStream *in_stream;            // Input video stream
    AVStream *out_stream;           // Output video stream
    AVCodecContext *deCodecCtx;     // Decoder context
    AVCodecContext *enCodecCtx;     // Encoder context
    SwsContext *swsContextYuvtoRgb; // Pixel-format conversion context (YuvtoRgb)
    SwsContext *swsContextRgbtoYuv; // Pixel-format conversion context (RgbtoYuv)
    int oldWidth;                   // Previous video width
    int oldHeight;                  // Previous video height
    const char *outputFilename = "ffmpegVideo.mp4";
    const char *inputFilename = "/dev/video0";

private:
    Ui::ffmpeg *ui;
    int initDecodeVideo();
    int initEncodeVideo();
    int playVideo();
};

#endif // FFMPEG_H
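With `FFMPEG_YUV` defined, the source file treats the camera frames as `AV_PIX_FMT_YUYV422`. As a rough illustration of that packed layout, the sketch below unpacks YUYV data into per-pixel samples: every 4 bytes hold two pixels that each have their own luma but share one pair of chroma values. The names `YuvPixel` and `unpackYuyv422` are illustrative; in the actual program `sws_scale` handles this layout internally.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// One pixel with its own luma and the chroma values it shares with its neighbor.
struct YuvPixel {
    std::uint8_t y, u, v;
};

// Unpack packed YUYV 4:2:2 bytes, laid out as Y0 U Y1 V per pixel pair.
// Trailing bytes that do not form a complete 4-byte group are ignored.
std::vector<YuvPixel> unpackYuyv422(const std::vector<std::uint8_t> &packed) {
    std::vector<YuvPixel> pixels;
    pixels.reserve(packed.size() / 2);
    for (std::size_t i = 0; i + 3 < packed.size(); i += 4) {
        const std::uint8_t y0 = packed[i];
        const std::uint8_t u = packed[i + 1];
        const std::uint8_t y1 = packed[i + 2];
        const std::uint8_t v = packed[i + 3];
        pixels.push_back(YuvPixel{y0, u, v});  // left pixel of the pair
        pixels.push_back(YuvPixel{y1, u, v});  // right pixel, same chroma
    }
    return pixels;
}
```

This layout averages 2 bytes per pixel, compared with 1.5 bytes per pixel for planar YUV420P.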

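Codec thread source file

#include "ffmpeg.h"

#define TIMEMS qPrintable(QTime::currentTime().toString("HH:mm:ss zzz"))

ffmpeg::ffmpeg(QWidget *parent) :
    QThread(parent)
{
    framCount = 0;
    frameFinish = 0;
    // These members are read before they are first assigned, so give them defined values
    videoFps = 0;
    oldWidth = 0;
    oldHeight = 0;
    buffer = nullptr;
    initDecodeVideo();
    initEncodeVideo();
}

ffmpeg::~ffmpeg()
{
}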

/* Purpose: initialize the demuxing context, the decoder context,
 *          and the pixel-format conversion context (YUV to RGB)
 *   1 Demux
 *   2 Decode
 *   3 Pixel-format conversion
 * Parameters: none
 * Return value: 0 on success, -1 on failure
 */
int ffmpeg::initDecodeVideo()
{
    // Register all available file formats and codecs
    // (only needed on older FFmpeg releases)
    av_register_all();
    // Register all devices; required for capturing from a local camera
    avdevice_register_all();
    // Initialize network support; must run before any network stream is used
    avformat_network_init();
    // Register all codecs (only needed on older FFmpeg releases)
    avcodec_register_all();

    qDebug() << TIMEMS << "init ffmpeg lib ok" << " version:" << FFMPEG_VERSION;

    AVDictionary *options = NULL;
    AVCodec *deCodec = NULL; // Decoder
    av_dict_set_int(&options, "rtbufsize", 18432000, 0);
#ifdef FFMPEG_MJPEG
    av_dict_set(&options, "framerate", "25", 0);
    av_dict_set(&options, "video_size", "1920x1080", 0);
    //av_dict_set(&options, "video_size", "1280x720", 0);
    av_dict_set(&options, "input_format", "mjpeg", 0);
#endif

    // Allocate the demuxing context
    ifmt_ctx = avformat_alloc_context();
    // Look up the input format
    AVInputFormat *ifmt = av_find_input_format("video4linux2");
    if (nullptr != ifmt) {
        qDebug("input device name video4linux2!");
    } else {
        qDebug("Null point ");
    }

    // Open the input video stream for demuxing
    int result = avformat_open_input(&ifmt_ctx, inputFilename, ifmt, &options);
    // Free the option dictionary whether or not the open succeeded
    av_dict_free(&options);
    if (result < 0) {
        qDebug() << TIMEMS << "open input error" << inputFilename;
        return -1;
    }

    // Read the stream information
    result = avformat_find_stream_info(ifmt_ctx, NULL);
    if (result < 0) {
        qDebug() << TIMEMS << "find stream info error";
        return -1;
    }

    videoStreamIndex = av_find_best_stream(ifmt_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, &deCodec, 0);
    if (videoStreamIndex < 0) {
        qDebug() << TIMEMS << "find video stream index error";
        return -1;
    }

    // Get the input video stream from the input format context
    in_stream = ifmt_ctx->streams[videoStreamIndex];
    if (!in_stream)
    {
        printf("Failed get input stream\n");
        return -1;
    }

    // Get the decoder context of the video stream
    deCodecCtx = in_stream->codec;

    // Get the resolution
    videoWidth = in_stream->codec->width;
    videoHeight = in_stream->codec->height;

    // Give up if the width or height could not be determined
    if (videoWidth == 0 || videoHeight == 0) {
        qDebug() << TIMEMS << "find width height error";
        return -1;
    }

    // Get the frame rate. The reported values are sometimes 0,
    // so guard against dividing by zero.
    int num = in_stream->codec->framerate.num;
    int den = in_stream->codec->framerate.den;
    if (num != 0 && den != 0) {
        videoFps = num / den;
    }

    QString videoInfo = QString("Video stream info -> index: %1 format: %2 duration: %3 s fps: %4 resolution: %5*%6")
                        .arg(videoStreamIndex).arg(ifmt_ctx->iformat->name)
                        .arg((ifmt_ctx->duration) / 1000000).arg(videoFps).arg(videoWidth).arg(videoHeight);
    qDebug() << TIMEMS << videoInfo;

    // Open the video decoder
    result = avcodec_open2(deCodecCtx, deCodec, NULL);
    if (result < 0) {
        qDebug() << TIMEMS << "open video codec error";
        return -1;
    }

    avDePacket = av_packet_alloc();
    avDeFrameYuv = av_frame_alloc();
    avDeFrameRgb = av_frame_alloc();

    // Reallocate the image buffer when the resolution differs from the previous one
    if (oldWidth != videoWidth || oldHeight != videoHeight) {
        int byte = av_image_get_buffer_size(AV_PIX_FMT_RGB32, videoWidth, videoHeight, 1);
        buffer = (uint8_t *)av_malloc(byte * sizeof(uint8_t));
        oldWidth = videoWidth;
        oldHeight = videoHeight;
    }

    // Define the source and destination pixel formats
    AVPixelFormat srcFormat = AV_PIX_FMT_YUV420P;
    AVPixelFormat dstFormat = AV_PIX_FMT_RGB32;

    // Either of the following two calls works
    //avpicture_fill((AVPicture *)avDeFrameRgb, buffer, dstFormat, videoWidth, videoHeight);
    av_image_fill_arrays(avDeFrameRgb->data, avDeFrameRgb->linesize, buffer, dstFormat, videoWidth, videoHeight, 1);

    // SWS_FAST_BILINEAR is the fastest option but may lose some image detail;
    // switch to another flag if quality matters more than speed
    int flags = SWS_FAST_BILINEAR;
#ifdef FFMPEG_MJPEG
    srcFormat = AV_PIX_FMT_YUV420P;
#endif
#ifdef FFMPEG_YUV
    srcFormat = AV_PIX_FMT_YUYV422;
#endif
#ifdef FFMPEG_H264
    srcFormat = AV_PIX_FMT_YUV420P;
#endif

    swsContextYuvtoRgb = sws_getContext(videoWidth, videoHeight, srcFormat, videoWidth, videoHeight, dstFormat, flags, NULL, NULL, NULL);

    qDebug() << TIMEMS << "init ffmpegVideo ok";
    return 0;
}

/* Purpose: initialize the encoder, the pixel-format conversion context
 *          (RGB to YUV), and the muxer
 *   1 Pixel-format conversion
 *   2 Encode
 *   3 Mux
 * Parameters: none
 * Return value: 0 on success, -1 on failure
 */
int ffmpeg::initEncodeVideo()
{
    // Find the encoder
    AVCodec *encodec = avcodec_find_encoder(AV_CODEC_ID_H264);
    if (!encodec)
    {
        qDebug() << "Failed to find encoder.";
        return -1;
    }

    // Allocate the encoder context
    enCodecCtx = avcodec_alloc_context3(encodec);
    if (!enCodecCtx)
    {
        qDebug() << "Failed to alloc context3.";
        return -1;
    }

    videoFps = 10;

    // Set the encoder parameters
    enCodecCtx->bit_rate = 400000;
    enCodecCtx->width = videoWidth;
    enCodecCtx->height = videoHeight;
    enCodecCtx->time_base = {1, videoFps};
    enCodecCtx->framerate = {videoFps, 1};
    enCodecCtx->gop_size = 50;
    enCodecCtx->keyint_min = 20;
    enCodecCtx->max_b_frames = 0;
    enCodecCtx->pix_fmt = AV_PIX_FMT_YUV420P;
    enCodecCtx->codec_id = AV_CODEC_ID_H264;
    // AV_CODEC_FLAG2_LOCAL_HEADER is a flags2 bit: headers are placed at
    // every keyframe instead of in extradata
    enCodecCtx->flags2 |= AV_CODEC_FLAG2_LOCAL_HEADER;
    enCodecCtx->thread_count = 8;

    // Quantizer range: higher values give lower picture quality but faster encoding
    enCodecCtx->qmin = 20;
    enCodecCtx->qmax = 30;
    enCodecCtx->me_range = 16;
    enCodecCtx->max_qdiff = 4;
    enCodecCtx->qcompress = 0.6;
    enCodecCtx->b_frame_strategy = true;

    AVDictionary *param = 0;
    av_dict_set(&param, "preset", "superfast", 0);
    av_dict_set(&param, "tune", "zerolatency", 0);

    // Open the encoder
    int ret = avcodec_open2(enCodecCtx, encodec, &param);
    // Free whatever options the encoder did not consume
    av_dict_free(&param);
    if (ret < 0)
    {
        qDebug() << "Failed to open enCodecCtx.";
        return -1;
    }
    qDebug() << "Open enCodecCtx succeed.";

    // Create the output format context
    ret = avformat_alloc_output_context2(&ofmt_ctx, 0, 0, outputFilename);
    if (ret < 0 || !ofmt_ctx)
    {
        qDebug() << "Failed to alloc output context.";
        return -1;
    }

    // Add a video stream to the output format context
    out_stream = avformat_new_stream(ofmt_ctx, NULL);
    if (!out_stream)
    {
        qDebug() << "Failed to create output stream.";
        return -1;
    }

    // Set the muxer parameters
    out_stream->id = 0;
    out_stream->codecpar->codec_tag = 0;
    avcodec_parameters_from_context(out_stream->codecpar, enCodecCtx);

    qDebug() << "====================================================";
    av_dump_format(ofmt_ctx, 0, outputFilename, 1);
    qDebug() << "====================================================";

    // Define the source and destination pixel formats
    AVPixelFormat srcFormat = AV_PIX_FMT_RGB32;
    AVPixelFormat dstFormat = AV_PIX_FMT_YUV420P;

    // SWS_FAST_BILINEAR is the fastest option but may lose some image detail;
    // switch to another flag if quality matters more than speed
    int flags = SWS_FAST_BILINEAR;

    // Pixel-format conversion context (RgbtoYuv)
    swsContextRgbtoYuv = sws_getContext(videoWidth, videoHeight, srcFormat,
                                        videoWidth, videoHeight, dstFormat,
                                        flags, NULL, NULL, NULL);

    // Allocate the AVFrame and its pixel storage
    avEnFrameYuv = av_frame_alloc();
    avEnFrameYuv->format = dstFormat;
    avEnFrameYuv->width = videoWidth;
    avEnFrameYuv->height = videoHeight;
    ret = av_frame_get_buffer(avEnFrameYuv, 32);
    if (ret < 0)
    {
        qDebug() << "Failed to av_frame_get_buffer.";
        return -1;
    }

    // Open the output file and write the MP4 header
    ret = avio_open(&ofmt_ctx->pb, outputFilename, AVIO_FLAG_WRITE);
    if (ret < 0)
    {
        qDebug() << "Failed to avio_open.";
        return -1;
    }
    ret = avformat_write_header(ofmt_ctx, NULL);
    if (ret < 0)
    {
        qDebug() << "Failed to avformat_write_header.";
        return -1;
    }

    // av_packet_alloc() already returns an initialized packet
    avEnPacket = av_packet_alloc();

    qDebug() << TIMEMS;
    return 0;
}

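/* 1 Demux ---> 2 Decode ---> 3 Pixel-format conversion (YuvtoRgb)
   ---> 4 Pixel-format conversion (RgbtoYuv) ---> 5 Encode ---> 6 Mux */
int ffmpeg::playVideo()
{
    while (true)
    {
        if (av_read_frame(ifmt_ctx, avDePacket) >= 0) {
            // Check whether this packet belongs to the video stream
            int index = avDePacket->stream_index;
            in_stream = ifmt_ctx->streams[index];
            frameFinish = 0;
            if (index == videoStreamIndex) {
                avcodec_decode_video2(deCodecCtx, avDeFrameYuv, &frameFinish, avDePacket);
            }
            // The packet data is no longer needed once it has been handed to the decoder
            av_packet_unref(avDePacket);

            // Skip non-video packets and packets that did not yield a complete frame
            if (!frameFinish) {
                continue;
            }

            // Convert the decoded frame into an RGB image (YuvtoRgb)
            sws_scale(swsContextYuvtoRgb, (const uint8_t *const *)avDeFrameYuv->data, avDeFrameYuv->linesize,
                      0, videoHeight, avDeFrameRgb->data, avDeFrameRgb->linesize);

            // Either of the following two forms works
            //QImage image(avDeFrameRgb->data[0], videoWidth, videoHeight, QImage::Format_RGB32);
            QImage image((uchar *)buffer, videoWidth, videoHeight, QImage::Format_RGB32);
            if (!image.isNull()) {
                // Emit a deep copy: image only wraps buffer, which the next frame overwrites
                emit receiveImage(image.copy());
            }

            uint8_t *inputdata[AV_NUM_DATA_POINTERS] = { 0 };
            int inputlinesize[AV_NUM_DATA_POINTERS] = { 0 };
            inputdata[0] = buffer;
            inputlinesize[0] = videoWidth * 4;

            // Convert the RGB image back to YUV for the encoder (RgbtoYuv)
            sws_scale(swsContextRgbtoYuv, (const uint8_t *const *)inputdata, inputlinesize, 0, videoHeight,
                      avEnFrameYuv->data, avEnFrameYuv->linesize);

            // pts increment per frame, in 90 kHz ticks
            int base = 90000 / videoFps;
            avEnFrameYuv->pts = framCount;
            framCount = framCount + base;
            qDebug() << "framCount = " << framCount;
            if (framCount > 6000 * 200)
            {
                qDebug() << "Encoding finished";
                qDebug() << TIMEMS;
                break;
            }

            // Send the frame to the encoder queue
            int ret = avcodec_send_frame(enCodecCtx, avEnFrameYuv);
            if (ret != 0)
            {
                continue;
            }
            ret = avcodec_receive_packet(enCodecCtx, avEnPacket);
            if (ret != 0)
            {
                continue;
            }
            qDebug() << "avEnPacket = " << avEnPacket->size;
            av_interleaved_write_frame(ofmt_ctx, avEnPacket);
        }
    }

    // Write the trailer (finalizes the MP4 index)
    av_write_trailer(ofmt_ctx);
    // Close the output I/O
    avio_close(ofmt_ctx->pb);
    // Free the output format context
    avformat_free_context(ofmt_ctx);
    // Close the decoder first: deCodecCtx is in_stream->codec, which is owned by
    // the input stream and is released together with the input context
    avcodec_close(deCodecCtx);
    avformat_close_input(&ifmt_ctx);
    // Free the encoder context (this also closes the encoder)
    avcodec_free_context(&enCodecCtx);
    // Free the pixel-format conversion contexts
    sws_freeContext(swsContextRgbtoYuv);
    sws_freeContext(swsContextYuvtoRgb);
    // Free the frames, the packets, and the RGB buffer
    av_frame_free(&avDeFrameYuv);
    av_frame_free(&avDeFrameRgb);
    av_frame_free(&avEnFrameYuv);
    av_packet_free(&avDePacket);
    av_packet_free(&avEnPacket);
    av_freep(&buffer);

    qDebug() << TIMEMS << "stop ffmpeg thread";
    return 0;
}

void ffmpeg::run()
{
    playVideo();
}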
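The loop in `playVideo()` advances the frame pts by `90000/videoFps` ticks per frame, that is, it expresses timestamps in a 90 kHz time base. The sketch below shows the underlying arithmetic as a small rescaling helper. `TimeBase`, `rescaleTimestamp`, and `framePts90k` are illustrative names, and the rounding is only meant for non-negative timestamps; FFmpeg provides `av_rescale_q` for this kind of conversion in real code.

```cpp
#include <cstdint>

// A time base expressed as the fraction num/den seconds per tick.
struct TimeBase {
    std::int64_t num;
    std::int64_t den;
};

// Convert a non-negative timestamp from time base src to time base dst,
// rounding to the nearest tick.
std::int64_t rescaleTimestamp(std::int64_t ts, TimeBase src, TimeBase dst) {
    const std::int64_t numerator = ts * src.num * dst.den;
    const std::int64_t denominator = src.den * dst.num;
    return (numerator + denominator / 2) / denominator;
}

// Presentation timestamp of frame number frameIndex at a constant frame rate,
// expressed in 90 kHz ticks.
std::int64_t framePts90k(std::int64_t frameIndex, int fps) {
    return rescaleTimestamp(frameIndex, TimeBase{1, fps}, TimeBase{1, 90000});
}
```

Computing each pts from the frame index, rather than accumulating a truncated per-frame step, avoids slow drift when 90000 is not evenly divisible by the frame rate: at 7 fps, for instance, `90000/7` truncates to 12857, so seven accumulated steps give 89999 instead of 90000.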

Main program:

#include "mainwindow.h"
#include "ui_mainwindow.h"
#include "ffmpeg.h"

MainWindow::MainWindow(QWidget *parent) :
    QMainWindow(parent),
    ui(new Ui::MainWindow)
{
    ui->setupUi(this);
    ffmpeg *ffmpegThread = new ffmpeg(this);
    // Connect before starting the thread so that no frame is missed
    connect(ffmpegThread, SIGNAL(receiveImage(QImage)), this, SLOT(updateImage(QImage)));
    ffmpegThread->start();
}

MainWindow::~MainWindow()
{
    delete ui;
}

void MainWindow::updateImage(const QImage &image)
{
    ui->label->resize(image.width(), image.height());
    ui->label->setPixmap(QPixmap::fromImage(image));
}