Hadoop3.3.0 完全分布式(Fully-Distributed Operation)集群搭建需要注意的地方

卷首:业精于勤,行成于思,荒于戏,毁于随。

思来想去还是在这里记录一下吧,之前的所谓的顾虑是Hadoop版本在变化,没有一蹴而就的固定模式。但是在自己亲自过了一下官方的文档,阻断、前进、阻断、前进、再阻断、再前进,前进、进。最后,觉得还是有必要把过程大致记录或者说是还原一番,虽然这个事情比较浪费时间,但是如果可以帮助到一些人,也就值得了,不是吗?!

--“战略上藐视敌人,战术(行动)上重视敌人“。

在这篇博客中Hadoop使用3.3.0版本(下载地址:https://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-3.3.0/hadoop-3.3.0.tar.gz),所以在下面操作皆以此版本为前提。

三台RedhatLinux Centos6.9服务器,大致配置如下:

/etc/hosts文件

# 注释掉网络ip

#10.177.129.54 nj-gjh-54

#10.177.129.56 nj-gjh-56

#10.177.129.57 nj-gjh-57

# 使用本地网卡

172.16.0.110 nj-gjh-54

172.16.0.108 nj-gjh-56

172.16.0.114 nj-gjh-57啰嗦几句:这三台配置用于搭建Hadoop中的HDFS、YARN完全足够用了;之前使用过cloudera manager平台管理大数据集群环境,CM和另外一家Hortonworks专业平台算是业内的前二强,听着怪别扭啊?!什么时候国内能出个大强。(CM和HortonWorks在2018年10月3号合体了,据‘路透社’报道,Cloudera通过股票方式并购了HortonWorks)。

雷老板说过,“are u okay?”

雷老板还说过,“生死看淡,不行就干!”

雷老板还说过,”大数据环境要时钟同步。“

un:授时服务NTP --记录各种事件发生时序

--1主节点(nj-gjh-57),提供授时服务,修改/etc/ntp.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

# 注释掉其它server

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server 127.127.1.0

Fudge 127.127.1.0 stratum 10--2所有节点(54-56-57)

# Hosts on local network are less restricted.

#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

restrict 172.16.0.114 nomodify notrap nopeer noquery #172.16.0.114 -- 各自ip-addr

restrict 172.16.0.1 mask 255.255.255.0 nomodify notrap #172.16.0.1 -- 网关route 查看网关命令route -n 或 netstat -rn 或 ip route show --3其它从节点(54-56)

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

#注释掉网络上的server

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

#使用local网络server

server 172.16.0.114 #172.16.0.114 -- 主节点IP

Fudge 172.16.0.114 stratum 10 #172.16.0.114 -- 主节点IP--重启ntpd(54-56-57:~ service ntpd restart)

[root@nj-gjh-54 hadoop-3.3.0]# ntpq -p

remote refid st t when poll reach delay offset jitter

==============================================================================

*nj-gjh-57 LOCAL(0) 6 u 49 64 377 0.220 0.358 0.216

------------------------------------------------------------------------------

[root@nj-gjh-54 hadoop-3.3.0]# ntpstat

unsynchronised

polling server every 64 s

# ntp服务器配置完毕后,需要等待5-10分钟才能与/etc/ntp.conf中配置的标准时间进行同步

------------------------------------------------------------------------------

见证奇迹的时候到了~!

[root@nj-gjh-54 hadoop-3.3.0]# service ntpd restart

Shutting down ntpd: [ OK ]

Starting ntpd: [ OK ]

[root@nj-gjh-54 hadoop-3.3.0]# ntpstat

synchronised to NTP server (172.16.0.114) at stratum 7

time correct to within 7949 ms

polling server every 64 s

[root@nj-gjh-54 hadoop-3.3.0]# ntpq -p

remote refid st t when poll reach delay offset jitter

==============================================================================

*nj-gjh-57 LOCAL(0) 6 u 12 64 1 0.299 0.516 0.000

-------------------------------------------------------------------------------

[root@nj-gjh-56 hadoop-3.3.0]# ntpstat

synchronised to NTP server (172.16.0.114) at stratum 7

time correct to within 34 ms

polling server every 1024 s

-------------------------------------------------------------------------------

其它:

1》 watch "ntpq -p"

2》 remote:本机和上层ntp的ip或主机名,“+”表示优先,“*”表示次优先

refid:参考上一层ntp主机地址

st:stratum阶层

when:多少秒前曾经同步过时间

poll:下次更新在多少秒后

reach:已经向上层ntp服务器要求更新的次数

delay:网络延迟

offset:时间补偿

jitter:系统时间与bios时间差

》》》时钟同步设置完毕

》》》进阶:大数据集群环境下减少重复劳动工作的方式 -- Shell Script(封装rsync)

deux:免密登陆

--1免密登陆基础(非对称加密)

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa #RSA来自1977年由麻省理工学院罗纳德·李维斯特(Ron Rivest)、阿迪·萨莫尔(Adi Shamir)和伦纳德·阿德曼(Leonard Adleman)一起提出的,RSA就是他们三人姓氏开头字母拼在一起组成的,算法原理可以自行了解

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys #自己登陆自己进行免密(首次ssh localhost需要密码,之后便不再需要)

ssh-copy-id nj-gjh-5? #将公钥给其它服务器,其它服务器通过ssh方式访问--2握手(三台服务器全部相互设置免密+自己和自己免密)

次数:(n-1)+n

=>n² #平方使用alt(换挡键)+178》》》进阶:如果更好的办法,欢迎评论区留言

trois:确定每台服务器上的角色

--1解压 hadoop-3.3.0.tar.gz,修改配置 重点!

core-default.xml

hdfs-default.xml

hdfs-rbf-default.xml

mapred-default.xml

yarn-default.xml

----------------------

Deprecated Propertiesetc/hadoop/hadoop-env.sh

--------------------------------------------------------------------------------------

# The java implementation to use. By default, this environment

# variable is REQUIRED on ALL platforms except OS X!

export JAVA_HOME=/usr/java # JAVA_HOME

:

:

:

# Extra Java runtime options for all Hadoop commands. We don't support

# IPv6 yet/still, so by default the preference is set to IPv4.

# export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true"

# For Kerberos debugging, an extended option set logs more information

# export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Dsun.security.krb5.debug=true -Dsun.security.spnego.debug"

export HADOOP_OPTS="$HADOOP_OPTS -Duser.timezone=GMT+08" # 时区设置

-------------------------------------------------------------------------------------

[root@nj-gjh-57 hadoop-3.3.0]# cat etc/hadoop/hadoop-env.sh |grep -v "^[#,;]"|grep -v "^$"

export JAVA_HOME=/usr/java

export HADOOP_OPTS="$HADOOP_OPTS -Duser.timezone=GMT+08"

export HADOOP_OS_TYPE=${HADOOP_OS_TYPE:-$(uname -s)}etc/hadoop/yarn-env.sh

HADOOP_OPTS="$YARN_OPTS -Duser.timezone=GMT+08" # 时区设置,之前使用YARN_OPTS--3.0之后deprecated,换成了HADOOP_OPTS

----------------------------------------------------------------------------------------

[root@nj-gjh-57 hadoop-3.3.0]# cat etc/hadoop/yarn-env.sh |grep -v "^[#,;]"|grep -v "^$"

HADOOP_OPTS="$YARN_OPTS -Duser.timezone=GMT+08"etc/hadoop/core-site.xml

fs.defaultFS

hdfs://nj-gjh-57:9000/

hadoop.tmp.dir

/usr/local/src/hadoop-3.3.0/data/tmp

etc/hadoop/hdfs-site.xml

dfs.replication

3

dfs.namenode.secondary.http-address

nj-gjh-54:9868

etc/hadoop/mapred-site.xml

mapreduce.framework.name

yarn

mapreduce.jobhistory.address

nj-gjh-54:10020

mapreduce.jobhistory.webapp.address

nj-gjh-54:19888

-------------------------------------------------------------------------------------etc/hadoop/yarn-site.xml

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.resourcemanager.hostname

nj-gjh-54

yarn.log-aggregation-enable

true

yarn.log-aggregation.retain-seconds

604800

yarn.app.mapreduce.am.env

HADOOP_MAPRED_HOME=${HADOOP_HOME}

mapreduce.map.env

HADOOP_MAPRED_HOME=${HADOOP_HOME}

mapreduce.reduce.env

HADOOP_MAPRED_HOME=${HADOOP_HOME}

mapreduce.application.classpath

/usr/local/src/hadoop-3.3.0/etc/hadoop:/usr/local/src/hadoop-3.3.0/share/hadoop/common/lib/*:/usr/local/src/hadoop-3.3.0/share/hadoop/common/*:/usr/local/src/hadoop-3.3.0/share/hadoop/hdfs:

/usr/local/src/hadoop-3.3.0/share/hadoop/hdfs/lib/*:/usr/local/src/hadoop-3.3.0/share/hadoop/hdfs/*:/usr/local/src/hadoop-3.3.0/share/hadoop/mapreduce/*:/usr/local/src/hadoop-3.3.0/share/hadoop

/yarn:/usr/local/src/hadoop-3.3.0/share/hadoop/yarn/lib/*:/usr/local/src/hadoop-3.3.0/share/hadoop/yarn/*

---------------------------------------------------------------------------------

mapreduce.application.classpath的值为,

1.export HADOOP_CLASSPATH=$(hadoop classpath)

2.echo $HADOOP_CLASSPATH

etc/hadoop/workers

# DataNode

nj-gjh-54

nj-gjh-56

nj-gjh-57修改完以上配置之后,将57节点的hadoop3.3.0文件夹同步给其它服务器,使用如下命令

集群中环境变量设置

/etc/profile

export JAVA_HOME=/usr/java

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JRE_HOME/lib/tools.jar:$CLASSPATH

export HADOOP_HOME=/usr/local/src/hadoop-3.3.0

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

#export HADOOP_CLASSPATH=$(hadoop classpath)

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$JAVA_HOME/bin:$PATH

-------------------------------------------------------------------------------------

将主节点57整个/usr/local/src/hadoop-3.3.0文件夹同步到其它节点上面

rsync -av $HADOOP_HOME/ nj-gjh-54:$HADOOP_HOME/

rsync -av $HADOOP_HOME/ nj-gjh-56:$HADOOP_HOME/

-------------------------------------------------

----CAUTION:/usr/local/src/hadoop-3.3.0 没有目录就新建

之前看到其它blog中将rsync封装了一番,也是非常好用的

quatre:在57节点NameNode上初始化,并启动dfs

[root@nj-gjh-57 hadoop-3.3.0]# hdfs namenode -format

日志大概如下:

2020-08-07 19:26:15,752 INFO namenode.FSImage: Allocated new BlockPoolId: BP-756426205-172.16.0.114-1596799575742

2020-08-07 19:26:15,779 INFO common.Storage: Storage directory /usr/local/src/hadoop-3.3.0/data/tmp/dfs/name has been successfully formatted.

2020-08-07 19:26:15,818 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/src/hadoop-3.3.0/data/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

2020-08-07 19:26:15,970 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/src/hadoop-3.3.0/data/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 399 bytes saved in 0 seconds .

2020-08-07 19:26:15,998 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2020-08-07 19:26:16,013 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

2020-08-07 19:26:16,014 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at nj-gjh-57/172.16.0.114

************************************************************/

---------------------------------------------------------------------------------------

之后在HADOOP_HOME路径下可以看到data文件夹是etc/hadoop/core-site.xml配置文件设置的目录

[root@nj-gjh-57 hadoop-3.3.0]# tree data/

data/

└── tmp

└── dfs

└── name

└── current

├── fsimage_0000000000000000000

├── fsimage_0000000000000000000.md5

├── seen_txid

└── VERSION

4 directories, 4 files

----------------------------------------------------------------------------------------

启动Hadoop文件系统

[root@nj-gjh-57 hadoop-3.3.0]# start-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Starting namenodes on [nj-gjh-57]

Starting datanodes

Starting secondary namenodes [nj-gjh-54]

2020-08-07 19:32:24,868 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

----------------------------------------------------------------------------------------

使用jps[https://docs.oracle.com/javase/7/docs/technotes/tools/share/jps.html]查看各个服务器jvm中运行的任务情况

-----------------------------------

[root@nj-gjh-57 hadoop-3.3.0]# jps

20978 DataNode

20836 NameNode

21303 Jps

-----------------------------------

[root@nj-gjh-54 hadoop-3.3.0]# jps

4624 Jps

4579 SecondaryNameNode

4462 DataNode

-----------------------------------

[root@nj-gjh-56 hadoop-3.3.0]# jps

375 DataNode

447 Jps

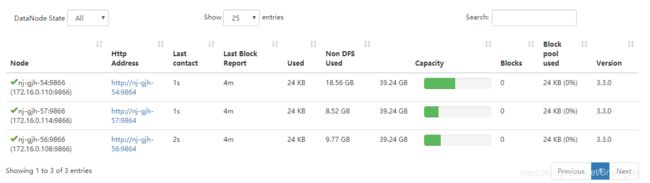

-----------------------------------访问hadoop文件系统前端页面,主节点ip:9870 -- http://nj-gjh-57:9870/dfshealth.html#tab-datanode

如:http://nj-gjh-57:9870/dfshealth.html#tab-datanode

cinq:启动yarn -- Yet Another Resource Negotiator (YARN)

https://hadoop.apache.org/docs/r3.3.0/hadoop-yarn/hadoop-yarn-site/YARN.html

[root@nj-gjh-54 hadoop-3.3.0]# start-yarn.sh

Starting resourcemanager

Starting nodemanagers

-------------------------------------------------------

[root@nj-gjh-54 hadoop-3.3.0]# jps

4579 SecondaryNameNode

5333 Jps

4939 NodeManager # yarn nm role

4462 DataNode

4798 ResourceManager # yarn rm role

-------------------------------------------------------

[root@nj-gjh-56 hadoop-3.3.0]# jps

512 NodeManager # yarn nm role

660 Jps

375 DataNode

-------------------------------------------------------

57节点没有yarn的rm和nm角色

查了一下,貌似在hadoop的从节点上才有nodemanager

不过在设置workers[以前的版本叫slaves]的时候将57也设置了datanode

估计这个问题得去找找架构了,还需要深入的学习

启动之后访问yarn前端页面,状态更加一目了然:

如:http://nj-gjh-54:8088/cluster/nodes

six:使用hadoop3.3.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.0.jar 测试一下之前的搭建情况

新建一个文本文档

[root@nj-gjh-57 hadoop-3.3.0]# more ../input

smith

allen

smile

world hello

遇贤

遇贤

hello world

fengshij

mandy.zj

allen.sf

-----------------------------------------------------------------------------------------

上传到文件系统中 固定前缀"hadoop fs -"

[root@nj-gjh-57 hadoop-3.3.0]# hadoop fs -put ../input /

-----------------------------------------------------------------------------------------

使用命令行查看上传结果

[root@nj-gjh-57 hadoop-3.3.0]# hadoop fs -ls /

Found 2 items

-rw-r--r-- 3 root supergroup 83 2020-08-07 20:02 /input

drwx------ - root supergroup 0 2020-08-07 19:57 /tmp

在主节点页面上查看上传情况:

如:http://nj-gjh-57:9870/explorer.html#/

WordCount Example:

这里在任意节点执行如下命令:

[root@nj-gjh-54 hadoop-3.3.0]# hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.0.jar wordcount /input /output

-----------------------------------------------------------------------------------

过程控制台输出:

[root@nj-gjh-54 hadoop-3.3.0]# hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.0.jar wordcount /input /output

2020-08-07 20:07:31,560 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-08-07 20:07:32,466 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at nj-gjh-54/172.16.0.110:8032

2020-08-07 20:07:33,243 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1596800529553_0002

2020-08-07 20:07:33,597 INFO input.FileInputFormat: Total input files to process : 1

2020-08-07 20:07:33,738 INFO mapreduce.JobSubmitter: number of splits:1

2020-08-07 20:07:33,944 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1596800529553_0002

2020-08-07 20:07:33,944 INFO mapreduce.JobSubmitter: Executing with tokens: []

2020-08-07 20:07:34,162 INFO conf.Configuration: resource-types.xml not found

2020-08-07 20:07:34,163 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2020-08-07 20:07:34,718 INFO impl.YarnClientImpl: Submitted application application_1596800529553_0002

2020-08-07 20:07:34,779 INFO mapreduce.Job: The url to track the job: http://nj-gjh-54:8088/proxy/application_1596800529553_0002/

2020-08-07 20:07:34,780 INFO mapreduce.Job: Running job: job_1596800529553_0002

2020-08-07 20:07:44,054 INFO mapreduce.Job: Job job_1596800529553_0002 running in uber mode : false

2020-08-07 20:07:44,055 INFO mapreduce.Job: map 0% reduce 0%

2020-08-07 20:07:50,152 INFO mapreduce.Job: map 100% reduce 0%

2020-08-07 20:07:57,214 INFO mapreduce.Job: map 100% reduce 100%

2020-08-07 20:07:58,239 INFO mapreduce.Job: Job job_1596800529553_0002 completed successfully

2020-08-07 20:07:58,375 INFO mapreduce.Job: Counters: 54

File System Counters

FILE: Number of bytes read=124

FILE: Number of bytes written=529975

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=175

HDFS: Number of bytes written=82

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

HDFS: Number of bytes read erasure-coded=0

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=3002

Total time spent by all reduces in occupied slots (ms)=4613

Total time spent by all map tasks (ms)=3002

Total time spent by all reduce tasks (ms)=4613

Total vcore-milliseconds taken by all map tasks=3002

Total vcore-milliseconds taken by all reduce tasks=4613

Total megabyte-milliseconds taken by all map tasks=3074048

Total megabyte-milliseconds taken by all reduce tasks=4723712

Map-Reduce Framework

Map input records=10

Map output records=12

Map output bytes=131

Map output materialized bytes=124

Input split bytes=92

Combine input records=12

Combine output records=9

Reduce input groups=9

Reduce shuffle bytes=124

Reduce input records=9

Reduce output records=9

Spilled Records=18

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=200

CPU time spent (ms)=1700

Physical memory (bytes) snapshot=597913600

Virtual memory (bytes) snapshot=5576343552

Total committed heap usage (bytes)=531103744

Peak Map Physical memory (bytes)=360902656

Peak Map Virtual memory (bytes)=2794258432

Peak Reduce Physical memory (bytes)=237010944

Peak Reduce Virtual memory (bytes)=2782085120

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=83

File Output Format Counters

Bytes Written=82

-----------------------------------------------------------------------------------

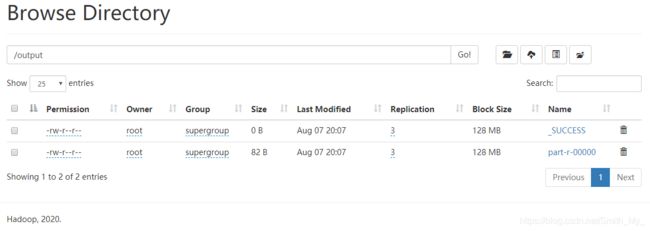

会发现出现了/output目录

[root@nj-gjh-57 hadoop-3.3.0]# hadoop fs -ls /

2020-08-07 20:08:46,810 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 3 items

-rw-r--r-- 3 root supergroup 83 2020-08-07 20:02 /input

drwxr-xr-x - root supergroup 0 2020-08-07 20:07 /output

drwx------ - root supergroup 0 2020-08-07 20:07 /tmp

-----------------------------------------------------------------------------------

统计结果:

[root@nj-gjh-56 hadoop-3.3.0]# hadoop fs -ls /output

Found 2 items

-rw-r--r-- 3 root supergroup 0 2020-08-07 20:07 /output/_SUCCESS

-rw-r--r-- 3 root supergroup 82 2020-08-07 20:07 /output/part-r-00000

------------------------------------

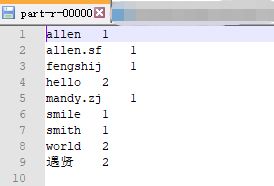

[root@nj-gjh-56 hadoop-3.3.0]# hadoop fs -cat /output/part-r-00000

allen 1

allen.sf 1

fengshij 1

hello 2

mandy.zj 1

smile 1

smith 1

world 2

遇贤 2

-----------------------------------------------------------------------------------最终的结果也可通过页面下载到本地

如:在HDFS中 http://nj-gjh-57:9870/explorer.html#/output

使用文本编辑器打开之后内容为:

如:在YARN中 http://nj-gjh-54:8088/cluster/nodes

之前没有启动mapreduce historyserver,下面把这个角色也启动了

启动historyserver

1.mapred --daemon status historyserver # 新方式

2.mr-jobhistory-daemon.sh status historyserver # Deprecated

-------CAUTION:启动historyserver在配置的54服务上运行启动脚本(这里不知道是否有问题,之前在主节点57上运行启动脚本报错了 ==)

-------------------------------------------------------------------------

[root@nj-gjh-54 hadoop-3.3.0]# mapred --daemon start historyserver

------CAUTION:daemon 容易敲成 deamon

-------------------------------------------------------------------------

[root@nj-gjh-54 hadoop-3.3.0]# jps

4579 SecondaryNameNode

4939 NodeManager

4462 DataNode

4798 ResourceManager

6286 Jps

6207 JobHistoryServer # 通过页面访问:historyserver

如:Tracking URL: http://nj-gjh-54:19888/jobhistory/job/job_1596800529553_0002

sept:小结

Hadoop-Yarn Map-Reduce应用场景:离线计算框架,适用于

Strom 实时流式计算框架

Spark 内存计算框架

Next Marche:Hive