Pitfalls when installing Hadoop 3.0.3 on CentOS

Pitfalls, pitfalls, nothing but pitfalls.

1. but there is no HDFS_NAMENODE_USER defined. Aborting operation.

[root@xcff sbin]# ./start-dfs.sh

Starting namenodes on [localhost]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [localhost]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

That had me stumped for a while. Here is the fix.
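Before the fix itself, note that each error message names the variable it wants. Hadoop 3's shell scripts (libexec/hadoop-functions.sh) build that name from the program and the daemon, in the form <PROGRAM>_<DAEMON>_USER, and abort when a daemon is started as root and that variable is unset. The sketch below only imitates this naming rule to show which variable each daemon needs. It is a simplified illustration, not Hadoop's actual code, and the helper names user_var_name and check_user_var are hypothetical.

```shell
#!/usr/bin/env bash
# Simplified imitation of the root check that produces the errors above.
# NOT Hadoop's code: user_var_name and check_user_var are hypothetical helpers.

# Build the variable name the start scripts look for,
# e.g. hdfs + namenode -> HDFS_NAMENODE_USER.
user_var_name() {
  local program="$1" daemon="$2"
  printf '%s_%s_USER\n' "$program" "$daemon" | tr '[:lower:]' '[:upper:]'
}

# Succeed if the daemon may be started by the current user; when running as
# root without the matching variable, print errors shaped like the ones above.
check_user_var() {
  local program="$1" daemon="$2" var
  var="$(user_var_name "$program" "$daemon")"
  if [ "$(id -u)" -eq 0 ] && [ -z "${!var:-}" ]; then
    echo "ERROR: Attempting to operate on $program $daemon as root" >&2
    echo "ERROR: but there is no $var defined. Aborting operation." >&2
    return 1
  fi
  return 0
}

# Print the variable each daemon needs.
for daemon in namenode datanode secondarynamenode; do
  echo "hdfs $daemon -> $(user_var_name hdfs "$daemon")"
done
for daemon in resourcemanager nodemanager; do
  echo "yarn $daemon -> $(user_var_name yarn "$daemon")"
done
```

The same rule is why the YARN daemons look for YARN_RESOURCEMANAGER_USER and YARN_NODEMANAGER_USER rather than the HDFS_* names.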

In /hadoop/hadoop-3.0.3/sbin/start-dfs.sh, add these lines near the top of the script, right after the shebang line:

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

In /hadoop/hadoop-3.0.3/sbin/start-yarn.sh, the YARN daemons check their own variables rather than the HDFS ones, so add:

YARN_RESOURCEMANAGER_USER=root

YARN_NODEMANAGER_USER=root

In /hadoop/hadoop-3.0.3/sbin/stop-dfs.sh, add the same four lines as in start-dfs.sh:

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

In /hadoop/hadoop-3.0.3/sbin/stop-yarn.sh, add the same two lines as in start-yarn.sh:

YARN_RESOURCEMANAGER_USER=root

YARN_NODEMANAGER_USER=root

2. localhost: Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

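This one means passwordless SSH login is needed: start-dfs.sh starts each daemon by logging in to the target host over ssh, even when that host is localhost.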
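You can check whether passwordless login already works before touching any keys. A minimal sketch, assuming the OpenSSH client: BatchMode=yes makes ssh fail instead of prompting for a password, which as far as I can tell mirrors the default ssh options the Hadoop start scripts use, so a failure here should line up with the Permission denied errors in the log. The function name check_passwordless_ssh is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: does "ssh <host>" work without a password prompt?
# check_passwordless_ssh is a hypothetical helper, not part of Hadoop.
check_passwordless_ssh() {
  local host="${1:-localhost}"
  # BatchMode=yes: never prompt for a password, fail instead.
  # StrictHostKeyChecking=no: do not stop at the "unknown host key" question
  # (this silently records the host key in known_hosts).
  if ssh -o BatchMode=yes -o StrictHostKeyChecking=no -o ConnectTimeout=10 \
      "$host" true </dev/null >/dev/null 2>&1; then
    echo "passwordless ssh to $host: OK"
  else
    echo "passwordless ssh to $host: FAILED"
    return 1
  fi
}
```

Running check_passwordless_ssh localhost should report FAILED until the key setup described in this section is done, and OK afterwards.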

[root@xcff sbin]# ./start-dfs.sh

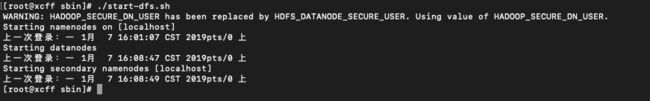

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Starting namenodes on [localhost]

Last login: Mon Jan 7 15:19:55 CST 2019 from 192.168.101.18 on pts/8

localhost: Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

Starting datanodes

Last login: Mon Jan 7 15:34:53 CST 2019 on pts/0

localhost: Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

Starting secondary namenodes [localhost]

Last login: Mon Jan 7 15:34:53 CST 2019 on pts/0

localhost: Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

The fix:

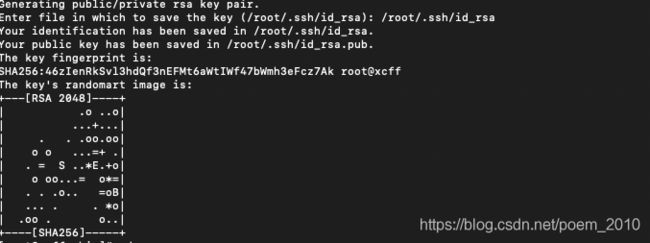

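ssh-keygen -t rsa -P ""

-P "" gives the key an empty passphrase. When asked where to save the key, accept the default, /root/.ssh/id_rsa: the key pair (id_rsa and id_rsa.pub) is generated in the .ssh directory under ~.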
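This step and the append step that follows can also be scripted so that re-running them does no harm. A minimal sketch, assuming OpenSSH's ssh-keygen; the function name setup_ssh_key and its optional directory argument are hypothetical. It generates the key only if none exists, appends the public key only if that exact line is not already in authorized_keys (running cat >> twice would add it twice), and tightens permissions, because sshd with its default StrictModes setting ignores keys in files or directories that other users can write to.

```shell
#!/usr/bin/env bash
# Sketch: idempotent passwordless-ssh setup for the current user.
# setup_ssh_key is a hypothetical helper; the directory defaults to ~/.ssh.
setup_ssh_key() {
  local ssh_dir="${1:-$HOME/.ssh}"
  local auth="$ssh_dir/authorized_keys"

  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"

  # Generate an RSA key pair with an empty passphrase, only if none exists yet.
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -t rsa -P "" -f "$ssh_dir/id_rsa" || return 1
  fi

  # Append the public key only if that exact line is not already present.
  touch "$auth"
  if ! grep -qxF -- "$(cat "$ssh_dir/id_rsa.pub")" "$auth"; then
    cat "$ssh_dir/id_rsa.pub" >> "$auth"
  fi

  # Keep authorized_keys private to its owner.
  chmod 600 "$auth"
}
```

Run it as the user that starts Hadoop (root in this post), then try ssh localhost: it should log in without asking for a password.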

Then append the contents of the generated public key, id_rsa.pub, to authorized_keys. The command (run it from inside ~/.ssh, because it uses relative paths):

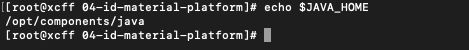

cat id_rsa.pub >> authorized_keys

3. localhost: ERROR: JAVA_HOME is not set and could not be found.

But JAVA_HOME is set as an environment variable, so why doesn't this work?

[root@xcff sbin]# ./start-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Starting namenodes on [localhost]

Last login: Mon Jan 7 15:43:15 CST 2019 on pts/0

localhost: ERROR: JAVA_HOME is not set and could not be found.

Starting datanodes

Last login: Mon Jan 7 15:45:53 CST 2019 on pts/0

localhost: ERROR: JAVA_HOME is not set and could not be found.

Starting secondary namenodes [localhost]

Last login: Mon Jan 7 15:45:54 CST 2019 on pts/0

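localhost: ERROR: JAVA_HOME is not set and could not be found.

The reason: start-dfs.sh starts each daemon through ssh (that is why every error is prefixed with localhost:), and a non-interactive ssh session does not necessarily load the profile where JAVA_HOME was exported. The Hadoop scripts always read hadoop-env.sh, so set it there.

Edit /hadoop/hadoop-3.0.3/etc/hadoop/hadoop-env.sh: uncomment the line # export JAVA_HOME=, set it to the directory printed by echo $JAVA_HOME, and save.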
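The same edit as a small script, handy when it has to be repeated on several machines. A minimal sketch: set_hadoop_java_home is a hypothetical helper, it relies on GNU sed -i (as shipped with CentOS), and it assumes the JDK path contains no | or & characters, because the path is spliced into the sed expression. It rewrites an existing uncommented export JAVA_HOME= line or, if there is none, appends one, leaving the commented template line alone.

```shell
#!/usr/bin/env bash
# Sketch: pin JAVA_HOME in hadoop-env.sh so daemons started over ssh find Java.
# set_hadoop_java_home is a hypothetical helper, not Hadoop tooling.
# Usage: set_hadoop_java_home <path/to/hadoop-env.sh> [jdk-dir]
set_hadoop_java_home() {
  local env_file="$1" java_home="${2:-$JAVA_HOME}"
  if [ -z "$java_home" ]; then
    echo "JAVA_HOME is empty; pass the JDK directory explicitly" >&2
    return 1
  fi
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # An uncommented setting already exists: replace its value.
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file"
  else
    # Otherwise append a new line; the commented template line stays as-is.
    echo "export JAVA_HOME=$java_home" >> "$env_file"
  fi
}
```

For this post's layout that would be set_hadoop_java_home /hadoop/hadoop-3.0.3/etc/hadoop/hadoop-env.sh "$JAVA_HOME".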

With that, everything started up.