Python-Scrapyd

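Scrapyd is a service for running Scrapy spiders. It exposes a set of HTTP endpoints for deploying, starting, stopping, and deleting spiders, which makes it easy to deploy and schedule Scrapy projects. Unlike pyspider, its web interface does not let you view the spider source code.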

- Installing scrapyd

- pip install scrapyd

- Official scrapyd documentation: [http://scrapyd.readthedocs.io/en/stable/](http://scrapyd.readthedocs.io/en/stable/)

- Starting scrapyd

- Once installation finishes, type scrapyd in cmd to start the service

- It binds to port 6800 by default

- Then open http://127.0.0.1:6800/ in a browser to reach the scrapyd page
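Besides opening the page in a browser, we can also check from a script that the service is up. The sketch below is only an illustration and assumes the default address and port from above; the helper names (daemon_status_url, is_running, check_scrapyd) are made up for this example and are not part of scrapyd. It uses the daemonstatus.json endpoint and the standard library only.

```python
import json
from urllib.request import urlopen


def daemon_status_url(host="127.0.0.1", port=6800):
    """Build the URL of scrapyd's daemonstatus.json endpoint."""
    return f"http://{host}:{port}/daemonstatus.json"


def is_running(payload):
    """Return True if a decoded daemonstatus.json payload reports status 'ok'."""
    return payload.get("status") == "ok"


def check_scrapyd(host="127.0.0.1", port=6800, timeout=5):
    """Query a live scrapyd instance.

    Raises OSError (urllib's URLError is a subclass) if nothing is listening.
    """
    with urlopen(daemon_status_url(host, port), timeout=timeout) as resp:
        return is_running(json.loads(resp.read().decode("utf-8")))
```

Calling check_scrapyd() while scrapyd is running should return True; if the service is not started, the call raises instead of returning False, so wrap it in try/except if that matters.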

- Configuring scrapyd.conf

- Path: E:\python\Lib\site-packages\scrapyd

- Contents:

[scrapyd]
# Directory where project eggs are stored
eggs_dir = eggs
# Directory where Scrapy logs are stored. Leave empty to disable log storage
logs_dir = logs
# Directory where Scrapy items are stored. Disabled by default; if set, it overrides Scrapy's FEED_URI setting
items_dir =
# Number of finished jobs to keep per spider. Defaults to 5
jobs_to_keep = 5
# Directory where the project databases are stored
dbs_dir = dbs
# Maximum number of concurrent Scrapy processes. Defaults to 0; when unset or 0, the number of CPUs available on the system multiplied by max_proc_per_cpu is used
max_proc = 0
# Number of processes started per CPU. Defaults to 4
max_proc_per_cpu = 4
# Number of finished processes to keep in the launcher. Defaults to 100
finished_to_keep = 100
# Interval for polling the queues, in seconds. Defaults to 5.0
poll_interval = 5.0
# Address the web service listens on
bind_address = 127.0.0.1
# Default HTTP listening port
http_port = 6800
# Whether debug mode is enabled
debug = off
# Module used to launch sub-processes; supply your own module to customize the Scrapy processes started by Scrapyd
runner = scrapyd.runner
application = scrapyd.app.application
launcher = scrapyd.launcher.Launcher
webroot = scrapyd.website.Root

[services]
schedule.json = scrapyd.webservice.Schedule
cancel.json = scrapyd.webservice.Cancel
addversion.json = scrapyd.webservice.AddVersion
listprojects.json = scrapyd.webservice.ListProjects
listversions.json = scrapyd.webservice.ListVersions
listspiders.json = scrapyd.webservice.ListSpiders
delproject.json = scrapyd.webservice.DeleteProject
delversion.json = scrapyd.webservice.DeleteVersion
listjobs.json = scrapyd.webservice.ListJobs
daemonstatus.json = scrapyd.webservice.DaemonStatus
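The max_proc rule is easier to see with numbers. The following is a minimal sketch of the rule as described in the comments above, not scrapyd's own code: it reads a small config fragment with Python's configparser and works out how many processes would be allowed. The function name effective_max_proc and the cpu_count parameter are assumptions made for this example.

```python
import configparser
import os

# A trimmed-down fragment of the config shown above.
SAMPLE_CONF = """
[scrapyd]
max_proc = 0
max_proc_per_cpu = 4
http_port = 6800
"""


def effective_max_proc(conf_text, cpu_count=None):
    """Apply the documented rule: a positive max_proc wins,
    otherwise use CPU count * max_proc_per_cpu."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    section = parser["scrapyd"]
    max_proc = section.getint("max_proc", fallback=0)
    if max_proc > 0:
        return max_proc
    # cpu_count can be passed explicitly to make the result reproducible.
    cpus = cpu_count if cpu_count is not None else (os.cpu_count() or 1)
    return cpus * section.getint("max_proc_per_cpu", fallback=4)
```

With max_proc = 0 and max_proc_per_cpu = 4, a machine with 2 CPUs ends up with a limit of 8 concurrent Scrapy processes.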

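- Deploying a project

step_1: The Scripts directory of the Python installation contains a scrapyd-deploy file; copy it to the root directory of the Scrapy project

step_2: Edit the scrapy.cfg file in the Scrapy project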

[settings]
default = lianjia.settings

[deploy:127]
# URL of the scrapyd service; the part after "deploy:" is the target name
url = http://localhost:6800/addversion.json
# Project name
project = lianjia

# Several targets can be defined, each with its own name after "deploy:"
[deploy:118]
url = http://192.***.*.***:6800/addversion.json
project = lianjia

step_3: In the Scrapy project directory that contains scrapyd-deploy, check the configured scrapyd targets.

Run python scrapyd-deploy -l to list the deploy targets defined in scrapy.cfg
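To make the relation between the [deploy:name] sections and the target list concrete, here is a rough sketch that extracts the targets from a cfg file with configparser. It only illustrates the idea and is not how scrapyd-deploy is implemented; deploy_targets is a hypothetical helper, and the sample text contains just the first target from step_2.

```python
import configparser

SAMPLE_CFG = """
[settings]
default = lianjia.settings

[deploy:127]
url = http://localhost:6800/addversion.json
project = lianjia
"""


def deploy_targets(cfg_text):
    """Map each deploy target name to its url.

    A target is any section whose name starts with 'deploy:'.
    """
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    targets = {}
    for section in parser.sections():
        if section.startswith("deploy:"):
            name = section.split(":", 1)[1]
            targets[name] = parser.get(section, "url")
    return targets


print(deploy_targets(SAMPLE_CFG))
```

The name returned here (127) is the same deploy_name that is passed to scrapyd-deploy when deploying.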

step_4: Deploy the project to the chosen scrapyd service

Run python scrapyd-deploy deploy_name -p scrapy_project_name

For example: python scrapyd-deploy 127 -p lianjia

C:\Users\wang\Desktop\lianjia>python scrapyd-deploy 127 -p lianjia

Packing version 1530512850

Deploying to project "lianjia" in http://localhost:6800/addversion.json

Server response (200):

{"node_name": "ppp", "status": "ok", "project": "lianjia", "version": "1530512850", "spiders": 1}
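When deployment is scripted, the JSON line at the end of this output is the part worth checking. A small sketch, assuming the response always has the fields shown above; parse_deploy_response is a name invented for this example.

```python
import json


def parse_deploy_response(body):
    """Return (project, version, spider_count) from an addversion.json response.

    Raises RuntimeError when the status field is not 'ok'.
    """
    result = json.loads(body)
    if result.get("status") != "ok":
        raise RuntimeError(f"deploy failed: {result}")
    return result["project"], result["version"], result["spiders"]


# The server response copied from the output above.
sample = ('{"node_name": "ppp", "status": "ok", "project": "lianjia", '
          '"version": "1530512850", "spiders": 1}')
print(parse_deploy_response(sample))  # ('lianjia', '1530512850', 1)
```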

step_5: After a successful deploy, a number of files are generated in the Scrapy project (not explained here; see the official documentation if needed)

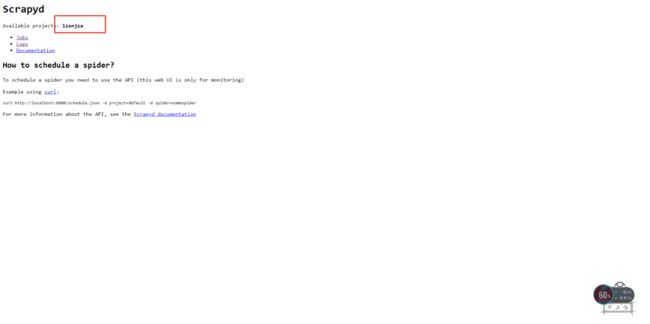

step_6: Open http://127.0.0.1:6800/ again and the project shows up as uploaded. If the code changes, just deploy again and the version is updated automatically

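- Controlling scrapyd through the API

- Every API call is an HTTP request; there are currently 10 APIs in total

- The pattern is http://ip:port/api_command.json, using either GET or POST

- Only the commonly used ones are covered here; see the official documentation for the rest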
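As a quick reference for the URL rule, the sketch below lists the 10 commands from the [services] section together with the HTTP method each one expects, as far as I understand the official documentation for this version (treat the method column as an assumption and check it against your scrapyd release). ENDPOINT_METHODS and endpoint_url are names made up for this example.

```python
# Command name -> HTTP method (assumed from the scrapyd API docs).
ENDPOINT_METHODS = {
    "daemonstatus": "GET",
    "addversion": "POST",
    "schedule": "POST",
    "cancel": "POST",
    "listprojects": "GET",
    "listversions": "GET",
    "listspiders": "GET",
    "listjobs": "GET",
    "delversion": "POST",
    "delproject": "POST",
}


def endpoint_url(command, host="localhost", port=6800):
    """Build http://ip:port/api_command.json for a known command."""
    if command not in ENDPOINT_METHODS:
        raise ValueError(f"unknown scrapyd command: {command}")
    return f"http://{host}:{port}/{command}.json"


print(ENDPOINT_METHODS["schedule"], endpoint_url("schedule"))
```

The client functions in this walkthrough follow the same URL pattern for schedule, listjobs, and cancel.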

# -*- coding: utf-8 -*-
import requests

ip = 'localhost'
port = 6800


def schedule(project, spider):
    """Start a spider run; the parameters go in the form-encoded POST body."""
    url = f'http://{ip}:{port}/schedule.json'
    # Equivalent without f-strings:
    # url = 'http://{}:{}/schedule.json'.format(ip, port)
    params = {
        "project": project,
        "spider": spider,
        # '_version': version
    }
    r = requests.post(url, data=params)
    return r.json()


def listjobs(project):
    """List the pending, running, and finished jobs of a project."""
    url = f'http://{ip}:{port}/listjobs.json'
    r = requests.get(url, params={"project": project})
    return r.json()


def cancel(project, job):
    """Cancel a job by its id."""
    url = f'http://{ip}:{port}/cancel.json'
    r = requests.post(url, data={"project": project, "job": job})
    return r.json()


if __name__ == '__main__':
    # Deploy command: python scrapyd-deploy 127 -p lianjia --version v108
    project = 'lianjia'
    crawler_name = 'lj_crawler'

    # Start the project
    # schedule(project, crawler_name)

    # List all jobs
    # j = listjobs(project)
    # print(j)

    # Stop the project
    job = '43b1441a3ff211e8825f58fb8457c654'
    j = cancel(project, job)
    print(j)
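The job id passed to cancel is hardcoded above. One possible way to avoid that is to pick the id out of the listjobs result. The sketch below assumes the usual shape of a listjobs.json response (lists named pending, running, and finished, whose entries carry id and spider); the sample payload is illustrative, not real output, and job_ids is a hypothetical helper.

```python
def job_ids(listjobs_payload, spider, state="running"):
    """Return the ids of a spider's jobs in the given state.

    state is one of 'pending', 'running', or 'finished'.
    """
    return [
        job["id"]
        for job in listjobs_payload.get(state, [])
        if job.get("spider") == spider
    ]


# Illustrative payload in the assumed listjobs.json shape.
sample_payload = {
    "status": "ok",
    "pending": [],
    "running": [
        {"id": "43b1441a3ff211e8825f58fb8457c654", "spider": "lj_crawler"},
    ],
    "finished": [],
}

print(job_ids(sample_payload, "lj_crawler"))
```

Each id returned this way can then be passed as the job argument of cancel.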

- Troubleshooting

- builtins.KeyError: 'project'

When sending a POST request, pass the parameters via params or data, not json

- TypeError: __init__() missing 1 required positional argument: 'self'

Modify the spider by adding:

def __init__(self, **kwargs):
    super(DingdianSpider, self).__init__(**kwargs)

- Start the project
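The KeyError: 'project' case comes down to how the request body is encoded. The sketch below only illustrates the difference, using the standard library's parse_qs as a stand-in for server-side form parsing (it is not scrapyd's code): a form-encoded body yields a project field, while a JSON body yields no fields at all.

```python
import json
from urllib.parse import parse_qs, urlencode

params = {"project": "lianjia", "spider": "lj_crawler"}

# What data=params sends: application/x-www-form-urlencoded.
form_body = urlencode(params)
# What json=params sends: a JSON document, not form fields.
json_body = json.dumps(params)

print(form_body)                          # project=lianjia&spider=lj_crawler
print(parse_qs(form_body)["project"])     # ['lianjia']
print("project" in parse_qs(json_body))   # False
```

This matches the error: when the body is JSON, a server that looks up the project field among the form arguments finds nothing under that key.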

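# Summary:

- scrapyd provides a web interface where, as with pyspider, we can browse our projects, but it is view-only and cannot be used to manage them

- Projects can also be deployed to scrapyd with scrapyd-client

- gerapy, a more convenient way to work with the scrapyd API, will be covered later

- Running scrapyd with docker will be covered later

- scrapy-redis will be covered later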