基础集合

Collection

-

List

- LinkedList - ArrayList - Vector - Stack -

Queue

- PriorityQueue - Deque - ArrayDeque -

Set

- HashSet - LinkedHashSet - TreeSet

Map

-

HashMap

- LinkedHashMap - TreeMap - HashTable

List

LinkedList

先看字段声明

transient int size = 0;

/**

* Pointer to first node.

* Invariant: (first == null && last == null) ||

* (first.prev == null && first.item != null)

*/

transient Node first;

/**

* Pointer to last node.

* Invariant: (first == null && last == null) ||

* (last.next == null && last.item != null)

*/

transient Node last; 我们看到有三个字段,分别是size、first、last,命名和注释已非常简单明显,不难看出这是一个双向链表。

注意到这三个字段都有个修饰关键字transient,这是比较不常见的,这是与类序列化相关的内容,为了不让这篇将集合的文章太跳,将会在写完LinkedList相关的内容后再讲述。

最核心内容,LinkedList的信息储存单元是一个内部类Node。

LinkedList.Node

三个字段分别是前驱结点,后继结点、节点内容,没什么特别的。

private static class Node {

E item;

Node next;

Node prev;

Node(Node prev, E element, Node next) {

this.item = element;

this.next = next;

this.prev = prev;

}

} 关于对链表的增删结点,获取结点,更换结点等操作比较简单,下面只挑在某个结点前插入一个结点的操作进行讲述:

/**

* Inserts element e before non-null Node succ.

*/

//非public方法,public void add(int index, E element)为上层方法

void linkBefore(E e, Node succ) {//在succ结点前加入以e为值的结点

// assert succ != null;

final Node pred = succ.prev;

//1.将e构造成结点,后继结点为succ,前驱结点为succ的前驱结点,这用在e结点的角度就已经加入到链表中succ前面的位置了,但此时e结点的前后结点指针还未指向e

final Node newNode = new Node<>(pred, e, succ);

//2.将e后面的结点即succ的前驱指向e

succ.prev = newNode;

//3.将e前面的结点的后继指向e,若e此时为第一个结点则将first指针指向e

if (pred == null)

first = newNode;

else

pred.next = newNode;

//4.链表容量增加1

size++;

//5.链表修改次数记录加1

modCount++;

} 通过了解在某个结点前插入一个结点这个操作的实现,不难发现链表一个两个非常重要的特点,一是方便动态增删结点,只需要调整链表局部位置的结点指向,二是随机查询速度较慢,因为需要从头结点一直向前查询。

注意到,其实会对链表结构发生改变的每一个操作,链表都会将修改次数记录modCount加1,那这个modCount的作用是什么呢?其实是用于辅助迭代器正常使用的。

迭代器

迭代器简单来说就是用来对集合的元素进行遍历操作的。调用集合的iterator()或listIterator()方法将实例出从第一个结点开始的迭代器,也可以传入int参数作为第一个迭代的结点。

private class ListItr implements ListIterator {

private Node lastReturned;

private Node next;

private int nextIndex;

private int expectedModCount = modCount;

ListItr(int index) {

// assert isPositionIndex(index);

next = (index == size) ? null : node(index);

nextIndex = index;

}

public boolean hasNext() {

return nextIndex < size;

}

public E next() {

checkForComodification();

if (!hasNext())

throw new NoSuchElementException();

lastReturned = next;

next = next.next;

nextIndex++;

return lastReturned.item;

}

public boolean hasPrevious() {

return nextIndex > 0;

}

public E previous() {

checkForComodification();

if (!hasPrevious())

throw new NoSuchElementException();

lastReturned = next = (next == null) ? last : next.prev;

nextIndex--;

return lastReturned.item;

}

public int nextIndex() {

return nextIndex;

}

public int previousIndex() {

return nextIndex - 1;

}

public void remove() {

checkForComodification();

if (lastReturned == null)

throw new IllegalStateException();

Node lastNext = lastReturned.next;

unlink(lastReturned);

if (next == lastReturned)

next = lastNext;

else

nextIndex--;

lastReturned = null;

expectedModCount++;

}

public void set(E e) {

if (lastReturned == null)

throw new IllegalStateException();

checkForComodification();

lastReturned.item = e;

}

public void add(E e) {

checkForComodification();

lastReturned = null;

if (next == null)

linkLast(e);

else

linkBefore(e, next);

nextIndex++;

expectedModCount++;

}

public void forEachRemaining(Consumer action) {

Objects.requireNonNull(action);

while (modCount == expectedModCount && nextIndex < size) {

action.accept(next.item);

lastReturned = next;

next = next.next;

nextIndex++;

}

checkForComodification();

}

final void checkForComodification() {

if (modCount != expectedModCount)

throw new ConcurrentModificationException();

}

} 从内部类ListItr看到,LinkedList的迭代器可以双向进行迭代的,迭代过程中只能使用迭代器的add()、set()、remove()对链表进行修改,为什么重点标出只能,因为很多新手很容易写出这样的代码:

//虽然没有显式地使用迭代器,但其实底层实现也是使用迭代器进行迭代

for (Object o : linkedList) {

if(o.equals(something)){

linkedList.remove(o);

}

}这样是会报错的,通过注释,我们可以看到这是在迭代过程中是不允许直接修改链表的结构的,fail-fast机制,可以看到,在迭代器的代码中,有个非public方法checkForComodification(),迭代器中几乎每个操作都会调用一下该方法,而该方法的方法体内仅仅做的一件事就是检查链表的modCount是否等于迭代器expectedModCount,不相等将抛出ConcurrentModificationException,从而实现不允许在迭代过程中直接修改链表结构,至于为什么要这样做则自行研究上述迭代的代码看如果在迭代过程中修改了链表结构会有什么错误发生。因此,正确的使用迭代器删除元素应该是像下面这样:

while (iterator.hasNext()){

Object o = iterator.next();

if(o.equals(something)){

iterator.remove();

}

}迭代器的高级用法

从LinkedList的迭代器代码中可以看到一个方法forEachRemaining(),我们查看Iterator接口的描述:

/**

* Performs the given action for each remaining element until all elements

* have been processed or the action throws an exception. Actions are

* performed in the order of iteration, if that order is specified.

* Exceptions thrown by the action are relayed to the caller.

*

* @implSpec

* The default implementation behaves as if:

*

{@code

* while (hasNext())

* action.accept(next());

* }

*

* @param action The action to be performed for each element

* @throws NullPointerException if the specified action is null

* @since 1.8

*/

default void forEachRemaining(Consumer action) {

Objects.requireNonNull(action);

while (hasNext())

action.accept(next());

}看到该方法是在jdk1.8版本后才加入的,用于实现对集合每个元素做特定操作,传入参数为Consumer接口,Consumer接口是一个只有一个方法需要实现的接口,所以不难看出其实该方法是用于配合lambda来使用的,以此更加简化java的表达,举例:

iterator.forEachRemaining(System.out::println);

iterator.forEachRemaining(item -> {

System.out.println(item);

});高级迭代器

继续看Linked的源代码,我们看到一个Spliterator,这是jdk1.8才加入的迭代器,该迭代器其实就是可分割的迭代器,可分割,意味着可以将迭代过程分给不同的线程去完成,从而提高效率。为了方便,源码分析将在下述代码以注释方式进行:

@Override

public Spliterator spliterator() {

return new LLSpliterator(this, -1, 0);

}

/** A customized variant of Spliterators.IteratorSpliterator */

//变种的IteratorSpliterator,区别:IteratorSpliterator使用interator进行迭代,LLSpliterator直接使用Node的next指针迭代,原则上迭代速度更快

static final class LLSpliterator implements Spliterator {

static final int BATCH_UNIT = 1 << 10; // batch array size increment;分割的长度增加单位,每分割一次下次分割长度增加1024

static final int MAX_BATCH = 1 << 25; // max batch array size;最大分割长度,大于2^25分割长度将不再增加

final LinkedList list; // null OK unless traversed

Node current; // current node; null until initialized

int est; // size estimate; -1 until first needed

int expectedModCount; // initialized when est set

int batch; // batch size for splits;当前分割长度

LLSpliterator(LinkedList list, int est, int expectedModCount) {

this.list = list;

this.est = est;

this.expectedModCount = expectedModCount;

}

final int getEst() {

int s; // force initialization

final LinkedList lst;

if ((s = est) < 0) {

if ((lst = list) == null)

s = est = 0;

else {

expectedModCount = lst.modCount;

current = lst.first;

s = est = lst.size;

}

}

return s;

}

public long estimateSize() { return (long) getEst(); }

//分割出batch长度的Spliterator

public Spliterator trySplit() {

Node p;

int s = getEst();

if (s > 1 && (p = current) != null) {

//每次分割长度增加BATCH_UNIT,达到MAX_BATCH便不再增加

int n = batch + BATCH_UNIT;

if (n > s)

n = s;

if (n > MAX_BATCH)

n = MAX_BATCH;

//将需要分割的元素生成数组

Object[] a = new Object[n];

int j = 0;

do { a[j++] = p.item; } while ((p = p.next) != null && j < n);

current = p;

batch = j;

est = s - j;

//返回新的Spliterator,注意:新的Spliterator为ArraySpliterator类型,实现上有所区别,ArraySpliterator每次分割成一半一半,而IteratorSpliterator算术递增

return Spliterators.spliterator(a, 0, j, Spliterator.ORDERED);

}

return null;

}

//遍历当前迭代器中所有元素并对获取元素值进行action操作(操作所有元素)

public void forEachRemaining(Consumer action) {

Node p; int n;

if (action == null) throw new NullPointerException();

if ((n = getEst()) > 0 && (p = current) != null) {

current = null;

est = 0;

do {

E e = p.item;

p = p.next;

action.accept(e);

} while (p != null && --n > 0);

}

if (list.modCount != expectedModCount)

throw new ConcurrentModificationException();

}

//对当前迭代元素的元素值进行action操作(只操作一个元素)

public boolean tryAdvance(Consumer action) {

Node p;

if (action == null) throw new NullPointerException();

if (getEst() > 0 && (p = current) != null) {

--est;

E e = p.item;

current = p.next;

action.accept(e);

if (list.modCount != expectedModCount)

throw new ConcurrentModificationException();

return true;

}

return false;

}

public int characteristics() {

return Spliterator.ORDERED | Spliterator.SIZED | Spliterator.SUBSIZED;

}

} 序列化和Transient

来自百度百科的解释:

java语言的关键字,变量修饰符,如果用transient声明一个实例变量,当对象存储时,它的值不需要维持。换句话来说就是,用transient关键字标记的成员变量不参与序列化过程。

解释了跟没解释差不多。好,其实对象存储就是一个对象序列化的过程,下面提供一个程序以便更好的理解:

package Serializable;

import java.io.*;

public class SerializableTest {

private static void testSerializable(AbstractClass cl) throws IOException {

//依次读取对象的各个字段值

System.out.printf("Minor version number: %d%n", cl.getMinorVer());

System.out.printf("Major version number: %d%n", cl.getMajorVer());

cl.showString();

System.out.println();

//将对象写入硬盘

File file = new File("resource/ObjectRecords.txt");

if (!file.exists()) {

file.createNewFile();

}

try (FileOutputStream fos = new FileOutputStream(file);

ObjectOutputStream oos = new ObjectOutputStream(fos))

{

oos.writeObject(cl);

}

//置空对象的引用

cl = null;

//将对象重新从硬盘读回

try (FileInputStream fis = new FileInputStream("resource/ObjectRecords.txt");

ObjectInputStream ois = new ObjectInputStream(fis))

{

cl = (AbstractClass) ois.readObject();

//依次读取反序列化后的对象的各个字段值

System.out.printf("Minor version number: %d%n", cl.getMinorVer());

System.out.printf("Major version number: %d%n", cl.getMajorVer());

cl.showString();

System.out.println();

} catch (ClassNotFoundException cnfe) {

System.err.println(cnfe.getMessage());

}

}

public static void main(String[] args) throws IOException {

ClassSerializable cl1 = new ClassSerializable("string");

testSerializable(cl1);

ClassAllSerializable cl2 = new ClassAllSerializable("string");

testSerializable(cl2);

ClassNotSerializable cl3 = new ClassNotSerializable("string");

testSerializable(cl3);

}

}

从main方法可以看到这里用来测试的有三个类,分别是ClassSerializable、ClassAllSerializable、ClassNotSerializable,其中前两个实现了Serializable接口,代表这两个类是可以进行序列化的,所以前两个类的对象在进行writeObject的时候不会报错,而ClassNotSerializable则抛出java.io.NotSerializableException: Serializable.ClassNotSerializable,而前两个类区别在于ClassSerializable所有字段均带有Transient关键字,而ClassAllSerializable没有,以下是程序输出结果:

ClassSerializable called

Minor version number: 1

Major version number: 2

string

Minor version number: 0

Major version number: 0

null

ClassAllSerializable called

Minor version number: 1

Major version number: 2

string

Minor version number: 1

Major version number: 2

string

ClassNotSerializable called

Minor version number: 1

Major version number: 2

string

Exception in thread "main" java.io.NotSerializableException: Serializable.ClassNotSerializable

at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1184)

at java.io.ObjectOutputStream.writeObject(ObjectOutputStream.java:348)

at Serializable.SerializableTest.testSerializable(SerializableTest.java:19)

at Serializable.SerializableTest.main(SerializableTest.java:42)

可以明显看到,被transient修饰的字段经过对象的序列化和反序列化后没有被保存起来。

ArrayList

先看字段声明进行初步分析:

/**

* Default initial capacity.

*/

private static final int DEFAULT_CAPACITY = 10;

/**

* Shared empty array instance used for empty instances.

*/

private static final Object[] EMPTY_ELEMENTDATA = {};

/**

* Shared empty array instance used for default sized empty instances. We

* distinguish this from EMPTY_ELEMENTDATA to know how much to inflate when

* first element is added.

*/

private static final Object[] DEFAULTCAPACITY_EMPTY_ELEMENTDATA = {};

/**

* The array buffer into which the elements of the ArrayList are stored.

* The capacity of the ArrayList is the length of this array buffer. Any

* empty ArrayList with elementData == DEFAULTCAPACITY_EMPTY_ELEMENTDATA

* will be expanded to DEFAULT_CAPACITY when the first element is added.

*/

transient Object[] elementData; // non-private to simplify nested class access

/**

* The size of the ArrayList (the number of elements it contains).

*

* @serial

*/

private int size;

显然,elementData是用来存储元素的,也就是说ArrayList底层由数组维护的。

我们都知道,数组的大小初始化之后就是固定的,而数组表的元素是需要进行增删操作的,那么ArrayList是如何实现改变大小的呢?

扩容

不难想象,当进行add()操作的时候需要进行扩容:

public boolean add(E e) {

ensureCapacityInternal(size + 1); // Increments modCount!!

elementData[size++] = e;

return true;

}

public boolean addAll(Collection c) {

Object[] a = c.toArray();

int numNew = a.length;

ensureCapacityInternal(size + numNew); // Increments modCount

System.arraycopy(a, 0, elementData, size, numNew);

size += numNew;

return numNew != 0;

}

private void ensureCapacityInternal(int minCapacity) {

if (elementData == DEFAULTCAPACITY_EMPTY_ELEMENTDATA) {

minCapacity = Math.max(DEFAULT_CAPACITY, minCapacity);

}

ensureExplicitCapacity(minCapacity);

}

private void ensureExplicitCapacity(int minCapacity) {

modCount++;

// overflow-conscious code

if (minCapacity - elementData.length > 0)

grow(minCapacity);

}

private void grow(int minCapacity) {

// overflow-conscious code

int oldCapacity = elementData.length;

int newCapacity = oldCapacity + (oldCapacity >> 1);

if (newCapacity - minCapacity < 0)

newCapacity = minCapacity;

if (newCapacity - MAX_ARRAY_SIZE > 0)

newCapacity = hugeCapacity(minCapacity);

// minCapacity is usually close to size, so this is a win:

elementData = Arrays.copyOf(elementData, newCapacity);

}可以看到,重点是grow()方法,我们分析一下grow()的行为,参数变量minCapacity其实只是一个参考大小,通常为当前大小加新增元素个数,grow首要考虑是将容量增加两倍,若此时minCapacity更大的话才考虑取minCapacity,最后考虑值若比MAX_ARRAY_SIZE还要大则只能尽可能大进行扩容,最后使用Arrays.copyOf()进行新建数组后复制。

其实Arraylist的所有add和remove操作都是基于Arrays.copyOf()进行的,此时,ArrayList相较于LinkedList的特点很明显了,一是因为其底层是数组,所以ArrayList非常擅长与随机读取,二是因为基于Arrays.copyOf()实现的原因,ArrayList增删元素效率很低,而且导致内存占用增加,提高GC触发的几率。

高阶函数

分别是foreach、removeIf、replaceAll、sort,其参数均可使用lumbda表达式,这四个方法来自不同的接口,其实LinkedList也有这几个方法,不过LinkedList均使用的是上级接口的default实现,而ArrayList则对其进行覆盖了,下面将在代码中增加注释加以分析:

@Override

//default使用迭代器迭代,下面实现原理一样,简化检查过程

public void forEach(Consumer action) {

Objects.requireNonNull(action);

final int expectedModCount = modCount;

@SuppressWarnings("unchecked")

final E[] elementData = (E[]) this.elementData;

final int size = this.size;

for (int i=0; modCount == expectedModCount && i < size; i++) {

action.accept(elementData[i]);

}

if (modCount != expectedModCount) {

throw new ConcurrentModificationException();

}

}

@Override

//default使用迭代器迭代,后满足条件进行remove(),上面也讲了ArrayList随机增减元素效率很低,所以default的实现是绝对不可取的,下面实现的思想其实是吧需要删除的元素序号记录下来,然后跳过这些元素把剩余元素按顺序排回this.elementData中

public boolean removeIf(Predicate filter) {

Objects.requireNonNull(filter);

// figure out which elements are to be removed

// any exception thrown from the filter predicate at this stage

// will leave the collection unmodified

int removeCount = 0;

final BitSet removeSet = new BitSet(size);

final int expectedModCount = modCount;

final int size = this.size;

for (int i=0; modCount == expectedModCount && i < size; i++) {

@SuppressWarnings("unchecked")

final E element = (E) elementData[i];

if (filter.test(element)) {

removeSet.set(i);

removeCount++;

}

}

if (modCount != expectedModCount) {

throw new ConcurrentModificationException();

}

// shift surviving elements left over the spaces left by removed elements

final boolean anyToRemove = removeCount > 0;

if (anyToRemove) {

final int newSize = size - removeCount;

for (int i=0, j=0; (i < size) && (j < newSize); i++, j++) {

i = removeSet.nextClearBit(i);

elementData[j] = elementData[i];

}

for (int k=newSize; k < size; k++) {

elementData[k] = null; // Let gc do its work

}

this.size = newSize;

if (modCount != expectedModCount) {

throw new ConcurrentModificationException();

}

modCount++;

}

return anyToRemove;

}

@Override

@SuppressWarnings("unchecked")

//default使用迭代器迭代,下面实现原理一样,简化检查过程

public void replaceAll(UnaryOperator operator) {

Objects.requireNonNull(operator);

final int expectedModCount = modCount;

final int size = this.size;

for (int i=0; modCount == expectedModCount && i < size; i++) {

elementData[i] = operator.apply((E) elementData[i]);

}

if (modCount != expectedModCount) {

throw new ConcurrentModificationException();

}

modCount++;

}

@Override

@SuppressWarnings("unchecked")

//default方法先将集合用toArray()转换成数组再用Arrays.sort,而这对于ArrayList来说肯定是多余的,因为ArrayList的元素容器就是数组

public void sort(Comparator c) {

final int expectedModCount = modCount;

Arrays.sort((E[]) elementData, 0, size, c);

if (modCount != expectedModCount) {

throw new ConcurrentModificationException();

}

modCount++;

} Vector和Stack

简单翻看了Vector的源码和注释,发现它在jdk1.0就存在的,而ArrayList、LinkedList这种都是jdk1.2才增加的,然后在jdk1.2版本的时候稍微改造增加了对List的实现,而其大部分内容和ArrayList没什么大的差别,只是对public方法加上了synchronized关键字,也就是说jdk1.2以后Vector其实相当于是线程安全的ArrayList,这一点注释上也有提及:

/**

...

* As of the Java 2 platform v1.2, this class was retrofitted to

* implement the {@link List} interface, making it a member of the

*

* Java Collections Framework. Unlike the new collection

* implementations, {@code Vector} is synchronized. If a thread-safe

* implementation is not needed, it is recommended to use {@link

* ArrayList} in place of {@code Vector}.

...

*/

而Stack,继承自Vector,在它之上增加了栈的操作(push、pop)。

在注释中我们也注意到,对栈这种后进先出的操作也在双端队列Deque接口的实现中提供,例子给出的是ArrayDeque,关于队列及双端队列我们后续在讲:

/**

...

* A more complete and consistent set of LIFO stack operations is

* provided by the {@link Deque} interface and its implementations, which

* should be used in preference to this class. For example:

*

{@code

* Deque stack = new ArrayDeque();}

...

*/Queue

PriorityQueue

我们先看Queue接口方法:

public interface Queue extends Collection {

/**

* Inserts the specified element into this queue if it is possible to do so

* immediately without violating capacity restrictions, returning

* {@code true} upon success and throwing an {@code IllegalStateException}

* if no space is currently available.

*

* @param e the element to add

* @return {@code true} (as specified by {@link Collection#add})

* @throws IllegalStateException if the element cannot be added at this

* time due to capacity restrictions

* @throws ClassCastException if the class of the specified element

* prevents it from being added to this queue

* @throws NullPointerException if the specified element is null and

* this queue does not permit null elements

* @throws IllegalArgumentException if some property of this element

* prevents it from being added to this queue

*/

boolean add(E e);

/**

* Inserts the specified element into this queue if it is possible to do

* so immediately without violating capacity restrictions.

* When using a capacity-restricted queue, this method is generally

* preferable to {@link #add}, which can fail to insert an element only

* by throwing an exception.

*

* @param e the element to add

* @return {@code true} if the element was added to this queue, else

* {@code false}

* @throws ClassCastException if the class of the specified element

* prevents it from being added to this queue

* @throws NullPointerException if the specified element is null and

* this queue does not permit null elements

* @throws IllegalArgumentException if some property of this element

* prevents it from being added to this queue

*/

boolean offer(E e);

/**

* Retrieves and removes the head of this queue. This method differs

* from {@link #poll poll} only in that it throws an exception if this

* queue is empty.

*

* @return the head of this queue

* @throws NoSuchElementException if this queue is empty

*/

E remove();

/**

* Retrieves and removes the head of this queue,

* or returns {@code null} if this queue is empty.

*

* @return the head of this queue, or {@code null} if this queue is empty

*/

E poll();

/**

* Retrieves, but does not remove, the head of this queue. This method

* differs from {@link #peek peek} only in that it throws an exception

* if this queue is empty.

*

* @return the head of this queue

* @throws NoSuchElementException if this queue is empty

*/

E element();

/**

* Retrieves, but does not remove, the head of this queue,

* or returns {@code null} if this queue is empty.

*

* @return the head of this queue, or {@code null} if this queue is empty

*/

E peek();

} element()和peek()均是获取队列头元素,但不删除,区别是在队列空时peek()返回null,element()抛出NoSuchElementException。

对于队列来说,我们知道,普通队列的特性是先进先出(区别于栈的先进后出),比如LinkedList,队列的特有方法offer()和poll()就是用来对队列插入和取出元素的,而另外两个方法add()和remove()通常也是在队列尾插入在队列头取出,因此通常与offer()和poll()实现的其实是一样的,但是在使用队列时,使用后者方法名语义更强。而PriorityQueue与普通的队列不同,因为元素有优先级,所以不具备先进先出的特点,下面看PriorityQueue的源码,还是先来看字段信息:

/**

* Priority queue represented as a balanced binary heap: the two

* children of queue[n] are queue[2*n+1] and queue[2*(n+1)]. The

* priority queue is ordered by comparator, or by the elements'

* natural ordering, if comparator is null: For each node n in the

* heap and each descendant d of n, n <= d. The element with the

* lowest value is in queue[0], assuming the queue is nonempty.

*/

transient Object[] queue; // non-private to simplify nested class access

/**

* The number of elements in the priority queue.

*/

private int size = 0;

/**

* The comparator, or null if priority queue uses elements'

* natural ordering.

*/

private final Comparator comparator;

/**

* The number of times this priority queue has been

* structurally modified. See AbstractList for gory details.

*/

transient int modCount = 0; // non-private to simplify nested class access很明显,queue是底层存储元素的队列,是一个Object数组,但是不同的是,该数组其实代表的是一颗平衡二叉堆(上面的元素小,下面的元素大),queue[n]为父节点时,queue[2n+1]为左孩子,queue[2(n+1)]为右孩子,其大小关系也就是优先级关系通过comparator作比较决定。

继续看插入过程的源码:

public boolean offer(E e) {

if (e == null)

throw new NullPointerException();

modCount++;

int i = size;

if (i >= queue.length)

grow(i + 1);

size = i + 1;

if (i == 0)

queue[0] = e;

else

siftUp(i, e);

return true;

}

private void siftUp(int k, E x) {

if (comparator != null)

siftUpUsingComparator(k, x);

else

siftUpComparable(k, x);

}

private void siftUpUsingComparator(int k, E x) {

while (k > 0) {

int parent = (k - 1) >>> 1;

Object e = queue[parent];

if (comparator.compare(x, (E) e) >= 0)

break;

queue[k] = e;

k = parent;

}

queue[k] = x;

}可以看到,插入的过程充分体现堆的特点,从二叉树的最后的位置依次向上与父节点比较,比父节点小(优先级大)则将父节点下移,继续比较,知道比父节点大停止并且插入,目的是将优先级小的放到堆的下面使得取出时优先取出优先级大的元素,下面看取出元素的方法:

public E poll() {

if (size == 0)

return null;

int s = --size;

modCount++;

E result = (E) queue[0];

E x = (E) queue[s];

queue[s] = null;

if (s != 0)

siftDown(0, x);

return result;

}

private void siftDown(int k, E x) {

if (comparator != null)

siftDownUsingComparator(k, x);

else

siftDownComparable(k, x);

}

private void siftDownComparable(int k, E x) {

Comparable key = (Comparable)x;

int half = size >>> 1; // loop while a non-leaf

while (k < half) {

int child = (k << 1) + 1; // assume left child is least

Object c = queue[child];

int right = child + 1;

if (right < size &&

((Comparable) c).compareTo((E) queue[right]) > 0)

c = queue[child = right];

if (key.compareTo((E) c) <= 0)

break;

queue[k] = c;

k = child;

}

queue[k] = key;

}取出0号元素(即优先级最大的元素),然后从0号的左孩子依次向上填补直至最后的元素小于当前左孩子则填补。

Deque

Deque继承于queue,区别在于Deque是一个双端队列,两头均可当作队列头或队列尾。

ArrayDeque

比较有代表性的双端队列实现为ArrayDeque,基于数组实现的双端循环队列,虽然使用对象模拟C语言前驱后继指针的方式实现双端循环队列更为直观,但是在不需要随机增删节点情况下在java使用数组实现比模拟链表开销更小,下面直接看源码:

/**

* The array in which the elements of the deque are stored.

* The capacity of the deque is the length of this array, which is

* always a power of two. The array is never allowed to become

* full, except transiently within an addX method where it is

* resized (see doubleCapacity) immediately upon becoming full,

* thus avoiding head and tail wrapping around to equal each

* other. We also guarantee that all array cells not holding

* deque elements are always null.

*/

transient Object[] elements; // non-private to simplify nested class access

/**

* The index of the element at the head of the deque (which is the

* element that would be removed by remove() or pop()); or an

* arbitrary number equal to tail if the deque is empty.

*/

transient int head;

/**

* The index at which the next element would be added to the tail

* of the deque (via addLast(E), add(E), or push(E)).

*/

transient int tail;我们看到有两个int字段,从名字上看也能判断到是头尾指针(并不是说它是真的指针,就是那个效果相当于指针),方法源码不赘述,循环的原理就是移动头尾指针,而不需要说比如获取0号元素后将后面元素依次向前移动,这种操作十分花费开销,增加头尾指针只需直接修改头尾指针数值,因此整个数组虽然是线性的但是可以实现环形的效果。

Set

HashSet、LinkedHashSet和TreeSet

依然先看看字段信息,这个PRESENT先不理,直接看不太知道是什么,先看这个map,看到这个map其实可以猜HashSet的底层是通过HashMap储存的了:

private transient HashMap map;

// Dummy value to associate with an Object in the backing Map

private static final Object PRESENT = new Object(); 不过我们还没开始看HashMap的源码,所以可以先从注释等方面简单了解HashSet和HashMap的关系:

/**

* This class implements the Set interface, backed by a hash table

* (actually a HashMap instance). It makes no guarantees as to the

* iteration order of the set; in particular, it does not guarantee that the

* order will remain constant over time. This class permits the null

* element.

...

**/首先基于HashTable(实际上是HashMap),然后没办法保证Set的迭代顺序,也没办法保证Set元素的顺序不会因为时间而变化,同时允许空值null存入。接下来继续往下看源码,看看是怎么通过HashMap存元素的:

public boolean add(E e) {

return map.put(e, PRESENT)==null;

}原来,是吧Set的元素当成HashMap的Key值存储,而Value值则存入PRESENT这个常量对象,利用了Map的Key值不能重复的特性。至此,对HashSet源代码的了解已经足够,翻看下面基本都是对HashMap的调用。

而LinkedHashSet则继承HashSet,然后调用HashSet的另一个构造器,以LinkedHashMap作为底层存储容器:

//输入变量dummy只用作区分其他以initialCapacity和loadFactor作为输入变量的构造函数

HashSet(int initialCapacity, float loadFactor, boolean dummy) {

map = new LinkedHashMap<>(initialCapacity, loadFactor);

}仍然通过注释简单了解LinkedHashSet:

/**

* Hash table and linked list implementation of the Set interface,

* with predictable iteration order. This implementation differs from

* HashSet in that it maintains a doubly-linked list running through

* all of its entries. This linked list defines the iteration ordering,

* which is the order in which elements were inserted into the set

* (insertion-order). Note that insertion order is not affected

* if an element is re-inserted into the set. (An element e

* is reinserted into a set s if s.add(e) is invoked when

* s.contains(e) would return true immediately prior to

* the invocation.)

通过HashTable(实际上是HashMap)和LinkedList实现Set接口,不同于HashSet的是LinkedHashSet通过维护一个双向链表来保证元素的顺序,其顺序则是元素的插入顺序。

而TreeSet则继承NavigableSet再继承与SortedSet,以TreeMap作为底层存储容器,方法的实现同样是调用TreeMap的方法:

/**

* A {@link NavigableSet} implementation based on a {@link TreeMap}.

* The elements are ordered using their {@linkplain Comparable natural

* ordering}, or by a {@link Comparator} provided at set creation

* time, depending on which constructor is used.

*

* This implementation provides guaranteed log(n) time cost for the basic

* operations ({@code add}, {@code remove} and {@code contains}).

*

TreeSet的元素会使用Comparator对元素大小进行排序,不过因此add和remove以及contains操作将会花费log(n)的时间复杂度(比HashSet多)。

Map

HashMap

先来提取一下注释中的重点:

* An instance of HashMap has two parameters that affect its

* performance: initial capacity and load factor. The

* capacity is the number of buckets in the hash table, and the initial

* capacity is simply the capacity at the time the hash table is created. The

* load factor is a measure of how full the hash table is allowed to

* get before its capacity is automatically increased. When the number of

* entries in the hash table exceeds the product of the load factor and the

* current capacity, the hash table is rehashed (that is, internal data

* structures are rebuilt) so that the hash table has approximately twice the

* number of buckets.

这里说有两个参数是会影响HashMap的性能的,一个是initial capacity(初始容量),另一个是load factor(暂且称作负荷系数),initial capacity就创建HashMap时Hash表的大小,load factor则是用来触发Hash表自动扩容的标准衡量值。当HashMap中的实体数量超过了load factor和当前容量的乘积,HashMap将会触发rehash,调整一下整个哈希表的结构,一般来说调整一次会将Hash表容量编程原来的两倍。

* This map usually acts as a binned (bucketed) hash table, but

* when bins get too large, they are transformed into bins of

* TreeNodes, each structured similarly to those in

* java.util.TreeMap. Most methods try to use normal bins, but

* relay to TreeNode methods when applicable (simply by checking

* instanceof a node). Bins of TreeNodes may be traversed and

* used like any others, but additionally support faster lookup

* when overpopulated. However, since the vast majority of bins in

* normal use are not overpopulated, checking for existence of

* tree bins may be delayed in the course of table methods.

*注释把hash表的每一个格比喻成箱子,箱子里面存储的元素(有时为hash冲突的多个元素)有两种结构,这两种情况对应该箱子有两种称呼,一是normal bins,这是一般情况,箱子中元素较少的时候,以链表形式连接各个元素,第二种是tree bins,此时为因为hash相同放到同一个箱子中元素较多时,这些元素将转化成一种叫红黑树的结构储存。

有了上述基本认识后,正式看源码。

首先,当然先看字段信息:

/**

* The table, initialized on first use, and resized as

* necessary. When allocated, length is always a power of two.

* (We also tolerate length zero in some operations to allow

* bootstrapping mechanics that are currently not needed.)

*/

transient Node[] table; table,显然是底层存储map元素的Hash表,是个Node数组,每一项就是注释中所说的bin

Node和TreeNode

上面说了箱子里的元素有两种结构,一开始为Node构成的链表,当箱子内元素数量达到超过8个则转化成TreeNode构成的红黑树

/**

* Basic hash bin node, used for most entries. (See below for

* TreeNode subclass, and in LinkedHashMap for its Entry subclass.)

*/

static class Node implements Map.Entry {

final int hash;

final K key;

V value;

Node next;

...

/**

* Entry for Tree bins. Extends LinkedHashMap.Entry (which in turn

* extends Node) so can be used as extension of either regular or

* linked node.

*/

static final class TreeNode extends LinkedHashMap.Entry {

TreeNode parent; // red-black tree links

TreeNode left;

TreeNode right;

TreeNode prev; // needed to unlink next upon deletion

boolean red;

TreeNode(int hash, K key, V val, Node next) {

super(hash, key, val, next);

}

...

有两种情况箱子结构会从红黑树变回链表:

一是在红黑树中删除节点后,该红黑树节点太少时。下面截取注释片段和代码片段,具体看HashMap.TreeNode的removeTreeNode方法。

...If the current tree appears to have too few nodes,

* the bin is converted back to a plain bin. (The test triggers

* somewhere between 2 and 6 nodes, depending on tree structure).

*/

final void removeTreeNode(HashMap map, Node[] tab,

boolean movable) {

...

if (root == null || root.right == null ||

(rl = root.left) == null || rl.left == null) {

tab[index] = first.untreeify(map); // too small

return;

} 二是扩容后,元素重新分配位置时,本来在红黑树中的节点也会因为扩容而一部分节点分开,在进行分开操作时同时检查分开后的两部分元素数量是否小于等于6,是则构造链表,不是则重新构造红黑树或保持原本红黑树结构:

/**

* Splits nodes in a tree bin into lower and upper tree bins,

* or untreeifies if now too small. Called only from resize;

* see above discussion about split bits and indices.

...

*/

final void split(HashMap map, Node[] tab, int index, int bit) {

...

//通过hash计算后在低位置的元素

if (loHead != null) {

if (lc <= UNTREEIFY_THRESHOLD)

tab[index] = loHead.untreeify(map);

else {

tab[index] = loHead;

if (hiHead != null) // (else is already treeified)

loHead.treeify(tab);

}

}

//通过hash计算后在高位置的元素

if (hiHead != null) {

if (hc <= UNTREEIFY_THRESHOLD)

tab[index + bit] = hiHead.untreeify(map);

else {

tab[index + bit] = hiHead;

if (loHead != null)

hiHead.treeify(tab);

}

}

} /**

* Holds cached entrySet(). Note that AbstractMap fields are used

* for keySet() and values().

*/

transient Set> entrySet; entrySet,用于储存所有元素的Set集合,同样的还有继承自AbstractMap的keySet和values,它们是比较特殊的Set,不是想象的那样重新存储一遍HashMap的所有元素,它们均不存储元素,只是在使用时通过调用HashMap的迭代器获取元素。

/**

* The number of key-value mappings contained in this map.

*/

transient int size;

/**

* The number of times this HashMap has been structurally modified

* Structural modifications are those that change the number of mappings in

* the HashMap or otherwise modify its internal structure (e.g.,

* rehash). This field is used to make iterators on Collection-views of

* the HashMap fail-fast. (See ConcurrentModificationException).

*/

transient int modCount;

/**

* The next size value at which to resize (capacity * load factor).

*

* @serial

*/

// (The javadoc description is true upon serialization.

// Additionally, if the table array has not been allocated, this

// field holds the initial array capacity, or zero signifying

// DEFAULT_INITIAL_CAPACITY.)

int threshold;

/**

* The load factor for the hash table.

*

* @serial

*/

final float loadFactor;threshold,是一个阈值,达到这个阈值进行rehash操作(调用resize()),然后threshold增大,threshold的值在HashMap实例化后为大于initialCapacity的第一个2次幂数,之后的增大的值为原本的两倍。

下面重点解析插入元素的方法:

public V put(K key, V value) {

return putVal(hash(key), key, value, false, true);

}

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

Node[] tab; Node p; int n, i;

//table空,则进行第一次扩容

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

//通过hash计算的位置无占用则直接将引用指向一个新的Node

if ((p = tab[i = (n - 1) & hash]) == null)

tab[i] = newNode(hash, key, value, null);

//通过hash计算的位置有占用

else {

Node e; K k;

//占用的元素key值相同,则先增加e对占用元素的引用,后续进行替换value值

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

e = p;

//占用的元素为TreeNode,也就是该位置下是一颗红黑树,则调用TreeNode的putTreeVal方法插入节点

else if (p instanceof TreeNode)

e = ((TreeNode)p).putTreeVal(this, tab, hash, key, value);

//占用的元素为普通的Node,遍历到链表尾添加节点

else {

for (int binCount = 0; ; ++binCount) {

if ((e = p.next) == null) {

p.next = newNode(hash, key, value, null);

//在链表中添加节点后箱子元素数量达到8则转换成红黑树

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

treeifyBin(tab, hash);

break;

}

//链表中的元素与插入元素key值相同,跳出循环后续替换value值

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

break;

p = e;

}

}

//key值相同时统一在此替换value值,并直接返回,因为元素数量无变化

if (e != null) { // existing mapping for key

V oldValue = e.value;

if (!onlyIfAbsent || oldValue == null)

e.value = value;

afterNodeAccess(e);

return oldValue;

}

}

++modCount;

//元素数量大于阈值则进行扩容操作

if (++size > threshold)

resize();

afterNodeInsertion(evict);

return null;

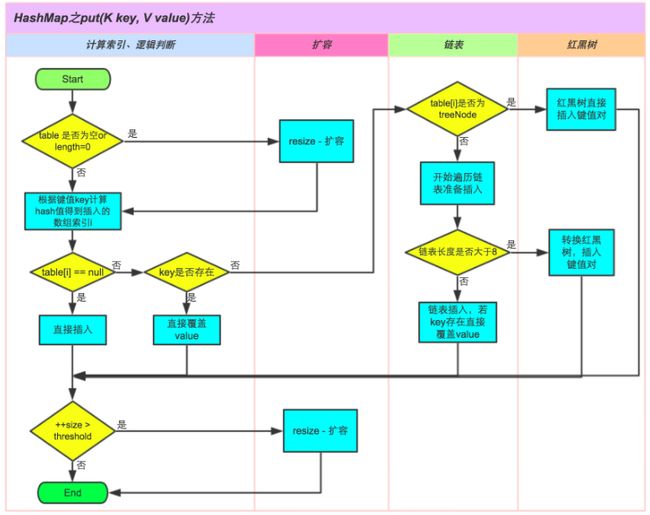

} 配合流程图更好理解:

理解了插入流程后,关于删除和查找其实已经不难,唯一的重点则是关于红黑树的内容。

红黑树

红黑树是一个平衡的二叉树,但不是一个完美的平衡二叉树。虽然我们希望一个所有查找都保持在O(lgn)的时间复杂度,但是这样在动态插入中保持树的完美平衡代价太高,所以,我们稍微放松一下限制,希望找到一个能在对数时间内完成查找的数据结构。这个时候,红黑树站了出来。

首先,一颗红黑树需要满足以下五条性质:

性质一:节点是红色或者是黑色;

在树里面的节点不是红色的就是黑色的,没有其他颜色,要不怎么叫红黑树呢,是吧;

性质二:根节点是黑色;

根节点总是黑色的。它不能为红;

性质三:每个叶节点(NIL或空节点)是黑色;

性质四:每个红色节点的两个子节点都是黑色的(也就是说不存在两个连续的红色节点);

就是连续的两个节点不能是连续的红色,连续的两个节点的意思就是父节点与子节点不能是连续的红色;

性质五:从任一节点到其没个叶节点的所有路径都包含相同数目的黑色节点。

基本介绍完了,下面是红黑树插入和删除操作的介绍:

插入

因为要满足红黑树的五条性质,如果我们插入的是黑色节点,那就违反了性质五,需要进行大规模调整,如果我们插入的是红色节点,那就只有在要插入节点的父节点也是红色的时候违反性质四或者是当插入的节点是根节点时,违反性质二,所以,我们把要插入的节点的颜色变成红色。

插入节点的父节点为黑色或插入节点为根节点时,直接插入:

1.插入的节点是根节点,直接插入黑色根节点

2.插入的节点的父节点是黑色节点,直接插入红色节点

插入节点的父节点为红色,无法直接插入,分三种情况考虑:(以下例子均假设插入节点的父节点是祖父节点的左支,右支的情况为镜像)

下面我们再讲讲删除的操作:

首先你要了解普通二叉树的删除操作:

1.如果删除的是叶节点,可以直接删除;

2.如果被删除的元素有一个子节点,可以将子节点直接移到被删除元素的位置;

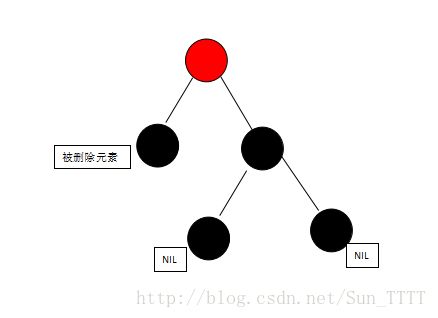

3.如果有两个子节点,这时候就可以把被删除元素的右支的最小节点(被删除元素右支的最左边的节点)和被删除元素互换,我们把被删除元素右支的最左边的节点称之为后继节点(后继元素),然后在根据情况1或者情况2进行操作。如图:

下面所称的被删除元素,皆是指已经互换之后的被删除元素。

加入颜色之后,被删除元素和后继元素互换只是值得互换,并不互换颜色,这个要注意。

下面开始讲一下红黑树删除的规则:

1.当被删除元素为红时,对五条性质没有什么影响,直接删除。

2.当被删除元素为黑且为根节点时,直接删除。

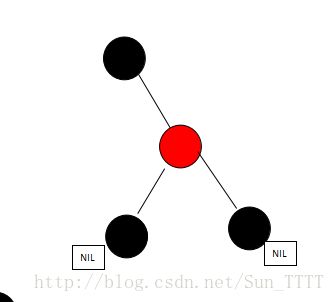

3.当被删除元素为黑,且有一个右子节点为红时,将右子节点涂黑放到被删除元素的位置。如图:

由

4.当被删除元素为黑,且兄弟节点为黑,兄弟节点两个孩子也为黑,父节点为红,此时,交换兄弟节点与父节点的颜色;NIL元素是指每个叶节点都有两个空的,颜色为黑的NIL元素,需要他的时候就可以把它看成两个黑元素,不需要的时候可以忽视他。 如图:

由

5.当被删除元素为黑、并且为父节点的左支,且兄弟颜色为黑,兄弟的右支为红色,这个时候需要交换兄弟与父亲的颜色,并把父亲涂黑、兄弟的右支涂黑,并以父节点为中心左转。如图:

由:

6.当被删除元素为黑、并且为父节点的左支,且兄弟颜色为黑,兄弟的左支为红色,这个时候需要先把兄弟与兄弟的左子节点颜色互换,进行右转,然后就变成了规则5一样了,在按照规则5进行旋转。如图:

由

7.当被删除元素为黑且为父元素的右支时,跟情况5.情况6 互为镜像。

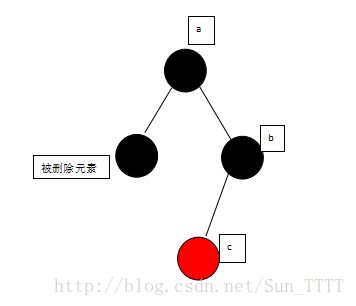

8.被删除元素为黑且兄弟节点为黑,兄弟节点的孩子为黑,父亲为黑,这个时候需要将兄弟节点变为红,再把父亲看做那个被删除的元素(只是看做,实际上不删除),看看父亲符和哪一条删除规则,进行处理变化如图:

由:

8.当被删除的元素为黑,且为父元素的左支,兄弟节点为红色的时候,需要交换兄弟节点与父亲结点的颜色,以父亲结点进行左旋,就变成了情况4,在按照情况四进行操作即可,变化如下:

由:

好了,删除的步骤也讲完,没有讲到的一点就是,在添加删除的时候,时刻要记得更改根元素的颜色为黑。

TreeMap

TreeMap继承自NavigableMap和SortedMap,在Map的基础上增加了获取特定范围的元素(如大于某个值的所有元素,最小的元素),同时因为其底层是红黑树结构,其查找、插入和删除的操作都能保证在O(n)的时间复杂度内完成,最优情况下时间复杂度为O(logN)

* This implementation provides guaranteed log(n) time cost for the

* {@code containsKey}, {@code get}, {@code put} and {@code remove}

* operations. Algorithms are adaptations of those in Cormen, Leiserson, and

* Rivest's Introduction to Algorithms.

继续看字段信息:

/**

* The comparator used to maintain order in this tree map, or

* null if it uses the natural ordering of its keys.

*

* @serial

*/

private final Comparator comparator;

private transient Entry root;

/**

* The number of entries in the tree

*/

private transient int size = 0;

/**

* The number of structural modifications to the tree.

*/

private transient int modCount = 0; 其中有两个字段是最重要的,一个是comparator,因为TreeMap是一个有序的Map,所以comparator是非常的重要;二是root,从名字来看就知道这是红黑树的根指针,整个TreeMap就是一颗红黑树。基本的红黑树也讲解了,在此不做赘述。

LinkedHashMap

LinkedHashMap其实就是在HashMap的基础上增加链表用以记录元素插入顺序,那么它是怎么维护这个链表的呢?从源码中我们首先我们发现,LinkedHashMap的实体类继承了HashMap的实体类并在此基础上增加前后指针:

static class Entry extends HashMap.Node {

Entry before, after;

Entry(int hash, K key, V value, Node next) {

super(hash, key, value, next);

}

} LinkedHashMap的普通元素(区别于树元素)和HashMap直接使用的是不相同的,但是从LinkedHashMap的构造方法来看,LinkedHashMap又是直接构造HashMap实例来存储的,而且并没有修改插入的方法,也就是说,插入元素使用的是HashMap的put方法,那这两者是怎么区别出来的呢?我们回头看看HashMap的put方法:

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

Node[] tab; Node p; int n, i;

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

if ((p = tab[i = (n - 1) & hash]) == null)

//1

tab[i] = newNode(hash, key, value, null);

else {

Node e; K k;

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

e = p;

//2

else if (p instanceof TreeNode)

e = ((TreeNode)p).putTreeVal(this, tab, hash, key, value);

... 有两个需要注意的地方,在上面代码用注释标记出来了,第一个是插入普通节点(区别于树节点)的地方,我们看到,该方法在创建新节点时并不是直接使用构造方法构造node,而是使用了newNode方法:

// Create a regular (non-tree) node

Node newNode(int hash, K key, V value, Node next) {

return new Node<>(hash, key, value, next);

} 而LinkedHashMap同样有这个方法,也就是说,LinkedHashMap是通过覆盖了newNode方法实现创建带有前驱后继指针的节点的,而上面插入方法中第二个需要注意的地方就是插入树节点的地方,这个其实也是一样的,在putTreeVal中同样调用newTreeNode方法:

//HashMap

Node newNode(int hash, K key, V value, Node next) {

return new Node<>(hash, key, value, next);

}

TreeNode newTreeNode(int hash, K key, V value, Node next) {

return new TreeNode<>(hash, key, value, next);

}

//LinkedHashMap

Node newNode(int hash, K key, V value, Node e) {

LinkedHashMap.Entry p =

new LinkedHashMap.Entry(hash, key, value, e);

linkNodeLast(p);

return p;

}

TreeNode newTreeNode(int hash, K key, V value, Node next) {

TreeNode p = new TreeNode(hash, key, value, next);

linkNodeLast(p);

return p;

} 对比两者的新建节点的方法不难发现,除了构造不同的普通节点外,LinkedHashMap的方法还新增了调用linkNodeLast方法,这个方法其实就是将该节点连接到链表的最后。

在插入节点是直接替换值时又是怎样的呢?仍然看put方法:

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

...

if (e != null) { // existing mapping for key

V oldValue = e.value;

if (!onlyIfAbsent || oldValue == null)

e.value = value;

afterNodeAccess(e);

return oldValue;

}

...在替换value值后,调用了afterNodeAccess方法,这个方法在HashMap是空的,在LinkedHashMap被覆盖了:

void afterNodeAccess(Node e) { // move node to last

LinkedHashMap.Entry last;

if (accessOrder && (last = tail) != e) {

LinkedHashMap.Entry p =

(LinkedHashMap.Entry)e, b = p.before, a = p.after;

p.after = null;

if (b == null)

head = a;

else

b.after = a;

if (a != null)

a.before = b;

else

last = b;

if (last == null)

head = p;

else {

p.before = last;

last.after = p;

}

tail = p;

++modCount;

}

} 方法的目的是为了将刚修改的节点从链表中间调换到最后。

在插入节点的最后,还调用了一个方法afterNodeInsertion,先看该方法的代码:

void afterNodeInsertion(boolean evict) { // possibly remove eldest

LinkedHashMap.Entry first;

if (evict && (first = head) != null && removeEldestEntry(first)) {

K key = first.key;

removeNode(hash(key), key, null, false, true);

}

} 我们发现该方法是用于在链表中删除第一个节点的,但是if中的条件永远为false,那很奇怪这个方法到底有什么用?查看removeEldestEntry的方法注释:

/**

* Returns true if this map should remove its eldest entry.

* This method is invoked by put and putAll after

* inserting a new entry into the map. It provides the implementor

* with the opportunity to remove the eldest entry each time a new one

* is added. This is useful if the map represents a cache: it allows

* the map to reduce memory consumption by deleting stale entries.

*

* Sample use: this override will allow the map to grow up to 100

* entries and then delete the eldest entry each time a new entry is

* added, maintaining a steady state of 100 entries.

*

* private static final int MAX_ENTRIES = 100;

*

* protected boolean removeEldestEntry(Map.Entry eldest) {

* return size() > MAX_ENTRIES;

* }

* 注释说道,是为了采用LinkedHashMap设计缓存类时使用的,通过覆盖removeEldestEntry方法设置对应的条件返回false以达到降低内存使用的目的。

同样的,删除节点时同样有一个方法调用用于在链表中删除该节点,方法比较简单,不多介绍:

void afterNodeRemoval(Node e) { // unlink

LinkedHashMap.Entry p =

(LinkedHashMap.Entry)e, b = p.before, a = p.after;

p.before = p.after = null;

if (b == null)

head = a;

else

b.after = a;

if (a == null)

tail = b;

else

a.before = b;

}

protected boolean removeEldestEntry(Map.Entry eldest) {

return false;

}