8 FFmpeg4Android: Streaming a Video File

8.1 How Streaming Works

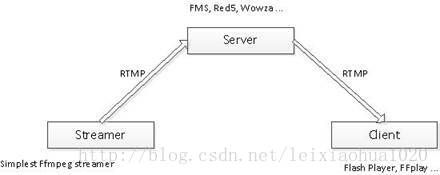

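The role of an RTMP streamer in a streaming media system is shown in the figure above. The streamer first sends video data over RTMP to a streaming media server (for example FMS, Red5, or Wowza); clients (typically Flash Player) then watch the live stream by connecting to that server.

Before running this program, start an RTMP streaming media server and create the corresponding Application on it. Operating the streaming server is outside the scope of this article and is not covered here. Once the program is running, the pushed live stream can be watched with an RTMP client (such as Flash Player or FFplay).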

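Points to note:

1) Container format

RTMP uses FLV as its container format, so the output must be given the format "flv" when the output stream is set up. Other streaming protocols likewise need their container format specified; for example, when pushing over UDP you can specify "mpegts".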

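2) Delay

Data must be sent with a delay. FFmpeg processes data very quickly, so without a delay it would push the whole file out almost instantly and the streaming server could not keep up. Packets therefore have to be sent at the video's actual frame rate. The streamer described here sleeps between video frames with av_usleep() so that data goes out at the video frame rate. The corresponding code is the "Important:Delay" block of the C source in section 8.3.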
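The two points above can be sketched in plain C without linking FFmpeg. The helper names muxer_for_url and pacing_delay_us are hypothetical and are not part of the original streamer. The first maps the output URL scheme to the format name that would be passed to avformat_alloc_output_context2(). The second computes how long to sleep before sending a packet, using simple integer arithmetic where the real code uses av_rescale_q().

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: choose the muxer name from the output URL scheme.
 * Only the two cases mentioned in the text are covered. */
const char *muxer_for_url(const char *url)
{
    if (strncmp(url, "rtmp://", 7) == 0)
        return "flv";      /* RTMP carries FLV */
    if (strncmp(url, "udp://", 6) == 0)
        return "mpegts";   /* the text pairs UDP with MPEG-TS */
    return NULL;           /* unknown scheme: the caller must decide */
}

/* Hypothetical helper: microseconds to sleep before sending a packet.
 * dts        : packet timestamp in stream ticks
 * tb_num/den : stream time base, in seconds per tick
 * elapsed_us : wall-clock microseconds since streaming started
 * Assumption: values are small enough that the multiplication does not
 * overflow; the result is truncated, whereas av_rescale_q() rounds. */
int64_t pacing_delay_us(int64_t dts, int tb_num, int tb_den,
                        int64_t elapsed_us)
{
    assert(tb_num > 0 && tb_den > 0);
    /* Convert the timestamp from stream ticks to microseconds. */
    int64_t pts_time_us = dts * 1000000 * tb_num / tb_den;
    /* Sleep only when the packet is ahead of the wall clock. */
    return pts_time_us > elapsed_us ? pts_time_us - elapsed_us : 0;
}
```

In the streamer's loop, the value returned by pacing_delay_us would be the argument to av_usleep(); a result of 0 means the packet is already due and can be sent immediately.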

8.2 Setting Up the nginx Server

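First, install the tools needed to build nginx and nginx-rtmp:

sudo apt-get install build-essential libpcre3 libpcre3-dev libssl-dev

Create a working directory and switch to it:

mkdir -p /usr/jason/nginx

cd /usr/jason/nginx

Download the nginx and nginx-rtmp source code (wget is a free tool for downloading files from the network non-interactively):

wget http://nginx.org/download/nginx-1.7.5.tar.gz

(or: wget http://nginx.org/download/nginx-1.8.1.tar.gz)

wget https://github.com/arut/nginx-rtmp-module/archive/master.zip

Install the unzip tool, which is needed to unpack the downloaded zip archive:

sudo apt-get install unzip

Extract the nginx and nginx-rtmp archives: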

tar -zxvf nginx-1.7.5.tar.gz

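The -zxvf option string combines four flags:

x : extract files from the tar archive

z : the tar archive is gzip-compressed, so it is decompressed with gunzip during extraction

v : print verbose output

f xxx.tar.gz : the archive file to process is xxx.tar.gz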

unzip master.zip

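Switch to the nginx source directory:

cd nginx-1.7.5

Configure the build so that the nginx-rtmp module is compiled into nginx:

./configure --with-http_ssl_module --add-module=../nginx-rtmp-module-master

Compile and install:

make

sudo make install

Install the nginx init script (into /etc/init.d/):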

sudo wget https://raw.github.com/JasonGiedymin/nginx-init-ubuntu/master/nginx -O /etc/init.d/nginx

sudo chmod +x /etc/init.d/nginx

sudo update-rc.d nginx defaults

Start and stop the nginx service, which generates the configuration file:

sudo service nginx start

sudo service nginx stop

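(Tip: the port can be changed in the listen directive of the server block in /usr/local/nginx/conf/nginx.conf.)

Install FFmpeg (run these commands in the FFmpeg source directory):

./configure --disable-yasm

(A WARNING containing the word "fail" appears at the end of the output; this is normal.)

make

make install

Configure the nginx-rtmp server

Open /usr/local/nginx/conf/nginx.conf

and append the following configuration at the end: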

rtmp {

server {

listen 1935;

chunk_size 4096;

application live {

live on;

record off;

exec ffmpeg -i rtmp://localhost/live/$name -threads 1 -c:v libx264 -profile:v baseline -b:v 350K -s 640x360 -f flv -c:a aac -ac 1 -strict -2 -b:a 56k rtmp://localhost/live360p/$name;

}

application live360p {

live on;

record off;

}

}

}

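Save the configuration file, then restart the nginx service:

sudo service nginx restart

If you use a firewall, allow TCP port 1935.

For example, on Alibaba Cloud, port 1935 must be opened in the security group.

- Setting up a test player on the server:

Copy the player to /usr/local/nginx/html/, then change the playback URL in it.

Appendix: push and playback commands

Push: ffmpeg -re -i "D:\sintel.mp4" -vcodec libx264 -vprofile baseline -acodec aac -ar 44100 -strict -2 -ac 1 -f flv -s 1280x720 -q 10 rtmp://180.76.117.119:1935/live/walker

Play: ffplay rtmp://180.76.117.119:1935/live/walker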
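The push and playback URLs above follow the pattern rtmp://host:port/application/stream, where the application part ("live") matches an application block in the nginx configuration and the stream part ("walker") is a name chosen by the publisher. As a small illustration, the sketch below composes such a URL in C. The function build_rtmp_url is a hypothetical helper introduced only for this example.

```c
#include <stdio.h>

/* Hypothetical helper: compose rtmp://host:port/app/stream into buf.
 * Returns 0 on success, or -1 if the buffer is too small or formatting
 * fails. */
int build_rtmp_url(char *buf, size_t size, const char *host, int port,
                   const char *app, const char *stream)
{
    int n = snprintf(buf, size, "rtmp://%s:%d/%s/%s", host, port, app, stream);
    return (n < 0 || (size_t)n >= size) ? -1 : 0;
}
```

With the configuration shown earlier, passing "live" as the application addresses the original stream, and passing "live360p" addresses the transcoded copy produced by the exec line.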

8.3 Streaming the Video

Java code, which mainly passes in the RTMP URL and declares the JNI function:

import android.os.Bundle;

import android.os.Environment;

import android.app.Activity;

import android.util.Log;

import android.view.Menu;

import android.view.View;

import android.view.View.OnClickListener;

import android.widget.Button;

import android.widget.EditText;

public class MainActivity extends Activity {

// rtmp url

private static final String rtmpURL = "rtmp://118.190.211.61:1935/live/walker";

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

Button startButton = findViewById(R.id.button_start);

final EditText urlEdittext_input = findViewById(R.id.input_url);

final EditText urlEdittext_output = findViewById(R.id.output_url);

urlEdittext_output.setText(rtmpURL);

startButton.setOnClickListener(new OnClickListener() {

public void onClick(View view) {

String folderurl = Environment.getExternalStorageDirectory().getPath();

String urltext_input = urlEdittext_input.getText().toString();

String inputurl = folderurl + "/" + urltext_input;

String outputurl = urlEdittext_output.getText().toString();

Log.e("inputurl", inputurl);

Log.e("outputurl", outputurl);

// stream() blocks until the whole file has been sent

int ret = stream(inputurl, outputurl);

Log.e("Info", "stream() returned " + ret);

}

});

}

@Override

public boolean onCreateOptionsMenu(Menu menu) {

// Inflate the menu; this adds items to the action bar if it is present.

getMenuInflater().inflate(R.menu.main, menu);

return true;

}

// JNI

public native int stream(String inputurl, String outputurl);

static {

System.loadLibrary("avutil-54");

System.loadLibrary("swresample-1");

System.loadLibrary("avcodec-56");

System.loadLibrary("avformat-56");

System.loadLibrary("swscale-3");

System.loadLibrary("postproc-53");

System.loadLibrary("avfilter-5");

System.loadLibrary("avdevice-56");

System.loadLibrary("sffstreamer");

}

}

C code, which demuxes the video file and pushes the stream:

#include <stdio.h>

#include <time.h>

#include "libavcodec/avcodec.h"

#include "libavformat/avformat.h"

#include "libavutil/log.h"

#include "libavutil/time.h"

#ifdef ANDROID

#include <jni.h>

#include <android/log.h>

#define LOGE(format, ...) __android_log_print(ANDROID_LOG_ERROR, "walker", format, ##__VA_ARGS__)

#define LOGI(format, ...) __android_log_print(ANDROID_LOG_INFO, "walker", format, ##__VA_ARGS__)

#else

#define LOGE(format, ...) printf("(>_<) " format "\n", ##__VA_ARGS__)

#define LOGI(format, ...) printf("(^_^) " format "\n", ##__VA_ARGS__)

#endif

//Output FFmpeg's av_log()

void custom_log(void *ptr, int level, const char* fmt, va_list vl){

//To TXT file

FILE *fp=fopen("/storage/emulated/0/av_log.txt","a+");

if(fp){

vfprintf(fp,fmt,vl);

fflush(fp);

fclose(fp);

}

//To Logcat

//LOGE(fmt, vl);

}

JNIEXPORT jint JNICALL Java_com_ffmpeg_push_MainActivity_stream

(JNIEnv *env, jobject obj, jstring input_jstr, jstring output_jstr)

{

AVOutputFormat *ofmt = NULL;

AVFormatContext *ifmt_ctx = NULL, *ofmt_ctx = NULL;

AVPacket pkt;

int ret, i;

char input_str[500]={0};

char output_str[500]={0};

char info[1000]={0};

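//Copy the Java strings into local buffers, then release the JNI strings

const char *input_cstr = (*env)->GetStringUTFChars(env, input_jstr, NULL);

const char *output_cstr = (*env)->GetStringUTFChars(env, output_jstr, NULL);

snprintf(input_str, sizeof(input_str), "%s", input_cstr);

snprintf(output_str, sizeof(output_str), "%s", output_cstr);

(*env)->ReleaseStringUTFChars(env, input_jstr, input_cstr);

(*env)->ReleaseStringUTFChars(env, output_jstr, output_cstr);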

//FFmpeg av_log() callback

av_log_set_callback(custom_log);

av_register_all();

//Network

avformat_network_init();

//Input

if ((ret = avformat_open_input(&ifmt_ctx, input_str, 0, 0)) < 0) {

LOGE( "Could not open input file.");

goto end;

}

if ((ret = avformat_find_stream_info(ifmt_ctx, 0)) < 0) {

LOGE( "Failed to retrieve input stream information");

goto end;

}

int videoindex=-1;

for(i=0; i<ifmt_ctx->nb_streams; i++)

if(ifmt_ctx->streams[i]->codec->codec_type==AVMEDIA_TYPE_VIDEO){

videoindex=i;

break;

}

//Output

avformat_alloc_output_context2(&ofmt_ctx, NULL, "flv",output_str); //RTMP

//avformat_alloc_output_context2(&ofmt_ctx, NULL, "mpegts", output_str);//UDP

if (!ofmt_ctx) {

LOGE( "Could not create output context\n");

ret = AVERROR_UNKNOWN;

goto end;

}

ofmt = ofmt_ctx->oformat;

for (i = 0; i < ifmt_ctx->nb_streams; i++) {

//Create output AVStream according to input AVStream

AVStream *in_stream = ifmt_ctx->streams[i];

AVStream *out_stream = avformat_new_stream(ofmt_ctx, in_stream->codec->codec);

if (!out_stream) {

LOGE( "Failed allocating output stream\n");

ret = AVERROR_UNKNOWN;

goto end;

}

//Copy the settings of AVCodecContext

ret = avcodec_copy_context(out_stream->codec, in_stream->codec);

if (ret < 0) {

LOGE( "Failed to copy context from input to output stream codec context\n");

goto end;

}

out_stream->codec->codec_tag = 0;

if (ofmt_ctx->oformat->flags & AVFMT_GLOBALHEADER)

out_stream->codec->flags |= CODEC_FLAG_GLOBAL_HEADER;

}

//Open output URL

if (!(ofmt->flags & AVFMT_NOFILE)) {

ret = avio_open(&ofmt_ctx->pb, output_str, AVIO_FLAG_WRITE);

if (ret < 0) {

LOGE( "Could not open output URL '%s'\n 'ret':%d", output_str, ret);

goto end;

}

}

//Write file header

ret = avformat_write_header(ofmt_ctx, NULL);

if (ret < 0) {

LOGE( "Error occurred when opening output URL\n 'ret: %d'", ret);

goto end;

}

int frame_index=0;

int64_t start_time=av_gettime();

while (1) {

AVStream *in_stream, *out_stream;

//Get an AVPacket

ret = av_read_frame(ifmt_ctx, &pkt);

if (ret < 0)

break;

//FIX: No PTS (Example: Raw H.264)

//Simple Write PTS

if(pkt.pts==AV_NOPTS_VALUE) {

//Write PTS

AVRational time_base1=ifmt_ctx->streams[videoindex]->time_base;

//Duration between 2 frames (us)

int64_t calc_duration=(double)AV_TIME_BASE/av_q2d(ifmt_ctx->streams[videoindex]->r_frame_rate);

//Parameters

pkt.pts=(double)(frame_index*calc_duration)/(double)(av_q2d(time_base1)*AV_TIME_BASE);

pkt.dts=pkt.pts;

pkt.duration=(double)calc_duration/(double)(av_q2d(time_base1)*AV_TIME_BASE);

}

//Important:Delay

if(pkt.stream_index==videoindex){

AVRational time_base=ifmt_ctx->streams[videoindex]->time_base;

AVRational time_base_q={1,AV_TIME_BASE};

int64_t pts_time = av_rescale_q(pkt.dts, time_base, time_base_q);

int64_t now_time = av_gettime() - start_time;

if (pts_time > now_time)

av_usleep(pts_time - now_time);

}

in_stream = ifmt_ctx->streams[pkt.stream_index];

out_stream = ofmt_ctx->streams[pkt.stream_index];

/* copy packet */

//Convert PTS/DTS

pkt.pts = av_rescale_q_rnd(pkt.pts, in_stream->time_base, out_stream->time_base, AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX);

pkt.dts = av_rescale_q_rnd(pkt.dts, in_stream->time_base, out_stream->time_base, AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX);

pkt.duration = av_rescale_q(pkt.duration, in_stream->time_base, out_stream->time_base);

pkt.pos = -1;

//Print to Screen

if(pkt.stream_index==videoindex){

LOGE("Send %8d video frames to output URL\n",frame_index);

frame_index++;

}

//ret = av_write_frame(ofmt_ctx, &pkt);

ret = av_interleaved_write_frame(ofmt_ctx, &pkt);

if (ret < 0) {

LOGE( "Error muxing packet\n");

break;

}

av_free_packet(&pkt);

}

//Write file trailer

av_write_trailer(ofmt_ctx);

end:

avformat_close_input(&ifmt_ctx);

/* close output */

if (ofmt_ctx && !(ofmt->flags & AVFMT_NOFILE))

avio_close(ofmt_ctx->pb);

avformat_free_context(ofmt_ctx);

if (ret < 0 && ret != AVERROR_EOF) {

LOGE( "Error occurred.\n");

return -1;

}

return 0;

}
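The "No PTS" branch in the loop above derives a timestamp from the frame counter. The arithmetic can be checked in isolation with the sketch below. The function synth_pts is a hypothetical helper, not part of the original source, and it uses integer arithmetic where the streamer uses doubles, so results may differ from the original by a rounding step.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: PTS (in stream ticks) of frame number frame_index.
 * fps_num/fps_den : frame rate in frames per second
 * tb_num/tb_den   : stream time base, in seconds per tick */
int64_t synth_pts(int64_t frame_index, int fps_num, int fps_den,
                  int tb_num, int tb_den)
{
    assert(fps_num > 0 && fps_den > 0 && tb_num > 0 && tb_den > 0);
    /* Duration of one frame in microseconds (truncated). */
    int64_t frame_us = (int64_t)1000000 * fps_den / fps_num;
    /* Convert the elapsed microseconds to stream ticks. */
    return frame_index * frame_us * tb_den / ((int64_t)tb_num * 1000000);
}
```

For a 25 fps stream with a time base of 1/90000, each frame lasts 40000 microseconds, so consecutive frames are 3600 ticks apart.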

Source code download: https://download.csdn.net/download/itismelzp/10317555