Hands-on NLP for Beginners 4: Text Matching with an Attention Mechanism

- Dataset

- Data preprocessing

- Model

- Model training

- References

A new task: text matching

Goal: take two sentences as input and determine the relationship between them.

The code is adapted from https://github.com/Alic-yuan/nlp-beginner-finish/tree/master/task3

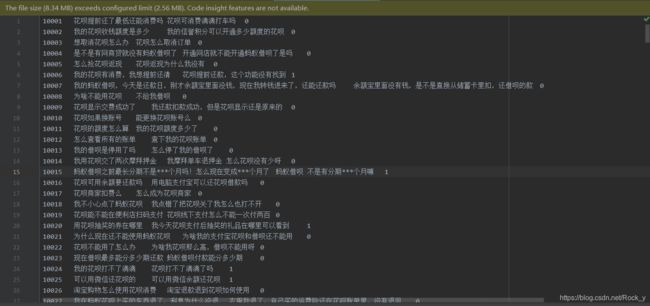

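Dataset

The training set is atec_nlp_sim_train_all.csv and the validation set is dev.csv. Both are tab-separated files without a header row. `load_sentences` below reads each line as the three columns `sentence1`, `sentence2` and `label`. A label of 1 means the two sentences match; a label of 0 means they do not.

The character vocabulary built from the training set is saved as vocab.txt.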
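A minimal sketch of the line format described above, assuming each line is `sentence1<TAB>sentence2<TAB>label` with no header (the layout `load_sentences` expects when it calls `pd.read_csv(..., sep='\t', names=[...])`). The function `parse_pairs` and the two sample lines are hypothetical, and plain string splitting stands in for pandas.

```python
def parse_pairs(text):
    """Parse 'sentence1<TAB>sentence2<TAB>label' lines (no header row)."""
    pairs = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        sentence1, sentence2, label = line.split('\t')
        pairs.append((sentence1, sentence2, int(label)))
    return pairs


# Hypothetical sample lines: label 1 = the sentences match, 0 = they do not
sample = "花呗怎么还款\t花呗如何还钱\t1\n花呗怎么还款\t借呗额度怎么提升\t0\n"
pairs = parse_pairs(sample)
print(pairs)
```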

Data preprocessing

data.py:

```python
import re
from collections import Counter

import gensim
import numpy as np
import pandas as pd
import torch
from hanziconv import HanziConv
from torch.utils.data import Dataset


class LCQMC_Dataset(Dataset):
    def __init__(self, LCQMC_file, vocab_file, max_char_len):
        p, h, self.label = load_sentences(LCQMC_file)
        word2idx, _, _ = load_vocab(vocab_file)
        self.p_list, self.p_lengths, self.h_list, self.h_lengths = word_index(p, h, word2idx, max_char_len)
        self.p_list = torch.from_numpy(self.p_list).type(torch.long)
        self.h_list = torch.from_numpy(self.h_list).type(torch.long)
        self.max_length = max_char_len

    def __len__(self):
        return len(self.label)

    def __getitem__(self, idx):
        return self.p_list[idx], self.p_lengths[idx], self.h_list[idx], self.h_lengths[idx], self.label[idx]


def open_file(filename, mode='r'):
    """
    Common file helper that works in both Python 2 and Python 3.
    mode: 'r' or 'w' for read or write
    """
    return open(filename, mode, encoding='utf-8', errors='ignore')


# Load the sentence pairs and labels used to build the word-index training data
def load_sentences(file, data_size=None):
    df = pd.read_csv(file, sep='\t', names=['sentence1', 'sentence2', 'label'])
    p = map(get_word_list, df['sentence1'].values[0:data_size])
    h = map(get_word_list, df['sentence2'].values[0:data_size])
    label = df['label'].values[0:data_size]
    return p, h, label


# word -> index
def word_index(p_sentences, h_sentences, word2idx, max_char_len):
    p_list, p_length, h_list, h_length = [], [], [], []
    for p_sentence, h_sentence in zip(p_sentences, h_sentences):
        # Tokens that are not in the vocabulary are dropped
        p = [word2idx[word] for word in p_sentence if word in word2idx]
        h = [word2idx[word] for word in h_sentence if word in word2idx]
        p_list.append(p)
        p_length.append(min(len(p), max_char_len))
        h_list.append(h)
        h_length.append(min(len(h), max_char_len))
    p_list = pad_sequences(p_list, maxlen=max_char_len)
    h_list = pad_sequences(h_list, maxlen=max_char_len)
    return p_list, p_length, h_list, h_length


# Load the vocabulary
def load_vocab(vocab_file):
    with open(vocab_file, encoding='utf-8') as f:
        vocab = [line.strip() for line in f]
    word2idx = {word: index for index, word in enumerate(vocab)}
    idx2word = {index: word for index, word in enumerate(vocab)}
    return word2idx, idx2word, vocab


def get_word_list(query):
    """Split a sentence into tokens: Chinese character by character, English words and
    numbers on spaces and punctuation; lowercase, and convert Traditional to Simplified Chinese."""
    query = HanziConv.toSimplified(query.strip())
    # Split on runs of characters other than letters, digits and underscores
    regEx = re.compile(r'[\W]+')
    # [\u4e00-\u9fa5] is the Chinese character range; the capture group keeps each character
    res = re.compile(r'([\u4e00-\u9fa5])')
    sentences = regEx.split(query.lower())
    str_list = []
    for sentence in sentences:
        # re.split always returns a list, so each piece is split into single Chinese characters
        str_list.extend(res.split(sentence))
    return [w for w in str_list if len(w.strip()) > 0]


def load_embeddings(embedding_path):
    model = gensim.models.KeyedVectors.load_word2vec_format(embedding_path, binary=False)
    # gensim >= 4.0 renamed index2word to index_to_key
    words = model.index_to_key if hasattr(model, 'index_to_key') else model.index2word
    # Row 0 is reserved for padding, hence the extra row
    embedding_matrix = np.zeros((len(words) + 1, model.vector_size))
    # Fill the embedding matrix with the word vectors
    for idx, word in enumerate(words):
        embedding_matrix[idx + 1] = model[word]
    return embedding_matrix


def pad_sequences(sequences, maxlen=None, dtype='int32', padding='post',
                  truncating='post', value=0.):
    """Pad sequences to the same length.

    If maxlen is set, every sequence is padded or truncated to maxlen; otherwise the
    length of the longest sequence is used. Padding and truncation each support 'post'
    (work at the end of the sequence) and 'pre' (work at the beginning); both default to 'post'.

    Arguments:
        sequences: list of sequences
        maxlen: int, maximum length
        dtype: data type of the output
        padding: padding mode, 'pre' or 'post'
        truncating: truncation mode, 'pre' or 'post'
        value: float, the padding value

    Returns:
        x: numpy array of shape (number_of_sequences, maxlen)
    """
    lengths = [len(s) for s in sequences]
    nb_samples = len(sequences)
    if maxlen is None:
        maxlen = np.max(lengths)
    x = (np.ones((nb_samples, maxlen)) * value).astype(dtype)
    for idx, s in enumerate(sequences):
        if len(s) == 0:
            continue  # empty list was found
        if truncating == 'pre':
            trunc = s[-maxlen:]
        elif truncating == 'post':
            trunc = s[:maxlen]
        else:
            raise ValueError("Truncating type '%s' not understood" % truncating)
        if padding == 'post':
            x[idx, :len(trunc)] = trunc
        elif padding == 'pre':
            x[idx, -len(trunc):] = trunc
        else:
            raise ValueError("Padding type '%s' not understood" % padding)
    return x


# Build the vocabulary
def build_vocab(train_dir, vocab_size=5000):
    df = pd.read_csv(train_dir, sep='\t', names=['sentence1', 'sentence2', 'label'])
    p = map(get_word_list, df['sentence1'].values)
    h = map(get_word_list, df['sentence2'].values)
    # All tokens are joined into one string, so the counts below are per character
    p2 = ''.join(''.join(i) for i in p)
    h2 = ''.join(''.join(i) for i in h)
    text = p2 + h2
    counter = Counter(text)
    count_pairs = counter.most_common(vocab_size - 1)  # the most frequent characters
    words, _ = list(zip(*count_pairs))
    # Add a <PAD> token at index 0 so that all texts can be padded to the same length
    words = ['<PAD>'] + list(words)
    with open_file('vocab.txt', mode='w') as f:
        f.write('\n'.join(words) + '\n')


if __name__ == '__main__':
    build_vocab('atec_nlp_sim_train_all.csv')
```
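To see what this preprocessing produces, the sketch below re-implements the splitting rule of `get_word_list` (without the `HanziConv` Traditional-to-Simplified step, so it needs only the standard library), builds a character vocabulary the way `build_vocab` does, and maps and pads one sentence like `word_index` with `pad_sequences(padding='post', truncating='post')`. The helper names and the toy corpus are hypothetical. The sketch also makes one quirk visible: `build_vocab` counts individual characters, so a multi-character English or numeric token such as `nlp` is never in the vocabulary and `word_index` silently drops it.

```python
import re
from collections import Counter

NON_WORD = re.compile(r'\W+')                # runs of non-word characters
CJK_CHAR = re.compile(r'([\u4e00-\u9fa5])')  # capture group keeps each Chinese character


def split_tokens(query):
    """Same rule as get_word_list, minus the Traditional-to-Simplified conversion."""
    tokens = []
    for piece in NON_WORD.split(query.strip().lower()):
        tokens.extend(CJK_CHAR.split(piece))
    return [t for t in tokens if t.strip()]


def build_char_vocab(sentences, vocab_size=5000):
    """Character-level vocabulary with '<PAD>' at index 0, as in build_vocab."""
    text = ''.join(''.join(split_tokens(s)) for s in sentences)
    chars = [c for c, _ in Counter(text).most_common(vocab_size - 1)]
    return {w: i for i, w in enumerate(['<PAD>'] + chars)}


def to_padded_ids(query, word2idx, max_len):
    """Drop out-of-vocabulary tokens, truncate at the end, then pad at the end with 0."""
    ids = [word2idx[t] for t in split_tokens(query) if t in word2idx][:max_len]
    return ids + [0] * (max_len - len(ids)), len(ids)


corpus = ["我爱NLP 123", "我爱自然语言处理"]
word2idx = build_char_vocab(corpus)
print(split_tokens("我爱NLP 123"))           # ['我', '爱', 'nlp', '123']
ids, length = to_padded_ids("我爱NLP 123", word2idx, max_len=6)
print(ids, length)                           # [1, 2, 0, 0, 0, 0] 2
```

Only the two Chinese characters survive; `nlp` and `123` are dropped because the vocabulary holds `n`, `l`, `p`, `1`, `2`, `3` as separate entries.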

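Model

The model is ESIM, implemented without the Tree-LSTM variant and using bidirectional attention between the two sentences. It consists of five parts:

- EmbeddingLayer: maps character indices to vectors, followed by variational dropout

- EncodingLayer: a BiLSTM that encodes each sentence

- Local Inference Model: soft-aligns the two encoded sentences with attention in both directions and builds the enhanced features

- Inference Composition: a linear layer and a BiLSTM over the enhanced features, followed by average and max pooling

- LinearSoftmax: a multilayer perceptron that outputs the class scores (logits)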
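For reference, the equations cited in the code comments below (numbered as in the ESIM paper, "Enhanced LSTM for Natural Language Inference") can be written as follows, with $\bar{a}_i$ and $\bar{b}_j$ the BiLSTM outputs of the two sentences, of lengths $\ell_a$ and $\ell_b$. In the code, `p` and `h` play the roles of $\bar{a}$ and $\bar{b}$, padded positions receive a score of -1e7 before the softmax, and $F$ is `CompositionLayer.F`, a single linear layer.

```latex
\begin{aligned}
e_{ij} &= \bar{a}_i^{\top}\bar{b}_j && (11)\\
\tilde{a}_i &= \sum_{j=1}^{\ell_b}\frac{\exp(e_{ij})}{\sum_{k=1}^{\ell_b}\exp(e_{ik})}\,\bar{b}_j && (12)\\
\tilde{b}_j &= \sum_{i=1}^{\ell_a}\frac{\exp(e_{ij})}{\sum_{k=1}^{\ell_a}\exp(e_{kj})}\,\bar{a}_i && (13)\\
m_a &= [\bar{a};\ \tilde{a};\ \bar{a}-\tilde{a};\ \bar{a}\odot\tilde{a}] && (14)\\
m_b &= [\bar{b};\ \tilde{b};\ \bar{b}-\tilde{b};\ \bar{b}\odot\tilde{b}] && (15)\\
v_{a,t} &= \mathrm{BiLSTM}(F(m_a), t),\qquad v_{b,t} = \mathrm{BiLSTM}(F(m_b), t) && (16,\,17)\\
v_{a,\mathrm{ave}} &= \frac{1}{\ell_a}\sum_{t=1}^{\ell_a} v_{a,t},\qquad v_{a,\max} = \max_{t} v_{a,t} && (18)\\
v_{b,\mathrm{ave}} &= \frac{1}{\ell_b}\sum_{t=1}^{\ell_b} v_{b,t},\qquad v_{b,\max} = \max_{t} v_{b,t} && (19)\\
v &= [v_{a,\mathrm{ave}};\ v_{a,\max};\ v_{b,\mathrm{ave}};\ v_{b,\max}] && (20)
\end{aligned}
```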

model.py:

```python
import torch
from torch import nn


class VariationalDropout(nn.Dropout):
    """
    Apply the dropout technique in Gal and Ghahramani, "Dropout as a Bayesian Approximation:
    Representing Model Uncertainty in Deep Learning" (https://arxiv.org/abs/1506.02142) to a
    3D tensor.

    This module accepts a 3D tensor of shape ``(batch_size, num_timesteps, embedding_dim)``
    and samples a single dropout mask of shape ``(batch_size, embedding_dim)`` and applies
    it to every time step.
    """

    def forward(self, input_tensor):
        """
        Apply dropout to input tensor.

        Parameters
        ----------
        input_tensor: ``torch.FloatTensor``
            A tensor of shape ``(batch_size, num_timesteps, embedding_dim)``

        Returns
        -------
        output: ``torch.FloatTensor``
            A tensor of shape ``(batch_size, num_timesteps, embedding_dim)`` with dropout applied.
        """
        ones = input_tensor.data.new_ones(input_tensor.shape[0], input_tensor.shape[-1])
        dropout_mask = torch.nn.functional.dropout(ones, self.p, self.training, inplace=False)
        if self.inplace:
            input_tensor *= dropout_mask.unsqueeze(1)
            return None
        else:
            return dropout_mask.unsqueeze(1) * input_tensor


class EmbeddingLayer(nn.Module):
    """Implement embedding layer."""

    def __init__(self, vector_size, vocab_size, dropout=0.5):
        """
        Arguments:
            vector_size {int} -- word embedding size.
            vocab_size {int} -- vocabulary size.
        Keyword Arguments:
            dropout {float} -- dropout rate. (default: {0.5})
        """
        super(EmbeddingLayer, self).__init__()
        self.vector_size = vector_size
        self.embed = nn.Embedding(vocab_size, vector_size)
        self.dropout = VariationalDropout(dropout)

    def load(self, vectors):
        """Load pre-trained embedding weights.
        Arguments:
            vectors {torch.Tensor} -- from "TEXT.vocab.vectors".
        """
        self.embed.weight.data.copy_(vectors)

    def forward(self, x):
        """
        Arguments:
            x {torch.Tensor} -- input tensor with shape [batch_size, seq_length]
        """
        e = self.embed(x)
        return self.dropout(e)


class EncodingLayer(nn.Module):
    """BiLSTM encoder which encodes both the premise and hypothesis."""

    def __init__(self, input_size, hidden_size):
        super(EncodingLayer, self).__init__()
        # batch_first=True because the inputs are [batch, seq_len, input_size]
        self.lstm = nn.LSTM(input_size, hidden_size,
                            num_layers=1,
                            bidirectional=True,
                            batch_first=True)

    def forward(self, x):
        """
        Arguments:
            x {torch.Tensor} -- input embeddings with shape [batch, seq_len, input_size]
        Returns:
            output {torch.Tensor} -- [batch, seq_len, num_directions * hidden_size]
        """
        self.lstm.flatten_parameters()
        output, _ = self.lstm(x)
        return output


class LocalInferenceModel(nn.Module):
    """The local inference model introduced in the paper."""

    def __init__(self):
        super(LocalInferenceModel, self).__init__()
        self.softmax_1 = nn.Softmax(dim=1)
        self.softmax_2 = nn.Softmax(dim=2)

    def forward(self, p, h, p_mask, h_mask):
        """Apply local inference to premise and hypothesis.
        Arguments:
            p {torch.Tensor} -- p has shape [batch, seq_len_p, 2 * hidden_size]
            h {torch.Tensor} -- h has shape [batch, seq_len_h, 2 * hidden_size]
            p_mask {torch.Tensor (int)} -- p_mask has shape [batch, seq_len_p], 0 in the mask
                means padding.
            h_mask {torch.Tensor (int)} -- h_mask has shape [batch, seq_len_h]
        Returns:
            m_p, m_h {torch.Tensor} -- tensor with shape [batch, seq_len, 8 * hidden_size]
        """
        # equation 11 in the paper:
        e = torch.matmul(p, h.transpose(1, 2))  # [batch, seq_len_p, seq_len_h]
        # masking the scores for padding tokens
        inference_mask = torch.matmul(p_mask.unsqueeze(2).float(),
                                      h_mask.unsqueeze(1).float())
        e.masked_fill_(inference_mask < 1e-7, -1e7)
        # equation 12 & 13 in the paper:
        h_score, p_score = self.softmax_1(e), self.softmax_2(e)
        h_ = h_score.transpose(1, 2).bmm(p)
        p_ = p_score.bmm(h)
        # equation 14 & 15 in the paper:
        m_p = torch.cat((p, p_, p - p_, p * p_), dim=-1)
        m_h = torch.cat((h, h_, h - h_, h * h_), dim=-1)
        assert inference_mask.shape == e.shape
        assert p.shape == p_.shape and h.shape == h_.shape
        assert m_p.shape[-1] == p.shape[-1] * 4
        return m_p, m_h


class CompositionLayer(nn.Module):
    """The composition layer."""

    def __init__(self, input_size, output_size, hidden_size, dropout=0.5):
        """
        Arguments:
            input_size {int} -- input size to the feedforward neural network.
            output_size {int} -- output size of the feedforward neural network.
            hidden_size {int} -- output hidden size of the LSTM model.
        Keyword Arguments:
            dropout {float} -- dropout rate (default: {0.5})
        """
        super(CompositionLayer, self).__init__()
        self.hidden_size = hidden_size
        self.F = nn.Linear(input_size, output_size)
        # batch_first=True because the inputs are [batch, seq_len, output_size]
        self.lstm = nn.LSTM(output_size, hidden_size,
                            num_layers=1, bidirectional=True, batch_first=True)
        self.dropout = VariationalDropout(dropout)

    def forward(self, m):
        """
        Arguments:
            m {torch.Tensor} -- [batch, seq_len, input_size]
        Returns:
            outputs {torch.Tensor} -- [batch, seq_len, hidden_size * 2]
        """
        y = self.dropout(self.F(m))
        self.lstm.flatten_parameters()
        outputs, _ = self.lstm(y)
        assert m.shape[:2] == outputs.shape[:2] and \
            outputs.shape[-1] == self.hidden_size * 2
        return outputs


class Pooling(nn.Module):
    """Apply max pooling and average pooling to the outputs of LSTM."""

    def __init__(self):
        super(Pooling, self).__init__()

    def forward(self, x, x_mask):
        """
        Arguments:
            x {torch.Tensor} -- [batch, seq_len, hidden_size * 2]
            x_mask {torch.Tensor} -- [batch, seq_len], 0 in the mask means padding
        Returns:
            v {torch.Tensor} -- [batch, hidden_size * 4]
        """
        mask_expand = x_mask.unsqueeze(-1).expand(x.shape)
        # average pooling over the non-padding positions
        x_ = x * mask_expand.float()
        v_avg = x_.sum(1) / x_mask.sum(-1).unsqueeze(-1).float()
        # max pooling; padding positions are pushed to -1e7 so they are never selected
        x_ = x.masked_fill(mask_expand == 0, -1e7)
        v_max = x_.max(1).values
        assert v_avg.shape == v_max.shape == (x.shape[0], x.shape[-1])
        return torch.cat((v_avg, v_max), dim=-1)


class InferenceComposition(nn.Module):
    """Inference composition described in paper section 3.3"""

    def __init__(self, input_size, output_size, hidden_size, dropout=0.5):
        """
        Arguments:
            input_size {int} -- input size to the feedforward neural network.
            output_size {int} -- output size of the feedforward neural network.
            hidden_size {int} -- output hidden size of the LSTM model.
        Keyword Arguments:
            dropout {float} -- dropout rate (default: {0.5})
        """
        super(InferenceComposition, self).__init__()
        # One composition layer is shared by both sentences
        self.composition = CompositionLayer(input_size,
                                            output_size,
                                            hidden_size,
                                            dropout=dropout)
        self.pooling = Pooling()

    def forward(self, m_p, m_h, p_mask, h_mask):
        """
        Arguments:
            m_p {torch.Tensor} -- [batch, seq_len, input_size]
            m_h {torch.Tensor} -- [batch, seq_len, input_size]
            p_mask, h_mask {torch.Tensor} -- [batch, seq_len], 0 means padding
        Returns:
            v {torch.Tensor} -- [batch, hidden_size * 8]
        """
        # equation 16 & 17 in the paper
        v_p, v_h = self.composition(m_p), self.composition(m_h)
        # equation 18 & 19 in the paper
        v_p_, v_h_ = self.pooling(v_p, p_mask), self.pooling(v_h, h_mask)
        # equation 20 in the paper
        v = torch.cat((v_p_, v_h_), dim=-1)
        assert v.shape == (m_p.shape[0], v_p.shape[-1] * 4)
        return v


class LinearSoftmax(nn.Module):
    """Implement the final linear layer."""

    def __init__(self, input_size, output_size, class_num, activation='relu', dropout=0.5):
        super(LinearSoftmax, self).__init__()
        if activation == 'relu':
            self.activation = nn.ReLU()
        elif activation == 'tanh':
            self.activation = nn.Tanh()
        else:
            raise ValueError("Unknown activation function!!!")
        self.dropout = nn.Dropout(dropout)
        self.mlp = nn.Sequential(
            self.dropout,
            nn.Linear(input_size, output_size),
            self.activation,
            nn.Linear(output_size, class_num)
        )

    def forward(self, x):
        """
        Arguments:
            x {torch.Tensor} -- [batch, features]
        Returns:
            logits {torch.Tensor} -- raw, unnormalized scores for each class. [batch, class_num]
        """
        logits = self.mlp(x)
        return logits


class ESIM(nn.Module):
    """Implement ESIM model using the modules defined above."""

    def __init__(self,
                 hidden_size,
                 vector_size=64,
                 vocab_size=1973,  # must be at least the number of entries in vocab.txt
                 num_labels=3,
                 dropout=0.5,
                 device='cpu'
                 ):
        """
        Arguments:
            hidden_size {int} -- LSTM hidden size.
        Keyword Arguments:
            vector_size {int} -- word embedding size.
            vocab_size {int} -- the size of the vocabulary.
            num_labels {int} -- number of classes for classification (default: {3})
            dropout {float} -- dropout rate (default: {0.5})
            device -- device the model runs on; read by the functions in utils.py
        """
        super(ESIM, self).__init__()
        self.device = device
        self.embedding_layer = EmbeddingLayer(vector_size, vocab_size, dropout)
        # One encoder is shared by both sentences
        self.encoder = EncodingLayer(vector_size, hidden_size)
        self.inference = LocalInferenceModel()
        self.inferComp = InferenceComposition(hidden_size * 8,
                                              hidden_size,
                                              hidden_size,
                                              dropout)
        self.linear = LinearSoftmax(hidden_size * 8,
                                    hidden_size,
                                    num_labels,
                                    activation='tanh')

    def load_embeddings(self, vectors):
        """Load pre-trained word embeddings.
        Arguments:
            vectors {torch.Tensor} -- pre-trained vectors.
        """
        self.embedding_layer.load(vectors)

    def forward(self, p, p_length, h, h_length):
        """
        Arguments:
            p {torch.Tensor} -- premise [batch, seq_len]
            h {torch.Tensor} -- hypothesis [batch, seq_len]
            p_length, h_length -- sentence lengths; not used, padding is detected from index 0
        Returns:
            logits {torch.Tensor} -- raw, unnormalized scores for each class
                with shape [batch, class_num]
            probabilities {torch.Tensor} -- softmax of the logits
        """
        # input embedding
        p_embeded = self.embedding_layer(p)
        h_embeded = self.embedding_layer(h)
        p_ = self.encoder(p_embeded)
        h_ = self.encoder(h_embeded)
        # local inference; index 0 is <PAD> (see build_vocab and pad_sequences)
        p_mask, h_mask = (p != 0).long(), (h != 0).long()
        m_p, m_h = self.inference(p_, h_, p_mask, h_mask)
        # inference composition
        v = self.inferComp(m_p, m_h, p_mask, h_mask)
        # final multi-layer perceptron
        logits = self.linear(v)
        probabilities = nn.functional.softmax(logits, dim=-1)
        return logits, probabilities
```
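The NumPy sketch below mirrors `LocalInferenceModel` (equations 11 to 15) and `Pooling` on random arrays, so the shapes and the masking can be checked without PyTorch. It is an illustration under those assumptions, not the modules themselves: the function names are hypothetical, and the pooling demo runs on a random stand-in for the composition BiLSTM output. Changing the padded positions of the second sentence leaves the aligned vectors of the first sentence unchanged, which is exactly what the -1e7 mask is for.

```python
import numpy as np


def softmax(x, axis):
    """Numerically stable softmax along one axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def local_inference(p, h, p_mask, h_mask):
    """p: [B, Lp, D], h: [B, Lh, D]; masks: [B, L] with 1 = real token, 0 = padding."""
    e = p @ h.transpose(0, 2, 1)                          # [B, Lp, Lh], eq. 11
    pair_mask = p_mask[:, :, None] * h_mask[:, None, :]   # 1 only where both tokens are real
    e = np.where(pair_mask > 0, e, -1e7)                  # same role as masked_fill_(..., -1e7)
    p_tilde = softmax(e, axis=2) @ h                      # [B, Lp, D], eq. 12
    h_tilde = softmax(e, axis=1).transpose(0, 2, 1) @ p   # [B, Lh, D], eq. 13
    m_p = np.concatenate([p, p_tilde, p - p_tilde, p * p_tilde], axis=-1)  # eq. 14
    m_h = np.concatenate([h, h_tilde, h - h_tilde, h * h_tilde], axis=-1)  # eq. 15
    return m_p, m_h


def masked_pool(x, mask):
    """Average and max pooling over real time steps only: [B, L, D] -> [B, 2D]."""
    m = mask[:, :, None].astype(x.dtype)
    v_avg = (x * m).sum(axis=1) / m.sum(axis=1)
    v_max = np.where(m > 0, x, -1e7).max(axis=1)
    return np.concatenate([v_avg, v_max], axis=-1)


rng = np.random.default_rng(0)
B, Lp, Lh, D = 2, 5, 4, 6
p = rng.normal(size=(B, Lp, D))    # stands in for the BiLSTM output of sentence 1
h = rng.normal(size=(B, Lh, D))    # stands in for the BiLSTM output of sentence 2
p_mask = np.array([[1, 1, 1, 0, 0], [1, 1, 1, 1, 1]])
h_mask = np.array([[1, 1, 0, 0], [1, 1, 1, 1]])

m_p, m_h = local_inference(p, h, p_mask, h_mask)
print(m_p.shape, m_h.shape)        # (2, 5, 24) (2, 4, 24)

v_p = rng.normal(size=(B, Lp, 8))  # stands in for the composition BiLSTM output
print(masked_pool(v_p, p_mask).shape)  # (2, 16)
```

As in `ESIM`, the enhanced features are four times as wide as the encoder output, and the pooled vector is twice as wide as the composition output for each sentence.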

Model training

Training uses the `train` and `validate` functions from utils.py.
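Inside `train`, gradients are rescaled with `nn.utils.clip_grad_norm_(model.parameters(), max_gradient_norm)` before each optimizer step. The NumPy sketch below illustrates the idea for the default L2 norm: compute one norm over all parameter gradients together and, if it exceeds the limit, scale every gradient by the same factor. The function name is hypothetical and the small epsilon in the denominator is an assumption modeled on PyTorch's implementation; treat it as an illustration, not a drop-in replacement.

```python
import numpy as np


def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Scale a list of gradient arrays so that their combined L2 norm is at most max_norm."""
    total_norm = np.sqrt(sum(float(np.sum(g ** 2)) for g in grads))
    scale = min(1.0, max_norm / (total_norm + eps))  # never scale gradients up
    return [g * scale for g in grads], total_norm


grads = [np.array([3.0, 0.0]), np.array([[0.0], [4.0]])]  # combined norm is 5
clipped, norm = clip_by_global_norm(grads, max_norm=1.0)
print(norm)  # 5.0
```

Because every gradient is multiplied by the same factor, the update direction is preserved and only its length is capped.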

utils.py:

```python
import time

import torch
import torch.nn as nn
from sklearn.metrics import roc_auc_score
from tqdm import tqdm


def sort_by_seq_lens(batch, sequences_lengths, descending=True):
    sorted_seq_lens, sorting_index = sequences_lengths.sort(0, descending=descending)
    sorted_batch = batch.index_select(0, sorting_index)
    idx_range = torch.arange(0, len(sequences_lengths)).type_as(sequences_lengths)
    _, reverse_mapping = sorting_index.sort(0, descending=False)
    restoration_index = idx_range.index_select(0, reverse_mapping)
    return sorted_batch, sorted_seq_lens, sorting_index, restoration_index


def get_mask(sequences_batch, sequences_lengths):
    batch_size = sequences_batch.size()[0]
    max_length = torch.max(sequences_lengths)
    mask = torch.ones(batch_size, max_length, dtype=torch.float)
    mask[sequences_batch[:, :max_length] == 0] = 0.0
    return mask


def masked_softmax(tensor, mask):
    """
    Apply a masked softmax on the last dimension of a tensor.
    The input tensor and mask should be of size (batch, *, sequence_length).

    Args:
        tensor: The tensor on which the softmax function must be applied along
            the last dimension.
        mask: A mask of the same size as the tensor with 0s in the positions of
            the values that must be masked and 1s everywhere else.

    Returns:
        A tensor of the same size as the inputs containing the result of the
        softmax.
    """
    tensor_shape = tensor.size()
    reshaped_tensor = tensor.view(-1, tensor_shape[-1])
    # Reshape the mask so it matches the size of the input tensor.
    while mask.dim() < tensor.dim():
        mask = mask.unsqueeze(1)
    mask = mask.expand_as(tensor).contiguous().float()
    reshaped_mask = mask.view(-1, mask.size()[-1])
    result = nn.functional.softmax(reshaped_tensor * reshaped_mask, dim=-1)
    result = result * reshaped_mask
    # 1e-13 is added to avoid divisions by zero.
    result = result / (result.sum(dim=-1, keepdim=True) + 1e-13)
    return result.view(*tensor_shape)


def weighted_sum(tensor, weights, mask):
    """
    Apply a weighted sum on the vectors along the last dimension of 'tensor',
    and mask the vectors in the result with 'mask'.

    Args:
        tensor: A tensor of vectors on which a weighted sum must be applied.
        weights: The weights to use in the weighted sum.
        mask: A mask to apply on the result of the weighted sum.

    Returns:
        A new tensor containing the result of the weighted sum after the mask
        has been applied on it.
    """
    weighted_sum = weights.bmm(tensor)
    while mask.dim() < weighted_sum.dim():
        mask = mask.unsqueeze(1)
    mask = mask.transpose(-1, -2)
    mask = mask.expand_as(weighted_sum).contiguous().float()
    return weighted_sum * mask


def replace_masked(tensor, mask, value):
    """
    Replace all the values of vectors in 'tensor' that are masked in
    'mask' by 'value'.

    Args:
        tensor: The tensor in which the masked vectors must have their values
            replaced.
        mask: A mask indicating the vectors which must have their values
            replaced.
        value: The value to place in the masked vectors of 'tensor'.

    Returns:
        A new tensor of the same size as 'tensor' where the values of the
        vectors masked in 'mask' were replaced by 'value'.
    """
    mask = mask.unsqueeze(1).transpose(2, 1)
    reverse_mask = 1.0 - mask
    values_to_add = value * reverse_mask
    return tensor * mask + values_to_add


def correct_predictions(output_probabilities, targets):
    """
    Compute the number of predictions that match some target classes in the
    output of a model.

    Args:
        output_probabilities: A tensor of probabilities for different output
            classes.
        targets: The indices of the actual target classes.

    Returns:
        The number of correct predictions in 'output_probabilities'.
    """
    _, out_classes = output_probabilities.max(dim=1)
    correct = (out_classes == targets).sum()
    return correct.item()


def validate(model, dataloader, criterion):
    """
    Compute the loss and accuracy of a model on some validation dataset.

    Args:
        model: A torch module for which the loss and accuracy must be
            computed. Its 'device' attribute gives the device to run on.
        dataloader: A DataLoader object to iterate over the validation data.
        criterion: A loss criterion to use for computing the loss.

    Returns:
        epoch_time: The total time to compute the loss and accuracy on the
            entire validation set.
        epoch_loss: The loss computed on the entire validation set.
        epoch_accuracy: The accuracy computed on the entire validation set.
        auc: The ROC AUC of the class-1 probabilities on the validation set.
    """
    # Switch to evaluate mode.
    model.eval()
    device = model.device
    epoch_start = time.time()
    running_loss = 0.0
    running_accuracy = 0.0
    all_prob = []
    all_labels = []
    # Deactivate autograd for evaluation.
    with torch.no_grad():
        for (q, q_len, h, h_len, label) in dataloader:
            # Move input and output data to the GPU if one is used.
            q1 = q.to(device)
            q1_lengths = q_len.to(device)
            q2 = h.to(device)
            q2_lengths = h_len.to(device)
            labels = label.to(device)
            logits, probs = model(q1, q1_lengths, q2, q2_lengths)
            loss = criterion(logits, labels)
            running_loss += loss.item()
            running_accuracy += correct_predictions(probs, labels)
            all_prob.extend(probs[:, 1].cpu().numpy())
            all_labels.extend(label.tolist())
    epoch_time = time.time() - epoch_start
    epoch_loss = running_loss / len(dataloader)
    epoch_accuracy = running_accuracy / (len(dataloader.dataset))
    return epoch_time, epoch_loss, epoch_accuracy, roc_auc_score(all_labels, all_prob)


def test(model, dataloader):
    """
    Test the accuracy of a model on some labelled test dataset.

    Args:
        model: The torch module on which testing must be performed.
        dataloader: A DataLoader object to iterate over some dataset.

    Returns:
        batch_time: The average time to predict the classes of a batch.
        total_time: The total time to process the whole dataset.
        accuracy: The accuracy of the model on the input data.
        auc: The ROC AUC of the class-1 probabilities on the input data.
    """
    # Switch the model to eval mode.
    model.eval()
    device = model.device
    time_start = time.time()
    batch_time = 0.0
    accuracy = 0.0
    all_prob = []
    all_labels = []
    # Deactivate autograd for evaluation.
    with torch.no_grad():
        for (q, q_len, h, h_len, label) in dataloader:
            batch_start = time.time()
            # Move input and output data to the GPU if one is used.
            q1 = q.to(device)
            q1_lengths = q_len.to(device)
            q2 = h.to(device)
            q2_lengths = h_len.to(device)
            labels = label.to(device)
            _, probs = model(q1, q1_lengths, q2, q2_lengths)
            accuracy += correct_predictions(probs, labels)
            batch_time += time.time() - batch_start
            all_prob.extend(probs[:, 1].cpu().numpy())
            all_labels.extend(label.tolist())
    batch_time /= len(dataloader)
    total_time = time.time() - time_start
    accuracy /= (len(dataloader.dataset))
    return batch_time, total_time, accuracy, roc_auc_score(all_labels, all_prob)


def train(model, dataloader, optimizer, criterion, epoch_number, max_gradient_norm):
    """
    Train a model for one epoch on some input data with a given optimizer and
    criterion.

    Args:
        model: A torch module that must be trained on some input data.
        dataloader: A DataLoader object to iterate over the training data.
        optimizer: A torch optimizer to use for training on the input model.
        criterion: A loss criterion to use for training.
        epoch_number: The number of the epoch for which training is performed.
        max_gradient_norm: Max. norm for gradient norm clipping.

    Returns:
        epoch_time: The total time necessary to train the epoch.
        epoch_loss: The training loss computed for the epoch.
        epoch_accuracy: The accuracy computed for the epoch.
    """
    # Switch the model to train mode.
    model.train()
    device = model.device
    epoch_start = time.time()
    batch_time_avg = 0.0
    running_loss = 0.0
    correct_preds = 0
    tqdm_batch_iterator = tqdm(dataloader)
    for batch_index, (q, q_len, h, h_len, label) in enumerate(tqdm_batch_iterator):
        batch_start = time.time()
        # Move input and output data to the GPU if it is used.
        q1 = q.to(device)
        q1_lengths = q_len.to(device)
        q2 = h.to(device)
        q2_lengths = h_len.to(device)
        labels = label.to(device)
        optimizer.zero_grad()
        logits, probs = model(q1, q1_lengths, q2, q2_lengths)
        loss = criterion(logits, labels)
        loss.backward()
        # Rescale all gradients so that their combined norm is at most max_gradient_norm
        nn.utils.clip_grad_norm_(model.parameters(), max_gradient_norm)
        optimizer.step()
        batch_time_avg += time.time() - batch_start
        running_loss += loss.item()
        correct_preds += correct_predictions(probs, labels)
        description = "Avg. batch proc. time: {:.4f}s, loss: {:.4f}" \
            .format(batch_time_avg / (batch_index + 1), running_loss / (batch_index + 1))
        tqdm_batch_iterator.set_description(description)
    epoch_time = time.time() - epoch_start
    epoch_loss = running_loss / len(dataloader)
    epoch_accuracy = correct_preds / len(dataloader.dataset)
    return epoch_time, epoch_loss, epoch_accuracy
```
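`masked_softmax` (kept in utils.py but not called by the ESIM code in this post) multiplies the scores by the mask, applies a plain softmax, zeroes the masked positions and renormalizes. The masked scores become 0 and their probability mass is then removed, so the result equals a softmax computed over the unmasked positions only. The NumPy check below uses hypothetical re-implementations of the same steps (`masked_softmax_np`, and an argmax version of `correct_predictions`) to illustrate this.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over the last axis."""
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def masked_softmax_np(scores, mask):
    """Same steps as utils.masked_softmax, on the last axis."""
    result = softmax(scores * mask) * mask
    return result / (result.sum(axis=-1, keepdims=True) + 1e-13)


def correct_predictions_np(probs, targets):
    """Number of rows whose highest-probability class equals the target."""
    return int((probs.argmax(axis=1) == targets).sum())


scores = np.array([[2.0, 1.0, 5.0, -3.0]])
mask = np.array([[1.0, 1.0, 0.0, 1.0]])
masked = masked_softmax_np(scores, mask)
reference = softmax(scores[:, mask[0] == 1])  # softmax over the unmasked entries only
print(np.round(masked, 4))
print(np.round(reference, 4))
```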

The training script, train.py:

```python
import os

import torch
import torch.nn as nn
from torch.utils.data import DataLoader

from data import LCQMC_Dataset, load_vocab
from model import ESIM
from utils import train, validate


def main(train_file, dev_file, vocab_file, target_dir,
         max_length=50,
         hidden_size=300,
         dropout=0.2,
         num_classes=2,
         epochs=50,
         batch_size=256,
         lr=0.0005,
         patience=5,
         max_grad_norm=10.0,
         gpu_index=0,
         checkpoint=None):
    device = torch.device("cuda:{}".format(gpu_index) if torch.cuda.is_available() else "cpu")
    print(20 * "=", " Preparing for training ", 20 * "=")
    # Directory where the models are saved
    if not os.path.exists(target_dir):
        os.makedirs(target_dir)

    # -------------------- Data loading ------------------- #
    print("\t* Loading training data...")
    train_data = LCQMC_Dataset(train_file, vocab_file, max_length)
    train_loader = DataLoader(train_data, shuffle=True, batch_size=batch_size)
    print("\t* Loading validation data...")
    dev_data = LCQMC_Dataset(dev_file, vocab_file, max_length)
    dev_loader = DataLoader(dev_data, shuffle=True, batch_size=batch_size)

    # -------------------- Model definition ------------------- #
    print("\t* Building model...")
    # Size the embedding table from vocab.txt so that every character index is valid
    word2idx, _, _ = load_vocab(vocab_file)
    model = ESIM(hidden_size, vocab_size=len(word2idx), dropout=dropout,
                 num_labels=num_classes, device=device).to(device)

    # -------------------- Preparation for training ------------------- #
    criterion = nn.CrossEntropyLoss()
    # Keep only the parameters that require gradient updates
    parameters = filter(lambda p: p.requires_grad, model.parameters())
    optimizer = torch.optim.Adam(parameters, lr=lr)
    scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode="max",
                                                           factor=0.85, patience=0)
    best_score = 0.0
    start_epoch = 1
    # Data for loss curves plot
    epochs_count = []
    train_losses = []
    valid_losses = []
    # Continue training from a checkpoint if one was given as argument
    if checkpoint:
        checkpoint = torch.load(checkpoint, map_location=device)
        start_epoch = checkpoint["epoch"] + 1
        best_score = checkpoint["best_score"]
        print("\t* Training will continue on existing model from epoch {}...".format(start_epoch))
        model.load_state_dict(checkpoint["model"])
        optimizer.load_state_dict(checkpoint["optimizer"])
        epochs_count = checkpoint["epochs_count"]
        train_losses = checkpoint["train_losses"]
        valid_losses = checkpoint["valid_losses"]
    # Compute loss and accuracy before starting (or resuming) training.
    _, valid_loss, valid_accuracy, auc = validate(model, dev_loader, criterion)
    print("\t* Validation loss before training: {:.4f}, accuracy: {:.4f}%, auc: {:.4f}"
          .format(valid_loss, (valid_accuracy * 100), auc))

    # -------------------- Training epochs ------------------- #
    print("\n", 20 * "=", "Training ESIM model on device: {}".format(device), 20 * "=")
    patience_counter = 0
    for epoch in range(start_epoch, epochs + 1):
        epochs_count.append(epoch)
        print("* Training epoch {}:".format(epoch))
        epoch_time, epoch_loss, epoch_accuracy = train(model, train_loader, optimizer,
                                                       criterion, epoch, max_grad_norm)
        train_losses.append(epoch_loss)
        print("-> Training time: {:.4f}s, loss = {:.4f}, accuracy: {:.4f}%"
              .format(epoch_time, epoch_loss, (epoch_accuracy * 100)))
        print("* Validation for epoch {}:".format(epoch))
        epoch_time, epoch_loss, epoch_accuracy, epoch_auc = validate(model, dev_loader, criterion)
        valid_losses.append(epoch_loss)
        print("-> Valid. time: {:.4f}s, loss: {:.4f}, accuracy: {:.4f}%, auc: {:.4f}\n"
              .format(epoch_time, epoch_loss, (epoch_accuracy * 100), epoch_auc))
        # Update the optimizer's learning rate with the scheduler.
        scheduler.step(epoch_accuracy)
        # Early stopping on validation accuracy.
        if epoch_accuracy < best_score:
            patience_counter += 1
        else:
            best_score = epoch_accuracy
            patience_counter = 0
            torch.save({"epoch": epoch,
                        "model": model.state_dict(),
                        "best_score": best_score,
                        "epochs_count": epochs_count,
                        "train_losses": train_losses,
                        "valid_losses": valid_losses},
                       os.path.join(target_dir, "best.pth.tar"))
        # Save the model at each epoch.
        torch.save({"epoch": epoch,
                    "model": model.state_dict(),
                    "best_score": best_score,
                    "optimizer": optimizer.state_dict(),
                    "epochs_count": epochs_count,
                    "train_losses": train_losses,
                    "valid_losses": valid_losses},
                   os.path.join(target_dir, "esim_{}.pth.tar".format(epoch)))
        if patience_counter >= patience:
            print("-> Early stopping: patience limit reached, stopping...")
            break


if __name__ == "__main__":
    main("atec_nlp_sim_train_all.csv", "dev.csv", "vocab.txt", "models")
```
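The early-stopping rule in `main` compares each epoch's validation accuracy with the best score so far: a strictly lower accuracy increments `patience_counter`, while an equal or higher one resets it and overwrites best.pth.tar. The pure-Python trace below replays that rule on hypothetical accuracies to show when training would stop; `trace_early_stopping` is an illustrative helper, not part of the project. It does not model the `ReduceLROnPlateau` scheduler, which with `patience=0` lowers the learning rate by a factor of 0.85 after each epoch in which accuracy does not improve on its best value.

```python
def trace_early_stopping(accuracies, patience):
    """Replay the loop in main(): return (epochs run, best accuracy, epoch of best)."""
    best_score, best_epoch, patience_counter = 0.0, 0, 0
    for epoch, acc in enumerate(accuracies, start=1):
        if acc < best_score:
            patience_counter += 1
        else:  # a tie also counts as an improvement here
            best_score, best_epoch, patience_counter = acc, epoch, 0
        if patience_counter >= patience:
            return epoch, best_score, best_epoch
    return len(accuracies), best_score, best_epoch


# Hypothetical validation accuracies, one per epoch
accs = [0.70, 0.74, 0.73, 0.74, 0.72, 0.71, 0.73, 0.70, 0.69, 0.75]
print(trace_early_stopping(accs, patience=5))  # (9, 0.74, 4)
```

Training stops after epoch 9, so the better score that would have come at epoch 10 is never seen; the tie at epoch 4 reset the counter and moved the best checkpoint.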

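The trained models are saved in `target_dir` (`models` in the call above): best.pth.tar is overwritten whenever the validation accuracy matches or beats the best score so far, and esim_{epoch}.pth.tar is written after every epoch. `main` creates the directory at startup if it does not exist yet.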
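To resume training, `main` accepts a `checkpoint` path and continues from the epoch stored in it. Because best.pth.tar does not store the optimizer state while `main` calls `optimizer.load_state_dict(checkpoint["optimizer"])` when resuming, resume from one of the esim_{epoch}.pth.tar files. A small helper for picking the newest one might look like this; `latest_checkpoint` is hypothetical and relies only on the esim_{epoch}.pth.tar naming used above.

```python
import os
import re

CHECKPOINT_NAME = re.compile(r'^esim_(\d+)\.pth\.tar$')


def latest_checkpoint(target_dir):
    """Return the path of the esim_{epoch}.pth.tar file with the highest epoch, or None."""
    best_epoch, best_path = -1, None
    for name in os.listdir(target_dir):
        match = CHECKPOINT_NAME.match(name)
        # Compare epochs numerically so that esim_10 ranks above esim_9
        if match and int(match.group(1)) > best_epoch:
            best_epoch, best_path = int(match.group(1)), os.path.join(target_dir, name)
    return best_path


# Usage, assuming the directory layout produced by main():
# main("atec_nlp_sim_train_all.csv", "dev.csv", "vocab.txt", "models",
#      checkpoint=latest_checkpoint("models"))
```

If no per-epoch file exists the helper returns `None`, and `main` then trains from scratch because `if checkpoint:` is false.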

References

https://github.com/Alic-yuan/nlp-beginner-finish/tree/master/task3