Implementing Zebra with Hive

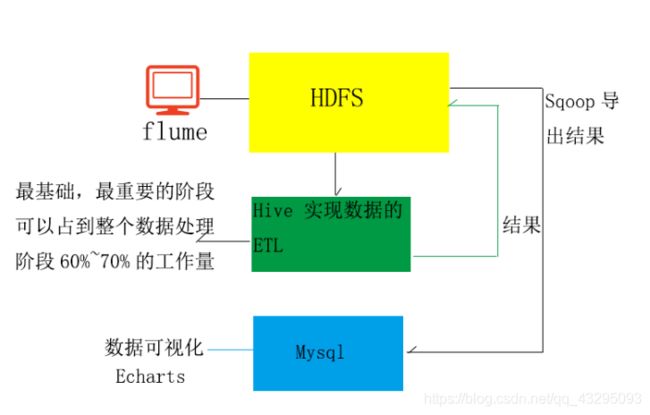

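Implementation workflow:

1. Collect the data with Flume.
2. Store it in HDFS.
3. Create a Hive external table that manages the log data collected into HDFS.
4. Implement Zebra's business logic in HQL.
5. Use Sqoop to export the processed data from HDFS into MySQL.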

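How the Flume component works:

Flume collects the logs on a per-day basis. Hive then uses the day as the partition key, so each day's logs can be analyzed on their own. Finally, the results of Hive's analysis are exported with Sqoop into a relational database, where visualizations over time can be built. There are two ways to record the date of each log:

- Extract it from the log file name.
- Have Flume record the current date when it collects the log.

We use the second approach.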

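Flume configuration:

```properties
a1.sources=r1
a1.channels=c1
a1.sinks=s1

# Spooling Directory source. Flume keeps watching the spooling directory and
# treats every file placed in it as source input. Note: once a file is in this
# directory it must not be modified, or Flume reports an error. File names must
# also be unique; a duplicate name causes an error as well.
a1.sources.r1.type=spooldir
a1.sources.r1.spoolDir=/home/data
# The timestamp interceptor adds a "timestamp" header (epoch milliseconds) to
# each event; the HDFS sink uses it to resolve %Y-%m-%d in hdfs.path.
a1.sources.r1.interceptors=i1
a1.sources.r1.interceptors.i1.type=timestamp

# Memory channel: holds at most 1000 events, at most 200 per transaction.
a1.channels.c1.type=memory
a1.channels.c1.capacity=1000
a1.channels.c1.transactionCapacity=200

# HDFS sink: writes the events to the Hadoop Distributed File System (HDFS).
a1.sinks.s1.type=hdfs
# Target HDFS directory (one directory per day)
a1.sinks.s1.hdfs.path=hdfs://lhj01:9000/zebra/reportTime=%Y-%m-%d
# Output file type; DataStream writes plain text
a1.sinks.s1.hdfs.fileType=DataStream
# Roll over to a new file every 30 seconds
a1.sinks.s1.hdfs.rollInterval=30
# Roll the temporary file into the target file once it reaches this size (bytes);
# 0 disables size-based rolling
a1.sinks.s1.hdfs.rollSize=0
# Roll the temporary file into the target file after this many events;
# 0 disables count-based rolling
a1.sinks.s1.hdfs.rollCount=0

# Bind the source and the sink to the channel
a1.sources.r1.channels=c1
a1.sinks.s1.channel=c1
```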
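The following sketch shows how the HDFS sink fills in the `%Y-%m-%d` escapes of `hdfs.path`. It uses the `timestamp` header (epoch milliseconds) that the timestamp interceptor adds. This is a simplified Python model, not Flume code, and the helper name `resolve_hdfs_path` is hypothetical. The time zone is passed explicitly because the real sink uses the agent's local time zone unless `hdfs.timeZone` is set.

```python
from datetime import datetime, timezone


def resolve_hdfs_path(path_template, timestamp_ms, tz):
    """Hypothetical model of %Y/%m/%d substitution from a 'timestamp' header."""
    event_time = datetime.fromtimestamp(timestamp_ms / 1000, tz=tz)
    return (path_template
            .replace("%Y", event_time.strftime("%Y"))
            .replace("%m", event_time.strftime("%m"))
            .replace("%d", event_time.strftime("%d")))


if __name__ == "__main__":
    template = "hdfs://lhj01:9000/zebra/reportTime=%Y-%m-%d"
    # 2018-09-06 10:00 UTC, expressed in epoch milliseconds like the header
    ts = int(datetime(2018, 9, 6, 10, 0, tzinfo=timezone.utc).timestamp() * 1000)
    print(resolve_hdfs_path(template, ts, timezone.utc))
    # prints hdfs://lhj01:9000/zebra/reportTime=2018-09-06
```

Because the header carries the collection time, a log file's partition is the day Flume picked it up. This matches the second approach described above.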

Upload the log files to be processed to /home/data. Flume picks them up from there and writes them to HDFS.

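Note: when uploading log files, do not use rz to upload directly into /root/work/data/flumedata. rz writes the file incrementally, so Flume would be processing a file whose size is still changing, and it reports an error. It is better to upload the log to another directory on the Linux machine first. Then move it into /root/work/data/flumedata with mv.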
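The upload-then-move advice can be scripted. The sketch below is one possible helper; the function `deliver_to_spool` and the staging-directory layout are hypothetical. It copies the file into a staging directory first, then renames it into the spooling directory in a single step. That rename is atomic only when both directories are on the same filesystem, and `os.replace` raises `OSError` otherwise. The helper also refuses names that already exist in the spooling directory. This includes the `.COMPLETED` name Flume gives finished files by default, because reused file names make the spooling directory source fail.

```python
import os
import shutil


def deliver_to_spool(src_file, staging_dir, spool_dir, done_suffix=".COMPLETED"):
    """Copy src_file to staging_dir, then rename it into spool_dir in one step."""
    name = os.path.basename(src_file)
    target = os.path.join(spool_dir, name)
    # The spooling directory source fails on reused names, including names it
    # has already renamed with the completion suffix (".COMPLETED" by default).
    if os.path.exists(target) or os.path.exists(target + done_suffix):
        raise FileExistsError(target)
    staged = os.path.join(staging_dir, name)
    shutil.copyfile(src_file, staged)  # the slow copy happens outside spool_dir
    # os.replace is a single rename; it raises OSError if staging_dir and
    # spool_dir are on different filesystems.
    os.replace(staged, target)
    return target
```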

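Hive workflow. You do not need to memorize the CREATE TABLE statements. The focus is on understanding the whole ETL process, which is the important part.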

In Hive, create the zebra database:

Run: `create database zebra;`

Run: `use zebra;`

Then create the table, and after that add the partition.

Full CREATE TABLE statement:

```sql
create EXTERNAL table zebra (
  a1 string, a2 string, a3 string, a4 string, a5 string, a6 string, a7 string, a8 string,
  a9 string, a10 string, a11 string, a12 string, a13 string, a14 string, a15 string, a16 string,
  a17 string, a18 string, a19 string, a20 string, a21 string, a22 string, a23 string, a24 string,
  a25 string, a26 string, a27 string, a28 string, a29 string, a30 string, a31 string, a32 string,
  a33 string, a34 string, a35 string, a36 string, a37 string, a38 string, a39 string, a40 string,
  a41 string, a42 string, a43 string, a44 string, a45 string, a46 string, a47 string, a48 string,
  a49 string, a50 string, a51 string, a52 string, a53 string, a54 string, a55 string, a56 string,
  a57 string, a58 string, a59 string, a60 string, a61 string, a62 string, a63 string, a64 string,
  a65 string, a66 string, a67 string, a68 string, a69 string, a70 string, a71 string, a72 string,
  a73 string, a74 string, a75 string, a76 string, a77 string
)
partitioned by (reporttime string)
row format delimited fields terminated by '|'
stored as textfile
location '/zebra';
```

To add a partition, run:

```sql
ALTER TABLE zebra add PARTITION (reportTime='2018-09-06') location '/zebra/reportTime=2018-09-06';
```
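Flume creates a new `reportTime=YYYY-MM-DD` directory every day, so a partition has to be added for each day. The sketch below generates the corresponding statements for a date range. The function name and its defaults are assumptions. It uses Hive's `ADD IF NOT EXISTS PARTITION` form so that rerunning it is harmless.

```python
from datetime import date, timedelta
from typing import List


def partition_statements(start: date, end: date, table: str = "zebra",
                         base_dir: str = "/zebra") -> List[str]:
    """Hypothetical helper: one ADD PARTITION statement per day, start..end inclusive."""
    statements = []
    day = start
    while day <= end:
        d = day.isoformat()  # YYYY-MM-DD, the same layout as Flume's %Y-%m-%d
        statements.append(
            f"ALTER TABLE {table} ADD IF NOT EXISTS PARTITION (reportTime='{d}') "
            f"LOCATION '{base_dir}/reportTime={d}';"
        )
        day += timedelta(days=1)
    return statements
```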

**You can check the result with the sampling syntax:** `select * from zebra TABLESAMPLE (1 ROWS);`

**Clean the data**, reducing the original 77 fields to 18. CREATE TABLE statement:

```sql
create table dataclear (
  reporttime string, appType bigint, appSubtype bigint, userIp string, userPort bigint,
  appServerIP string, appServerPort bigint, host string, cellid string, appTypeCode bigint,
  interruptType string, transStatus bigint, trafficUL bigint, trafficDL bigint, retranUL bigint,
  retranDL bigint, procdureStartTime bigint, procdureEndTime bigint
) row format delimited fields terminated by '|';
```
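The INSERT statement that loads dataclear keeps a fixed list of zebra columns. The sketch below models that projection on one raw log line in Python, assuming the line holds exactly 77 `|`-separated fields. `clean_line` and `KEPT_COLUMNS` are illustrative names. The date is passed in separately because `reporttime` is a partition column taken from the directory name, not a field in the file.

```python
# 1-based positions (a1..a77) kept for dataclear, in output order.
KEPT_COLUMNS = [23, 24, 27, 29, 31, 33, 59, 17, 19, 68, 55, 34, 35, 40, 41, 20, 21]


def clean_line(line, report_date):
    """Project one raw zebra line onto the dataclear columns."""
    fields = line.rstrip("\n").split("|")
    # This sketch rejects malformed lines; Hive itself would instead fill
    # missing trailing columns with NULL.
    if len(fields) != 77:
        raise ValueError(f"expected 77 fields, got {len(fields)}")
    # reporttime: partition date plus a fixed time, like concat(reporttime, ' ', '00:00:00')
    return [report_date + " 00:00:00"] + [fields[n - 1] for n in KEPT_COLUMNS]
```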

Load the 18 field values from the zebra table into dataclear:

```sql
insert overwrite table dataclear
select concat(reporttime, ' ', '00:00:00'), a23, a24, a27, a29, a31, a33, a59, a17, a19,
       a68, a55, a34, a35, a40, a41, a20, a21
from zebra;
```

Apply the business logic to produce the dataproc table:

CREATE TABLE statement:

```sql
create table dataproc (
  reporttime string, appType bigint, appSubtype bigint, userIp string, userPort bigint,
  appServerIP string, appServerPort bigint, host string, cellid string, attempts bigint,
  accepts bigint, trafficUL bigint, trafficDL bigint, retranUL bigint, retranDL bigint,
  failCount bigint, transDelay bigint
) row format delimited fields terminated by '|';
```

Process the fields according to the business rules:

```sql
insert overwrite table dataproc
select
  reporttime, appType, appSubtype, userIp, userPort, appServerIP, appServerPort, host,
  -- cellid: replace an empty value with a default
  if(cellid == '', "000000000", cellid),
  -- attempts
  if(appTypeCode == 103, 1, 0),
  -- accepts
  if(appTypeCode == 103
     and find_in_set(transStatus, "10,11,12,13,14,15,32,33,34,35,36,37,38,48,49,50,51,52,53,54,55,199,200,201,202,203,204,205,206,302,304,306") != 0
     and interruptType == 0, 1, 0),
  -- trafficUL, trafficDL, retranUL, retranDL
  if(appTypeCode == 103, trafficUL, 0),
  if(appTypeCode == 103, trafficDL, 0),
  if(appTypeCode == 103, retranUL, 0),
  if(appTypeCode == 103, retranDL, 0),
  -- failCount
  if(appTypeCode == 103 and transStatus == 1 and interruptType == 0, 1, 0),
  -- transDelay
  if(appTypeCode == 103, procdureEndTime - procdureStartTime, 0)
from dataclear;
```
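The business rules above can be restated row by row. This Python sketch mirrors the `if(...)` expressions for one dataclear row represented as a dict. It is a model for checking the logic, not part of the pipeline, and all names in it are illustrative. `interruptType` is treated as an integer here. In dataclear it is a string column, which Hive converts when comparing it with 0.

```python
# Status codes listed in the find_in_set() call of the dataproc INSERT.
ACCEPT_STATUSES = {10, 11, 12, 13, 14, 15, 32, 33, 34, 35, 36, 37, 38,
                   48, 49, 50, 51, 52, 53, 54, 55, 199, 200, 201, 202,
                   203, 204, 205, 206, 302, 304, 306}
TARGET_APP_TYPE_CODE = 103  # the appTypeCode every rule checks for

# Columns copied unchanged from dataclear to dataproc.
PASSTHROUGH = ["reporttime", "appType", "appSubtype", "userIp", "userPort",
               "appServerIP", "appServerPort", "host"]


def process_row(r):
    """Apply the dataproc rules to one dataclear row; keys follow dataproc column order."""
    hit = r["appTypeCode"] == TARGET_APP_TYPE_CODE
    clean_interrupt = r["interruptType"] == 0
    out = {col: r[col] for col in PASSTHROUGH}
    out["cellid"] = r["cellid"] if r["cellid"] != "" else "000000000"
    out["attempts"] = 1 if hit else 0
    out["accepts"] = 1 if hit and r["transStatus"] in ACCEPT_STATUSES and clean_interrupt else 0
    for col in ("trafficUL", "trafficDL", "retranUL", "retranDL"):
        out[col] = r[col] if hit else 0
    out["failCount"] = 1 if hit and r["transStatus"] == 1 and clean_interrupt else 0
    out["transDelay"] = (r["procdureEndTime"] - r["procdureStartTime"]) if hit else 0
    return out
```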

Query the information of interest, taking the app popularity table as an example:

```sql
create table D_H_HTTP_APPTYPE (
  hourid string, appType int, appSubtype int, attempts bigint, accepts bigint,
  succRatio double, trafficUL bigint, trafficDL bigint, totalTraffic bigint, retranUL bigint,
  retranDL bigint, retranTraffic bigint, failCount bigint, transDelay bigint
) row format delimited fields terminated by '|';
```

Aggregate the master table dataproc by the grouping columns and compute the derived fields:

```sql
insert overwrite table D_H_HTTP_APPTYPE
select reporttime, apptype, appsubtype,
       sum(attempts), sum(accepts),
       round(sum(accepts) / sum(attempts), 2),
       sum(trafficUL), sum(trafficDL), sum(trafficUL) + sum(trafficDL),
       sum(retranUL), sum(retranDL), sum(retranUL) + sum(retranDL),
       sum(failCount), sum(transDelay)
from dataproc
group by reporttime, apptype, appsubtype;
```
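To cross-check the aggregation, the sketch below groups dataproc-style rows by (reporttime, appType, appSubtype) and derives the same columns as the INSERT above. It uses `Decimal` with `ROUND_HALF_UP` to match the half-up rounding of Hive's `round()`. When there are no attempts, it returns `None` for `succRatio`, which is where Hive's division would yield NULL. The function name `aggregate` is hypothetical.

```python
from collections import defaultdict
from decimal import Decimal, ROUND_HALF_UP

# dataproc columns that are summed per group
SUMMED = ["attempts", "accepts", "trafficUL", "trafficDL",
          "retranUL", "retranDL", "failCount", "transDelay"]


def aggregate(rows):
    """Group dataproc-like dicts and build D_H_HTTP_APPTYPE-like dicts."""
    groups = defaultdict(lambda: dict.fromkeys(SUMMED, 0))
    for r in rows:
        acc = groups[(r["reporttime"], r["appType"], r["appSubtype"])]
        for col in SUMMED:
            acc[col] += r[col]

    result = []
    for (hourid, app_type, app_subtype), s in groups.items():
        ratio = None  # Hive yields NULL when dividing by zero
        if s["attempts"] != 0:
            exact = Decimal(s["accepts"]) / Decimal(s["attempts"])
            ratio = float(exact.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP))
        result.append({
            "hourid": hourid, "appType": app_type, "appSubtype": app_subtype,
            "attempts": s["attempts"], "accepts": s["accepts"], "succRatio": ratio,
            "trafficUL": s["trafficUL"], "trafficDL": s["trafficDL"],
            "totalTraffic": s["trafficUL"] + s["trafficDL"],
            "retranUL": s["retranUL"], "retranDL": s["retranDL"],
            "retranTraffic": s["retranUL"] + s["retranDL"],
            "failCount": s["failCount"], "transDelay": s["transDelay"],
        })
    return result
```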

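Query the 5 most popular apps:

```sql
select hourid, apptype, sum(totalTraffic) as tt
from D_H_HTTP_APPTYPE
group by hourid, apptype
order by tt desc
limit 5;
```

`order by` produces a total ordering across all reducers, which a top-5 query needs. `sort by` only sorts the rows within each reducer.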
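The top-5 query amounts to summing `totalTraffic` per (hourid, appType) and keeping the five largest totals. The small Python equivalent below is for illustration only, and the name `top_apps` is hypothetical.

```python
import heapq
from collections import defaultdict


def top_apps(rows, n=5):
    """Return the n (hourid, appType, total) tuples with the largest traffic."""
    totals = defaultdict(int)
    for r in rows:
        totals[(r["hourid"], r["appType"])] += r["totalTraffic"]
    # nlargest returns its results already sorted from largest to smallest
    return heapq.nlargest(n, ((h, a, tt) for (h, a), tt in totals.items()),
                          key=lambda item: item[2])
```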

Finally, export the Hive table from HDFS to MySQL:

1. Create the corresponding table in MySQL.
2. Export the d_h_http_apptype table with Sqoop.
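Step 1 needs a MySQL table whose columns line up, in order, with the fields of the Hive table. Sqoop export maps the delimited fields onto the table's columns in column order by default. The sketch below derives a `CREATE TABLE` statement from the D_H_HTTP_APPTYPE column list. The type mapping is an assumption to adjust to the real data, in particular `VARCHAR(255)` for Hive `string`. On Linux, MySQL table names are case-sensitive by default, so the name should match the `--table` argument.

```python
# Assumed Hive-to-MySQL type mapping (VARCHAR length is a guess).
TYPE_MAP = {"string": "VARCHAR(255)", "int": "INT", "bigint": "BIGINT", "double": "DOUBLE"}

# Column list copied from the D_H_HTTP_APPTYPE definition, in order.
HIVE_COLUMNS = [("hourid", "string"), ("appType", "int"), ("appSubtype", "int"),
                ("attempts", "bigint"), ("accepts", "bigint"), ("succRatio", "double"),
                ("trafficUL", "bigint"), ("trafficDL", "bigint"), ("totalTraffic", "bigint"),
                ("retranUL", "bigint"), ("retranDL", "bigint"), ("retranTraffic", "bigint"),
                ("failCount", "bigint"), ("transDelay", "bigint")]


def mysql_ddl(table, columns):
    """Build a MySQL CREATE TABLE statement from (name, hive_type) pairs."""
    cols = ",\n".join(f"  {name} {TYPE_MAP[hive_type.lower()]}" for name, hive_type in columns)
    return f"CREATE TABLE {table} (\n{cols}\n);"


if __name__ == "__main__":
    print(mysql_ddl("D_H_HTTP_APPTYPE", HIVE_COLUMNS))
```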

Export command:

```shell
sh sqoop export --connect jdbc:mysql://hadoop01:3306/zebra --username root --password root \
  --export-dir '/user/hive/warehouse/zebra.db/d_h_http_apptype/000000_0' \
  --table D_H_HTTP_APPTYPE -m 1 --fields-terminated-by '|'
```