Scraping Car Models from Autohome (汽车之家)

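This write-up is incomplete: the later steps could not scrape all of the parameters I need, so I still have to study how more experienced people approach the problem.

All of the code below is my own, so I want to record it here for later review and reflection.

First, open Autohome's car model library: https://car.autohome.com.cn/

Then press F12 to open the developer tools and look for the `.ashx` requests. Being a beginner, I clicked through every request one by one until I found the one that returns the car brands.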

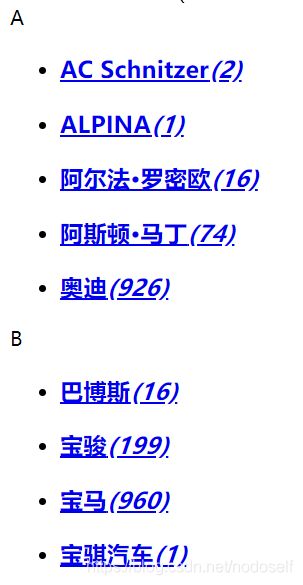

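Now the scraping can start. The first step fetches the car brands and their links. When I first ran this, I did not notice that one or more brands were missing from the result.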

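import requests
import re
import random
import time

# Fetch the car brands and their ids
url = "https://car.autohome.com.cn/AsLeftMenu/As_LeftListNew.ashx?typeId=1%20&brandId=94%20&fctId=0%20&seriesId=0"
Html = requests.get(url, timeout=2).text
# The HTML tags around the two capture groups (brand href, brand name) are
# missing from this pattern as published; restore them from the response markup.
pattern = "(.*?)(.*?)"
uids = re.findall(pattern, Html)
# Write one tuple per line; the with-block closes the file on exit
with open('cars_qiche.txt', 'w', encoding='utf-8') as f:
    for i in uids:
        f.write(str(i) + '\n')

The result looks like this: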

Next, turn each brand id into a full link. As a beginner, I can only take this one step at a time.
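The link-building step can be sketched as a small pure function. This is only an illustration: `brand_menu_urls`, `MENU_URL` and `BRAND_HREF` are hypothetical names, the sample lines are made up, and the sketch assumes brand ids are purely numeric. The endpoint itself is the one used in the script.

```python
import re

# Endpoint copied from the script; only brandId varies.
MENU_URL = ("https://car.autohome.com.cn/AsLeftMenu/As_LeftListNew.ashx"
            "?typeId=1%20&brandId={brand_id}%20&fctId=0%20&seriesId=0")

# Assumption: brand ids are digits. The dot is escaped so it only matches ".html".
BRAND_HREF = re.compile(r"/price/brand-(\d+)\.html")


def brand_menu_urls(lines):
    """Return one menu URL for every line that contains a brand href."""
    urls = []
    for line in lines:
        match = BRAND_HREF.search(line)
        if match is None:
            continue  # skip lines without a brand link instead of raising IndexError
        urls.append(MENU_URL.format(brand_id=match.group(1)))
    return urls


# Made-up sample lines in the shape that str(tuple) writes to cars_qiche.txt.
sample = ["('/price/brand-33.html', 'brand name')", "a line without a link"]
for url in brand_menu_urls(sample):
    print(url)
```

Keeping the function free of file and network access makes it easy to check against a few saved lines before running it over the whole file.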

# Insert each brand id into the link
with open('cars_qiche.txt', 'r', encoding='utf-8') as f:
    # Escape the dot so it only matches a literal ".html"
    pattern = r'/price/brand-(.*?)\.html'
    with open('brandid_qiche.txt', 'w') as b:
        for i in f:
            sid = re.findall(pattern, i)
            if not sid:
                continue  # skip lines that contain no brand link
            urls = 'https://car.autohome.com.cn/AsLeftMenu/As_LeftListNew.ashx?typeId=1%20&brandId=' + sid[0] + '%20&fctId=0%20&seriesId=0'
            b.write(urls + '\n')

Next, loop over the brand links to find the id of each series.

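# Fetch the links of each brand's series
with open('brandid_qiche.txt', 'r') as f:
    with open('Series_qiche.txt', 'a+', encoding='utf-8') as b:
        for i in f:
            url = i.strip()  # drop the trailing newline before requesting
            Html2 = requests.get(url, timeout=3).text
            # The HTML tags around the two capture groups are missing from this
            # pattern as published; restore them from the response markup.
            pattern = '(.*?)(.*?)'
            Series = re.findall(pattern, Html2)
            for j in Series:
                b.write(str(j) + '\n')

I am pasting the rest of the code in one go. It is half-finished (orz): because I worked step by step, after finishing each step I had to comment out the previous ones before running the next.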
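One way to avoid commenting out finished steps is to skip a step whenever its output file already exists. The following is a minimal sketch of that idea, not part of the original script: `run_step`, `write_brands` and the `.part` suffix are hypothetical, and the demo writes to a temporary directory rather than the real output files.

```python
import os
import tempfile


def run_step(output_path, step):
    """Run step(path) unless output_path already exists.

    The step writes to a temporary ".part" file that is renamed on success,
    so a crashed run does not leave a half-written output behind.
    Returns True if the step ran and False if it was skipped.
    """
    if os.path.exists(output_path):
        return False
    partial_path = output_path + ".part"
    step(partial_path)
    os.replace(partial_path, output_path)
    return True


def write_brands(path):
    # Stand-in for a real scraping step that writes its results to `path`.
    with open(path, "w", encoding="utf-8") as f:
        f.write("placeholder line\n")


workdir = tempfile.mkdtemp()
target = os.path.join(workdir, "cars_qiche.txt")
print(run_step(target, write_brands))  # True: the file did not exist yet
print(run_step(target, write_brands))  # False: the step is skipped
```

With each stage wrapped this way, the whole script can be rerun from the top and only the missing stages execute. The remaining original code follows.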

# Build the on-sale and discontinued links for each series of a brand
# On-sale link:      url = 'https://car.autohome.com.cn//price/series-3825.html'
# Discontinued link: url = 'https://car.autohome.com.cn//price/series-3825-0-3-1.html'
with open('Series_qiche.txt', 'r', encoding='utf-8') as f:
    with open('OnSales_qiche.txt', 'a+') as b:
        with open('OffSales_qiche.txt', 'a+') as a:
            for i in f:
                cut1 = i.split(',')[0]       # first tuple field, still with "(" and quotes
                cut2 = cut1.split('(')[1]    # quoted href, e.g. "'/price/series-3825.html'"
                href = cut2.strip("'")       # strip the quotes instead of calling eval()
                stem = href.split('.')[0]    # href without ".html"
                Onsales = 'https://car.autohome.com.cn/' + href
                Offsales = 'https://car.autohome.com.cn/' + stem + '-0-3-1.html'
                b.write(Onsales + '\n')
                a.write(Offsales + '\n')
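Because each line of `Series_qiche.txt` is the `str()` of a tuple, the string splitting above can also be replaced by parsing the line back into a tuple. A minimal sketch, assuming every line is a tuple of string literals whose first element is the series href; `series_links` and `BASE` are hypothetical names and the sample line is made up.

```python
import ast

BASE = "https://car.autohome.com.cn/"


def series_links(line):
    """Return (on_sale_url, discontinued_url) for one line of the series file."""
    # literal_eval only accepts Python literals, so it cannot run arbitrary code.
    record = ast.literal_eval(line.strip())
    href = record[0]                   # e.g. '/price/series-3825.html'
    stem = href.rsplit(".", 1)[0]      # drop the ".html" extension
    return BASE + href, BASE + stem + "-0-3-1.html"


on_sale, discontinued = series_links("('/price/series-3825.html', 'series name')\n")
print(on_sale)
print(discontinued)
```

This keeps working even if a series name contains a comma or a parenthesis, which would confuse the `split(',')` and `split('(')` calls.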

# Get the page numbers for each discontinued-series link
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'}
with open('OffSales_qiche.txt', 'r') as f:
    with open('OffSale_page.txt', 'a+') as b:
        for i in f:
            # url = 'https://car.autohome.com.cn//price/series-19-0-3.html'
            url = i.strip()
            timeSleep = random.randrange(1, 5)
            Html0 = requests.get(url, headers=headers).text
            # The HTML tags around the capture group are missing from this pattern
            # as published; restore them from the page markup.
            pattern = r'(\d)'
            page = re.findall(pattern, Html0)
            b.write(str(page) + '\n')
            # Wait 1 to 4 seconds between requests
            time.sleep(timeSleep)
            print(page)
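The loop above stops at the first failed request. A small retry wrapper with the same kind of random pause is one way to make it more tolerant. This is a sketch under assumptions: `fetch_with_retry` is a hypothetical helper, the fetch function is passed in so the example runs without network access, and the demo uses a fake fetch that fails twice.

```python
import random
import time


def fetch_with_retry(fetch, url, retries=3, delay_range=(1, 5), sleep=time.sleep):
    """Call fetch(url); after a failure, wait a random delay and try again.

    Re-raises the last error once `retries` attempts have failed.
    """
    if retries < 1:
        raise ValueError("retries must be at least 1")
    last_error = None
    for attempt in range(retries):
        try:
            return fetch(url)
        except Exception as error:  # broad on purpose: this is only a sketch
            last_error = error
            if attempt < retries - 1:
                sleep(random.uniform(*delay_range))
    raise last_error


# Fake fetch for the demo: fails twice, then returns a page body.
attempts = {"count": 0}


def flaky_fetch(url):
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary failure")
    return "<html>page for " + url + "</html>"


# sleep is replaced by a no-op so the demo finishes immediately.
print(fetch_with_retry(flaky_fetch, "https://example.invalid/page", sleep=lambda s: None))
```

In the real script the `fetch` argument could be something like `lambda u: requests.get(u, headers=headers, timeout=5).text`.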

# Build the full list of on-sale and discontinued model links
with open('OffSale_page.txt', 'r') as f:
    # The file was written as 'OffSales_qiche.txt'; the name must match exactly
    # on case-sensitive file systems.
    with open('OffSales_qiche.txt', 'r') as b:
        with open('allSales_qiche.txt', 'a+') as a:
            list1 = []  # page lists, one per link
            list2 = []  # links
            list3 = []  # resulting links
            count = 0
            for i in f:
                list1.append(i.split('\n')[0])
            for i in b:
                list2.append(i.split('\n')[0])
            for i in list1:
                if i == '[]':
                    # No pagination: keep the link as it is
                    list3.append(list2[count])
                else:
                    str1 = list2[count]
                    pattern = r'(.*?)-1\.html'
                    str2 = re.findall(pattern, str1)
                    # list1[count] is the text of a list, so take its digit characters
                    for j in list1[count]:
                        if j.isdigit():
                            bouns = str2[0] + '-0-0-0-0-' + j + '.html'
                            list3.append(bouns)
                count = count + 1
            for i in list3:
                a.write(i + '\n')
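The page expansion can be isolated in one function that parses the saved page list instead of walking it character by character, which also copes with page numbers above 9. A minimal sketch: `expand_pages` is a hypothetical name, and it assumes each line of `OffSale_page.txt` is the `str()` of a list of page-number strings and that every discontinued link ends in `-1.html`, as in the script.

```python
import ast


def expand_pages(first_page_url, page_line):
    """Return the page URLs for one discontinued-series link.

    page_line is the saved text of the page list, e.g. "['2', '3']" or "[]".
    """
    pages = ast.literal_eval(page_line.strip())
    if not pages:
        return [first_page_url]  # no pagination: keep the link unchanged
    suffix = "-1.html"
    if not first_page_url.endswith(suffix):
        raise ValueError("unexpected link format: " + first_page_url)
    prefix = first_page_url[:-len(suffix)]
    # Same URL shape as the script: <prefix>-0-0-0-0-<page>.html
    return [prefix + "-0-0-0-0-" + str(page) + ".html" for page in pages]


first = "https://car.autohome.com.cn//price/series-19-0-3-1.html"
for link in expand_pages(first, "['2', '3']"):
    print(link)
```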

# Get the ids of all discontinued models
# url = 'https://car.autohome.com.cn//price/series-692.html'
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'}
with open('allSales_qiche.txt', 'r') as a:
    with open('qichezhijiaCar.txt', 'a+', encoding='utf-8') as f:
        for i in a:
            url = i.split('\n')[0]
            timeSleep = random.randrange(1, 5)
            r = requests.get(url, headers=headers)
            Html = r.text
            time.sleep(timeSleep)
            # Five-digit model ids. The HTML tags between the capture groups are
            # missing from this pattern as published; restore them from the page markup.
            pattern = r'id="p(\d{5})".*?(.*?).*?(.*?).*?(.*?).*?'
            cut = re.findall(pattern, Html)
            for item in cut:
                f.write(str(item) + '\n')

# Get the ids of all on-sale models
# url = 'https://car.autohome.com.cn//price/series-692.html'
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'}
# Read the on-sale links written earlier (the original opened 'OffSales_qiche.txt' here)
with open('OnSales_qiche.txt', 'r') as a:
    with open('qichezhijiaCar.txt', 'a+', encoding='utf-8') as f:
        for i in a:
            url = i.split('\n')[0]
            timeSleep = random.randrange(1, 5)
            r = requests.get(url, headers=headers)
            Html = r.text
            time.sleep(timeSleep)
            # Seven-digit model ids. The HTML tags between the capture groups are
            # missing from this pattern as published; restore them from the page markup.
            pattern = r'id="p(\d{7})".*?(.*?).*?(.*?).*?(.*?).*?'
            cut = re.findall(pattern, Html)
            for item in cut:
                f.write(str(item) + '\n')

# Fetch the parameters
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'}
with open('Cars_parmers.txt', 'a+') as a:
    with open('qichezhijiaCar.txt', 'r', encoding='utf-8') as f:
        list1 = []  # first field of every saved tuple (renamed from `list`, which shadows the builtin)
        list2 = []  # the same field split on single quotes; index 1 is the model id
        for i in f:
            list1.append(i.split(',')[0])
        for i in list1:
            s = i.split('\'')
            list2.append(s)
        for i in list2:
            url = 'https://car.autohome.com.cn/config/spec/' + i[1] + '.html'
            r = requests.get(url, headers=headers)
            time.sleep(random.randrange(1, 5))
            Html = r.text
            # Capture every value recorded for this model id
            pattern = re.compile(r'{"specid":' + i[1] + ',"value":(.*?)}')
            strings = re.findall(pattern, Html)
            # print(Html.encode(encoding='utf-8'))
            # print(r.status_code)
            for value in strings:
                a.write(value + '\n')
            # The URL line closes the block of values for this model
            a.write(url + '\n')
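The value extraction can be tried offline on a small string before pointing it at real pages. In this sketch `values_for_spec` is a hypothetical helper and the sample text is invented to match the `{"specid":...,"value":...}` shape the script searches for; it is not real Autohome output.

```python
import re


def values_for_spec(text, spec_id):
    """Return the raw text of every "value" recorded for spec_id."""
    pattern = re.compile(
        r'\{"specid":' + re.escape(str(spec_id)) + r',"value":(.*?)\}'
    )
    return pattern.findall(text)


# Invented sample in the shape the script's pattern expects.
sample = (
    '[{"specid":1001,"value":"2.0T"},'
    '{"specid":1002,"value":"1.5L"},'
    '{"specid":1001,"value":"FWD"}]'
)
print(values_for_spec(sample, 1001))
```

The captured text keeps its surrounding quotes, and because the match is lazy, a value that itself contains `}` would be cut short. Parsing the embedded data as JSON would be more robust if the page exposes it in a parseable form.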

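# Group the saved lines so that each model's values sit together with its URL
with open('Cars_parmers2.txt', 'a+') as f:
    with open('Cars_parmers.txt', 'r') as a:
        count = 0    # index of the current line
        counts = 0   # index where the current group starts
        cuts1 = []   # size of each group
        list0 = []   # every line of the file
        list1 = []   # the grouped lines
        list2 = []   # index of each URL line
        for i in a:
            if re.match('https://', i):
                # A URL line closes a group: the values since the previous URL plus
                # this line (the original sizes left the URL out of its own group)
                cuts = count - counts + 1
                counts = count + 1
                cuts1.append(cuts)
                list2.append(count)
            count += 1
            list0.append(i)
        # print(cuts1)
        for i in cuts1:
            list1.append(list0[:i])
            del list0[0:i]
        for i in list1:
            f.write(str(i) + '\n')
        # print(list0)
        # print(list1)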
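Since `Cars_parmers.txt` holds each model's value lines followed by that model's URL line, the grouping can also be done in a single pass without index arithmetic. A minimal sketch: `group_by_url` is a hypothetical name and the sample lines are placeholders.

```python
def group_by_url(lines):
    """Split lines into groups, each ending with the URL line that closes it."""
    groups = []
    current = []
    for raw in lines:
        line = raw.rstrip("\n")
        current.append(line)
        if line.startswith("https://"):
            groups.append(current)  # the URL line completes this model's group
            current = []
    # Lines after the last URL belong to no complete group and are dropped.
    return groups


sample = [
    '"value a"\n',
    '"value b"\n',
    "https://car.autohome.com.cn/config/spec/1.html\n",
    '"value c"\n',
    "https://car.autohome.com.cn/config/spec/2.html\n",
]
for group in group_by_url(sample):
    print(group)
```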