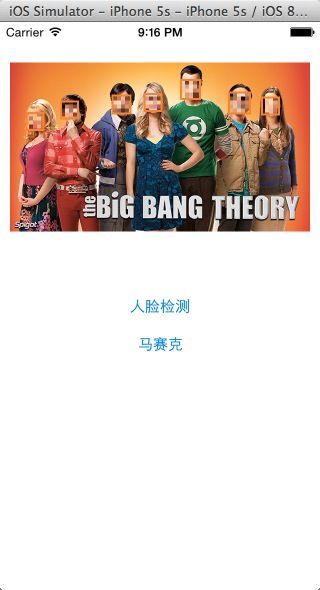

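iOS8 Core Image In Swift: Face Detection and Mosaic

iOS8 Core Image In Swift: Auto-Enhancing Images and Using the Built-in Filters

iOS8 Core Image In Swift: More Complex Filters

iOS8 Core Image In Swift: Face Detection and Mosaic

iOS8 Core Image In Swift: Real-Time Video Filters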

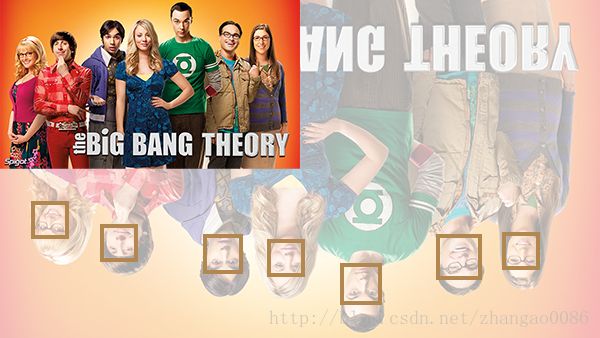

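Besides its many built-in filters, Core Image can also detect faces in an image. Note that Core Image only *detects* faces; it does not *recognize* them. Detection means finding regions of an image that have the features of a face (any face at all). Recognition means finding a specific face (for example, a particular person's). When Core Image finds a region with facial features, it returns information about that feature, such as the face's bounds and the positions of the eyes and mouth.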

Detecting faces and marking the detected regions

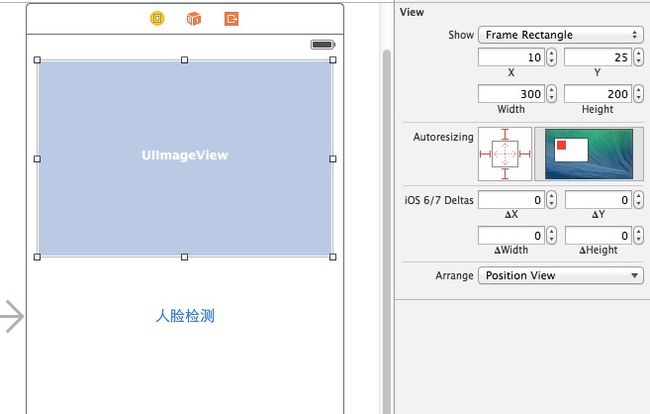

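- Create a new Single View Application project
- In the Storyboard, add a UIImageView and set its Content Mode to Aspect Fit
- Connect the UIImageView to the view controller as an outlet
- Add a UIButton titled "Face Detection" and connect it to the view controller's faceDetecting action
- Turn off Auto Layout and Size Classes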

```swift
import UIKit
import CoreImage
import ImageIO // declares kCGImagePropertyOrientation

class ViewController: UIViewController {

    @IBOutlet var imageView: UIImageView!

    // The photo to analyse; "Image" is the asset name used in the project.
    lazy var originalImage: UIImage = {
        return UIImage(named: "Image")!
    }()

    // One shared CIContext, reused for detection and rendering.
    lazy var context: CIContext = {
        return CIContext(options: nil)
    }()

    // ......

    override func viewDidLoad() {
        super.viewDidLoad()
        // Do any additional setup after loading the view, typically from a nib.
        imageView.image = originalImage
    }

    @IBAction func faceDetecting() {
        let inputImage = CIImage(image: originalImage)!
        let detector = CIDetector(ofType: CIDetectorTypeFace,
                                  context: context,
                                  options: [CIDetectorAccuracy: CIDetectorAccuracyHigh])!

        // Pass the image orientation to the detector when the image carries one.
        let faceFeatures: [CIFaceFeature]
        if let orientation = inputImage.properties[kCGImagePropertyOrientation as String] {
            faceFeatures = detector.features(in: inputImage,
                                             options: [CIDetectorImageOrientation: orientation])
                .compactMap { $0 as? CIFaceFeature }
        } else {
            faceFeatures = detector.features(in: inputImage)
                .compactMap { $0 as? CIFaceFeature }
        }

        print(faceFeatures)

        // ......
```

Note: kCGImagePropertyOrientation is declared in the ImageIO framework, so you may need to import ImageIO to use it.
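Whether `inputImage.properties` actually contains an orientation depends on how the `CIImage` was created; for one built from a `UIImage` it may be missing, in which case the code above detects without an orientation hint. One common workaround (an assumption, not part of the original project) is to derive the EXIF orientation value, which is what `kCGImagePropertyOrientation` holds, from the `UIImage`'s `imageOrientation`. The sketch uses a hypothetical `ImageOrientation` enum that mirrors the cases of `UIImage.Orientation`, so it runs without UIKit; the values 1 to 8 follow the EXIF convention.

```swift
// Hypothetical stand-in for UIImage.Orientation so the sketch runs without UIKit.
enum ImageOrientation {
    case up, down, left, right
    case upMirrored, downMirrored, leftMirrored, rightMirrored
}

/// EXIF / kCGImagePropertyOrientation value (1...8) for a given orientation.
func exifOrientation(for orientation: ImageOrientation) -> Int {
    switch orientation {
    case .up:            return 1
    case .upMirrored:    return 2
    case .down:          return 3
    case .downMirrored:  return 4
    case .leftMirrored:  return 5
    case .right:         return 6
    case .rightMirrored: return 7
    case .left:          return 8
    }
}

print(exifOrientation(for: .right)) // 6
```

In the app, the same `switch` written over `UIImage.Orientation` would produce the value to pass as the `CIDetectorImageOrientation` option.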

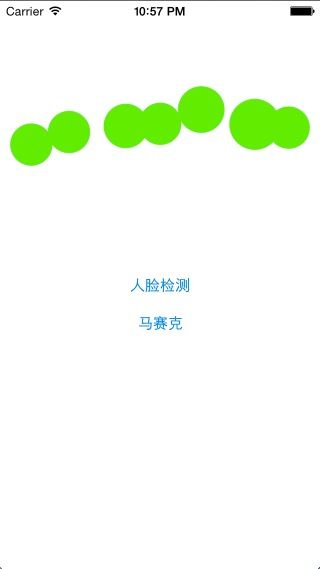

- Get all the detected face features
- Create a UIView from each feature's bounds
- Display the views

```swift
@IBAction func faceDetecting() {
    let inputImage = CIImage(image: originalImage)!
    let detector = CIDetector(ofType: CIDetectorTypeFace,
                              context: context,
                              options: [CIDetectorAccuracy: CIDetectorAccuracyHigh])!

    let faceFeatures: [CIFaceFeature]
    if let orientation = inputImage.properties[kCGImagePropertyOrientation as String] {
        faceFeatures = detector.features(in: inputImage,
                                         options: [CIDetectorImageOrientation: orientation])
            .compactMap { $0 as? CIFaceFeature }
    } else {
        faceFeatures = detector.features(in: inputImage).compactMap { $0 as? CIFaceFeature }
    }

    print(faceFeatures)

    // Outline each face with an orange box. The bounds are still in Core Image
    // coordinates (image pixels, origin at the bottom-left).
    for faceFeature in faceFeatures {
        let faceView = UIView(frame: faceFeature.bounds)
        faceView.layer.borderColor = UIColor.orange.cgColor
        faceView.layer.borderWidth = 2
        imageView.addSubview(faceView)
    }
}
```

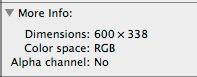

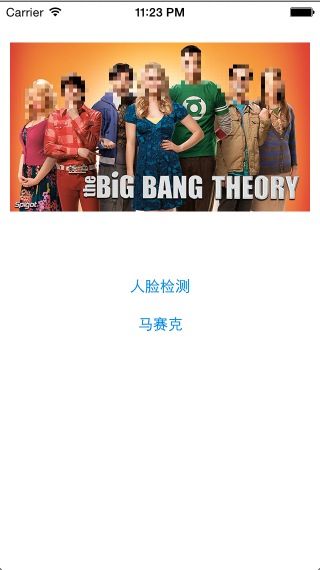

1. Adjust the transform to flip the rect the right way up
2. Scale the bounds so they fit the imageView

```swift
@IBAction func faceDetecting() {
    let inputImage = CIImage(image: originalImage)!
    let detector = CIDetector(ofType: CIDetectorTypeFace,
                              context: context,
                              options: [CIDetectorAccuracy: CIDetectorAccuracyHigh])!

    let faceFeatures: [CIFaceFeature]
    if let orientation = inputImage.properties[kCGImagePropertyOrientation as String] {
        faceFeatures = detector.features(in: inputImage,
                                         options: [CIDetectorImageOrientation: orientation])
            .compactMap { $0 as? CIFaceFeature }
    } else {
        faceFeatures = detector.features(in: inputImage).compactMap { $0 as? CIFaceFeature }
    }

    print(faceFeatures)

    // 1. Flip the y axis: Core Image puts the origin at the bottom-left, UIKit at the top-left.
    let inputImageSize = inputImage.extent.size
    var transform = CGAffineTransform.identity
    transform = transform.scaledBy(x: 1, y: -1)
    transform = transform.translatedBy(x: 0, y: -inputImageSize.height)

    for faceFeature in faceFeatures {
        var faceViewBounds = faceFeature.bounds.applying(transform)

        // 2. Shrink the rect to the displayed size (a fixed factor for this image and view).
        let scaleTransform = CGAffineTransform(scaleX: 0.5, y: 0.5)
        faceViewBounds = faceViewBounds.applying(scaleTransform)

        let faceView = UIView(frame: faceViewBounds)
        faceView.layer.borderColor = UIColor.orange.cgColor
        faceView.layer.borderWidth = 2
        imageView.addSubview(faceView)
    }
}
```
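The two transforms in step 1 boil down to a simple formula: for an image of height H, a rect at y with height h in Core Image coordinates lands at y' = H - y - h in UIKit coordinates, while x, width and height are unchanged. A sketch of that arithmetic, using a hypothetical `Rect` struct in place of `CGRect` so it runs without CoreGraphics:

```swift
// Hypothetical stand-in for CGRect.
struct Rect: Equatable {
    var x, y, width, height: Double
}

/// Converts a rect from Core Image space (origin bottom-left, y up) to UIKit
/// space (origin top-left, y down) for an image of the given height. This is the
/// mapping produced by scale(1, -1) followed by translate(0, -imageHeight).
func flipToUIKit(_ r: Rect, imageHeight: Double) -> Rect {
    Rect(x: r.x, y: imageHeight - r.y - r.height, width: r.width, height: r.height)
}

let face = Rect(x: 100, y: 50, width: 80, height: 80)
let flipped = flipToUIKit(face, imageHeight: 400) // y becomes 400 - 50 - 80 = 270
```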

A hard-coded factor of 0.5 only suits this particular image and view. The version below derives the scale and the letterbox offsets from the actual sizes of the image and the imageView:

```swift
@IBAction func faceDetecting() {
    let inputImage = CIImage(image: originalImage)!
    let detector = CIDetector(ofType: CIDetectorTypeFace,
                              context: context,
                              options: [CIDetectorAccuracy: CIDetectorAccuracyHigh])!

    let faceFeatures: [CIFaceFeature]
    if let orientation = inputImage.properties[kCGImagePropertyOrientation as String] {
        faceFeatures = detector.features(in: inputImage,
                                         options: [CIDetectorImageOrientation: orientation])
            .compactMap { $0 as? CIFaceFeature }
    } else {
        faceFeatures = detector.features(in: inputImage).compactMap { $0 as? CIFaceFeature }
    }

    print(faceFeatures)

    // 1. Flip the y axis: Core Image puts the origin at the bottom-left, UIKit at the top-left.
    let inputImageSize = inputImage.extent.size
    var transform = CGAffineTransform.identity
    transform = transform.scaledBy(x: 1, y: -1)
    transform = transform.translatedBy(x: 0, y: -inputImageSize.height)

    for faceFeature in faceFeatures {
        var faceViewBounds = faceFeature.bounds.applying(transform)

        // 2. Aspect Fit: scale by the smaller ratio, then centre the image in the view.
        let scale = min(imageView.bounds.size.width / inputImageSize.width,
                        imageView.bounds.size.height / inputImageSize.height)
        let offsetX = (imageView.bounds.size.width - inputImageSize.width * scale) / 2
        let offsetY = (imageView.bounds.size.height - inputImageSize.height * scale) / 2

        faceViewBounds = faceViewBounds.applying(CGAffineTransform(scaleX: scale, y: scale))
        faceViewBounds.origin.x += offsetX
        faceViewBounds.origin.y += offsetY

        let faceView = UIView(frame: faceViewBounds)
        faceView.layer.borderColor = UIColor.orange.cgColor
        faceView.layer.borderWidth = 2
        imageView.addSubview(faceView)
    }
}
```
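Step 2 of this final version is the usual Aspect Fit mapping: scale by the smaller of the width and height ratios, then add the letterbox margin on the axis that does not fill the view. The same arithmetic as a standalone function, with hypothetical `Size` and `Rect` structs standing in for the CoreGraphics types:

```swift
// Hypothetical stand-ins for CGSize and CGRect.
struct Size { var width, height: Double }
struct Rect: Equatable { var x, y, width, height: Double }

/// Maps a rect in image pixels (already flipped to a top-left origin) into the
/// coordinate space of a view that displays the image with Aspect Fit.
func aspectFitRect(_ r: Rect, imageSize: Size, viewSize: Size) -> Rect {
    // The whole image must fit, so the smaller ratio wins.
    let scale = min(viewSize.width / imageSize.width,
                    viewSize.height / imageSize.height)
    // Letterbox margins; one of the two is zero.
    let offsetX = (viewSize.width - imageSize.width * scale) / 2
    let offsetY = (viewSize.height - imageSize.height * scale) / 2
    return Rect(x: r.x * scale + offsetX,
                y: r.y * scale + offsetY,
                width: r.width * scale,
                height: r.height * scale)
}

let mapped = aspectFitRect(Rect(x: 200, y: 100, width: 200, height: 200),
                           imageSize: Size(width: 800, height: 600),
                           viewSize: Size(width: 400, height: 400))
```

With an 800 × 600 image in a 400 × 400 view the scale is 0.5 and there is a 50-point bar above and below the image, so the 200 × 200 face at (200, 100) becomes a 100 × 100 box at (100, 100).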

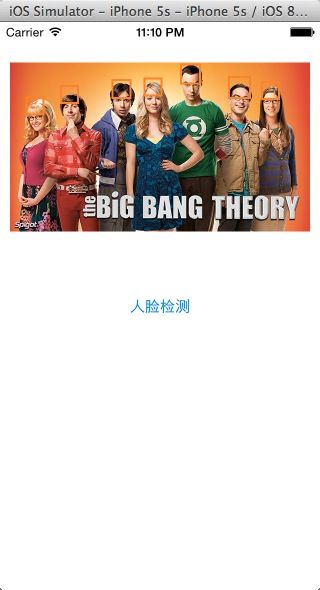

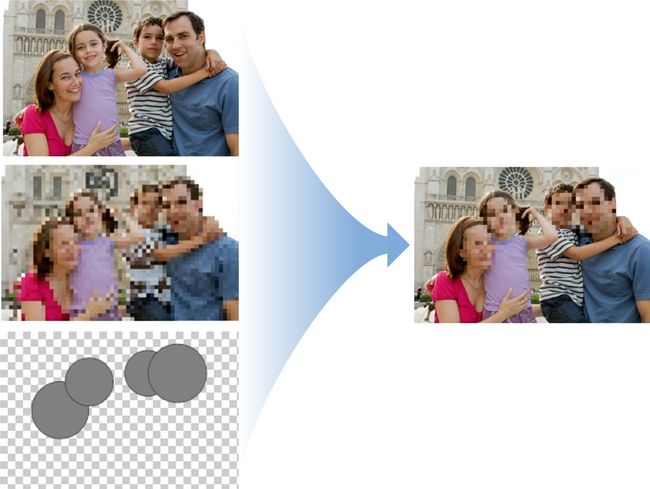

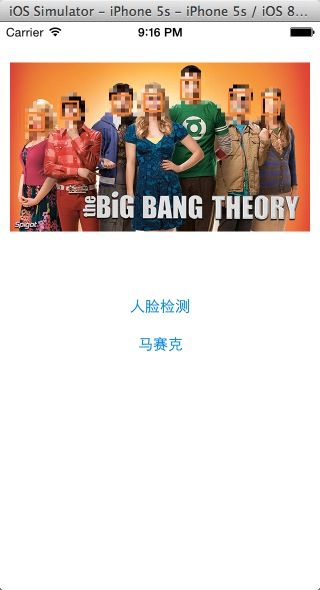

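Face mosaic

- Starting from the original image, create a version that is mosaicked everywhere
- Create a mask image for the detected faces
- Use the mask to blend the fully mosaicked image with the original

Creating the fully mosaicked image (CIPixellate)

- Set inputImage to the original image
- Optionally set inputScale to suit your needs; inputScale ranges from 1 to 100, and the larger the value, the larger the mosaic blocks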
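To get a feel for what `inputScale` controls, here is a simplified model of pixellation on a grayscale image stored as a 2D array: each `scale` × `scale` block is replaced by its average. This is an assumption made for illustration; CIPixellate may pick each block's color differently, but the effect of a larger scale (bigger blocks) is the same.

```swift
/// Simplified pixellation (not Core Image's exact algorithm): every
/// `scale` x `scale` block of a grayscale image is replaced by its mean.
func pixellate(_ image: [[Double]], scale: Int) -> [[Double]] {
    precondition(scale >= 1, "scale must be at least 1")
    let height = image.count
    guard height > 0 else { return image }
    let width = image[0].count
    var output = image
    for blockY in stride(from: 0, to: height, by: scale) {
        for blockX in stride(from: 0, to: width, by: scale) {
            // Blocks on the right and bottom edges may be smaller.
            let ys = blockY..<min(blockY + scale, height)
            let xs = blockX..<min(blockX + scale, width)
            var sum = 0.0
            for y in ys { for x in xs { sum += image[y][x] } }
            let mean = sum / Double(ys.count * xs.count)
            for y in ys { for x in xs { output[y][x] = mean } }
        }
    }
    return output
}

let image: [[Double]] = [
    [0, 4, 8, 8],
    [4, 0, 8, 8],
    [1, 1, 2, 2],
    [1, 1, 2, 2],
]
let blocky = pixellate(image, scale: 2) // top-left block: (0 + 4 + 4 + 0) / 4 = 2
```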

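Creating a mask for the detected faces

- Use the CIRadialGradient filter to create a circle that encloses each face
- Use the CISourceOverCompositing filter to combine the individual masks (there is one mask per face)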
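A per-pixel sketch of how these two filters build the mask, under simplifying assumptions: each pixel keeps only its green and alpha channels (premultiplied), the gradient interpolates linearly from `inputColor0` = (0, 1, 0, 1) inside `inputRadius0` to the fully transparent `inputColor1` beyond `inputRadius1`, and source-over compositing uses the standard formula result = source + destination × (1 - sourceAlpha). With opaque circles on a transparent background, compositing them this way gives the union of all face circles.

```swift
/// One premultiplied mask pixel; only green and alpha matter for the mask.
struct MaskPixel: Equatable {
    var green: Double
    var alpha: Double
}

/// Simplified CIRadialGradient with inputColor0 = (0, 1, 0, 1) and
/// inputColor1 = (0, 0, 0, 0): fully on within radius0, fully transparent
/// beyond radius1, linear interpolation of premultiplied values in between.
func radialMask(x: Double, y: Double,
                centerX: Double, centerY: Double,
                radius0: Double, radius1: Double) -> MaskPixel {
    let dx = x - centerX, dy = y - centerY
    let distance = (dx * dx + dy * dy).squareRoot()
    let t: Double
    if distance <= radius0 {
        t = 0
    } else if distance >= radius1 {
        t = 1
    } else {
        t = (distance - radius0) / (radius1 - radius0)
    }
    return MaskPixel(green: 1 - t, alpha: 1 - t)
}

/// Source-over compositing of premultiplied pixels (source drawn over destination).
func sourceOver(_ source: MaskPixel, _ destination: MaskPixel) -> MaskPixel {
    MaskPixel(green: source.green + destination.green * (1 - source.alpha),
              alpha: source.alpha + destination.alpha * (1 - source.alpha))
}

// Two faces of radius 5, centred at (10, 10) and (30, 10); sample the point (10, 12).
let face1 = radialMask(x: 10, y: 12, centerX: 10, centerY: 10, radius0: 5, radius1: 6)
let face2 = radialMask(x: 10, y: 12, centerX: 30, centerY: 10, radius0: 5, radius1: 6)
let combined = sourceOver(face2, face1) // inside face 1, so the mask is fully on
```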

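Blending the mosaic image, the mask, and the original image (CIBlendWithMask)

- Set inputImage to the mosaic image
- Set inputBackgroundImage to the original image
- Set inputMaskImage to the mask image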
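CIBlendWithMask picks, per pixel, between its two inputs according to the mask: where the mask is 1 the result comes from `inputImage` (the mosaic), where it is 0 from the background (the original), with linear mixing in between. Apple's filter reference describes the mask in terms of its green values, which is why the `pixellated()` code below draws green circles. A one-row sketch of that rule on grayscale values (an illustration, not Core Image's implementation):

```swift
/// Per-pixel rule of a mask blend: mask 1 takes `image`, mask 0 takes
/// `background`, values in between mix linearly.
func blendWithMask(image: [Double], background: [Double], mask: [Double]) -> [Double] {
    precondition(image.count == background.count && image.count == mask.count,
                 "all inputs must have the same number of pixels")
    return (0..<image.count).map { i in
        mask[i] * image[i] + (1 - mask[i]) * background[i]
    }
}

let mosaic: [Double]   = [0.25, 0.25, 0.75, 0.75] // fully pixellated row
let original: [Double] = [0.0, 0.5, 0.5, 1.0]     // untouched row
let faceMask: [Double] = [0.0, 0.0, 1.0, 0.5]     // face on the right, soft edge
let blended = blendWithMask(image: mosaic, background: original, mask: faceMask)
print(blended) // [0.0, 0.5, 0.75, 0.875]
```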

```swift
@IBAction func pixellated() {
    // 1. Pixellate the whole image.
    let filter = CIFilter(name: "CIPixellate")!
    print(filter.attributes)
    let inputImage = CIImage(image: originalImage)!
    filter.setValue(inputImage, forKey: kCIInputImageKey)
    // filter.setValue(max(inputImage.extent.size.width, inputImage.extent.size.height) / 60, forKey: kCIInputScaleKey)
    let fullPixellatedImage = filter.outputImage!
    // let cgImage = context.createCGImage(fullPixellatedImage, from: fullPixellatedImage.extent)!
    // imageView.image = UIImage(cgImage: cgImage)

    // 2. Detect the faces.
    let detector = CIDetector(ofType: CIDetectorTypeFace,
                              context: context,
                              options: nil)!
    let faceFeatures = detector.features(in: inputImage).compactMap { $0 as? CIFaceFeature }

    // 3. Build one combined mask for all faces.
    var maskImage: CIImage?
    for faceFeature in faceFeatures {
        print(faceFeature.bounds)

        // 4. A circle centred on the face.
        let centerX = faceFeature.bounds.origin.x + faceFeature.bounds.size.width / 2
        let centerY = faceFeature.bounds.origin.y + faceFeature.bounds.size.height / 2
        let radius = min(faceFeature.bounds.size.width, faceFeature.bounds.size.height)
        let radialGradient = CIFilter(name: "CIRadialGradient", parameters: [
            "inputRadius0": radius,
            "inputRadius1": radius + 1,
            "inputColor0": CIColor(red: 0, green: 1, blue: 0, alpha: 1),
            "inputColor1": CIColor(red: 0, green: 0, blue: 0, alpha: 0),
            kCIInputCenterKey: CIVector(x: centerX, y: centerY)
        ])!
        print(radialGradient.attributes)

        // 5. Crop the infinite gradient to the image, then merge it into the mask.
        let radialGradientOutputImage = radialGradient.outputImage!.cropped(to: inputImage.extent)
        if let currentMask = maskImage {
            print(radialGradientOutputImage)
            maskImage = CIFilter(name: "CISourceOverCompositing", parameters: [
                kCIInputImageKey: radialGradientOutputImage,
                kCIInputBackgroundImageKey: currentMask
            ])!.outputImage
        } else {
            maskImage = radialGradientOutputImage
        }
    }

    // No faces found: nothing to mask.
    guard let mask = maskImage else { return }

    // 6. Mosaic where the mask is on, original image elsewhere.
    let blendFilter = CIFilter(name: "CIBlendWithMask")!
    blendFilter.setValue(fullPixellatedImage, forKey: kCIInputImageKey)
    blendFilter.setValue(inputImage, forKey: kCIInputBackgroundImageKey)
    blendFilter.setValue(mask, forKey: kCIInputMaskImageKey)

    // 7. Render and display.
    let blendOutputImage = blendFilter.outputImage!
    let blendCGImage = context.createCGImage(blendOutputImage, from: blendOutputImage.extent)!
    imageView.image = UIImage(cgImage: blendCGImage)
}
```

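1. Apply the CIPixellate filter to mosaic the entire original image.
2. Detect the faces and store them in faceFeatures.
3. Initialize the mask image and iterate over all detected faces.
4. Because we create a separate mask for each face based on its position, we first compute the center of the face (its x and y coordinates), then take the shorter of the face's width and height as the radius, and finally use these values to initialize a CIRadialGradient filter. I set the alpha of inputColor1 to 0, making everything outside the circle transparent, because I don't care about any color outside the mask. This differs slightly from Apple's official example, which sets it to 1.
5. CIRadialGradient produces an image of infinite extent, so crop it to the input image before using it (Apple's official example does not crop it), then merge each face's mask into the combined mask.
6. Use the CIBlendWithMask filter to blend the mosaic image, the original image, and the mask.
7. Output the result and display it on screen.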
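Step 4's geometry, pulled out as a small function: the circle is centred on the face bounds and its radius is the shorter side of those bounds. The optional `scale` parameter (hypothetical) reproduces the UPDATED variant at the end of this post, which multiplies the radius by a view-to-image scale factor. `Rect` is again a hypothetical stand-in for `CGRect`.

```swift
// Hypothetical stand-in for CGRect.
struct Rect { var x, y, width, height: Double }

/// Circle used for one face's CIRadialGradient mask: centred on the face
/// bounds, radius = shorter side of the bounds, optionally multiplied by `scale`.
func maskCircle(for face: Rect, scale: Double = 1)
    -> (centerX: Double, centerY: Double, radius: Double) {
    let centerX = face.x + face.width / 2
    let centerY = face.y + face.height / 2
    let radius = min(face.width, face.height) * scale
    return (centerX, centerY, radius)
}

let circle = maskCircle(for: Rect(x: 100, y: 40, width: 60, height: 80))
print(circle.centerX, circle.centerY, circle.radius) // 130.0 80.0 60.0
```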

GitHub download link

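UPDATED: compute a scale factor from the sizes of the imageView and the image, and multiply the mask radius in pixellated() by it:

```swift
let scale = min(imageView.bounds.size.width / inputImage.extent.size.width,
                imageView.bounds.size.height / inputImage.extent.size.height)

let radius = min(faceFeature.bounds.size.width, faceFeature.bounds.size.height) * scale
```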

References:

https://developer.apple.com/library/mac/documentation/graphicsimaging/conceptual/CoreImaging/ci_intro/ci_intro.html