Rancher single node + K3s cluster

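Contents

- Introduction
- OP configuration
- Initialize local variables on op
- System scripts
- Configure yum sources
- Deploy a CentOS repository on the LAN
- Install the FTP service
- Copy/upload files
- Mount the ISO
- docker/yum_lan.sh: FTP repository
- docker/yum_cdrom.sh: DVD as repository
- docker/init.sh: system tuning and environment setup
- Docker installation
- docker/install_docker.sh
- certs/generate_ca.sh: certificate generation script
- Nginx installation
- nginx/docker-compose.yml: container configuration
- nginx/nginx.conf: main configuration
- docker-compose up: run the container
- Image registry
- harbor
- nginx/conf.d/harbor.conf: nginx domain proxy
- nexus3 (recommended)
- nexus3/docker-compose.yml: container configuration
- nginx/conf.d/nexus3.conf: nginx domain proxy
- Configuration
- Rancher
- Image import
- rancher/docker-compose.yml: container configuration
- Postgres
- pg_k3s/docker-compose.yml: database for K3s
- K3s resources & scripts
- Public resources
- Scripts
- Uninstall
- Install
- for docker (pg)
- containerd (recommended)
- Remote command script
- File (directory) copy script
- K3s load balancer configuration
- K3s cluster deployment
- Passwordless SSH login
- Change hostnames
- Copy resources
- init
- install
- Cleanup before reinstalling
- with docker
- with containerd (recommended)
- postgresql
- DQlite
- Cluster management
- Import K3s into Rancher
- References
- Change the cluster IP range
- Next chapter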

Introduction

- OS: CentOS 7.8 (deploying Longhorn on CentOS 8.1 ran into problems)

- Image registry: harbor or nexus3

- Rancher: single-node Docker deployment (v2.4.4)

- K3s: three master (server) nodes (postgres1.19/DQlite)

| Node | IP | Services | Notes |
|---|---|---|---|
| op | 172.19.129.98 | nginx/harbor/nexus3/rancher/postgres | |
| m1 | 172.19.201.241 | K3s server | |
| m2 | 172.19.201.242 | K3s server | |
| m3 | 172.19.201.243 | K3s server | |

| Service | Port(s) | Notes |
|---|---|---|
| nginx | 80/443/6443 | domain-based proxying / K3s load balancing |
| nexus3 | 8081/8082 | |
| harbor | 9443 | proxied through nginx on 443 |
| rancher | 8443 | accessed by IP for convenience |
| postgres | 5432 | |

Directory layout

# /home/rancher is the base directory for all later steps

mkdir -p /home/rancher/{certs,nexus3,nginx,nginx/conf.d,docker,k3s,longhorn,pg_k3s,rancher} && cd /home/rancher

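OP configuration

Initialize local variables on op

cat >> /etc/profile << 'EOF'
export PS1='[\u@\h \t \w]\$ '
## Environment [replace these IPs with your own]
# master_ips is a bash array; arrays cannot be exported to child processes, so scripts
# that need it should run as login shells (#!/bin/bash --login), as the helper scripts below do
export master_ips=(172.19.129.241 172.19.129.242 172.19.129.243)
export op=172.19.129.98
export domain=do.io
EOF

source /etc/profile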

for ip in ${master_ips[@]}

do

echo "${ip} m${ip##*.}" >> /etc/hosts

done
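The loop names each master `m` plus the last octet of its IP, using the `${ip##*.}` expansion (strip the longest prefix that ends in a dot). As a sketch, a hypothetical `hosts_lines` function prints the same lines without touching `/etc/hosts`, so you can preview them first:

```shell
# hosts_lines IP...: hypothetical helper printing "<ip> m<last octet>" per address,
# i.e. the same lines the loop above appends to /etc/hosts
hosts_lines() {
  local ip
  for ip in "$@"; do
    # ${ip##*.} removes everything up to and including the last dot
    printf '%s m%s\n' "$ip" "${ip##*.}"
  done
}
```

Usage: `hosts_lines "${master_ips[@]}"` to preview, then append the output with `>> /etc/hosts`.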

System scripts

Configure yum sources

Deploy a CentOS repository on the LAN

Install the FTP service

yum install vsftpd -y

systemctl start vsftpd

systemctl enable vsftpd

Copy/upload files

mkdir -p /data/centosISO

cd /data/centosISO

# Upload or download the ISO image into this directory

Mount the ISO

# Create the mount point (make sure there is enough space)

mkdir -p /var/ftp/centos/centos78

# Mount the ISO

mount -o loop /data/centosISO/CentOS-7.8-x86_64-DVD-2003.iso /var/ftp/centos/centos78/

# Mount at boot (the entry belongs in /etc/fstab and must point at the 7.8 ISO mounted above)

cat >> /etc/fstab << EOF
/data/centosISO/CentOS-7.8-x86_64-DVD-2003.iso /var/ftp/centos/centos78/ iso9660 defaults,ro,loop 0 0
EOF
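This step, like the earlier `/etc/profile` and `/etc/hosts` edits, appends with `>>`, so running it twice leaves duplicate lines. Here is a minimal sketch of an idempotent append; the helper name `append_once` is hypothetical:

```shell
# append_once LINE FILE: hypothetical helper that appends LINE to FILE only if an
# identical full line is not already present, so re-running a step is harmless
append_once() {
  local line="$1" file="$2"
  touch "$file"
  # -q quiet, -x whole-line match, -F fixed string (no regex interpretation)
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Usage: `append_once "/data/centosISO/CentOS-7.8-x86_64-DVD-2003.iso /var/ftp/centos/centos78/ iso9660 defaults,ro,loop 0 0" /etc/fstab`.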

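docker/yum_lan.sh: FTP repository

# Point yum at the repository served by op. ${op} is expanded when this file is
# generated on op; \$releasever stays literal so that yum can substitute it.
cat > docker/yum_lan.sh << END
#!/bin/bash --login
# Move the stock (internet) repo files aside
mkdir -p /etc/yum.repos.d/bak && mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak/
cat > /etc/yum.repos.d/CentOS-LAN.repo << 'EOF'
[c7-media]
name=CentOS-\$releasever - Media
baseurl=ftp://${op}/centos/centos78
gpgcheck=0
enabled=1
gpgkey=ftp://${op}/centos/centos78/RPM-GPG-KEY-CentOS-7
EOF
yum clean all && yum makecache
END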
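The repo file mixes two kinds of variables. `${op}` must be filled in when the file is generated on op, while `$releasever` must reach yum untouched. A hypothetical `render_lan_repo` function isolates that escaping and prints the file for a given op address:

```shell
# render_lan_repo OP_IP: hypothetical helper printing the LAN .repo file;
# the heredoc is unquoted so ${op} expands, and \$releasever is escaped so it stays literal
render_lan_repo() {
  local op="$1"
  cat << EOF
[c7-media]
name=CentOS-\$releasever - Media
baseurl=ftp://${op}/centos/centos78
gpgcheck=0
enabled=1
gpgkey=ftp://${op}/centos/centos78/RPM-GPG-KEY-CentOS-7
EOF
}
```

Usage on a node: `render_lan_repo "$op" > /etc/yum.repos.d/CentOS-LAN.repo`.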

docker/yum_cdrom.sh: DVD as repository

# Point yum at the local DVD

cat > docker/yum_cdrom.sh << 'END'

#!/bin/bash --login

mkdir -p /media/cdrom && mount /dev/sr0 -t iso9660 /media/cdrom

rm -rf /etc/yum.repos.d/ && mkdir -p /etc/yum.repos.d/

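# lsb_release comes from redhat-lsb-core, which minimal installs lack; read /etc/os-release instead
ver=$(. /etc/os-release && echo "${VERSION_ID%%.*}")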

if [ $ver -ge 8 ] ; then

# 'EOF' is quoted so $releasever reaches yum unexpanded
cat > /etc/yum.repos.d/CentOS-Media.repo << 'EOF'

[c8-media-BaseOS]

name=CentOS-BaseOS-$releasever - Media

baseurl=file:///media/cdrom/BaseOS

gpgcheck=0

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

[c8-media-AppStream]

name=CentOS-AppStream-$releasever - Media

baseurl=file:///media/cdrom/AppStream

gpgcheck=0

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

EOF

else

cat > /etc/yum.repos.d/CentOS-Media.repo << 'EOF'

[c7-media]

name=CentOS-$releasever - Media

baseurl=file:///media/cdrom/

gpgcheck=0

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

EOF

fi

yum clean all && yum makecache

echo "/dev/sr0 /media/cdrom auto defaults 0 0" >> /etc/fstab

END
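`lsb_release` is provided by the redhat-lsb-core package, which a minimal CentOS install does not include. Below is a sketch that reads the major version from `/etc/os-release` instead; the function name is hypothetical:

```shell
# os_major_version [FILE]: hypothetical helper printing the major release number from an
# os-release file (default /etc/os-release); the subshell keeps the sourced variables
# (NAME, ID, VERSION_ID, ...) from leaking into the caller
os_major_version() {
  (
    . "${1:-/etc/os-release}"
    # "8.2" -> "8", "7" -> "7"
    echo "${VERSION_ID%%.*}"
  )
}
```

Usage: `if [ "$(os_major_version)" -ge 8 ]; then ...; fi`.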

docker/init.sh: system tuning and environment setup

cat > docker/init.sh << END

#!/bin/bash --login

echo "export PS1='[\u@\h \t \w]\$ '" >> /etc/profile

echo "export op=$op" >> /etc/profile

echo "export domain=$domain" >> /etc/profile

echo "$op harbor.$domain op" >> /etc/hosts

END

cat >> docker/init.sh << END

source /etc/profile

# OS tuning, Alibaba Cloud set (the Rancher set below rewrites /etc/sysctl.conf with cat >, replacing this one)

cat > /etc/sysctl.conf << EOF

vm.swappiness = 0

kernel.sysrq = 1

net.ipv4.neigh.default.gc_stale_time = 120

# see details in https://help.aliyun.com/knowledge_detail/39428.html

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

# see details in https://help.aliyun.com/knowledge_detail/41334.html

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

EOF

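# OS tuning, Rancher set (replaces the file written above)
# The net.bridge.* keys only exist once br_netfilter is loaded: load it now and at every boot
modprobe br_netfilter && echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
cat > /etc/sysctl.conf << EOF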

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

net.ipv4.ip_forward=1

net.ipv4.conf.all.forwarding=1

net.ipv4.neigh.default.gc_thresh1=4096

net.ipv4.neigh.default.gc_thresh2=6144

net.ipv4.neigh.default.gc_thresh3=8192

net.ipv4.neigh.default.gc_interval=60

net.ipv4.neigh.default.gc_stale_time=120

# See https://github.com/prometheus/node_exporter#disabled-by-default

kernel.perf_event_paranoid=-1

#sysctls for k8s node config

net.ipv4.tcp_slow_start_after_idle=0

net.core.rmem_max=16777216

fs.inotify.max_user_watches=524288

kernel.softlockup_all_cpu_backtrace=1

kernel.softlockup_panic=0

kernel.watchdog_thresh=30

fs.file-max=2097152

fs.inotify.max_user_instances=8192

fs.inotify.max_queued_events=16384

vm.max_map_count=262144

fs.may_detach_mounts=1

net.core.netdev_max_backlog=16384

net.ipv4.tcp_wmem=4096 12582912 16777216

net.core.wmem_max=16777216

net.core.somaxconn=32768

net.ipv4.ip_forward=1

net.ipv4.tcp_max_syn_backlog=8096

net.ipv4.tcp_rmem=4096 12582912 16777216

net.ipv6.conf.all.disable_ipv6=1

net.ipv6.conf.default.disable_ipv6=1

net.ipv6.conf.lo.disable_ipv6=1

kernel.yama.ptrace_scope=0

vm.swappiness=0

# Append the PID to core dump file names

kernel.core_uses_pid=1

# Do not accept source routing

net.ipv4.conf.default.accept_source_route=0

net.ipv4.conf.all.accept_source_route=0

# Promote secondary addresses when the primary address is removed

net.ipv4.conf.default.promote_secondaries=1

net.ipv4.conf.all.promote_secondaries=1

# Enable hard and soft link protection

fs.protected_hardlinks=1

fs.protected_symlinks=1

# Reverse-path (source address) validation

# see details in https://help.aliyun.com/knowledge_detail/39428.html

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce=2

net.ipv4.conf.all.arp_announce=2

# see details in https://help.aliyun.com/knowledge_detail/41334.html

net.ipv4.tcp_max_tw_buckets=5000

net.ipv4.tcp_syncookies=1

net.ipv4.tcp_fin_timeout=30

net.ipv4.tcp_synack_retries=2

kernel.sysrq=1

EOF

cat > /etc/security/limits.conf << EOF

root soft nofile 65535

root hard nofile 65535

* soft nofile 65535

* hard nofile 65535

EOF

sysctl -p

# Disable SELinux
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
setenforce 0
# Disable the firewall
systemctl stop firewalld.service && systemctl disable firewalld.service
# Set the time zone
ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
# Disable swap
swapoff -a && sysctl -w vm.swappiness=0 && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
# Reboot

reboot

END
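Because the Rancher set is written with `cat >`, it replaces the Alibaba Cloud set instead of merging with it. Within the Rancher set, some keys (for example `net.ipv4.ip_forward`) appear twice. `sysctl -p` applies lines in order, so the last occurrence wins. Here is a small sketch for spotting repeated keys in a sysctl-style file; the function name is hypothetical:

```shell
# dup_sysctl_keys FILE: hypothetical helper listing keys that are set more than once,
# ignoring blank lines and comment lines (# or ;)
dup_sysctl_keys() {
  grep -vE '^[[:space:]]*(#|;|$)' "$1" \
    | sed -E 's/^[[:space:]]+//; s/[[:space:]]*=.*$//' \
    | sort | uniq -d
}
```

Usage: `dup_sysctl_keys /etc/sysctl.conf`.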

Docker installation

Download URLs

# docker

https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-selinux-17.03.3.ce-1.el7.noarch.rpm

https://download.docker.com/linux/centos/7/x86_64/stable/Packages/containerd.io-1.2.6-3.3.el7.x86_64.rpm

https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-19.03.8-3.el7.x86_64.rpm

https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-cli-19.03.8-3.el7.x86_64.rpm

# docker-compose

https://github.com/docker/compose/releases/download/1.26.0/docker-compose-Linux-x86_64

docker/install_docker.sh

# Put the downloaded packages and the docker-compose binary in the docker directory

cat > docker/install_docker.sh << 'END'

#!/bin/bash --login

dir=$(cd `dirname $0`; pwd)

cd $dir

# Docker

yum -y install ./docker-ce-selinux-17.03.3.ce-1.el7.noarch.rpm

yum -y install ./containerd.io-1.2.6-3.3.el7.x86_64.rpm

yum -y install ./docker-ce-cli-19.03.8-3.el7.x86_64.rpm

yum -y install ./docker-ce-19.03.8-3.el7.x86_64.rpm

# Start the service

systemctl enable docker

systemctl start docker

# docker-compose

cp ./docker-compose /usr/local/bin/

chmod a+x /usr/local/bin/docker-compose

# Docker configuration (must be a single JSON object; dockerd will not start with two)
mkdir -p /etc/docker
cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://harbor.${domain}"],
  "storage-driver": "overlay2",
  "storage-opts": ["overlay2.override_kernel_check=true"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m",
    "max-file": "3"
  }
}
EOF

# Trust the registry CA

mkdir -p /etc/docker/certs.d/harbor.${domain}

\cp ../certs/ca.crt /etc/docker/certs.d/harbor.${domain}/

\cp ../certs/${domain}.crt /etc/pki/ca-trust/source/anchors/${domain}.crt

# Rebuild the system CA bundle

update-ca-trust extract

# Restart the service to apply the changes

systemctl restart docker

END
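Docker looks in `/etc/docker/certs.d/<registry host>/` for extra trust and treats `*.crt` files there as CA certificates. That is what the "trust" lines above rely on. The sketch below packages that step behind a hypothetical `install_registry_ca` function. Its optional root prefix lets you try it in a scratch directory first:

```shell
# install_registry_ca HOST CA_FILE [ROOT]: hypothetical helper that installs CA_FILE as
# ROOT/etc/docker/certs.d/HOST/ca.crt (ROOT defaults to empty, i.e. the real /etc)
install_registry_ca() {
  local host="$1" ca="$2" root="${3:-}"
  local dir="${root}/etc/docker/certs.d/${host}"
  mkdir -p "$dir"
  cp "$ca" "${dir}/ca.crt"
}
```

Usage: `install_registry_ca "harbor.${domain}" ../certs/ca.crt && systemctl restart docker`.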

certs/generate_ca.sh: certificate generation script

cat > certs/generate_ca.sh << 'END'

openssl genrsa -out ca.key 4096

openssl req -x509 -new -nodes -sha512 -days 3650 -subj "/C=CN/ST=ShangHai/L=ShangHai/O=example/OU=Personal/CN=${domain}" -key ca.key -out ca.crt

openssl genrsa -out ${domain}.key 4096

openssl req -sha512 -new -subj "/C=CN/ST=ShangHai/L=ShangHai/O=example/OU=Personal/CN=${domain}" -key ${domain}.key -out ${domain}.csr

cat > v3.ext << EOF

authorityKeyIdentifier=keyid,issuer

basicConstraints=CA:FALSE

keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment

extendedKeyUsage = serverAuth

subjectAltName = @alt_names

[alt_names]

DNS.1=${domain}

DNS.2=harbor.${domain}

DNS.3=rancher.${domain}

DNS.4=hub.${domain}

DNS.5=abc.${domain}

EOF

openssl x509 -req -sha512 -days 3650 -extfile v3.ext -CA ca.crt -CAkey ca.key -CAcreateserial -in ${domain}.csr -out ${domain}.crt

openssl x509 -inform PEM -in ${domain}.crt -out ${domain}.cert

\cp ${domain}.crt ${domain}.pem

END

cd certs && sh generate_ca.sh && cd ..
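The `[alt_names]` section is what lets a single certificate serve harbor, rancher and the other subdomains. The sketch below builds the same `v3.ext` from a domain and a list of subdomain prefixes, so you can add a name without renumbering by hand. The function name is hypothetical:

```shell
# render_v3_ext DOMAIN [SUB...]: hypothetical helper printing a v3.ext with
# DNS.1=DOMAIN followed by DNS.n=SUB.DOMAIN for each extra argument
render_v3_ext() {
  local domain="$1" i=1 sub
  shift
  cat << 'EOF'
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names
[alt_names]
EOF
  echo "DNS.${i}=${domain}"
  for sub in "$@"; do
    i=$((i + 1))
    echo "DNS.${i}=${sub}.${domain}"
  done
}
```

Usage: `render_v3_ext "$domain" harbor rancher hub abc > v3.ext` reproduces the list used above.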

Nginx installation

nginx/docker-compose.yml: container configuration

cat > nginx/docker-compose.yml << EOF
version: '3.1'
services:
  nginx:
    image: nginx:1.19
    container_name: nginx
    restart: always
    environment:
      - TZ=Asia/Shanghai
    ports:
      - "80:80"
      - "443:443"
      - "6443:6443"
    volumes:
      - ./:/etc/nginx/ # nginx configuration
      - ../certs:/certs # SSL certificates
EOF

nginx/nginx.conf: main configuration

cat > nginx/nginx.conf << EOF

worker_processes 4;

worker_rlimit_nofile 40000;

events {

worker_connections 8192;

}

stream {

include /etc/nginx/conf.d/*.stream;

}

http {

include /etc/nginx/conf.d/*.conf;

}

EOF

docker-compose up: run the container

docker load -i nginx1.19.tgz

docker-compose -f nginx/docker-compose.yml up -d

Image registry

harbor

# For an offline install, copy the installer tgz into the internal network
# wget https://github.com/goharbor/harbor/releases/download/v2.0.0/harbor-offline-installer-v2.0.0.tgz
tar -xzf harbor-offline-installer-v2.0.0.tgz

cd harbor && docker load -i ./harbor.v2.0.0.tar.gz

\cp harbor.yml.tmpl harbor.yml

# hostname

sed -i "s#hostname:.*#hostname: harbor.${domain}#" harbor.yml

# TLS certificate and key

sed -i "s#certificate:.*#certificate: $PWD/../certs/${domain}.cert#" harbor.yml

sed -i "s#private_key:.*#private_key: $PWD/../certs/${domain}.key#" harbor.yml

# Data directory

sed -i "s#data_volume.*#data_volume: $PWD/hbdata#" harbor.yml

# Install (generate the configuration)

./prepare

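# Adjust the generated docker-compose.yml: rename harbor's nginx container so it does not clash with the nginx container above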

sed -i "s#: nginx#: nginx_harbor#" docker-compose.yml

sed -i "s#\"proxy\"#\"nginx\"#" docker-compose.yml

# Change the host port mappings to 8080 and 9443

sed -i "s#80:8080#8080:8080#g" docker-compose.yml

sed -i "s#443:8443#9443:8443#g" docker-compose.yml

docker-compose up -d && cd ..
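The two port `sed` edits above are not idempotent. Running them a second time turns `8080:8080` into `808080:8080` and `9443:8443` into `99443:8443`. The sketch below matches only when the old port is not preceded by another digit. Like the original commands, it assumes the generated docker-compose.yml lists the mappings as `80:8080` and `443:8443`. The function name is hypothetical:

```shell
# remap_harbor_ports FILE: hypothetical helper that rewrites 80:8080 -> 8080:8080 and
# 443:8443 -> 9443:8443 in place; (^|[^0-9]) keeps already-rewritten ports untouched
remap_harbor_ports() {
  sed -i -E 's#(^|[^0-9])80:8080#\18080:8080#g; s#(^|[^0-9])443:8443#\19443:8443#g' "$1"
}
```

Usage: `remap_harbor_ports harbor/docker-compose.yml`, which is safe to repeat.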

nginx/conf.d/harbor.conf: nginx domain proxy

cat > nginx/conf.d/harbor.conf << EOF

upstream harbor {

server ${op}:9443;

}

server {

listen 443 ssl;

server_name harbor.${domain};

ssl_certificate /certs/${domain}.cert;

ssl_certificate_key /certs/${domain}.key;

client_max_body_size 5000m; # allow large uploads (image layers)

location / {

proxy_pass https://harbor;

proxy_set_header Host \$host;

proxy_set_header X-Real-IP \$remote_addr;

proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto \$scheme;

proxy_set_header Upgrade \$http_upgrade;

proxy_set_header Connection "Upgrade";

}

}

EOF

# Restart nginx so the configuration takes effect

docker restart nginx

nexus3 (recommended)

nexus3/docker-compose.yml: container configuration

mkdir -p ./nexus3/data &&
chmod 777 ./nexus3/data

cat > nexus3/docker-compose.yml << EOF
version: "3.1"
services:
  nexus3:
    image: sonatype/nexus3:3.24.0
    container_name: nexus3
    restart: always
    privileged: true
    environment:
      - TZ=Asia/Shanghai
    ports:
      - "8081:8081"
      - "8082:8082"
    volumes:
      - ./data:/nexus-data
EOF

docker load -i nexus3.3.24.0.tgz

docker-compose -f nexus3/docker-compose.yml up -d

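nginx/conf.d/nexus3.conf: nginx domain proxy (nexus3 reuses the harbor.${domain} name, so the node-side settings work unchanged; use either this file or harbor.conf, not both, because both claim harbor.${domain} on port 443)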

cat > nginx/conf.d/nexus3.conf << EOF

upstream nexus3_http {

server ${op}:8081;

}

server {

listen 80;

server_name harbor.${domain};

location / {

proxy_pass http://nexus3_http;

proxy_set_header Host \$host;

proxy_set_header X-Real-IP \$remote_addr;

proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto \$scheme;

proxy_set_header Upgrade \$http_upgrade;

proxy_set_header Connection "Upgrade";

}

}

upstream nexus3 {

server ${op}:8082;

}

server {

listen 443 ssl;

server_name harbor.${domain};

ssl_certificate /certs/${domain}.cert;

ssl_certificate_key /certs/${domain}.key;

client_max_body_size 5000m; # allow large uploads (image layers)

location / {

proxy_pass http://nexus3;

proxy_set_header Host \$host;

proxy_set_header X-Real-IP \$remote_addr;

proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto \$scheme;

proxy_set_header Upgrade \$http_upgrade;

proxy_set_header Connection "Upgrade";

}

}

EOF

# Restart nginx so the configuration takes effect

docker restart nginx

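Configuration

In the nexus3 web UI, change the admin password to Harbor12345 and allow anonymous read access.

Create a docker (hosted) repository.

Give it an HTTP connector on port 8082.

- Allow anonymous docker pull (leave unchecked)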

Rancher

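Image import

# Run from /home/rancher/rancher, where rancher-load-images.sh, rancher-images.txt and
# rancher-images.tar.gz have been placed (../certs is then /home/rancher/certs)
cd rancher
# Trust the registry CA
mkdir -p /etc/docker/certs.d/harbor.${domain}
\cp ../certs/ca.crt /etc/docker/certs.d/harbor.${domain}/
\cp ../certs/${domain}.crt /etc/pki/ca-trust/source/anchors/${domain}.crt
update-ca-trust extract
# Log in
docker login harbor.${domain} -u admin -p Harbor12345
# Push the images into the registry
sh ./rancher-load-images.sh --image-list ./rancher-images.txt --registry harbor.${domain}
# Handle busybox separately so applications can use it
docker tag rancher/busybox harbor.${domain}/library/busybox
docker push harbor.${domain}/library/busybox
# Remove the local copies, keeping the rancher/rancher:v2.4.4 image the server container below needs
docker rmi $(docker images --format '{{.Repository}}:{{.Tag}}' | grep rancher | grep -v '^rancher/rancher:v2.4.4$')
cd ..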

rancher/docker-compose.yml: container configuration

# Access Rancher by IP address; importing a cluster failed when Rancher was accessed by domain name

cat > rancher/docker-compose.yml << EOF
version: '3.1'
services:
  rancher:
    image: rancher/rancher:v2.4.4
    container_name: rancher
    restart: unless-stopped
    environment:
      - TZ=Asia/Shanghai
      - AUDIT_LEVEL=3
    ports:
      - "8443:443"
    volumes:
      - ./rancher:/var/lib/rancher
      - ./auditlog:/var/log/auditlog
EOF

# docker-compose up

docker-compose -f rancher/docker-compose.yml up -d

Postgres

pg_k3s/docker-compose.yml: database for K3s

cat > pg_k3s/docker-compose.yml << EOF
version: '3.1'
services:
  pg:
    image: postgres:12
    container_name: pg_k3s
    restart: always
    environment:
      - TZ=Asia/Shanghai
      - POSTGRES_PASSWORD=12345
    ports:
      - "5432:5432"
    volumes:
      - ./pgdata:/var/lib/postgresql/data
EOF

# Run the container

docker load -i pg12.tgz

docker-compose -f pg_k3s/docker-compose.yml up -d
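K3s reaches this database through a `--datastore-endpoint` URL. The Docker-based install script below uses the database name `k3s` and the containerd variant uses `kubernetes`. Because the value is a URL, a password containing characters such as `@` or `:` would need percent-encoding. A hypothetical helper that builds the URL in the form this article uses:

```shell
# pg_endpoint USER PASSWORD HOST DB: hypothetical helper building the K3s
# --datastore-endpoint value used in this article (port 5432, TLS disabled)
pg_endpoint() {
  printf 'postgres://%s:%s@%s:5432/%s?sslmode=disable\n' "$1" "$2" "$3" "$4"
}
```

Usage: `--datastore-endpoint=$(pg_endpoint postgres 12345 "$op" k3s)`.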

K3s resources & scripts

Public resources

# k3s binary and images; make sure to choose amd64

China mirror: http://mirror.cnrancher.com/

# Download

wget http://rancher-mirror.cnrancher.com/k3s/v1.18.8-k3s1/k3s-airgap-images-amd64.tar

wget http://rancher-mirror.cnrancher.com/k3s/v1.18.8-k3s1/k3s

curl -o install.sh http://rancher-mirror.cnrancher.com/k3s/k3s-install.sh
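The three downloads depend only on the K3s version and the CPU architecture. The sketch below prints them for another version or architecture. It assumes the mirror keeps upstream's asset names (`k3s` for amd64, `k3s-<arch>` otherwise, `k3s-airgap-images-<arch>.tar`); check the mirror listing before relying on that. The function name is hypothetical:

```shell
# k3s_urls [VERSION] [ARCH]: hypothetical helper printing the airgap image, binary and
# install-script URLs on the cnrancher mirror (asset naming assumed to match upstream)
k3s_urls() {
  local ver="${1:-v1.18.8-k3s1}" arch="${2:-amd64}"
  local base="http://rancher-mirror.cnrancher.com/k3s"
  local bin="k3s"
  if [ "$arch" != "amd64" ]; then
    bin="k3s-${arch}"
  fi
  echo "${base}/${ver}/k3s-airgap-images-${arch}.tar"
  echo "${base}/${ver}/${bin}"
  echo "${base}/k3s-install.sh"
}
```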

Scripts

Uninstall

k3s/remove.sh

cat > k3s/remove.sh << 'END'

#!/bin/bash --login

k3s-killall.sh

k3s-uninstall.sh

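# Only relevant to the Docker-based install; does nothing when docker is absent
command -v docker >/dev/null && docker ps -aq --filter name=k8s_ | xargs -r docker rm -f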

rm ~/.kube -rf

END

Install

for docker (pg)

k3s/docker_installed.sh

cat > k3s/docker_installed.sh << END

#!/bin/bash --login

dir=\$(cd \`dirname \$0\`; pwd)

cd \$dir

mkdir -p /var/lib/rancher/k3s/agent/images/

\cp ./k3s-airgap-images-amd64.tar /var/lib/rancher/k3s/agent/images/

chmod a+x ./k3s ./install.sh

## Install (absolute kubeconfig path: ~ is not expanded inside double quotes)
export INSTALL_K3S_SKIP_DOWNLOAD=true
export INSTALL_K3S_EXEC="server --docker --no-deploy traefik --datastore-endpoint=postgres://postgres:12345@${op}:5432/k3s?sslmode=disable --write-kubeconfig /root/.kube/config --write-kubeconfig-mode 666"

\cp ./k3s /usr/local/bin/ && ./install.sh

END

containerd (recommended)

k3s/containerd_installed.sh

cat > k3s/containerd_installed.sh << END

#!/bin/bash --login

dir=\$(cd \`dirname \$0\`; pwd)

cd \$dir

mkdir -p /var/lib/rancher/k3s/agent/images/

\cp ./k3s-airgap-images-amd64.tar /var/lib/rancher/k3s/agent/images/

chmod a+x ./k3s ./install.sh

# Alias docker to k3s crictl

echo "alias docker='k3s crictl'" >> /etc/profile

source /etc/profile

## CA for the harbor registry

\cp ../certs/ca.crt /etc/ssl/certs/

## Trust

\cp ../certs/\${domain}.crt /etc/pki/ca-trust/source/anchors/\${domain}.crt

update-ca-trust extract

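## Configure /etc/rancher/k3s/registries.yaml
mkdir -p /etc/rancher/k3s
cat > /etc/rancher/k3s/registries.yaml << EOF
mirrors:
  docker.io: # if Rancher itself will be installed into this K3s cluster, do not mirror docker.io: it interferes with pulling Rancher's images
    endpoint:
      - "https://harbor.\${domain}"
configs:
  # keyed by the registry host used in the endpoint above, not by docker.io
  "harbor.\${domain}":
    auth:
      username: admin # registry user name
      password: Harbor12345 # registry password
    tls:
      cert_file: /home/certs/\${domain}.cert # client certificate for the registry
      key_file: /home/certs/\${domain}.key # client key for the registry
      ca_file: /home/certs/ca.crt # CA certificate (ca.crt, not the ca.key private key)
EOF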

END

Remote command script

xcall.sh

cat > xcall.sh << 'END'

#!/bin/bash --login

if [ $# = 0 ]; then

echo no params

exit;

fi

params=$@

for ip in ${master_ips[@]}

do

echo "============ ssh [m${ip##*.}] and run [${params}] ========"

ssh m${ip##*.} ${params}

done

END

# Symlink into PATH

chmod a+x xcall.sh && ln -sf $PWD/xcall.sh /usr/local/bin
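`xcall.sh` joins its arguments into one string and word-splits it again before handing it to `ssh`, so it helps to see exactly what would run. Below is a dry-run sketch (hypothetical name `xcall_dry`, reading the same `master_ips` array) that prints the commands instead of executing them:

```shell
# xcall_dry ARGS...: hypothetical dry-run companion to xcall.sh; prints the ssh
# command for each master (named m<last octet>) instead of running it
xcall_dry() {
  local ip
  for ip in "${master_ips[@]}"; do
    # "$*" joins the arguments with spaces, like params=$@ in xcall.sh
    printf 'ssh m%s %s\n' "${ip##*.}" "$*"
  done
}
```

Usage: `xcall_dry chmod a+x '/home/docker/*.sh'`. Quoting the glob keeps op's shell from expanding it, so it reaches the remote shell intact.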

File (directory) copy script

xscp.sh

cat > xscp.sh << 'END'

#!/bin/bash --login

for ip in ${master_ips[@]}

do

echo "========= scp [$1] to [m${ip##*.}:/home/$1] ========="

scp -r $1 m${ip##*.}:/home/$(dirname $1)

done

END

# Symlink into PATH

chmod a+x xscp.sh && ln -sf $PWD/xscp.sh /usr/local/bin

K3s load balancer configuration

nginx/conf.d/k3s.stream (re-run this step whenever master_ips changes)

# stream 6443

cat > nginx/conf.d/k3s.stream << EOF

upstream k3s_api {

least_conn;

EOF

# master ips

for ip in ${master_ips[@]}

do

cat >> nginx/conf.d/k3s.stream << EOF

server $ip:6443 max_fails=3 fail_timeout=5s;

EOF

done

cat >> nginx/conf.d/k3s.stream << EOF

}

server {

listen 6443;

proxy_pass k3s_api;

}

EOF

# 80

cat > nginx/conf.d/http80.conf << EOF

upstream http80 {

least_conn;

EOF

# master ips

for ip in ${master_ips[@]}

do

cat >> nginx/conf.d/http80.conf << EOF

server $ip:80 max_fails=3 fail_timeout=5s;

EOF

done

# 80

cat >> nginx/conf.d/http80.conf << EOF

}

server {

listen 80;

server_name *.${domain};

location / {

proxy_pass http://http80;

proxy_set_header Host \$host;

proxy_set_header X-Real-IP \$remote_addr;

proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto \$scheme;

proxy_set_header Upgrade \$http_upgrade;

proxy_set_header Connection "Upgrade";

}

}

EOF

# Restart nginx so the configuration takes effect

docker restart nginx
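The three heredoc fragments and the loop above assemble `k3s.stream` piece by piece. The same file can come from a single function that takes the master IPs as arguments, which makes regenerating it after a change to `master_ips` a one-liner. The function name is hypothetical:

```shell
# render_k3s_stream IP...: hypothetical helper printing the nginx stream config that
# balances TCP 6443 across the given K3s servers (least_conn, passive health checks)
render_k3s_stream() {
  local ip
  echo "upstream k3s_api {"
  echo "    least_conn;"
  for ip in "$@"; do
    echo "    server ${ip}:6443 max_fails=3 fail_timeout=5s;"
  done
  echo "}"
  echo "server {"
  echo "    listen 6443;"
  echo "    proxy_pass k3s_api;"
  echo "}"
}
```

Usage: `render_k3s_stream "${master_ips[@]}" > nginx/conf.d/k3s.stream && docker exec nginx nginx -t && docker restart nginx`. Running `nginx -t` first catches syntax errors before the restart.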

K3s cluster deployment

Passwordless SSH login

# Generate ~/.ssh/id_rsa and ~/.ssh/id_rsa.pub

ssh-keygen -t rsa &&

rm -f ~/.ssh/known_hosts &&

# Copy the public key to each master

for ip in ${master_ips[@]}

do

ssh-copy-id -f m${ip##*.}

done

Change hostnames

for ip in ${master_ips[@]}

do

ssh m${ip##*.} hostnamectl set-hostname m${ip##*.}

done

Copy resources

# First put the K3s install files (k3s, install.sh, k3s-airgap-images-amd64.tar) into the k3s directory

xscp.sh certs/ &&

xscp.sh docker/ &&

xscp.sh k3s/

init

xcall.sh chmod a+x /home/docker/*.sh &&

xcall.sh chmod a+x /home/k3s/*.sh &&

# Use the DVD as the yum repository
# xcall.sh /home/docker/yum_cdrom.sh &&
# Or use the LAN yum repository
# xcall.sh /home/docker/yum_lan.sh &&
xcall.sh /home/docker/init.sh
# The machines reboot after init; wait until they are back up

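DNS configuration

# Configure for CoreDNS. The next two lines assume systemd-resolved is running (as on Ubuntu);
# CentOS 7 does not enable it by default, so check that /run/systemd/resolve/resolv.conf exists first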

xcall.sh ln -sf /run/systemd/resolve/resolv.conf /etc/resolv.conf &&

xcall.sh sed -i "s/.*DNSStubListener.*/DNSStubListener=no/" /etc/systemd/resolved.conf &&

# Remove the dnsmasq DNS service

xcall.sh yum remove -y dnsmasq
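Pointing `/etc/resolv.conf` at a file that does not exist leaves the host without DNS. A guarded variant only links when the systemd-resolved file is present. It is sketched below as a hypothetical function that accepts optional paths so it can be exercised on scratch files:

```shell
# link_resolved_conf [SRC] [DST]: hypothetical helper that links DST to SRC only when SRC
# exists (i.e. systemd-resolved is in use); otherwise it leaves DST untouched and returns 1
link_resolved_conf() {
  local src="${1:-/run/systemd/resolve/resolv.conf}" dst="${2:-/etc/resolv.conf}"
  if [ -f "$src" ]; then
    ln -sf "$src" "$dst"
  else
    echo "skip: $src not found, $dst left unchanged" >&2
    return 1
  fi
}
```

Across all masters, the same guard fits in one `xcall.sh` call: `xcall.sh "test -f /run/systemd/resolve/resolv.conf && ln -sf /run/systemd/resolve/resolv.conf /etc/resolv.conf"`.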

install

Cleanup before reinstalling

# If K3s was installed before, uninstall and clean it up first

xcall.sh /home/k3s/remove.sh &&

# Reset the Postgres database

docker-compose -f ./pg_k3s/docker-compose.yml down &&

rm -rf ./pg_k3s/pgdata &&

docker-compose -f ./pg_k3s/docker-compose.yml up -d &&

# Reset Rancher

docker-compose -f ./rancher/docker-compose.yml down &&

rm -rf ./rancher/auditlog/ ./rancher/rancher/ &&

docker-compose -f ./rancher/docker-compose.yml up -d

with docker

xcall.sh /home/docker/install_docker.sh &&

xcall.sh /home/k3s/docker_installed.sh

with containerd (recommended)

postgresql

xcall.sh /home/k3s/containerd_installed.sh &&

xcall.sh \cp /home/k3s/k3s /usr/local/bin/ &&

# Absolute kubeconfig path: ~ is not expanded inside double quotes
xcall.sh "INSTALL_K3S_SKIP_DOWNLOAD=true INSTALL_K3S_EXEC=\"server --datastore-endpoint=postgres://postgres:12345@${op}:5432/kubernetes?sslmode=disable --write-kubeconfig /root/.kube/config --write-kubeconfig-mode 666\" /home/k3s/install.sh"

DQlite

# First node
ssh ${master_ips[0]} /home/k3s/containerd_installed.sh &&
ssh ${master_ips[0]} \cp /home/k3s/k3s /usr/local/bin/ &&
ssh ${master_ips[0]} "INSTALL_K3S_SKIP_DOWNLOAD=true INSTALL_K3S_EXEC=\"--cluster-init --write-kubeconfig /root/.kube/config --write-kubeconfig-mode 666\" /home/k3s/install.sh"
# Fetch the join token
token=$(ssh ${master_ips[0]} "cat /var/lib/rancher/k3s/server/node-token")
# Remaining nodes
for ((i=1; i<${#master_ips[*]}; i++))
do
# Quoted so the asterisks are not expanded as a filename glob
echo "************* ${master_ips[i]} **************"
ssh ${master_ips[i]} /home/k3s/containerd_installed.sh
ssh ${master_ips[i]} \cp /home/k3s/k3s /usr/local/bin/
ssh ${master_ips[i]} "INSTALL_K3S_SKIP_DOWNLOAD=true K3S_TOKEN=${token} INSTALL_K3S_EXEC=\"server --server https://${master_ips[0]}:6443 --write-kubeconfig /root/.kube/config --write-kubeconfig-mode 666\" /home/k3s/install.sh"

done
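The only difference between the first server and the others is the role part of `INSTALL_K3S_EXEC`. The first server initializes the embedded datastore with `--cluster-init`; the others join it with `--server <url>` plus `K3S_TOKEN`. The command above omits `server` for the first node, which should still work because install.sh falls back to `server` when no command is given and `K3S_URL` is unset. Spelling it out is equivalent. Below is a hypothetical helper that builds both forms:

```shell
# k3s_exec_args init|SERVER_URL: hypothetical helper printing INSTALL_K3S_EXEC for the
# first server (--cluster-init) or for a server joining SERVER_URL
k3s_exec_args() {
  local common="--write-kubeconfig /root/.kube/config --write-kubeconfig-mode 666"
  if [ "$1" = "init" ]; then
    echo "server --cluster-init ${common}"
  else
    echo "server --server $1 ${common}"
  fi
}
```

Usage: `INSTALL_K3S_EXEC=\"$(k3s_exec_args https://${master_ips[0]}:6443)\"` inside the ssh command string.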

# Check the registry settings K3s wrote into the containerd config

xcall.sh cat /var/lib/rancher/k3s/agent/etc/containerd/config.toml

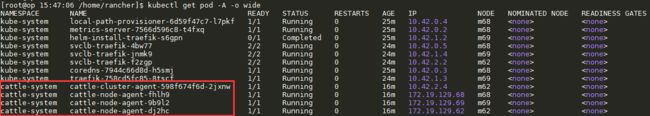

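Cluster management

# Check that the pods are healthy; if any pod is abnormal, run: xcall.sh systemctl restart k3s
# Symlink kubectl to the k3s binary (k3s behaves as kubectl when invoked under that name)
chmod a+x k3s/k3s && ln -sf $PWD/k3s/k3s /usr/local/bin/kubectl
# Copy the cluster kubeconfig to this host
mkdir -p ~/.kube && scp ${master_ips[0]}:/root/.kube/config ~/.kube/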

kubectl get no -A -o wide

kubectl get pod -A -o wide
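On larger listings, it is easier to filter for problems than to scan by eye. A hypothetical filter that reads `kubectl get nodes` output and prints every node whose STATUS column is not exactly `Ready` (so `Ready,SchedulingDisabled` is reported too):

```shell
# not_ready_nodes: hypothetical filter for `kubectl get nodes` output on stdin;
# skips the header and prints the NAME of each node whose STATUS is not "Ready"
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`. Empty output means every node is Ready.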

Import K3s into Rancher

Rancher -> Add Cluster -> Import -> copy the generated command -> paste and run it

# Check that the pods are healthy; if any pod is abnormal, run: xcall.sh systemctl restart k3s

kubectl get pod -A -o wide

References

https://rancher2.docs.rancher.cn/

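Change the cluster IP range

On every host, edit /var/lib/rancher/k3s/agent/etc/flannel/net-conf.json. K3s regenerates this file from its --cluster-cidr setting when it starts, so a hand edit may be overwritten on restart; setting --cluster-cidr in INSTALL_K3S_EXEC at install time is the more durable route.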

Next chapter

Longhorn storage HA deployment