Evaluating Classification Results

- 1. Confusion Matrix

- 1.1 Precision

- 1.2 Recall

- 2. Implementing the Confusion Matrix

- 2.1 TN

- 2.2 FP

- 2.3 FN

- 2.4 TP

- 2.5 Confusion matrix

- 2.6 Precision: precision_score

- 2.7 Recall

- 2.8 confusion_matrix in scikit-learn

- 3. F1 Score

- 4. The Precision-Recall Trade-off

- 5. ROC Curve

- 6. ROC Curve in Multiclass Classification

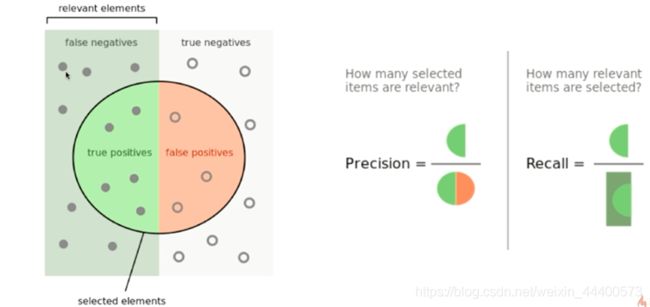

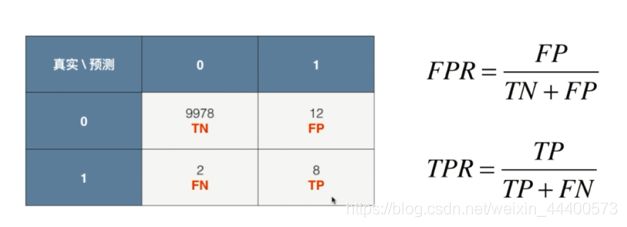

1. Confusion Matrix

1.1 Precision

- Treat class 1 as the positive class: it is the outcome we actually care about predicting.

1.2 Recall
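For reference, these are the standard definitions of the two metrics in terms of confusion-matrix counts, with class 1 as the positive class. TP, FP and FN are the true positive, false positive and false negative counts computed in section 2.

```latex
\[
\text{precision} = \frac{TP}{TP + FP},
\qquad
\text{recall} = \frac{TP}{TP + FN}
\]
```

Precision asks "of everything predicted as 1, how much really is 1?", while recall asks "of everything that really is 1, how much did we find?".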

2. Implementing the Confusion Matrix

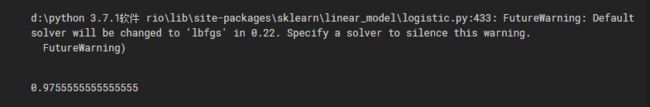

- Load the data and train the model

import numpy as np

from sklearn import datasets

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

digits = datasets.load_digits()

X = digits.data

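y = digits.target.copy()  # copy so the original labels are left untouched

y[digits.target==9] = 1  # positive class: the digit 9

y[digits.target!=9] = 0  # negative class: every other digit, which makes the data highly skewed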

X_train,X_test,y_train,y_test = train_test_split(X,y,random_state=666)

log_reg = LogisticRegression()

log_reg.fit(X_train,y_train)

log_reg.score(X_test,y_test)

y_log_predict = log_reg.predict(X_test)

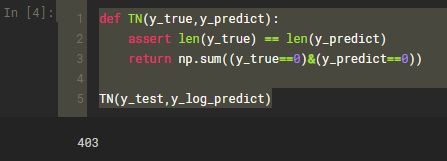

2.1 TN

def TN(y_true,y_predict):

    # True negatives: actual 0, predicted 0

    assert len(y_true) == len(y_predict)

    return np.sum((y_true==0)&(y_predict==0))

TN(y_test,y_log_predict)

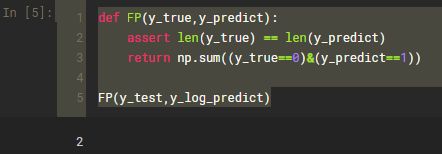

2.2 FP

def FP(y_true,y_predict):

    # False positives: actual 0, predicted 1

    assert len(y_true) == len(y_predict)

    return np.sum((y_true==0)&(y_predict==1))

FP(y_test,y_log_predict)

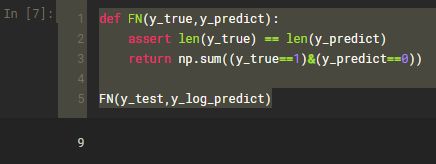

2.3 FN

def FN(y_true,y_predict):

    # False negatives: actual 1, predicted 0

    assert len(y_true) == len(y_predict)

    return np.sum((y_true==1)&(y_predict==0))

FN(y_test,y_log_predict)

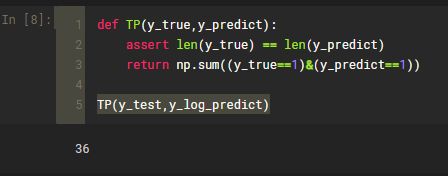

2.4 TP

def TP(y_true,y_predict):

    # True positives: actual 1, predicted 1

    assert len(y_true) == len(y_predict)

    return np.sum((y_true==1)&(y_predict==1))

TP(y_test,y_log_predict)

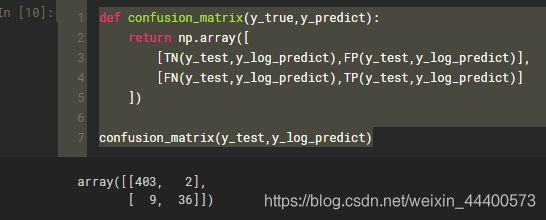

2.5 混淆矩阵

def confusion_matrix(y_true,y_predict):

    # Rows are true labels (0, 1); columns are predicted labels (0, 1)

    return np.array([

        [TN(y_true,y_predict),FP(y_true,y_predict)],

        [FN(y_true,y_predict),TP(y_true,y_predict)]

    ])

confusion_matrix(y_test,y_log_predict)

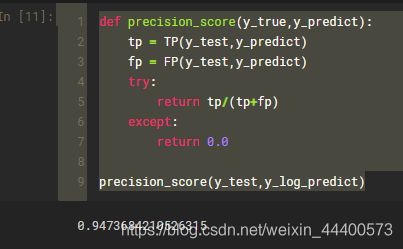
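To make the layout of the matrix concrete, here is a minimal dependency-free sketch on ten made-up labels. The data and the helper name `count` are hypothetical and are not part of the digits example above.

```python
# Toy labels invented for illustration; 1 is the positive class.
y_true = [0, 0, 0, 0, 1, 1, 1, 0, 1, 0]
y_pred = [0, 0, 1, 0, 1, 0, 1, 0, 1, 0]

def count(y_true, y_pred, actual, predicted):
    """Count samples with the given (actual, predicted) label pair."""
    return sum(1 for t, p in zip(y_true, y_pred)
               if t == actual and p == predicted)

tn = count(y_true, y_pred, 0, 0)  # actual 0, predicted 0
fp = count(y_true, y_pred, 0, 1)  # actual 0, predicted 1
fn = count(y_true, y_pred, 1, 0)  # actual 1, predicted 0
tp = count(y_true, y_pred, 1, 1)  # actual 1, predicted 1

# Rows are true labels, columns are predicted labels.
matrix = [[tn, fp],
          [fn, tp]]
print(matrix)  # [[5, 1], [1, 3]]
```

The four cells always sum to the number of samples, which is a quick sanity check for any hand-written implementation.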

2.6 Precision: precision_score

def precision_score(y_true,y_predict):

    tp = TP(y_true,y_predict)

    fp = FP(y_true,y_predict)

    # NumPy integers do not raise on division by zero (they yield nan), so check explicitly

    if tp + fp == 0:

        return 0.0

    return tp/(tp+fp)

precision_score(y_test,y_log_predict)

2.7 Recall

def recall_score(y_true,y_predict):

    tp = TP(y_true,y_predict)

    fn = FN(y_true,y_predict)

    # NumPy integers do not raise on division by zero (they yield nan), so check explicitly

    if tp + fn == 0:

        return 0.0

    return tp/(tp+fn)

recall_score(y_test,y_log_predict)

==Recall is the fraction of samples whose true label is 1 that the model correctly predicts as 1 (the TPs).==

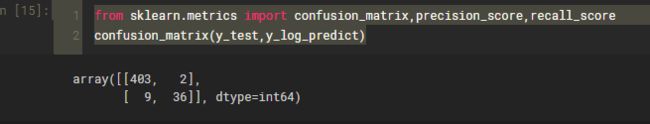

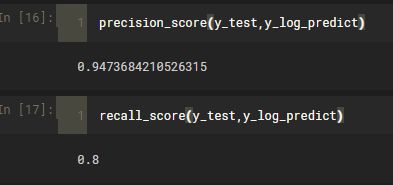
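Precision and recall matter most on skewed data like the "digit 9 vs. everything else" labels used here, where accuracy alone can look excellent for a useless model. The sketch below uses an invented test set of 990 negatives and 10 positives and a hypothetical "model" that always predicts 0.

```python
# Hypothetical skewed test set: 990 negatives, 10 positives.
y_true = [0] * 990 + [1] * 10
# A useless "model" that always predicts the negative class.
y_pred = [0] * len(y_true)

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)

accuracy = correct / len(y_true)
recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0

print(accuracy)  # 0.99
print(recall)    # 0.0
```

Accuracy is 99% even though not a single positive sample is found, which is exactly what a recall of 0 exposes.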

2.8 confusion_matrix in scikit-learn

from sklearn.metrics import confusion_matrix,precision_score,recall_score

confusion_matrix(y_test,y_log_predict)

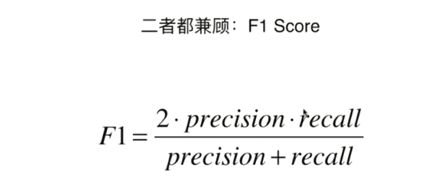

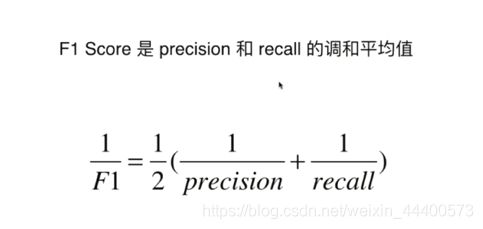

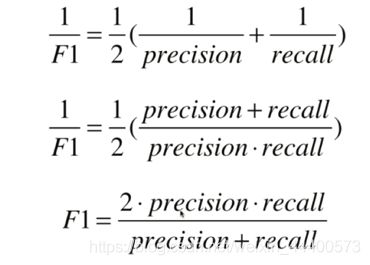

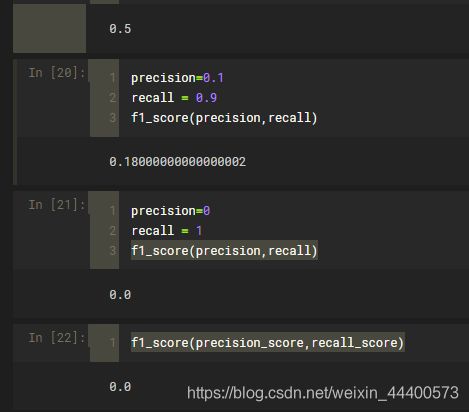

3. F1 Score

def f1_score(precision,recall):

    # Harmonic mean of precision and recall; defined as 0 when both are 0

    if precision + recall == 0:

        return 0.0

    return 2*precision*recall/(precision+recall)

precision = 0.5

recall = 0.5

f1_score(precision,recall)

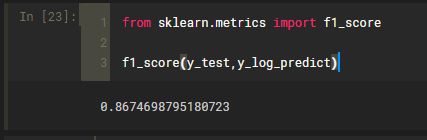
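Because F1 is a harmonic mean, it stays high only when precision and recall are both high. The following sketch compares it with the arithmetic mean on made-up values; the function name `f1` is chosen here to avoid clashing with the `f1_score` defined above.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

balanced = f1(0.5, 0.5)            # both metrics equal
lopsided = f1(0.1, 0.9)            # one metric very low
arithmetic_mean = (0.1 + 0.9) / 2  # plain average of the lopsided pair

print(balanced)         # 0.5
print(round(lopsided, 2))  # 0.18
print(arithmetic_mean)  # 0.5
```

The arithmetic mean rates the lopsided pair as highly as the balanced one, while F1 drops to about 0.18 and so penalizes the weak precision.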

from sklearn.metrics import f1_score

f1_score(y_test,y_log_predict)

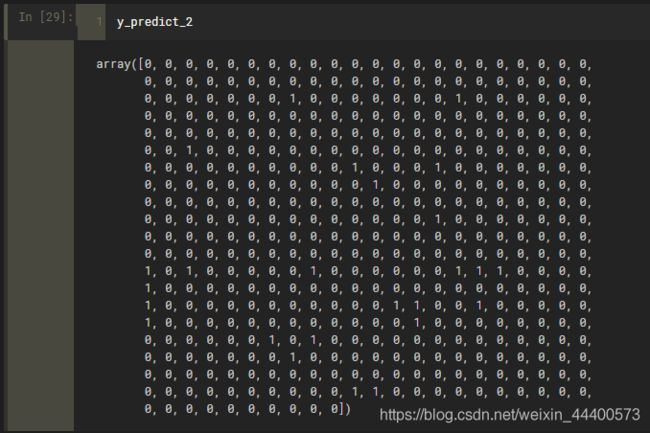

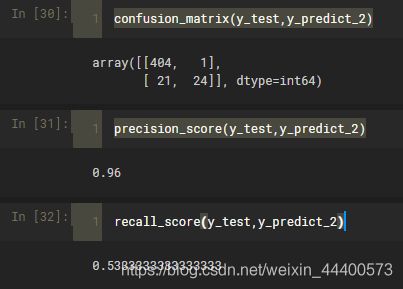

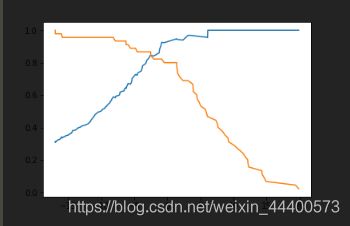

4. The Precision-Recall Trade-off

- decision_function

decision_scores = log_reg.decision_function(X_test)

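y_predict_2 = np.array(decision_scores>=5,dtype='int')  # stricter than the default threshold of 0: recall can only drop

y_predict_3 = np.array(decision_scores>=-5,dtype='int')  # more lenient than 0: recall can only rise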

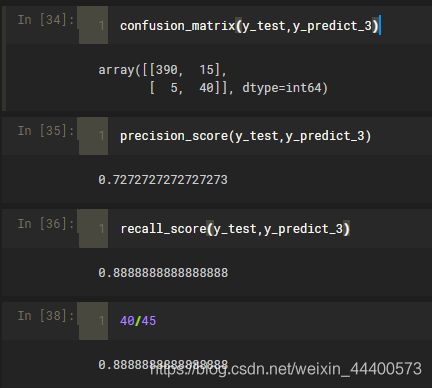
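The effect of moving the threshold can be traced by hand on a small example. This is a sketch with invented decision scores and labels, not output from `log_reg`; the helper `precision_recall` is hypothetical.

```python
# Made-up decision scores and true labels (1 is the positive class).
scores = [-8.0, -6.0, -3.0, -1.0, 0.5, 2.0, 4.0, 6.0, 9.0]
y_true = [0, 0, 1, 0, 0, 1, 1, 1, 1]

def precision_recall(y_true, scores, threshold):
    """Predict 1 when score >= threshold, then compute both metrics."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall

results = {th: precision_recall(y_true, scores, th) for th in (-5, 0, 5)}
for th, (p, r) in results.items():
    print(th, round(p, 3), round(r, 3))
# -5 0.714 1.0
# 0 0.8 0.8
# 5 1.0 0.4
```

On this data, raising the threshold from -5 to 5 lifts precision from about 0.71 to 1.0 while recall falls from 1.0 to 0.4.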

decision_scores = log_reg.decision_function(X_test)

precision = []

recall = []

thresholds = np.arange(np.min(decision_scores),np.max(decision_scores),0.1)

for threshold in thresholds:

    y_predict = np.array(decision_scores >= threshold,dtype ='int')

    precision.append(precision_score(y_test,y_predict))

    recall.append(recall_score(y_test,y_predict))

import matplotlib.pyplot as plt

plt.plot(thresholds,precision)

plt.plot(thresholds,recall)

plt.show()

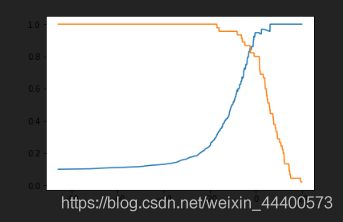

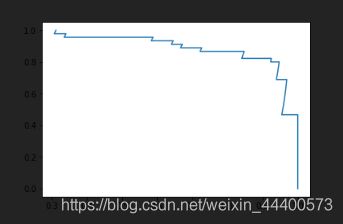

- Precision-recall curve

plt.plot(precision,recall)

plt.show()

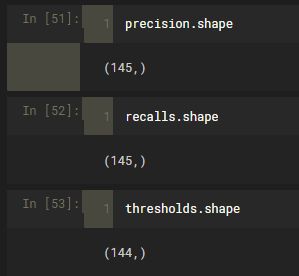

- Precision-recall curve in scikit-learn

from sklearn.metrics import precision_recall_curve

precisions,recalls,thresholds = precision_recall_curve(y_test,decision_scores)

plt.plot(thresholds,precisions[:-1])  # the returned arrays have one more entry than thresholds

plt.plot(thresholds,recalls[:-1])

plt.show()

plt.plot(precisions,recalls)

plt.show()

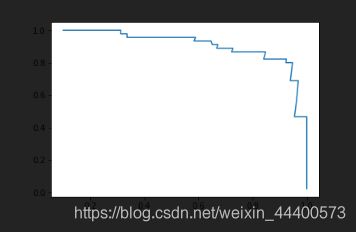

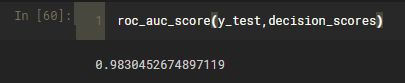

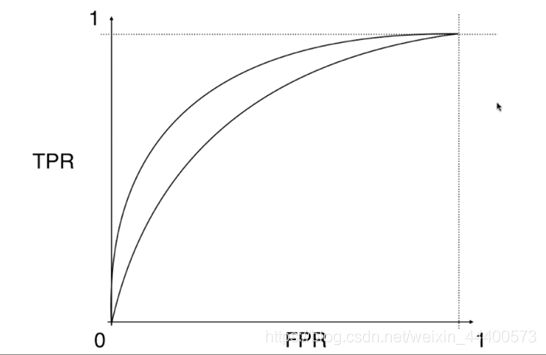

5. ROC Curve

digits = datasets.load_digits()

X = digits.data

y = digits.target.copy()

y[digits.target==9] = 1

y[digits.target!=9] = 0

X_train,X_test,y_train,y_test = train_test_split(X,y,random_state=666)

log_reg = LogisticRegression()

log_reg.fit(X_train,y_train)

log_reg.score(X_test,y_test)

decision_scores = log_reg.decision_function(X_test)

from sklearn.metrics import roc_curve,roc_auc_score

fprs,tprs,thresholds = roc_curve(y_test,decision_scores)

plt.plot(fprs,tprs)

plt.show()

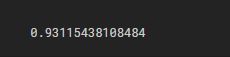

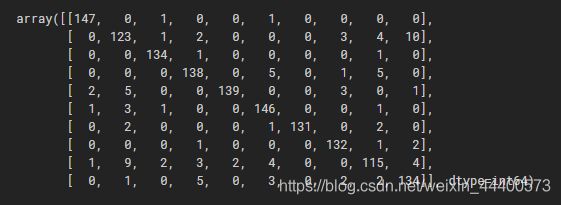

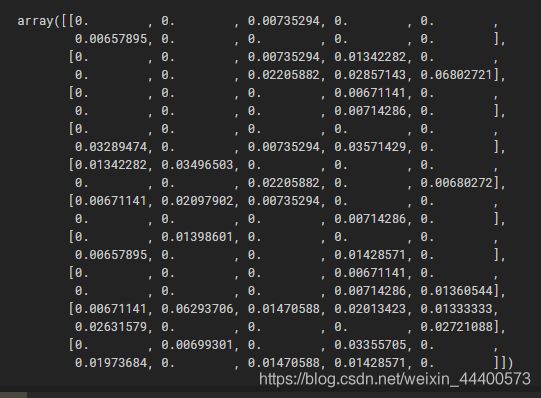
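The ROC curve plots TPR (the same quantity as recall, TP / (TP + FN)) against FPR (FP / (TN + FP)) as the threshold is lowered, and the area under it (AUC) summarizes the curve in one number. Below is a dependency-free sketch of that computation on invented scores; `roc_points` and `auc` are hypothetical helpers, not the scikit-learn implementation.

```python
# Made-up decision scores and true labels (1 is the positive class).
scores = [-8.0, -6.0, -3.0, -1.0, 0.5, 2.0, 4.0, 6.0, 9.0]
y_true = [0, 0, 1, 0, 0, 1, 1, 1, 1]

def roc_points(y_true, scores):
    """Return (FPR, TPR) pairs, one per distinct threshold, high to low."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted 1
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 1)
        fp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 0)
        points.append((fp / neg, tp / pos))
    return points

def auc(points):
    """Area under the piecewise-linear curve (trapezoidal rule)."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

points = roc_points(y_true, scores)
area = auc(points)
print(round(area, 3))  # 0.9
```

An AUC of 0.9 here means that 18 of the 20 positive-negative pairs are ranked correctly, with the positive sample scoring higher than the negative one.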

6. ROC Curve in Multiclass Classification

digits = datasets.load_digits()

X = digits.data

y = digits.target

X_train,X_test,y_train,y_test = train_test_split(X,y,test_size = 0.8,random_state=666)

log_reg = LogisticRegression()

log_reg.fit(X_train,y_train)

log_reg.score(X_test,y_test)

y_predict = log_reg.predict(X_test)

precision_score(y_test,y_predict,average = "micro") # multiclass: without the average argument this call raises an error
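To show what an averaging mode does in the multiclass case, here is a hand-computed sketch of micro- and macro-averaged precision on ten invented three-class labels. It illustrates the two definitions and is not scikit-learn's code.

```python
# Made-up three-class labels for illustration.
y_true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 2, 2, 2, 2, 0]
labels = sorted(set(y_true) | set(y_pred))

tp = {c: 0 for c in labels}  # correct predictions of class c
fp = {c: 0 for c in labels}  # wrong predictions of class c
for t, p in zip(y_true, y_pred):
    if t == p:
        tp[p] += 1
    else:
        fp[p] += 1

# Micro: pool the counts of all classes, then divide once.
micro = sum(tp.values()) / (sum(tp.values()) + sum(fp.values()))

# Macro: compute precision per class, then take the unweighted mean.
per_class = [tp[c] / (tp[c] + fp[c]) if (tp[c] + fp[c]) > 0 else 0.0
             for c in labels]
macro = sum(per_class) / len(per_class)

print(micro)            # 0.7
print(round(macro, 3))  # 0.667
```

When every sample has exactly one label, micro-averaged precision equals plain accuracy, whereas macro averaging gives each class the same weight regardless of its size.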

confusion_matrix(y_test,y_predict)

cfm = confusion_matrix(y_test,y_predict)

plt.figure(figsize=(10,8))

plt.matshow(cfm,cmap=plt.cm.gray)

plt.show()

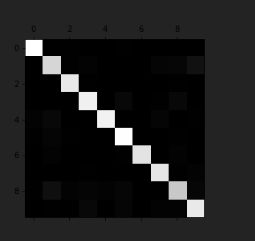

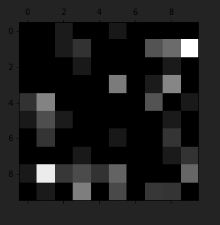

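row_sums = np.sum(cfm,axis=1,keepdims=True) # sum of each row, kept as a column so every row is divided by its own total

err_matrix = cfm/row_sums

np.fill_diagonal(err_matrix,0)

err_matrix

plt.matshow(err_matrix,cmap=plt.cm.gray)

plt.show()

# The brighter a cell, the more errors were made there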
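The error matrix divides each row of the confusion matrix by that row's total and zeroes the diagonal, so each remaining cell is the share of a true class that was mistaken for another class. A minimal dependency-free sketch on an invented 3x3 confusion matrix:

```python
# Made-up confusion matrix: rows are true classes, columns are predictions.
cfm = [
    [50, 2, 3],
    [4, 40, 6],
    [1, 9, 90],
]

err_matrix = []
for i, row in enumerate(cfm):
    total = sum(row)  # number of samples whose true class is i
    # Divide by the row total; blank out the diagonal (correct predictions).
    err_matrix.append([0.0 if i == j else value / total
                       for j, value in enumerate(row)])

for row in err_matrix:
    print([round(v, 3) for v in row])
# [0.0, 0.036, 0.055]
# [0.08, 0.0, 0.12]
# [0.01, 0.09, 0.0]
```

Here the largest entry is row 1, column 2: 12% of the samples of true class 1 were predicted as class 2, which is where the plot would be brightest.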