简单的基于Tacotron2的中英文混语言合成, 包括code-switch和voice clone. 以及深入结构设计的探讨.

之前的讨论

33. 韵律评测, 很重要. https://zhuanlan.zhihu.com/p/43240701

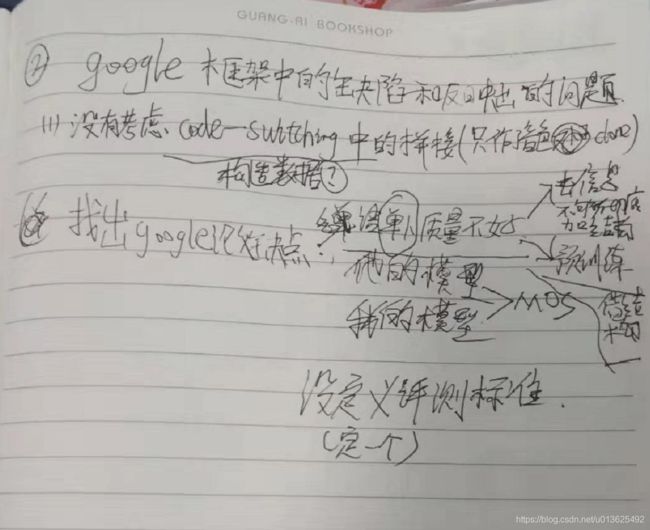

34. 复现了Tacotron2 中文和英文 单语言合成, 音质满足期望(忽略inference时间), 下一步方向在哪里, 如果想Expressive, 靠谱的方法有什么经验吗, 同时我尝试下混语言:

expressive最简单用look up table就可以,不过需要标注,继续深化就是vae系列了,比如gmvae,木神应该更加熟悉这些东西,mixlingual现在看来有数据就能做,不过跨说话人的话,可能vocoder的影响就会变得很大

Expressive, 如果有标注的话, 就类似于,speaker id, 之后用look up table, 这个我去找找有没有论文/数据集, 跑跑试试;

VAE (Encoder) 作为prosody Encoder, 这个应该是也要尝试的, 虽然对于VAE我.....;

mixlingual/cross-lingual 双语同人数据集有的话, 直接正常训练, 不涉及speaker id和language id, 这个看看有没有数据集 (或者把LJSPeech和标贝当成一个人), 但具体涉及到code-switching还有些细节 (比如训练数据switch的比例和测试语句相差很大);

跨语言说话人, 特别是一种语言只有一个说话人 (但是语料质量非常高), 如何做到voice clone, switch-coding, 确实是个难题, 但借助与VAE也可能有解决方法, 不过没有明白师兄说的"可能vocoder的影响就会变得很大"的含义, 是指的整个网络decoder端的网络设计吗

"可能vocoder的影响就会变得很大" 。我觉得是训练wavenet啥的,跟说话人关系比较大

翻翻王木师兄的论文了, 重音

举个例子啊,比如mixlingual的一句话: Amazon并购了Google

现在实际有两个说话人,一个英文说话人,一个中文说话人,然后训了一个multispeaker,multilingual的模型,在inference的时候,指定了中文说话人的ID,然后合成,这个时候英文部分的发音铁定不好,这个时候就需要靠vocoder的鲁棒性修了

就是英文从context text => aucostic feature会很难受, 因为他训练的时候没见过这个ID

但是网络还是会硬着去搞(找平衡), 这样的aucostic feature就不是那么完美, 需要vocoder来修

当然还有共享phone集这些办法

全共享phone这种, 其实音色迁移(统一)是很好的应该.

但是会丢掉每段语音内部自身语言的独特性(韵律, 口音, 发音)

总而言之任重道远

我其实设计了个很大的网络

步骤一: 尽量简单化的实现一个cross-language TTS, 直至state of art.

(到20号完成)

1. 直接使用最简单的text的group, 然后数据放到一起, 不加任何标记, 不加模型结构.

- LJSpeech1.1 + 标贝.

- 字母序列+拼音版本, 英文音素+声韵母版本都尝试. 工程性工作.

- 音素版本的调调更加"抑扬", "优美", 先调查英文转成音标. 使用这个: https://github.com/Kyubyong/g2p 具体代码在下面的py转换脚本中.

- Welcome Mr. Li Xiang to join in our human-computer speech interaction laboratory, we are a big family. 中文名字的发音? Mr. 是否可以.

- tar -xjvf httpd-2.4.4.tar.bz2

- 安装ntlk的时候, 用arch版本, sudo pacman -S python-nltk sudo pacman -S nltk-data

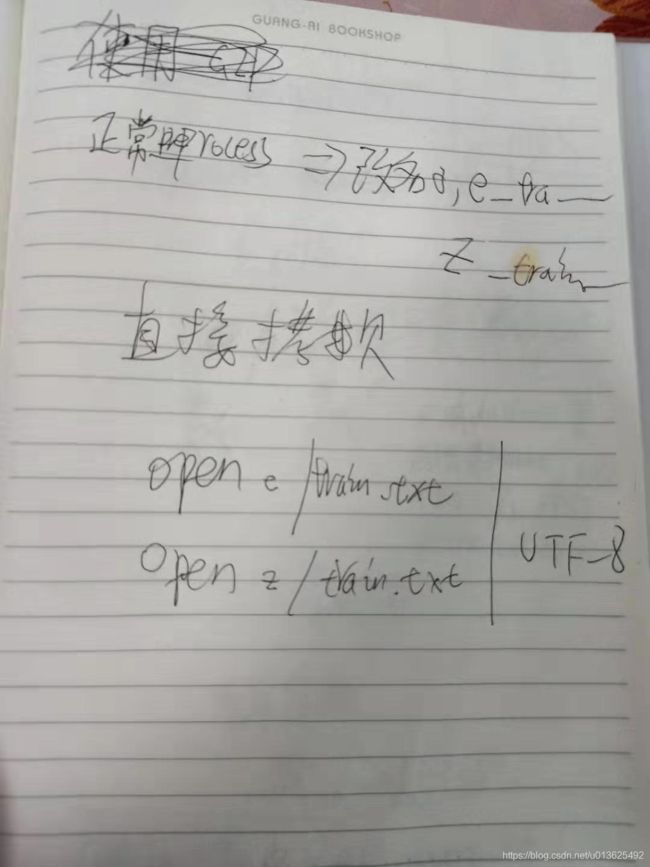

- 使用from preprocess to phoneme.py脚本就能转换, 过程如下图 (注意中文, 英文提取的声学参数应该完全一样维度):

- 但是发现的确不同, 中文版本的: https://github.com/Joee1995/tacotron2-mandarin-griffin-lim/blob/master/hparams.py 英文版本的:https://github.com/Rayhane-mamah/Tacotron-2/blob/master/hparams.py

- 说不清哪个好, 等实验之后再说吧. 或者看google等的论文.

- 制作中文_phoneme在之前的joee文件夹有, 英文_phoneme在test_t2_wavenet/old_del/Mix_phoneme_G2P_demo.ipynb 中, 然后粘贴到: text_list_for_synthesis.txt

- 发现问题: 2w steps的时候, 中文合成已经很好, 单独英文竟然还会出现断句, 中英文混合会发现后面的英文竟然发不出音. 不知道为什么, 收敛的慢? python synthesize.py --model='Tacotron2-mix-phoneme' --output_dir='mix-phoneme-output_dir/' --text_list='text_list_for_synthesis.txt'

- 基本的排查原因: 还是得等训练到8w左右吧.

2. 加入speaker id的 (无language id)

https://github.com/Rayhane-mamah/Tacotron-2

•Related work:

•[3] Learning pronunciation from a foreign language in speech synthesis networks

•MIT. Revised 12 Apr 2019 (unpublished)

•Implement a multilingual multi-speaker tacotron2. On pre-training(then coming with a fine-tune using low-source language data), using high-source language data and low-source language data together is much better than only use hight—source language

•Paper studied learning pronunciation from a bilingual TTS model. Learned phoneme embedding vectors are located closer if their pronunciations are similar across the languages. This can generalize to every low-source language. Knowing this, we can always give small data big data whatever its language is to improve network convergence.

•In fact, this embedding vectors is something like IPA. But IPA (1) has no stress label (2)no mapping for small language. Speech in different languages is different speakers.

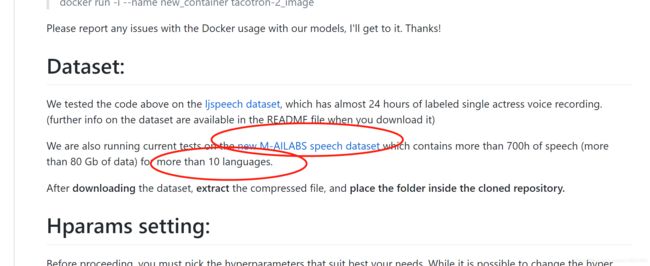

•Use dataset: VCTK, CMU Arctic, LJSpeech, 2013 Blizzard, CSS10.

MODEL:

A sequence of phonemes are converted to phoneme embeddings, then fed to the encoder as input. We concatenate phoneme embedding dictionary of each language to form the entire phoneme embedding dictionary, so there may exist duplicated phonemes in the dictionary if the languages share same phonemes. Note that, the phoneme embeddings are normalized to have the same norm. In order to model multiple speakers’ voices in a single TTS model, we adopt Deep Voice 2 [4] style speaker embedding network. One-hot speaker identity vector is converted to a 32-dimensional speaker embedding vector by the speaker embedding network.

the amount of speech data differs across speakers. This data imbalance may induce a bias to the TTS model. To cope with this data imbalance, we divide the loss of each sample from one speaker by the total number of samples in a training set which belongs to the speaker. We empirically found that this adjustment in loss function yields better synthesis quality

同时可以关注下这篇文章的phoneme输入, 并且pre-trained的应用也需要关注.

步骤二: 收集现在情况, 包括代码和思路

最重要的是定义一个评测函数

Cross-lingual Voice Conversion

I wish I could speak many languages. Wait. Actually I do. But only 4 or 5 languages with limited proficiency. Instead, can I create a voice model that can copy any voice in any language? Possibly! A while ago, me and my colleage Dabi opened a simple voice conversion project. Based on it, I expanded the idea to cross-languages. I found it's very challenging with my limited knowledge. Unfortunately, the results I have for now are not good, but hopefully it will be helpful for some people.

February 2018

Author: Kyubyong Park ([email protected])

Version: 1.0

VQ-VAE

This is a Tensorflow Implementation of VQ-VAE Speaker Conversion introduced in Neural Discrete Representation Learning. Although the training curves look fine, the samples generated during training were bad. Unfortunately, I have no time to dig more in this as I'm tied with my other projects. So I publish this project for those who are interested in the paper or its implementation. If you succeed in training based on this repo, please share the good news.

Voice Conversion with Non-Parallel Data

What if you could imitate a famous celebrity's voice or sing like a famous singer? This project started with a goal to convert someone's voice to a specific target voice. So called, it's voice style transfer. We worked on this project that aims to convert someone's voice to a famous English actress Kate Winslet's voice. We implemented a deep neural networks to achieve that and more than 2 hours of audio book sentences read by Kate Winslet are used as a dataset.

tacotron2-vae

https://github.com/jinhan/tacotron2-vae

tacotron2-gst

(Expressive)

https://github.com/jinhan/tacotron2-gst

VAE Tacotron-2:

https://github.com/rishikksh20/vae_tacotron2

https://arxiv.org/pdf/1812.04342.pdf

TPSE Tacotron2

https://github.com/cnlinxi/tpse_tacotron2

paper: Predicting Expressive Speaking Style From Text in End-to-End Speech Synthesis

reference : Rayhane-mamah/Tacotron-2

Tacotron-2:

https://github.com/cnlinxi/tacotron2decoder

pretrain decoder for fewer parallel corpus.

Paper: Semi-Supervised Training for Improving Data Efficiency in End-to-End Speech Synthesis

Reference: Rayhane-mamah/Tacotron-2

style-token Tacotron2

https://github.com/cnlinxi/style-token_tacotron2#style-token-tacotron2

paper: Style Tokens: Unsupervised Style Modeling, Control and Transfer in End-to-End Speech Synthesis

reference : Rayhane-mamah/Tacotron-2

HTS-Project

https://github.com/cnlinxi/HTS-Project

HTS-demo project with blank data, expecially extended for Mandarin Chinese SPSS system.

speech_emotion

https://github.com/cnlinxi/speech_emotion#speech_emotion

Detect human emotion from audio.

Refer to some code in Speech_emotion_recognition_BLSTM, thanks a lot.

create dir 'logs' in the project dir, so you can find most of the logs of step, you can find details in them. Also, it create a log file in the project dir as befrore..

Tacotron-2:

https://github.com/karamarieliu/gst_tacotron2_wavenet

cvqvae

Conditional VQ-VAE, adaped from the VQ-VAE code provided by MishaLaskin [https://github.com/MishaLaskin/vqvae].

Tensorflow implementation of DeepMind's Tacotron-2. A deep neural network architecture described in this paper: Natural TTS synthesis by conditioning Wavenet on MEL spectogram predictions

Vector Quantized Variational Autoencoder

This is a PyTorch implementation of the vector quantized variational autoencoder (https://arxiv.org/abs/1711.00937).

You can find the author's original implementation in Tensorflow here with an example you can run in a Jupyter notebook.

Tacotron2 for 瑞典语

https://github.com/ruclion/taco2swe

A modification of https://github.com/Rayhane-mamah/Tacotron-2 that is intended for use with the Swedish language.