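Search client request-parameter monitoring (request log monitoring)

Design approach

README.md

Monitoring client request parameters:

1. Client side: add request logging to the client, print each request and response, store these logs separately, then analyze and monitor them.

2. Server side: capture the API access logs (the ones with request details), then analyze and monitor them.

With logs, Tlog can do the analysis and forward the results as meta messages. A backend application listens for those messages, processes them, and writes them to a database, where they can be aggregated and monitored directly.

Preliminary design for monitoring the parameters passed between the search client and the server

a. Client side: add request logging to the client, print each request and response, store these logs separately, then analyze and monitor them, or read them directly from the logging system (although logs collected that way may be incomplete).

The best option is to write automation code with the help of uiautomatorviewer that simulates taps and other actions in each scenario, then collect the logs.

b. Server side: analyze and monitor the logs of the API responses.

The search API logs are stored in XX_engine_midlog (online only, there is no pre-release environment). The storage format is pb and has to be converted to JSON.

The backend RD has helped by adding the most complete list of client request parameters.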

SELECT *

FROM XX_cost_log_tt4

where ds='${}'

limit 50;

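This table records all the front-end parameters

origialParam inside Content holds the parameters sent by the client

Cut this data out and parse it as JSON

Client parameter monitoring design: the project structure is generated with the Alibaba middleware Pandora (潘多拉), reusing some code from internal projects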

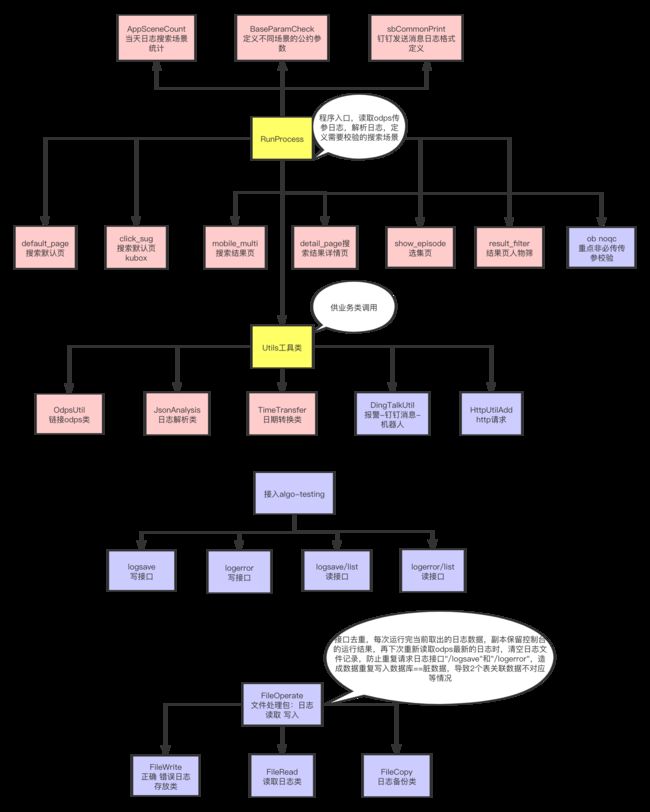

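## Script design

All client parameter fields are stored in the content.xx field of odps.database.table_name

The design follows the utility classes and methods in timeassess that connect to and read odps,

and the code design of the Double 11 search API monitoring

Use the middleware development environment Pandora (http://mw.xx-xx.com/xx.html)

to generate the project and configure maven. Required dependencies: com.aliyun.odps, fastjson, httpcore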

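Script:

1. The client codebase is huge, and the RD does not know every search source or what each one means. So the script reads the odps logs itself, uses a HashMap to de-duplicate and count the appScene values, and then filters out the scenes that belong to the search business line

2. Read the logs from the database 5000 rows at a time, and check whether each row returned by the SQL contains the content field (it holds the whole log line; origialParam holds all parameters sent by the client)

3. Parse the JSON in xx.content.origialParam: find the first occurrence of { in the string, cut the string from there, and convert the cut string to JSON

4. Design a separate class per search scene plus utility classes; the common parameters live in their own utility class that every appScene calls. This keeps the code easy to maintain and easy to read

5. Check the fields the JSON must contain, with one if per field: whether the key is present, what value it maps to, and whether that value is empty;

type conversion and range checks for enum values; regex checks on whether a key matches the algorithm's rules; checks on each JSON in a JSON list: the keys the list must contain and their values;

different log messages are printed depending on the outcome. Logic check: for example, when json.getString("appScene").equals("default_page"), mustExistKey = new String[]{"cn","source_from"};

for all other scenes, mustExistKey = new String[]{"cn","ok","search_from"};

The de-duplicated appScenes list is {ott_sug=87, default_page=637, detail_page=66, show_policy=961, imerge=316, ott_search=16, result_filter=7, show_episode=95, click_sug=819, ott_search_new=905, mobile_multi=1091}, 11 entries in total:

ott_sug is the sug used by the OTT large screen, default_page is the default search page, click_sug is the kubox on the default search page, ott_search is large-screen search,

mobile_multi is the search results page, detail_page is the search result detail page, show_episode is the episode selection page, result_filter is the person filter on the results page,

show_policy is used by the engine algorithm team

The search-related scenes are: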

String[] appSceneCheck = {"default_page", "click_sug", "mobile_multi", "show_episode", "detail_page", "result_filter"};
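The required-key rule from step 5 can be sketched as follows. This is only a minimal illustration, not the project's code: it assumes the JSON that carries these keys has already been parsed into a `Map<String, String>` (the real script uses fastjson), and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class RequiredKeyCheck {

    // Required keys per scene, taken from the rule described in step 5.
    static String[] requiredKeys(String appScene) {
        if ("default_page".equals(appScene)) {
            return new String[]{"cn", "source_from"};
        }
        return new String[]{"cn", "ok", "search_from"};
    }

    // Returns the required keys that are absent or mapped to an empty value.
    static List<String> missingKeys(String appScene, Map<String, String> params) {
        List<String> missing = new ArrayList<>();
        for (String key : requiredKeys(appScene)) {
            String value = params.get(key);
            if (value == null || value.isEmpty()) {
                missing.add(key);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        Map<String, String> params = new HashMap<>();
        params.put("cn", "featured");
        params.put("source_from", "home");
        // default_page needs cn and source_from only.
        System.out.println(missingKeys("default_page", params));
        // Any other scene also needs ok and search_from.
        System.out.println(missingKeys("mobile_multi", params));
    }
}
```

Returning the list of missing keys, rather than printing inside the check, keeps the rule separate from the err/out logging described in the next step.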

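6. Log categories:

err: scene classification and counts, the log content returned by each SQL row, and client key/value validation failures

out: messages for checks that pass; comment these out when the online scheduled job runs

System.err.println("第"+count+"条日志传参key详细判断=======trackInfoAppend里面的json不满足以上任何一种组合逻辑,客户端传参错误,BUG BUG BUG!!!");

7. Scene-specific parameter checks live in DiffSceneParam; checks for the commonly agreed parameters live in BaseParam

8. /Users/lishan/Desktop/code/clientParamWatch/clientParamWatch-service/src/main/java/com/xx/xx/RunProcess.java

is the program entry point; it reads the logs

9. Related documents

Search SDK yksearch API documentation: xx

APP default search scene API documentation: xx

Adjusting the script as the business changes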

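1. Added September 16: fallback strategy

The keyword parameter is checked with a regex: it must either be URL-encoded, ((%[0-9A-Fa-f]{2}){2})+, or consist of English letters and digits

} else if (!(keyword.matches("((%[0-9A-Fa-f]{2}){2})+")|| keyword.matches("[0-9a-zA-Z]+"))) {

Example of an encoded keyword: %E5%A4%A9%E5%9D%91%E9%B9%B0%E7%8C%8E

The characters that need encoding are converted to hexadecimal; then, from right to left, 4 digits are taken at a time (fewer than 4 are handled as they are), every 2 digits form one unit, and each unit is prefixed with % to give the %XY form

However, a user's query may contain special characters, for example: abc 123 ??? 《我爱我家》 加油,优雅!非诚勿扰2019

So the regex also accepts double-byte characters (Chinese characters, Chinese punctuation, and so on): [^\x00-\xff]

} else if (!(keyword.matches("((%[0-9A-Fa-f]{2}){2})+") || keyword.matches("[0-9a-zA-Z]+") || keyword.matches("[^\\x00-\\xff]*[a-zA-Z0-9]*"))) {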
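One thing that may be worth checking about the URL-encoding pattern: `((%[0-9A-Fa-f]{2}){2})+` only matches an even number of `%XX` groups, while a Chinese character encoded as UTF-8 usually takes three. The sketch below illustrates this with the JDK's `URLEncoder`. The relaxed pattern `ANY_COUNT` and the class name are hypothetical and are not part of the original script.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

class KeywordPatternCheck {

    // Pattern used in the script: %XX groups in pairs.
    static final String PAIRED = "((%[0-9A-Fa-f]{2}){2})+";

    // Hypothetical relaxed pattern: any number of %XX groups.
    static final String ANY_COUNT = "(%[0-9A-Fa-f]{2})+";

    static String encode(String keyword) {
        try {
            return URLEncoder.encode(keyword, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is guaranteed to exist on every JVM.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // One Chinese character (U+5929) becomes three %XX groups.
        String single = encode("\u5929");
        System.out.println(single);
        System.out.println("paired pattern:  " + single.matches(PAIRED));
        System.out.println("relaxed pattern: " + single.matches(ANY_COUNT));

        // The four-character sample from the notes has 12 groups, so both patterns accept it.
        String sample = "%E5%A4%A9%E5%9D%91%E9%B9%B0%E7%8C%8E";
        System.out.println("sample, paired:  " + sample.matches(PAIRED));
    }
}
```

If keywords with an odd number of encoded bytes show up as false alarms in the err log, this difference is a likely place to look.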

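2. Added September 18: IPv4 to IPv6 migration

For now the IP check validates IPv6 only. Take the APP version number of the gray-release build, read the latest odps logs, and observe them

3. To make the exact time of each log easy to see when locating a problem, the clientTimeStamp timestamp in the client parameters is converted to a date string in the format "yyyy年MM月dd日 HH:mm:ss",

and printed in every err log; if clientTimeStamp is missing, the log prints "暂无clientTimeStamp"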
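The timestamp conversion in item 3 could look roughly like this. It is a sketch under two assumptions that the notes do not state: that clientTimeStamp is in epoch seconds (the sample value 1561219572 has ten digits) and that the time zone is Asia/Shanghai. The class name is hypothetical.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

class ClientTimeFormat {

    // Same pattern as in the notes; the CJK characters are treated as literals.
    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy年MM月dd日 HH:mm:ss");

    // Formats epoch seconds in the given zone, or returns the placeholder
    // text when the client did not send a timestamp.
    static String format(Long epochSeconds, ZoneId zone) {
        if (epochSeconds == null) {
            return "暂无clientTimeStamp";
        }
        return FORMAT.format(Instant.ofEpochSecond(epochSeconds).atZone(zone));
    }

    public static void main(String[] args) {
        ZoneId zone = ZoneId.of("Asia/Shanghai"); // assumed zone
        System.out.println(format(1561219572L, zone));
        System.out.println(format(null, zone));
    }
}
```

Passing the zone explicitly keeps the output stable when the job moves from a laptop to the scheduling platform.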

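4. Added October 29: the log4j logging component, which provides convenient logging at five levels: debug, info, warn, error, fatal

Problem encountered: resolving, and thinking through, the conflict between log4j and slf4j. In the end the project used the log4j configuration

5. Added November 2: failed assertions are sent to DingTalk as alerts, following the DingTalkUtil and HttpUtilAdd classes of other projects. It ran locally and was then connected to the 海川 platform. The scheduled job runs every day of the week starting at 18:00, once an hour, 5 times a day

6. November 3: designed database storage for normal logs and alert logs (to be continued)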

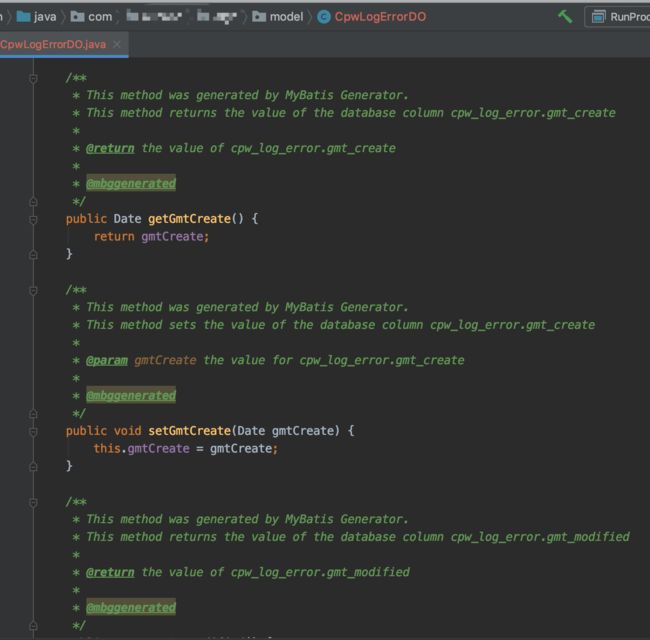

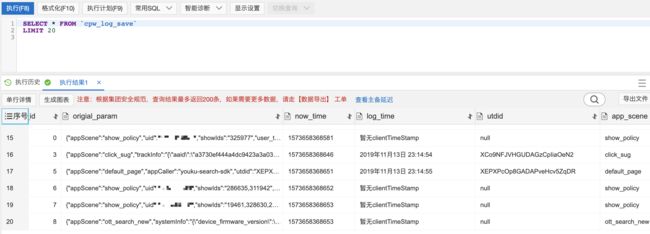

设计3张表

cpw_log_info --记录从odps同步过来的原始日志

cpw_log_rta --记录原始日志信息,从odps的ytsoku.s_tt_ytsoku_ysearch_cost_log_tt4.content拆分出的currentTime、clientTimeStamp、appScene、utdid为、origialParam等字段

cpw_log_param --错误参数日志入库,记录log_id 错误日志error_log 正常日志right_log 错误级别error_level 是否展示is_show

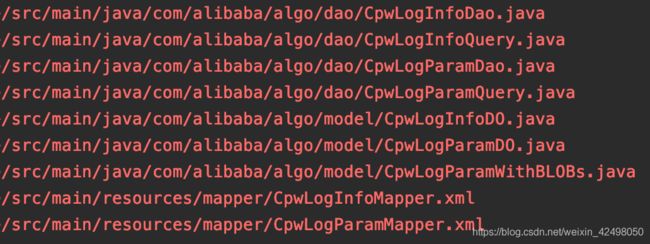

原始newcpw工程迁移algo-testing,修改algo-testing-service/src/main/resources/Generator.xml配置,增加如上3张表的配置

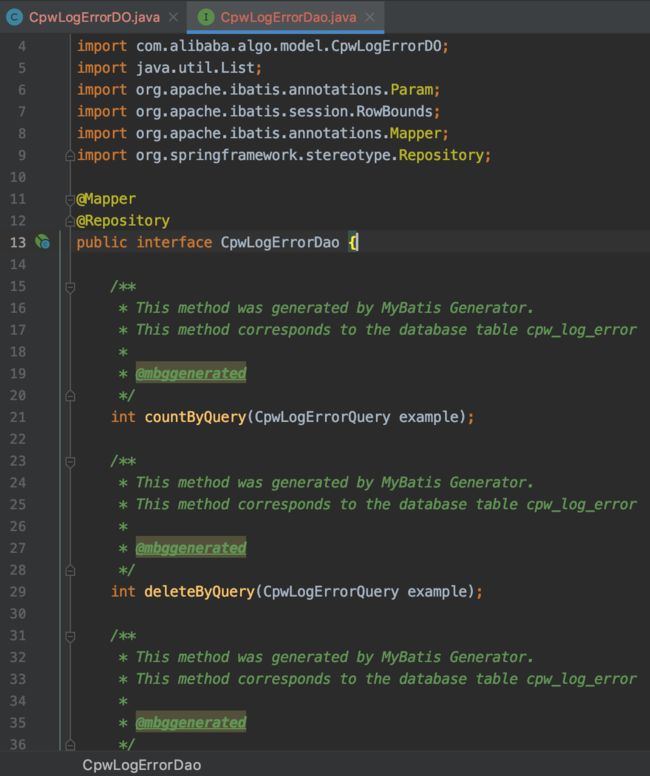

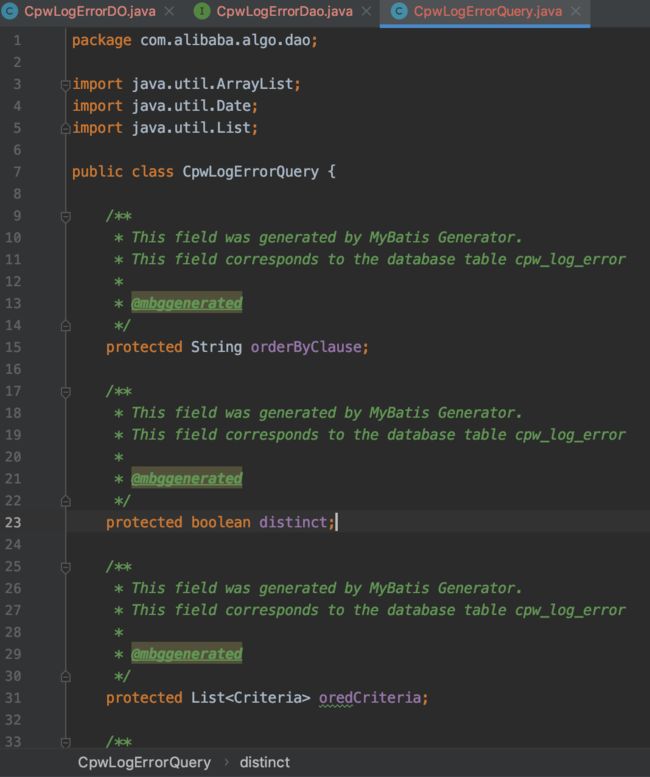

Running mybatis-generator:generate produces the Dao files under /Users/lishan/Desktop/code/xx/xx-service/src/main/java/com/alibaba/algo/dao

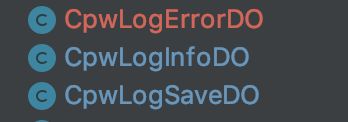

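The Do files are generated under /Users/lishan/Desktop/code/xx/xx-service/src/main/java/com/alibaba/algo/model

To be continued.

Because algo-testing uses slf4j, which conflicted with the log4j in my original project, pom.xml and the code had to be changed to stay consistent with the team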

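7. Added November 6: relationship between keyword and version

Checking version:

Compare two version numbers: return a positive number if the first is larger, a negative number if the second is larger, and 0 if they are equal

Start from the lengths and take the shorter one; for example, when comparing 8.5 and 8.5.1, use the length of the former. Within that length, compare the segments numerically

If that already decides the order, return immediately. If not, compare the number of segments: the version that has a sub-version is larger. If logVersion > minVersion, the result is positive

When version >= 9.1.8, keyword is expected to be URL-encoded. Below that, keyword is checked against \S*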
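The comparison rule above can be sketched as follows. This is an illustration of the described algorithm rather than the project's code; the class and method names are hypothetical, and it assumes every segment is a plain non-negative integer.

```java
class VersionCompare {

    // Positive if a is newer, negative if b is newer, 0 if equal.
    static int compare(String a, String b) {
        String[] x = a.split("\\.");
        String[] y = b.split("\\.");
        // Compare only the segments both versions have.
        int shared = Math.min(x.length, y.length);
        for (int i = 0; i < shared; i++) {
            int diff = Integer.compare(Integer.parseInt(x[i]), Integer.parseInt(y[i]));
            if (diff != 0) {
                return diff;
            }
        }
        // Still tied: the version with extra sub-version segments is larger.
        return Integer.compare(x.length, y.length);
    }

    // From 9.1.8 on, keyword is expected to be URL-encoded.
    static boolean expectsUrlEncodedKeyword(String version) {
        return compare(version, "9.1.8") >= 0;
    }

    public static void main(String[] args) {
        System.out.println(compare("8.5", "8.5.1") < 0);          // true
        System.out.println(compare("9.1.10", "9.1.8") > 0);       // true
        System.out.println(expectsUrlEncodedKeyword("8.1.4"));    // false
    }
}
```

Comparing segments as integers matters here: a plain string comparison would rank 9.1.10 below 9.1.8.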

8. November 8

Switched the project's log4j configuration to slf4j

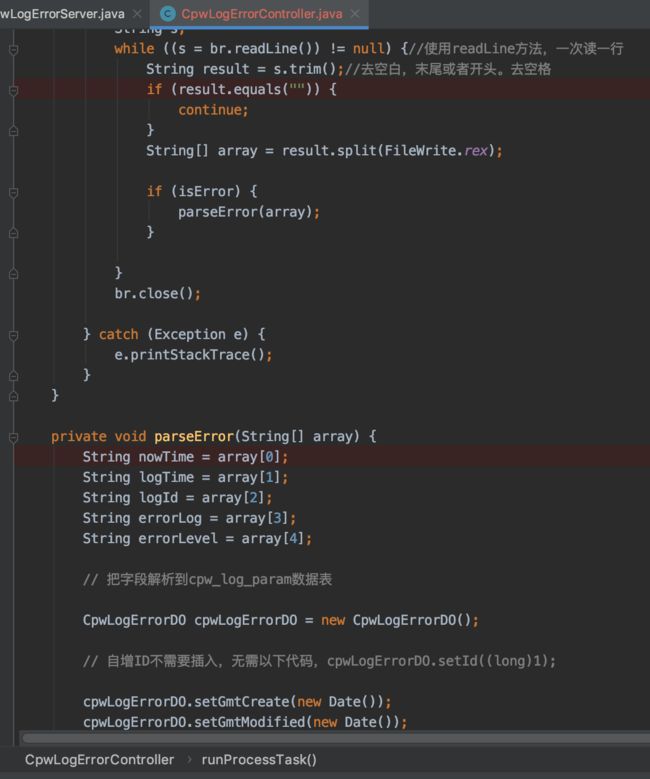

9. Added November 12: FileWrite and FileRead, which split out the assert logs so they can be written to the database

The following code was added before each System call. It writes to the error log file errorLog; the fields are then split and written to the database, recording the log that was read

Demo:

A level-1 error log:

FileWrite.errorLog(currentTimeMillis + "||" + count + "||" + "条日志,客户端传参缺少【utdid】数据节点,BUG BUG BUG!!!" + "||" + "1" + "||");

A level-2 error log:

FileWrite.errorLog(currentTimeMillis + "||" + count + "||" + "条日志,utdid客户端传参value为空,结果为" + "||" + "2" + "||");

The fields are: current time, log_id of the log, error message, and error level (1 means a field was not sent, 2 means a field's value is wrong)
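Splitting such a line back into fields before writing it to the database might look like the sketch below. The `ErrorLogLine` class is hypothetical; the only thing taken from the notes is the `time||log_id||message||level||` layout.

```java
class ErrorLogLine {
    final long timeMillis;
    final int logId;
    final String message;
    final int level;

    ErrorLogLine(long timeMillis, int logId, String message, int level) {
        this.timeMillis = timeMillis;
        this.logId = logId;
        this.message = message;
        this.level = level;
    }

    // Splits "time||logId||message||level||" into its four fields.
    static ErrorLogLine parse(String line) {
        // String.split drops the empty field after the trailing "||".
        String[] parts = line.split("\\|\\|");
        if (parts.length != 4) {
            throw new IllegalArgumentException("expected 4 fields, got " + parts.length);
        }
        return new ErrorLogLine(
                Long.parseLong(parts[0]),
                Integer.parseInt(parts[1]),
                parts[2],
                Integer.parseInt(parts[3]));
    }

    public static void main(String[] args) {
        ErrorLogLine row = parse("1573545600000||39||missing utdid node||1||");
        System.out.println(row.logId + " level=" + row.level + " " + row.message);
    }
}
```

A message that itself contains `||` would break this split, so the separator has to be one that cannot appear in the parameters being logged.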

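10. November 18: assertions added across the code

Added exception handling: if clientTimeStamp, the timestamp that records the user action, is empty or cannot be read, print 暂无clientTimeStamp

if (clientTimeStamp == null || clientTimeStamp.isEmpty()) {

// Written to the log file; the fields are later split and written to the database, recording the log that was read

FileWrite.originLog(currentTimeMillis + FileWrite.rex + "暂无clientTimeStamp" + FileWrite.rex + count + FileWrite.rex + appScene + FileWrite.rex + utdid +FileWrite.rex+ json);

// Log shown on the console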

System.err.println(currentTime + "日," + "暂无clientTimeStamp" + ",第【" + count + "】条数据,appScene为:" + appScene + ",utdid为:" + utdid + ",===ytsoku.content.origialParam的json内容为=======" + json);

} else {

FileWrite.originLog(currentTimeMillis + FileWrite.rex + clientTimeStamp + FileWrite.rex + count + FileWrite.rex + appScene + FileWrite.rex + utdid + FileWrite.rex + json);

System.err.println(currentTime + "日," + clientTimeStamp + ",第【" + count + "】条数据,appScene为:" + appScene + ",utdid为:" + utdid + ",===ytsoku.content.origialParam的json内容为=======" + json);

}

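11. November 21

Restructured: FileOperate now groups FileWrite, FileRead, and FileCopy

1> Each run of RunProcess generates origin_log.txt and error_log.txt locally, recording all raw logs and error logs from that read of odps

2> After "/logsave" and "/logerror" have been requested, copy origin_log.txt and error_log.txt to the copies origin_log_copy.txt and error_log_copy.txt (the copy is wrapped in try/catch to handle an already existing copy, which keeps it compatible with data left over from debugging), then delete origin_log.txt and error_log.txt

3> The next time the scheduled job runs RunProcess, delete all four log files above

4> This way each run handles only the logs it just fetched, and the copies keep the console output of that run. When the latest odps logs are read again, the log files are cleared first, which prevents repeated requests to "/logsave" and "/logerror" from writing duplicate (dirty) data into the database and leaving the related rows of the two tables out of sync

Reference blog for copying log files: https://www.cnblogs.com/zq-boke/p/8523710.html

12. November 21

Only requests that come from the client carry utdid; show_policy is used by the engine algorithm team and is not a client request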

SELECT count(*) FROM `cpw_log_save` where gmt_create >="2019-11-20 17:31:43" ORDER BY id DESC

SELECT count(*) FROM `cpw_log_error` where gmt_create >="2019-11-20 17:31:43" ORDER BY id DESC

SELECT * FROM `cpw_log_save` AS a INNER JOIN cpw_log_error AS b ON a.log_id=b.log_id where

a.gmt_create >="2019-11-20 17:31:43" ORDER BY a.id DESC

=================================================================================================

=================================================================================================

Commands for connecting the search client parameter monitoring to 海川:

cd /Users/lishan/Desktop/code/xx/xx-service

mvn compile

mvn exec:java -pl :algo-testing-service -Dexec.mainClass=com.xx.newcpw.sdksearch.RunProcess

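git repository: http://xx

海川 page for client parameter monitoring:

https://xx

The scheduled job runs daily starting at 17:00, once an hour. Data scope: all client request-parameter logs from the online APP (versions above 8.2.2)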

=================================================================================================

=================================================================================================

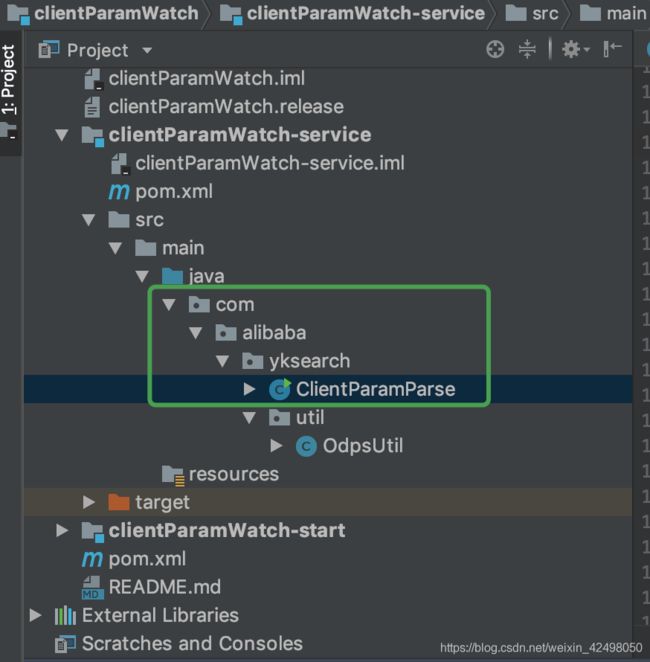

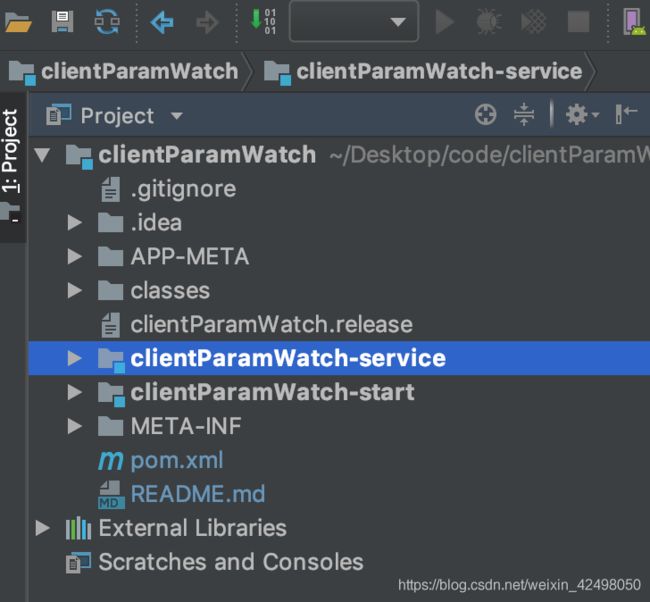

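## Directory structure

Unpacking produces the following two subdirectories

* clientParamWatch-service, which contains sample code for each middleware; the code is in the com.alibaba.yksearch package under src/main/java.

* clientParamWatch-start, which contains the startup class `com.alibaba.unusedsdksearch.Application`. Unit tests for the middleware samples are in the `com.alibaba.unusedsdksearch` package under `src/test/java`. The logging configuration file is logback-spring.xml under `src/main/resources`.

## Usage

### Running from an IDE

Import the project into eclipse or idea, then run the class that contains the main method, `com.alibaba.unusedsdksearch.Application`.

### Using a fat jar

This is also how pandora boot applications are released. First package with the following command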

```sh

mvn package

```

If auto-config was selected, append the following to the command

```sh

-Dautoconfig.userProperties={fullPath}/bootstrap-start/antx.properties

```

The -D parameter specifies the location of antx.properties; otherwise autoconfig enters interactive mode

Then change to the `clientParamWatch-start/target` directory and run the fat jar

```sh

java -Dpandora.location=${sar} -jar clientParamWatch-start-1.0.0-SNAPSHOT.jar

```

where ${sar} is the path of the sar package

### Starting directly with mvn

Before the first run, execute

```sh

mvn install

```

As long as the Maven project's artifact, group id, version, and so on have not changed, this only needs to run once.

Then run the start submodule directly

```sh

mvn -pl clientParamWatch-start pandora-boot:run

```

For both commands above, if auto-config was selected, append the following

```sh

-Dautoconfig.userProperties={fullPath}/bootstrap-start/antx.properties

```

The -D parameter specifies the location of antx.properties; otherwise autoconfig enters interactive mode

## Upgrade guide

* http://xx/pandora-boot/wikis/changelog

## Docker template

* In the APP-META directory

* http://xx/wikis/docker

## aone release

See the documentation at http://xx/pandora-boot/wikis/aone-guide

### Logger configuration

* Logger configuration: http://xx/pandora-boot/wikis/log-config

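### Docker links

* If the project has a docker template, it is in the APP-META directory, and the template is described in APP-META/README.md

* Docker reference: http://xx/pandora-boot/wikis/docker

Overall code design:

Because the codebase is huge and the RD does not know the search sources, QA derives them by counting the logs, then filters out the search sources that belong to our business

Unfinished, to be adjusted.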

package com.alibaba.yksearch.util;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

import com.aliyun.odps.data.Record;

import org.apache.http.util.TextUtils;

import java.util.HashMap;

import java.util.HashSet;

import java.util.List;

import java.util.Set;

/**

*

{

"appScene": "mobile_multi",

"appCaller": "y-search-sdk",

"utdid": "WEUJw96TyyUDAFqtb2eRn0O5",

"ip": "223.12.213.5",

"clientTimeStamp": 1561219572,

"pz": "30",

"pid": "69b81504767483cf",

"userAgent": "MTOPSDK/1.4.0 (iOS;12.3.1;Apple;iPhone9,2)",

"version": "8.0.5",

"userId": "UNDAyNzY1ODM2OA==",

"srid": "0",

"scene": "mobile_multi",

"systemInfo": "{\"osVer\":\"12.3.1\",\"appPackageKey\":\"Y\",\"ouid\":\"895478688c97bbb7aca798f885132a838f496508\",\"idfa\":\"4499CA06-CD0D-42E0-96A0-8BFA6227778F\",\"brand\":\"apple\",\"os\":\"ios\",\"imei\":\"WEUJw96TyyUDAFqtb2eRn0O5\",\"ver\":\"8.0.5\",\"guid\":\"895478688c97bbb7aca798f885132a838f496508\",\"network\":\"WIFI\",\"btype\":\"iPhone9,2\",\"pid\":\"69b81504767483cf\"}",

"searchFrom": "2",

"aaid": "cb5a46b35ea6bb8dcef7a74b2091ee7c",

"sdkver": "107",

"userNumId": 1006914592,

"userType": "vip",

"keyword": "雷洛传",

"trackInfoAppend": "{\"ok\":\"雷洛\",\"source_from\":\"home\",\"cn\":\"精选\"}",

"sourceFrom": "home"

}

*

*/

public class appSceneCount {

private static String accessId = "XX";

private static String accessKey = "XX";

public static void main(String[] args) {
    String sql = "SELECT content FROM ytsoku.s_tt_ytsoku_ysearch_cost_log_tt4 where ds='20190623' limit 200;";
    // Each element returned by OdpsUtil is an ODPS Record
    @SuppressWarnings("unchecked")
    List<Record> list = OdpsUtil.getSQLResult(sql, accessId, accessKey);
    // Map from appScene to its occurrence count; the keys are de-duplicated
    HashMap<String, Integer> appScenes = new HashMap<>();
    for (int i = 0; i < list.size(); i++) {
        // Rows are read in the same order the SQL query returned them
        Record record = list.get(i);
        String appScene = parseJSON(record.getString("content"), i); // i == count
        // Increment the count for this appScene, starting from 1
        Integer seen = appScenes.get(appScene);
        appScenes.put(appScene, seen == null ? 1 : seen + 1);
    }
    // System.err output shows up in red in the IDE console
    System.err.println("数据库一次查询的数量为" + list.size());
    System.err.println("appScenes去重后的列表为" + appScenes + ",个数为:" + appScenes.size());
}

/**
 * Extracts appScene from one content row.
 *
 * @param jsonText the whole content field of the row
 * @param count    index of the row, used in the log output
 * @return the appScene value, or null if it cannot be found
 */
private static String parseJSON(String jsonText, int count) {
    String appScene = null;
    // origialParam is the JSON that starts at the first '{' in content
    int firstIndex = jsonText.indexOf("{");
    if (firstIndex < 0) {
        // No JSON in this row
        return null;
    }
    // Cut the string from '{' and parse it as JSON
    String jsonTextReal = jsonText.substring(firstIndex);
    JSONObject json = JSON.parseObject(jsonTextReal);
    System.out.println("第【" + count + "】条数据,ytsoku.content.origialParam的json内容为=======" + json);
    if (json.containsKey("appScene")) {
        appScene = json.getString("appScene");
        if (TextUtils.isEmpty(appScene)) {
            // System.out.println("第【"+count+"】条数据,appScene客户端传参为空,结果为"+appScene);
        }
    }
    return appScene;
}

}
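The counting loop in `main` can also be written with `Map.merge`, which handles the first occurrence and later increments in one call. A minimal sketch that uses only the JDK; the `SceneCounter` class is hypothetical, and unlike the original loop it skips rows whose appScene is null.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class SceneCounter {

    // Counts how often each scene name occurs; null entries are ignored.
    static Map<String, Integer> count(List<String> scenes) {
        Map<String, Integer> counts = new HashMap<>();
        for (String scene : scenes) {
            if (scene != null) {
                // Inserts 1 on first sight, otherwise adds 1 to the stored value.
                counts.merge(scene, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> scenes = Arrays.asList("default_page", "click_sug", "default_page", null);
        Map<String, Integer> counts = count(scenes);
        System.out.println(counts.get("default_page")); // 2
        System.out.println(counts.size());              // 2
    }
}
```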

package com.alibaba.yksearch.util;

/**

*

*

* @author lishan

* @date 20190628

*/

import java.util.Iterator;

import java.util.ArrayList;

import java.util.List;

import com.aliyun.odps.Instance;

import com.aliyun.odps.Odps;

import com.aliyun.odps.OdpsException;

import com.aliyun.odps.account.Account;

import com.aliyun.odps.account.AliyunAccount;

import com.aliyun.odps.data.Record;

import com.aliyun.odps.task.SQLTask;

public class OdpsUtil {

// The parameters below, for connecting to the development-environment database, come from https://datastudio2.dw.alibaba-inc.com/ (click the user avatar)

// private static String accessId = "XX";

// private static String accessKey = "XX";

private static String accessId = "XX";

private static String accessKey = "XX";

private static String odpsUrl = "http://service-corp.odps.aliyun-inc.com/api";

// yt_sokurec_ensure_dev is the odps dev (test) environment; the online project is xx

// private static String project = "xx";

private static String project = "xx_dev";

// Runs the query with the default credentials configured above
public static List<Record> getSQLResult(String sql) {
    return getSQLResult(sql, accessId, accessKey);
}

// Runs the query with the caller's own credentials
public static List<Record> getSQLResult(String sql, String accessSelfId, String accessSelfKey) {

Account account = new AliyunAccount(accessSelfId, accessSelfKey);

Odps odps = new Odps(account);

odps.setEndpoint(odpsUrl);

odps.setDefaultProject(project);

Instance i;

List<Record> records = new ArrayList<>();

try {

i = SQLTask.run(odps, sql);

i.waitForSuccess();

records = SQLTask.getResult(i);

} catch (OdpsException e) {

e.printStackTrace();

}

return records;

}

public static List record2wordList(List list)

{

List listFile = new ArrayList<>();

if(list !=null && list.size()>0)

{

Iterator iterator=list.iterator();

while (iterator.hasNext())

{

Record record= (Record) iterator.next();

String keyWord=record.getString(0);

listFile.add(keyWord);

}

}

return listFile;

}

}

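To be continued

The script is connected to the lab platform and runs as a scheduled job

mvn clean compile

mvn compile exec:java -Dexec.mainClass="com.alibaba.sdksearch.ClientParamParse"

The main class is written as the path that follows the java directory

第39条日志,ip格式错误,结果为2409:8938:6098:143e:75a6:610a:ef44:ed4c ,BUG BUG BUG!!!

第519条日志,ip格式错误,结果为2408:84f2:302:5867::1,BUG BUG BUG!!!

These were reported only because that is simply what IPv6 addresses look like

Cause: a few IPs have this form, while the vast majority are IPv4, such as "ip": "120.230.70.150". IPv4 addresses are close to exhausted, traffic is gradually moving to IPv6, and many IPv4 setups have to stay compatible with IPv6

IPv4 and IPv6 regexes

([0-9A-Fa-f]{1,4}:){7}([0-9A-Fa-f]{1,4}) is an IPv6 regex

([0-9A-Fa-f]{1,4}:){4}:+([0-9A-Fa-f]{1,4}) but these two are not enough

The most complete regex: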

\s*((([0-9A-Fa-f]{1,4}:){7}([0-9A-Fa-f]{1,4}|:))|(([0-9A-Fa-f]{1,4}:){6}(:[0-9A-Fa-f]{1,4}|((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3})|:))|(([0-9A-Fa-f]{1,4}:){5}(((:[0-9A-Fa-f]{1,4}){1,2})|:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3})|:))|(([0-9A-Fa-f]{1,4}:){4}(((:[0-9A-Fa-f]{1,4}){1,3})|((:[0-9A-Fa-f]{1,4})?:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){3}(((:[0-9A-Fa-f]{1,4}){1,4})|((:[0-9A-Fa-f]{1,4}){0,2}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){2}(((:[0-9A-Fa-f]{1,4}){1,5})|((:[0-9A-Fa-f]{1,4}){0,3}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){1}(((:[0-9A-Fa-f]{1,4}){1,6})|((:[0-9A-Fa-f]{1,4}){0,4}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(:(((:[0-9A-Fa-f]{1,4}){1,7})|((:[0-9A-Fa-f]{1,4}){0,5}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:)))(%.+)?\s*

// IP check: accept either IPv4 or IPv6

if (json.containsKey("ip")) {

String ip = json.getString("ip");

if (TextUtils.isEmpty(ip)) {

System.err.println("第" + count + "条日志,ip客户端传参为空,结果为" + ip + ",BUG BUG BUG!!!");

} else if (!(ip.matches("[0-9]\\d*\\.[0-9]\\d*\\.[0-9]\\d*\\.[0-9]\\d*") ||

ip.matches("([0-9A-Fa-f]{1,4}:){7}([0-9A-Fa-f]{1,4})")||

ip.matches("([0-9A-Fa-f]{1,4}:){4}:+([0-9A-Fa-f]{1,4})")||

ip.matches("\\s*((([0-9A-Fa-f]{1,4}:){7}([0-9A-Fa-f]{1,4}|:))|(([0-9A-Fa-f]{1,4}:){6}(:[0-9A-Fa-f]{1,4}|((25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)(\\.(25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)){3})|:))|(([0-9A-Fa-f]{1,4}:){5}(((:[0-9A-Fa-f]{1,4}){1,2})|:((25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)(\\.(25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)){3})|:))|(([0-9A-Fa-f]{1,4}:){4}(((:[0-9A-Fa-f]{1,4}){1,3})|((:[0-9A-Fa-f]{1,4})?:((25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)(\\.(25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){3}(((:[0-9A-Fa-f]{1,4}){1,4})|((:[0-9A-Fa-f]{1,4}){0,2}:((25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)(\\.(25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){2}(((:[0-9A-Fa-f]{1,4}){1,5})|((:[0-9A-Fa-f]{1,4}){0,3}:((25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)(\\.(25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){1}(((:[0-9A-Fa-f]{1,4}){1,6})|((:[0-9A-Fa-f]{1,4}){0,4}:((25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)(\\.(25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)){3}))|:))|(:(((:[0-9A-Fa-f]{1,4}){1,7})|((:[0-9A-Fa-f]{1,4}){0,5}:((25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)(\\.(25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)){3}))|:)))(%.+)?\\s*"))) {

System.err.println("第" + count + "条日志,ip格式错误,结果为" + ip + ",BUG BUG BUG!!!");

} else {

System.out.println("第" + count + "条日志,ip客户端传参正确,校验通过~~~");

}

} else {

System.err.println("第" + count + "条日志,客户端传参缺少【ip】数据节点" + ",BUG BUG BUG!!!");

}
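As an alternative to maintaining the long IPv6 regex, the JDK's own address parser can do the IPv6 part. The sketch below is one possible approach, not the script's actual check: `IpFormatCheck` is a hypothetical class, the IPv4 pattern restricts each octet to 0 to 255, and the character pre-check is there so that `InetAddress.getByName` is only ever handed something that looks like an address literal rather than a host name.

```java
import java.net.Inet6Address;
import java.net.InetAddress;
import java.net.UnknownHostException;

class IpFormatCheck {

    private static final String OCTET = "(25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)";
    private static final String IPV4 = OCTET + "(\\." + OCTET + "){3}";

    static boolean isIpv4(String ip) {
        return ip != null && ip.matches(IPV4);
    }

    static boolean isIpv6(String ip) {
        // Only hex digits, ':' and '.' are allowed, and a ':' must be present.
        if (ip == null || ip.indexOf(':') < 0 || !ip.matches("[0-9A-Fa-f:][0-9A-Fa-f:.]*")) {
            return false;
        }
        try {
            return InetAddress.getByName(ip) instanceof Inet6Address;
        } catch (UnknownHostException e) {
            // Not a valid IPv6 literal.
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(isIpv6("2409:8938:6098:143e:75a6:610a:ef44:ed4c"));
        System.out.println(isIpv6("2408:84f2:302:5867::1"));
        System.out.println(isIpv4("120.230.70.150"));
        System.out.println(isIpv4("999.1.1.1"));
    }
}
```

Note that Java represents IPv4-mapped literals such as `::ffff:1.2.3.4` as IPv4 addresses, so this `isIpv6` reports false for them.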

A small pitfall:

ysearch011011054165.zbyk.na62,/home/admin/ysearch/logs/cost.log,2019-08-26 00:08:00.841 INFO 2846 --- [HSFBizProcessor-DEFAULT-8-thread-87] COST : 20190826000800,appScene:imerge,appCaller:soku-search-yapi,ip:11.11.54.165,spip:11.185.202.152:2084,xCaller:soku-search-yapi,bucketName:116,utdid:,aaid:,eagleEye:0badca7315667492807845543e,success:1,method:imerge1,filling_result:1,policyUgcIds:1043299369,1043325864,677115729,387506184,1038450295,1039225941,163333522,1032759994,1038847326,1043305754,1048769338,1043303956,1046746330,1049879378,1043309158,1040313806,131561752,1043307902,91252642,1043327869,1043306490,1038403128,1043329393,1043322711,ugcPolicyRate:0.26373626373626374,ugc:38,ugc_result:1,policy-match:7,tair:10,scheme-match:0,scheme:0,total:52,keyword:{utf8},origialParam:{"appScene":"imerge","appCaller":"soku-search-yapi","searchType":"8","ip":"144.48.242.121","user_from":"1","pz":"10","user_terminal":"2","is_apple":"2","scene":"imerge","site":"1","user_type":"guest","pg":"1","keyword":"{utf8}"}

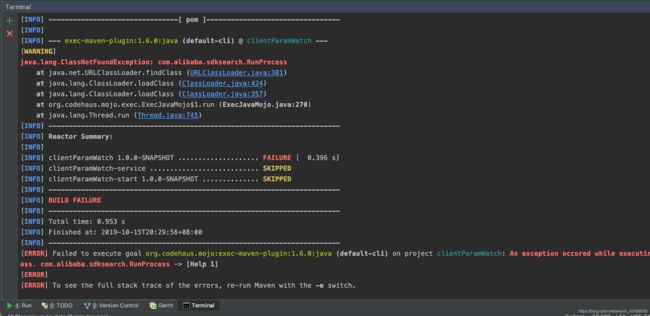

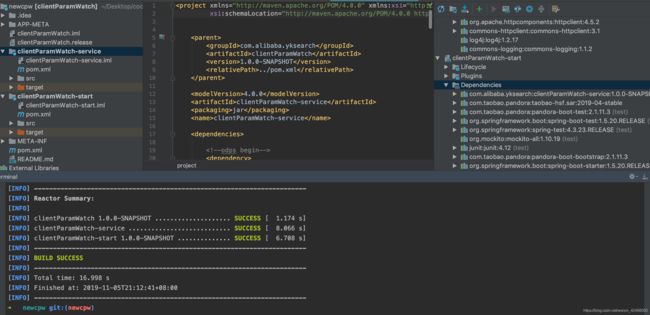

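Because the code has to be connected to the company platform, run on a schedule every day, and produce alerts and DingTalk messages, it has to be run with a Maven command

mvn compile exec:java -D exec.mainClass="com.alibaba.sdksearch.RunProcess"

but that fails as follows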

➜ clientParamWatch git:(master) ✗ mvn compile exec:java -D exec.mainClass="com.alibaba.sdksearch.RunProcess"

[INFO] Scanning for projects...

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Build Order:

[INFO]

[INFO] clientParamWatch [pom]

[INFO] clientParamWatch-service [jar]

[INFO] clientParamWatch-start [jar]

[INFO]

[INFO] ---------------< com.alibaba.yksearch:clientParamWatch >----------------

[INFO] Building clientParamWatch 1.0.0-SNAPSHOT [1/3]

[INFO] --------------------------------[ pom ]---------------------------------

[INFO]

[INFO] --- exec-maven-plugin:1.6.0:java (default-cli) @ clientParamWatch ---

[WARNING]

java.lang.ClassNotFoundException: com.alibaba.sdksearch.RunProcess

at java.net.URLClassLoader.findClass (URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass (ClassLoader.java:424)

at java.lang.ClassLoader.loadClass (ClassLoader.java:357)

at org.codehaus.mojo.exec.ExecJavaMojo$1.run (ExecJavaMojo.java:270)

at java.lang.Thread.run (Thread.java:745)

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] clientParamWatch 1.0.0-SNAPSHOT .................... FAILURE [ 0.370 s]

[INFO] clientParamWatch-service ........................... SKIPPED

[INFO] clientParamWatch-start 1.0.0-SNAPSHOT .............. SKIPPED

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 0.852 s

[INFO] Finished at: 2019-10-16T17:23:37+08:00

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal org.codehaus.mojo:exec-maven-plugin:1.6.0:java (default-cli) on project clientParamWatch: An exception occured while executing the Java class. com.alibaba.sdksearch.RunProcess -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoExecutionException


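Cause:

At first I assumed that the class com.alibaba.sdksearch.RunProcess did not exist or that a jar dependency had not been pulled in, since ClassNotFoundException means a class turned out to be missing at run time

So the idea was to package all the jars and put them into the runtime environment

Reference blog: https://blog.csdn.net/qq_17213067/article/details/82771745

[JAVA] Building a jar in IDEA on mac, and the Maven way

That did not work. After asking eight or nine RD experts in a row, the final solution was as follows:

I also picked up a trick from one of them: sending the code on my machine to his with the following command

rsync -e ssh -aP clientParamWatch is@ipv4(address):src

For rsync, see https://blog.csdn.net/cdnight/article/details/78861543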

cd /Users/lishan/Desktop/code/clientParamWatch

mvn compile

mvn exec:java -pl :clientParamWatch-service -Dexec.mainClass=com.alibaba.sdksearch.RunProcess

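The project was generated with Pandora, so it is a parent project with submodules, and my main program lives in a submodule; -pl is needed to select that submodule. Run the command from the project root, with -pl pointing at the module that contains the main entry point

To find a file by name under the current path: find . -iname 'Run*.class'

The result of running the Maven command is as follows

海川 page for the search client parameter monitoring:

https://XXX#/task/result/detail?resultId=190853&configId=10731

Result list > Actions > View > Result URL > Download > unzip: the 1500042_console log is the final result

On October 11, 2019, monitoring the logs showed the following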

String sql = "SELECT content FROM ytsoku.s_tt_ytsoku_ysearch_cost_log_tt4 where ds=20191011 limit 400;";

The log format had gained extra fields that day. To investigate, print the raw log data read from odps

System.out.println(record.getString("content")); // i == count

The format is shown below: deleteReason: nul now follows the JSON, so the script was adjusted for this case

y-search011139116189.zbyk.na61,/home/admin/y-search/logs/cost.log,2019-10-11 00:02:26.833 INFO 3014 --- [HSFBizProcessor-DEFAULT-8-thread-24] COST : 20191011000226,appScene:click_sug,appCaller:y-search-sdk,ip:11.139.116.189,spip:null,xCaller:y-search-sdk,bucketName:0,spCacheBucket:0,spCacheHit:0,appPageCache:null,rstate:null,utdid:W0eIhR6vxDkDAPbuR5jvCStE,aaid:7d0b0c8276edf88619af455e21d67acd,eagleEye:0b8b6e6315707233468264293ef851,success:1,method:click_sug,scheme-match:0,scheme:0,total:5,keyword:铜雀台,origialParam:{

"appScene": "click_sug",

"appCaller": "y-search-sdk",

"pid": "6b5f94f4ab33c702",

"cliShowId": "12187dee2c1411e19194",

"scene": "click_sug",

"aaid": "7d0b0c8276edf88619af455e21d67acd",

"sdkver": "300",

"keyword": "铜雀台",

"trackInfoAppend": "{\"soku_test_ab\":\"c\"}",

"trackInfo": "{\"aaid\":\"7d0b0c8276edf88619af455e21d67acd\",\"cn\":\"精选\",\"engine\":\"expid~req.ugc113.sort1004.rank113.qa1$eid~0b8b6e6315707233413643977ef851$bts~soku_qp#1@soku_engine_master#113@soku_irank#201@soku_ogc#303@soku_ugc#402@soku_ai#A@y_user#B@show_scg#A@role_bigshow_style#A@ott_sp#A@user_folder#B@ugc_black#C@big_store#B@sp_big_ha3#A@user_folder_ab#A@soku_ott#501@default_page_rank#A@week_ugc#A@default_recommend_like#A@show_ip_card#C@search_two_row#A@rank_scg_show#A@ysearch_sp_cache#A@app_page#B@big_store_ugc#B@default_page_scg#A$r_p_n~28\",\"group_id\":\"12187dee2c1411e19194\",\"group_num\":1,\"group_type\":1,\"item_log\":\"eps_t~1$site~137$s_ply_s_yk~1$s_ply_s_td~1$show_id~12187dee2c1411e19194$doc_source~1$eng_source~6$sp_id~1153913891160326144$rec_scg_id~26936$rec_alginfo~-1show_id-2231058-1sceneId-214945-1scg_id-226936$rec_recext~reqid=b23d1077-0335-4d7c-8398-e236d740e45e$scg_id~26936\",\"k\":\"铜雀台\",\"object_title\":\"铜雀台\",\"object_type\":107,\"object_url\":\"y://soku/outsite?title=铜雀台&showid=12187dee2c1411e19194&thumbUrl=https%3A%2F%2Fr1.ykimg.com%2F050C000059438DB3ADBA1FCFFB0AED41&url=https%3A%2F%2Fm.miguvideo.com%2Fmgs%2Fmsite%2Fprd%2Fdetail.html%3Fcid%3D627404476%26channelid%3D10290002017&outSourceSiteId=137\",\"ok\":\"铜\",\"search_from\":\"2\",\"searchtab\":\"0\",\"soku_test_ab\":\"c\",\"source_from\":\"home\",\"source_id\":137}",

"utdid": "W0eIhR6vxDkDAPbuR5jvCStE",

"ip": "183.69.203.252",

"clientTimeStamp": 1570723346,

"userAgent": "MTOPSDK/3.0.5.4 (Android;9;Xiaomi;MI 6)",

"version": "8.1.4",

"userId": "UNjM0OTY5MTI4OA==",

"systemInfo": "{\"ver\":\"8.1.4\",\"os\":\"Android\",\"young\":0,\"btype\":\"MI 6\",\"pid\":\"6b5f94f4ab33c702\",\"deviceId\":\"W0eIhR6vxDkDAPbuR5jvCStE\",\"resolution\":\"1920*1080\",\"operator\":\"中国联通_46001\",\"osVer\":9,\"network\":\"WIFI\",\"ouid\":\"\",\"guid\":\"47fee4a16adb6a68ebcc0e36c2f624bc\",\"appPackageId\":\"com.y.phone\",\"time\":1570723346,\"brand\":\"Xiaomi\"}",

"userNumId": 1587422822,

"userType": "vip"

}, deleteReason: nul

In addition, rows whose logs are malformed and cannot be parsed by the script are caught as exceptions.

System.err.println("===========第"+count+"条request日志解析出错~~~已跳过===========");

========================================================================================

Modified util/JsonAnalysis.java

package com.alibaba.sdksearch.util;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

public class JsonAnalysis {

public static JSONObject jsonText (String jsonText,int count) {

// Parse the content field from the SQL result: jsonText is the whole content value; locate the origialParam field

// boolean hasUTF8 = jsonText.contains("{utf8}");

// if (hasUTF8) {

// jsonText = jsonText.substring(jsonText.indexOf("}"));

// }

JSONObject json = null;
try {
    int firstIndex = jsonText.indexOf("{");
    // Cut the string from the first '{'
    String jsonTextReal = jsonText.substring(firstIndex);
    // Drop anything after the last '}', such as a trailing ", deleteReason: nul"
    int index = jsonTextReal.lastIndexOf("}");
    // Parse the remaining text as JSON
    json = JSON.parseObject(jsonTextReal.substring(0, index + 1));
} catch (Exception e) {
    System.err.println("===========第"+count+"条request日志解析出错~~~已跳过===========");
}
// System.err.println("第【" + count + "】条数据,ytsoku.content.origialParam的json内容为=======" + json);
return json;
}

}

Log output after adjusting the script:

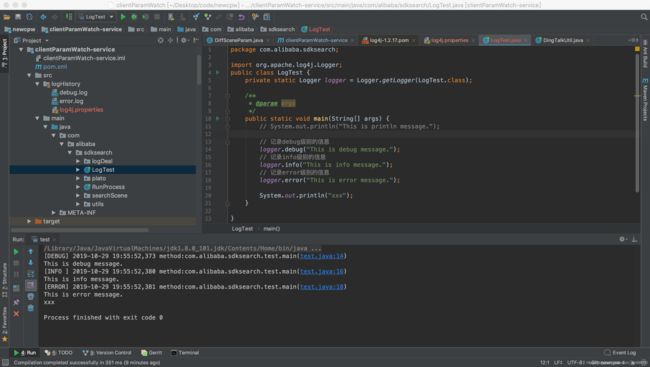
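The cutting step can be tried in isolation with plain strings. The sketch below is a variation on the approach above, not the project's code: it first looks for the `origialParam:` marker, so that a `{utf8}` placeholder earlier in the line (as in the imerge sample) is not mistaken for the start of the JSON. The `OriginalParamExtractor` class is hypothetical, and it stops at the text level, leaving the JSON parsing to fastjson as before.

```java
class OriginalParamExtractor {

    private static final String MARKER = "origialParam:";

    // Returns the text from the '{' that follows the marker up to the
    // last '}' in the line, or null if no such span exists.
    static String extractJson(String content) {
        if (content == null) {
            return null;
        }
        int marker = content.indexOf(MARKER);
        // Fall back to the start of the line when the marker is absent.
        int start = content.indexOf('{', marker < 0 ? 0 : marker);
        int end = content.lastIndexOf('}');
        if (start < 0 || end < start) {
            return null;
        }
        return content.substring(start, end + 1);
    }

    public static void main(String[] args) {
        String line = "total:5,keyword:{utf8},origialParam:{\"appScene\":\"click_sug\"}, deleteReason: nul";
        System.out.println(extractJson(line));
    }
}
```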

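Generating logs with log4j in a Maven project

Reference blog: https://blog.csdn.net/qq_38941812/article/details/87975938

1. Look up the log4j configuration for pom.xml in the Maven repository

(the log4j page in the Maven repository provides it)

and copy the configuration into the xml

Note: the pom file only needs the following:

<dependency>
    <groupId>log4j</groupId>
    <artifactId>log4j</artifactId>
    <version>1.2.17</version>
</dependency>

<dependency>
    <groupId>commons-logging</groupId>
    <artifactId>commons-logging</artifactId>
    <version>1.1.2</version>
</dependency>

The following does not need to be added

To follow other people's code, search the whole project for logger and open the methods that use it

/Users/lishan/Desktop/code/timeassess/timeassess-service/src/main/java/com/alibaba/ytsoku/ugcBigWords/ResultAnalysiser.java is one example, but note that the imports used there do not work with the pom.xml configured above:

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

The import that is needed is:

import org.apache.log4j.Logger;

Usage:

// Log a message at info level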

logger.info("log======第" + count + "条日志,aaid客户端传参正确,校验通过~~~");

2. Create and configure log4j.properties at the same level as src

### Settings ###

log4j.rootLogger = DEBUG, stdout, D, E, I

log4j.additivity.org.apache=true

### Output to the console ###

log4j.appender.stdout = org.apache.log4j.ConsoleAppender

log4j.appender.stdout.Target = System.out

log4j.appender.stdout.layout = org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern = [%-5p] %d{yyyy-MM-dd HH:mm:ss,SSS} method:%l%n%m%n

### Write logs at DEBUG level and above to XX/logs/debug.log ###

log4j.appender.D = org.apache.log4j.DailyRollingFileAppender

log4j.appender.D.File = /Users/lishan/Desktop/code/newcpw/clientParamWatch-service/src/logHistory/debug.log

log4j.appender.D.Append = true

log4j.appender.D.Threshold = DEBUG

log4j.appender.D.layout = org.apache.log4j.PatternLayout

log4j.appender.D.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

### Write logs at ERROR level and above to XX/logs/error.log ###

log4j.appender.E = org.apache.log4j.DailyRollingFileAppender

log4j.appender.E.File =/Users/lishan/Desktop/code/newcpw/clientParamWatch-service/src/logHistory/error.log

log4j.appender.E.Append = true

log4j.appender.E.Threshold = ERROR

log4j.appender.E.layout = org.apache.log4j.PatternLayout

log4j.appender.E.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

### Write logs at INFO level and above to XX/logs/info.log ###

log4j.appender.I = org.apache.log4j.DailyRollingFileAppender

log4j.appender.I.File =/Users/lishan/Desktop/code/newcpw/clientParamWatch-service/src/logHistory/info.log

log4j.appender.I.Append = true

log4j.appender.I.Threshold = INFO

log4j.appender.I.layout = org.apache.log4j.PatternLayout

log4j.appender.I.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

Reference blog: https://www.jianshu.com/p/ccafda45bcea

Then copy the log4j.properties configuration from the link below into the file

Detailed log4j configuration

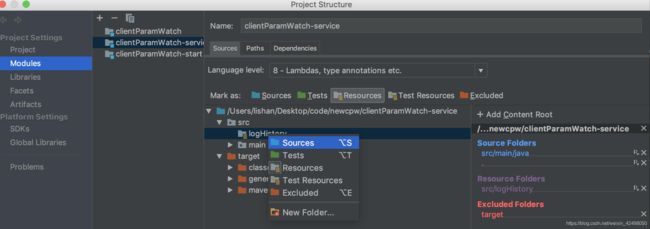

In the top menu: File > Project Structure > Modules > Sources, mark src/logHistory as Resources, then click OK

Create the demo LogTest

package com.alibaba.sdksearch;

import org.apache.log4j.Logger;

public class LogTest {

private static Logger logger = Logger.getLogger(LogTest.class);

/**

* @param args

*/

public static void main(String[] args) {

// System.out.println("This is println message.");

// Log a message at debug level

logger.debug("This is debug message.");

// Log a message at info level

logger.info("This is info message.");

// Log a message at error level

logger.error("This is error message.");

System.out.println("xxx");

}

}

After running it, check src/LogHistory: debug.log and error.log appear

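<dependency>
    <groupId>org.apach.httpclient</groupId>
    <artifactId>httpclient</artifactId>
    <version>4.5.1</version>
</dependency>

<dependency>
    <groupId>org.apache.httpcomponents</groupId>
    <artifactId>httpclient</artifactId>
    <version>4.5.2</version>
</dependency>

<dependency>
    <groupId>commons-httpclient</groupId>
    <artifactId>commons-httpclient</artifactId>
    <version>3.1</version>
</dependency>

<dependency>
    <groupId>com.alibaba.schedulerx</groupId>
    <artifactId>schedulerx2-spring-boot-starter</artifactId>
    <version>0.3.2</version>
    <exclusions>
        <exclusion>
            <artifactId>slf4j-log4j12</artifactId>
            <groupId>org.slf4j</groupId>
        </exclusion>
        <exclusion>
            <artifactId>config</artifactId>
            <groupId>com.typesafe</groupId>
        </exclusion>
    </exclusions>
</dependency>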

Connecting DingTalk robot alerts

Storing alert information in the database (to be continued)

Migrating odps data to idb

odps DDL:

CREATE TABLE `table_A` ( `content` STRING, `rowkey` STRING ) COMMENT 'TT source table' PARTITIONED BY ( ds STRING COMMENT 'day', hh STRING COMMENT 'hour', mm STRING COMMENT 'minutes' ) LIFECYCLE 600;

idb DDL:

CREATE TABLE `table_B` (

`id` bigint unsigned NOT NULL AUTO_INCREMENT COMMENT '主键',

`gmt_create` datetime NOT NULL COMMENT '创建时间',

`gmt_modified` datetime NOT NULL COMMENT '修改时间',

`content` text NOT NULL COMMENT '客户端传参原始日志',

`rowkey` varchar(100) NOT NULL COMMENT '标识',

`ds` varchar(50) NOT NULL COMMENT 'day',

`hh` varchar(50) NOT NULL COMMENT 'hour',

`mm` varchar(50) NOT NULL DEFAULT '' COMMENT 'minutes',

PRIMARY KEY (`id`)

) DEFAULT CHARACTER SET=utf8mb4 COMMENT='客户端传参监控';

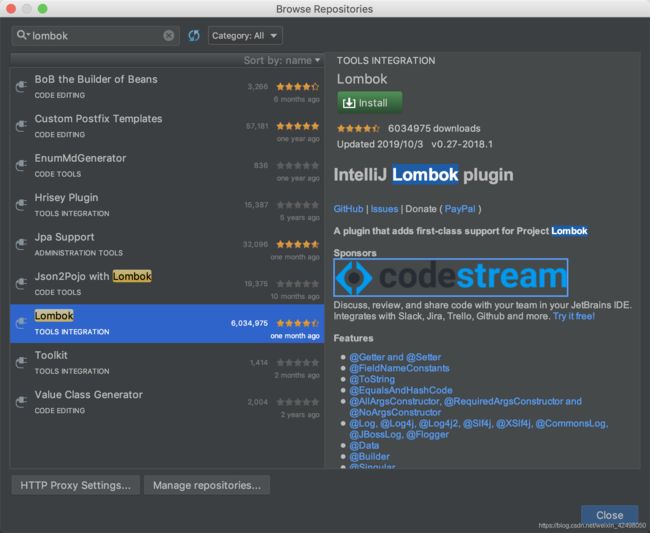

My own project used log4j for logging, while the project it was migrated into uses slf4j, and log showed up in red throughout the code

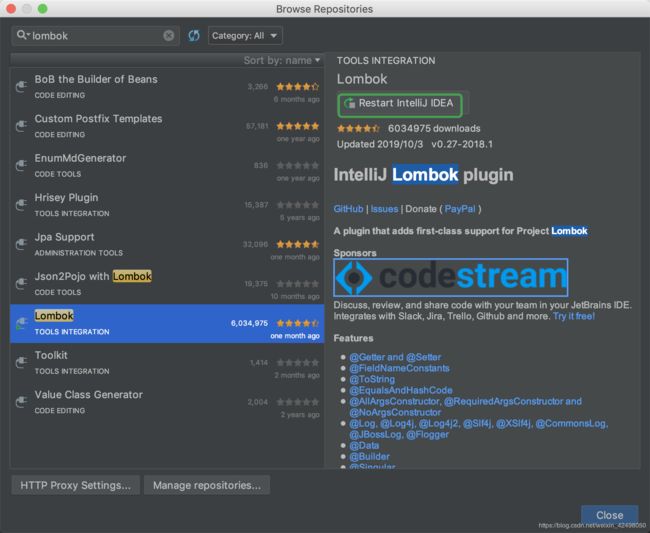

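First, why install the Lombok plugin at all?

When the project is imported into idea, errors show up everywhere. Open any of them and it is the same: log is in red with the message Cannot resolve symbol 'log'. The cause is Lombok.

Both eclipse and idea offer shortcuts for generating bean and entity classes. Most data classes need get, set, toString, equals, and hashCode methods, and whenever a property of a bean is changed, removed, or added, those methods have to be regenerated or deleted, which adds to the maintenance burden.

With lombok this is no longer the case: once its annotations (@Setter, @Getter, @ToString, @RequiredArgsConstructor, @EqualsAndHashCode, or @Data) are used, there is no need to write or generate the get/set methods.

Installing the Lombok plugin online

In IntelliJ IDEA choose Preferences > Plugins > Search in repositories and search for lombok

After installing, restart IntelliJ IDEA; the code then resolves normally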

Fixing the mybatis-generator-maven-plugin:2.0.0 error:

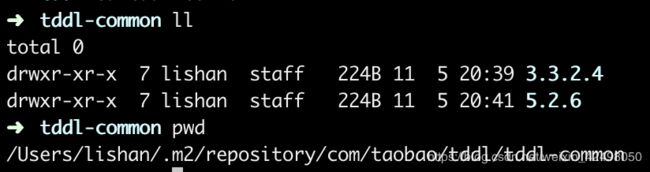

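After a long time searching on Baidu, I compared the local directory /Users/lishan/.m2/repository/com/taobao/tddl with three colleagues

It turned out that the colleagues' /Users/lishan/.m2/repository/com/taobao/tddl/tddl-common contained versions 5.2.6-1, 3.3.2.4, and 5.2.6

while mine only had versions 5 and above, and version 3.3.2.4 was missing

The root cause was that /Users/lishan/.m2/settings.xml differed from my colleagues' configuration, so every run of mybatis-generator:generate failed as shown below. After a colleague sent me the whole tddl folder together with settings.xml, I replaced mine, reloaded the Maven configuration, and generate ran fine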

Downloading: http://jcenter.bintray.com/com/alibaba/configserver/google/code/gson/ali-gson/maven-metadata.xml

Downloading: https://jitpack.io/com/alibaba/configserver/google/code/gson/ali-gson/maven-metadata.xml

[INFO]

[INFO] --- mybatis-generator-maven-plugin:2.0.0:generate (default-cli) @ algo-testing-service ---

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 9.886 s

[INFO] Finished at: 2019-11-05T19:49:31+08:00

[INFO] Final Memory: 28M/323M

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal com.tmall.mybatis.generator:mybatis-generator-maven-plugin:2.0.0:generate (default-cli) on project algo-testing-service: Execution default-cli of goal com.tmall.mybatis.generator:mybatis-generator-maven-plugin:2.0.0:generate failed: A required class was missing while executing com.tmall.mybatis.generator:mybatis-generator-maven-plugin:2.0.0:generate: com/taobao/tddl/common/util/DataSourceFetcher

[ERROR] -----------------------------------------------------

[ERROR] realm = plugin>com.tmall.mybatis.generator:mybatis-generator-maven-plugin:2.0.0

[ERROR] strategy = org.codehaus.plexus.classworlds.strategy.SelfFirstStrategy

[ERROR] urls[0] = file:/Users/lishan/.m2/repository/com/tmall/mybatis/generator/mybatis-generator-maven-plugin/2.0.0/mybatis-generator-maven-plugin-2.0.0.jar

[ERROR] urls[1] = file:/Users/lishan/.m2/repository/com/taobao/tddl/tddl-group-datasource/3.3.2.4/tddl-group-datasource-3.3.2.4.jar

[ERROR] urls[2] = file:/Users/lishan/.m2/repository/org/mybatis/generator/mybatis-generator-core/1.3.2/mybatis-generator-core-1.3.2.jar

[ERROR] urls[3] = file:/Users/lishan/.m2/repository/org/mybatis/generator/mybatis-generator-maven-plugin/1.3.0/mybatis-generator-maven-plugin-1.3.0.jar

[ERROR] urls[4] = file:/Users/lishan/.m2/repository/com/google/code/javaparser/javaparser/1.0.11/javaparser-1.0.11.jar

[ERROR] urls[5] = file:/Users/lishan/.m2/repository/javax/enterprise/cdi-api/1.0/cdi-api-1.0.jar

[ERROR] urls[6] = file:/Users/lishan/.m2/repository/javax/annotation/jsr250-api/1.0/jsr250-api-1.0.jar

[ERROR] urls[7] = file:/Users/lishan/.m2/repository/org/eclipse/sisu/org.eclipse.sisu.inject/0.3.0/org.eclipse.sisu.inject-0.3.0.jar

[ERROR] urls[8] = file:/Users/lishan/.m2/repository/org/codehaus/plexus/plexus-component-annotations/1.5.5/plexus-component-annotations-1.5.5.jar

[ERROR] urls[9] = file:/Users/lishan/.m2/repository/backport-util-concurrent/backport-util-concurrent/3.1/backport-util-concurrent-3.1.jar

[ERROR] urls[10] = file:/Users/lishan/.m2/repository/org/codehaus/plexus/plexus-interpolation/1.11/plexus-interpolation-1.11.jar

[ERROR] urls[11] = file:/Users/lishan/.m2/repository/org/codehaus/plexus/plexus-utils/1.5.15/plexus-utils-1.5.15.jar

[ERROR] urls[12] = file:/Users/lishan/.m2/repository/junit/junit/3.8.1/junit-3.8.1.jar

[ERROR] urls[13] = file:/Users/lishan/.m2/repository/org/apache/maven/reporting/maven-reporting-api/2.0.9/maven-reporting-api-2.0.9.jar

[ERROR] urls[14] = file:/Users/lishan/.m2/repository/org/apache/maven/doxia/doxia-sink-api/1.0-alpha-10/doxia-sink-api-1.0-alpha-10.jar

[ERROR] urls[15] = file:/Users/lishan/.m2/repository/commons-cli/commons-cli/1.0/commons-cli-1.0.jar

[ERROR] urls[16] = file:/Users/lishan/.m2/repository/org/codehaus/plexus/plexus-interactivity-api/1.0-alpha-4/plexus-interactivity-api-1.0-alpha-4.jar

[ERROR] urls[17] = file:/Users/lishan/.m2/repository/commons-io/commons-io/2.1/commons-io-2.1.jar

[ERROR] Number of foreign imports: 1

[ERROR] import: Entry[import from realm ClassRealm[maven.api, parent: null]]

[ERROR]

[ERROR] -----------------------------------------------------: com.taobao.tddl.common.util.DataSourceFetcher

[ERROR] -> [Help 1]

After the fix, run the generator again:

Delete the previous dependency

mvn clean -U test-compile

Refresh the project and it works; the result is as follows

About Error creating bean with name 'cpwLogInfoController'

Caused by: org.springframework.beans.factory.UnsatisfiedDependencyException: Error creating bean with name 'cpwLogInfoController': Unsatisfied dependency expressed through field 'cpwLogInfoService'; nested exception is org.springframework.beans.factory.UnsatisfiedDependencyException: Error creating bean with name 'cpwLogInfoService': Unsatisfied dependency expressed through field 'cpwLogInfoDao'; nested exception is org.springframework.beans.factory.NoSuchBeanDefinitionException: No qualifying bean of type 'com.alibaba.algo.dao.CpwLogInfoDao' available: expected at least 1 bean which qualifies as autowire candidate. Dependency annotations: {@org.springframework.beans.factory.annotation.Autowired(required=true)}

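Possible cause 1: the wrong Service annotation was imported.

Expected: import org.springframework.stereotype.Service;

Actual: import com.alibaba.dubbo.config.annotation.Service;

Also check whether the dependency behind the injection annotation is missing or mismatched. For example, a dubbo service may have the Spring dependencies added while the provider side actually needs dubbo's @Service annotation rather than Spring's @Service. Check that the Maven dependencies are correct, and remember to run Install again after changing them.

Reference blog post: https://blog.csdn.net/butterfly_resting/article/details/80044863

None of this solved the problem, though. The real cause was that CpwLogInfoDao in the dao package needs the @Mapper and @Repository annotations: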

import org.apache.ibatis.annotations.Mapper;

import org.springframework.stereotype.Repository;

@Mapper

@Repository

After this change the application runs fine.

Project design based on Spring Boot (Spring Framework)

=================================================================================

=================================================================================

1> Design your tables. I use three here.

Data synchronized from ODPS to iDB:

CREATE TABLE `cpw_log_info` (

`id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key',

`gmt_create` datetime NOT NULL COMMENT 'creation time',

`gmt_modified` datetime NOT NULL COMMENT 'modification time',

`content` text NOT NULL COMMENT 'raw client request-parameter log',

`rowkey` varchar(100) NOT NULL COMMENT 'row identifier',

`ds` varchar(50) NOT NULL COMMENT 'day',

`hh` varchar(50) NOT NULL COMMENT 'hour',

`mm` varchar(50) NOT NULL DEFAULT '' COMMENT 'minutes',

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=9 DEFAULT CHARSET=utf8mb4 COMMENT='client request-parameter monitoring'

;

Stores the raw request-parameter logs, i.e. the first N rows read from ODPS:

CREATE TABLE `cpw_log_save` (

`id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key',

`gmt_create` datetime NOT NULL COMMENT 'creation time',

`gmt_modified` datetime NOT NULL COMMENT 'modification time',

`log_id` int(11) NOT NULL COMMENT 'index N of the content row read from ODPS',

`origial_param` text COMMENT 'original parameters of the N-th ODPS log row',

`now_time` varchar(50) DEFAULT NULL COMMENT 'time ODPS was read: current date (year, month, day)',

`log_time` varchar(50) DEFAULT NULL COMMENT 'timestamp of the log entry',

`utdid` varchar(50) DEFAULT NULL COMMENT 'user utdid',

`app_scene` varchar(50) DEFAULT NULL COMMENT 'search scene',

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=512 DEFAULT CHARSET=utf8mb4 COMMENT='real-time monitoring of client request-parameter logs'

;

Stores the error logs produced after newcpw parses the parameters and runs its assertions:

CREATE TABLE `cpw_log_error` (

`id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key',

`gmt_create` datetime NOT NULL COMMENT 'creation time',

`gmt_modified` datetime NOT NULL COMMENT 'modification time',

`now_time` varchar(50) NOT NULL COMMENT 'time the log check ran',

`log_id` int(11) NOT NULL COMMENT 'id of the log row read from ODPS',

`error_log` text COMMENT 'details of the failing parameter',

`error_level` int(11) DEFAULT NULL COMMENT 'error level',

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='persisted client request-parameter error logs'

;

2> The project is split into testing-service and testing-start. Add the generator configuration to Generator.xml under service.resources.

3> Generate the bean files

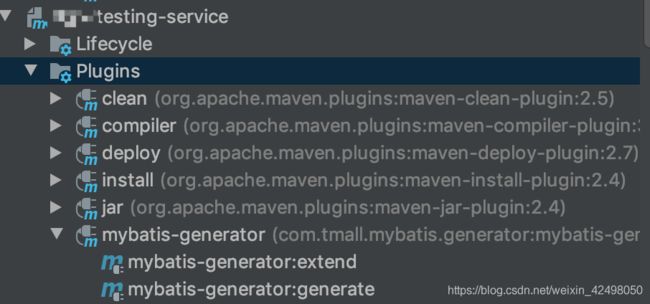

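3.1> In the Maven panel on the right side of IDEA, click testing-service - Plugins - mybatis-generator

3.2> The Dao interfaces and Query classes are generated automatically under the service - dao package. These interfaces wrap the database operations.

Note: add the following annotations to every Dao interface file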

@Mapper

@Repository

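3.3> The Do files are generated automatically under the service - mode package. They wrap the table columns as properties with get/set methods, i.e. JavaBeans.

3.4> The XML files are generated automatically under service - resources - mapper

The mapper wraps the database create, read, update and delete (CRUD) methods.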

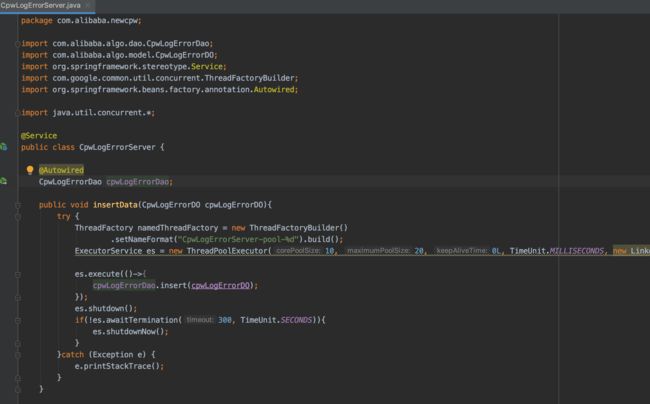

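3.5> /XX-testing/algo-testing-service/src/main/java/com/xx/newcpw/CpwLogErrorServer.java

This is the entry point for database operations: the Service layer.

3.6> /XX-testing/algo-testing-start/src/main/java/com/xx/algo/controller/CpwLogErrorController.java

This handles the business data against the database (CRUD) through the Dao and Do classes: the controller layer.

3.7> Edit testing-start/Application.java, the program entry point, then start the application in debug mode.

3.8> Call the finished endpoints: http://localhost:port/logsave writes the raw logs to the cpw_log_save table,

and http://localhost:port/logerror writes the error logs to the cpw_log_error table.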

Problems encountered with Spring Boot

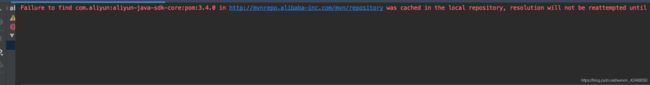

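If you see: Failure to find com.aliyun:aliyun-java-sdk-core:pom:3.4.0 in http://mvnrepo.alibaba-inc.com/mvn/repository was cached in the local repository, resolution will not be reattempted until the update interval of tbmirror-all has elapsed or updates are forced

This section covers two problems:

a mirror address changed in settings.xml that does not take effect, and

the following error during a Maven package build:

Failure to find com.ibatis:xxx-xxx-plugin:jar:1.0.7 in http://maven.aliyun.com/nexus/content/repositories/central/ was cached in the local repository,

resolution will not be reattempted until the update interval of alimaven has elapsed or updates are forced ->

The message means that the Aliyun Maven repository does not contain the dependency you need. In that case, change the mirror address in your Maven settings.xml.

Another possible cause is a multi-module project in which one module depends on another that has not been installed yet. The fix is to run mvn clean install on the module being depended on.

Run mvn clean first, then mvn package -U. The -U flag forces Maven to re-check the remote repositories, which clears most cached resolution failures.

You can also try mvn clean install -Dmaven.test.skip=true -U.

If that still fails, manually delete the cached packages under .m2.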
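For the manual cleanup, the files that make Maven say "was cached in the local repository" are the *.lastUpdated markers it writes next to a failed download. The sketch below deletes only those markers instead of whole package folders. MavenCacheCleaner is a hypothetical helper, and it assumes the default repository location ~/.m2/repository unless a path is passed as the first argument.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical helper for clearing Maven's failed-download markers. */
final class MavenCacheCleaner {

    /** Deletes every *.lastUpdated file under root and returns the deleted paths. */
    static List<Path> purgeLastUpdated(Path root) throws IOException {
        List<Path> markers;
        // Collect first and delete afterwards, so the directory walk is closed before files change
        try (Stream<Path> walk = Files.walk(root)) {
            markers = walk
                    .filter(Files::isRegularFile)
                    .filter(p -> p.getFileName().toString().endsWith(".lastUpdated"))
                    .collect(Collectors.toList());
        }
        for (Path marker : markers) {
            Files.delete(marker);
        }
        return markers;
    }

    public static void main(String[] args) throws IOException {
        // Default local repository; pass another path as the first argument if yours differs
        Path repo = args.length > 0
                ? Paths.get(args[0])
                : Paths.get(System.getProperty("user.home"), ".m2", "repository");
        List<Path> deleted = purgeLastUpdated(repo);
        System.out.println("Deleted " + deleted.size() + " marker file(s) under " + repo);
    }
}
```

After the markers are gone, the next build attempts the download again without needing -U.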

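Adding a version condition to the keyword assertion

// keyword check: from the 0923 release the keyword must be passed URL-encoded, matching ((%[0-9A-Fa-f]{2}){2})+

// e.g. %E5%A4%A9%E5%9D%91%E9%B9%B0%E7%8C%8E

// Encoding converts each character that needs escaping to hexadecimal, then, from right to left, takes 4 digits at a time (fewer if fewer remain), splits them into groups of 2 and prefixes each group with % to produce the %XY format. User queries may also contain plain text and special characters, e.g. abc 123 《我爱我家》 亲爱的,热爱的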

String logVersion = json.getString("version");

String minVersion = "8.1.9";

int s = 0;

try {

s = compareVersion(logVersion, minVersion);

} catch (Exception e) {

e.printStackTrace();

}

if (json.containsKey("keyword")) {

String keyword = json.getString("keyword");

if (TextUtils.isEmpty(keyword)) {

System.err.println("Log #" + count + ": keyword value from the client is empty, got " + keyword + ", BUG BUG BUG!!!");

// Log at error level

logger.error("error.log======Log #" + count + ": keyword value from the client is empty, got " + keyword + ", BUG BUG BUG!!!");

// From minVersion on, flag a keyword that is neither percent-encoded nor purely alphanumeric and still consists of raw non-Latin-1 characters

} else if (s >= 0 && !(keyword.matches("((%[0-9A-Fa-f]{2}){2})+") || keyword.matches("[0-9a-zA-Z]+") || !keyword.matches("[^\\x00-\\xff]*[a-zA-Z0-9]*"))) {

System.err.println("Log #" + count + ": keyword from the client does not match the regex rule (not URL-encoded), got " + keyword + ", BUG BUG BUG!!!");

// Log at error level

logger.error("error.log======Log #" + count + ": keyword from the client does not match the regex rule (not URL-encoded), got " + keyword + ", BUG BUG BUG!!!");

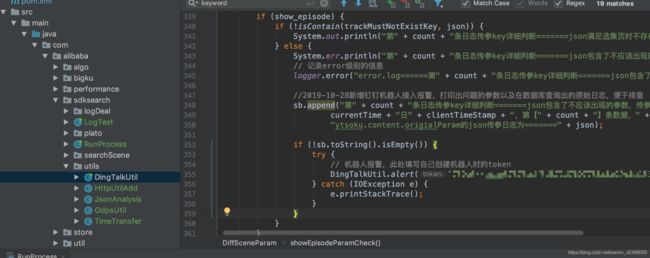

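/**

* Added 2019-10-28: DingTalk robot alerting. The alert includes the failing parameter and the raw log queried from the database, to make troubleshooting easier.

*/

sb.append("Log #" + count + ": keyword from the client does not match the regex rule (not URL-encoded), got " + keyword + ", BUG BUG BUG!!!" +

" date " + currentTime + ", timestamp " + clientTimeStamp + ", row [" + count + "], " +

"JSON parameter log from ytsoku.content.origialParam=======" + json);

if (!sb.toString().isEmpty()) {

try {

// Robot alert: put the token of the robot you created here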

DingTalkUtil.alert("750e544acab37373edf5d872d7607738c8b29c6a133428ab599c51ea120aeccb", sb.toString(), null, false);

} catch (IOException e) {

e.printStackTrace();

}

}

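// Older versions are not required to URL-encode, so only require a non-blank keyword (the original "\\S" matched single-character keywords only)

} else if (s < 0 && !keyword.matches("(?s).*\\S.*")) {

System.err.println("Log #" + count + ": keyword from the client is blank, got " + keyword + ", BUG BUG BUG!!!");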

} else {

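System.out.println("Log #" + count + ": keyword from the client is correct, check passed~~~");

// Log at info level

logger.info("log======Log #" + count + ": keyword from the client is correct, check passed~~~");

}

} else {

System.err.println("Log #" + count + ": client parameters are missing the [keyword] node, BUG BUG BUG!!!");

// Log at error level

logger.error("error.log======Log #" + count + ": client parameters are missing the [keyword] node, BUG BUG BUG!!!");

// Added 2019-10-28: DingTalk robot alerting with the failing parameter and the raw log queried from the database, to make troubleshooting easier

sb.append("Log #" + count + ": client parameters are missing the [keyword] node, BUG BUG BUG!!!" +

" date " + currentTime + ", timestamp " + clientTimeStamp + ", row [" + count + "], " +

"JSON parameter log from ytsoku.content.origialParam=======" + json);

if (!sb.toString().isEmpty()) {

try {

// Robot alert: put the token of the robot you created here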

DingTalkUtil.alert("750e544acab37373edf5d872d7607738c8b29c6a133428ab599c51ea120aeccb", sb.toString(), null, false);

} catch (IOException e) {

e.printStackTrace();

}

}

}
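As a side check on the pattern quoted in the comments, the sketch below encodes a keyword with the JDK's URLEncoder and tests it against ((%[0-9A-Fa-f]{2}){2})+ on its own. KeywordEncodingCheck and the looser ANY_ESCAPES pattern are my own additions for comparison, not part of the project. As far as I can tell, the paired pattern only accepts an even number of escapes, while UTF-8 uses three bytes per Chinese character, so a keyword with an odd number of such characters fails this single pattern even when it is correctly encoded. In the full condition above the other two patterns also take part, so this concerns only the first one.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.regex.Pattern;

/** Hypothetical check of the percent-escape pattern in isolation. */
final class KeywordEncodingCheck {
    // Pattern quoted in the notes: escapes must come in pairs
    static final Pattern PAIRED = Pattern.compile("((%[0-9A-Fa-f]{2}){2})+");
    // Looser alternative (an assumption, not the project's rule): one or more escapes
    static final Pattern ANY_ESCAPES = Pattern.compile("(%[0-9A-Fa-f]{2})+");

    /** URL-encodes raw as UTF-8. */
    static String encode(String raw) {
        try {
            return URLEncoder.encode(raw, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    public static void main(String[] args) {
        // Four Chinese characters: 12 escapes, the sample keyword from the comments
        String four = encode("\u5929\u5751\u9E70\u730E");
        // Three Chinese characters: 9 escapes
        String three = encode("\u5929\u5751\u9E70");
        System.out.println(four + " paired=" + PAIRED.matcher(four).matches());
        System.out.println(three + " paired=" + PAIRED.matcher(three).matches()
                + " any=" + ANY_ESCAPES.matcher(three).matches());
    }
}
```

Note that URLEncoder turns a space into +, which neither pattern accepts, so queries such as abc 123 need separate handling.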

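/**

* Compares two version numbers. Returns a positive number if the first is greater, a negative number if the second is greater, and 0 if they are equal.

*

* @param logVersion version reported in the log

* @param minVersion minimum version to compare against

* @return positive, negative or zero as described above

*/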

public static int compareVersion(String logVersion, String minVersion) throws Exception {

if (logVersion == null || minVersion == null) {

throw new Exception("compareVersion error:illegal params.");

}

String[] versionArray1 = logVersion.split("\\."); // split takes a regex, so the dot must be escaped; a bare "." will not work

String[] versionArray2 = minVersion.split("\\.");

int idx = 0;

int minLength = Math.min(versionArray1.length, versionArray2.length); // shorter length, e.g. comparing 8.5 with 8.5.1 uses the length of the former

int diff = 0;

// Compare segment by segment, e.g. 8.1.0 vs 8.11.0

while (idx < minLength

&& (diff = versionArray1[idx].length() - versionArray2[idx].length()) == 0 // compare segment lengths first

&& (diff = versionArray1[idx].compareTo(versionArray2[idx])) == 0) { // then compare the characters

// Segments are equal so far: move on to the next one

++idx;

}

// If a difference was found, return it. Otherwise compare the number of segments: the version with more sub-versions is larger. The result is positive when logVersion > minVersion.

diff = (diff != 0) ? diff : versionArray1.length - versionArray2.length;

return diff;

}

public static void main(String[] args) {

try {

int in = compareVersion("8.8.5", "8.33.6");

System.out.println("latter is greater (expect negative): " + in + " ==");

} catch (Exception e) {

e.printStackTrace();

}

try {

int in = compareVersion("8.2.1", "8.1.11");

System.out.println("former is greater (expect positive): " + in + " ==");

} catch (Exception e) {

e.printStackTrace();

}

}
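For comparison, here is an alternative sketch that parses each segment as an integer instead of comparing string lengths and characters. It is not the method used above. VersionCompare is a hypothetical name, and it deliberately differs in two ways: missing segments count as 0, so 8.5 and 8.5.0 compare as equal, and leading zeros are ignored, so 8.01 equals 8.1.

```java
/** Hypothetical numeric variant of the version comparison. */
final class VersionCompare {

    /**
     * Compares dotted versions segment by segment as integers.
     * Throws NumberFormatException if a segment is empty or not a number.
     */
    static int compare(String a, String b) {
        if (a == null || b == null) {
            throw new IllegalArgumentException("version must not be null");
        }
        String[] x = a.split("\\.");
        String[] y = b.split("\\.");
        int n = Math.max(x.length, y.length);
        for (int i = 0; i < n; i++) {
            // A missing segment is treated as 0
            int xi = i < x.length ? Integer.parseInt(x[i]) : 0;
            int yi = i < y.length ? Integer.parseInt(y[i]) : 0;
            if (xi != yi) {
                return Integer.compare(xi, yi);
            }
        }
        return 0;
    }
}
```

Which behavior is right depends on whether client versions such as 8.5 and 8.5.0 should be treated as the same release.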

To be continued