大数据学习hadoop3.1.3——Flume企业开发案例二(负载均衡和故障转移)

1、案例需求

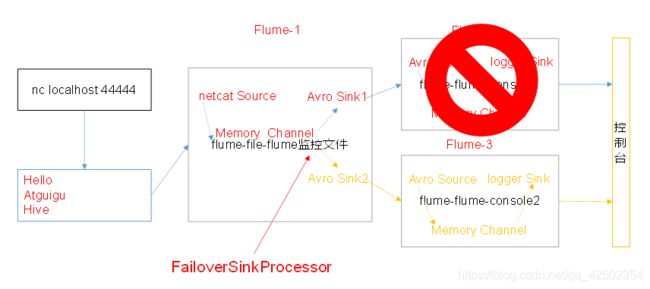

使用Flume1监控一个端口,其sink组中的sink分别对接Flume2和Flume3,采用FailoverSinkProcessor,实现故障转移的功能。

(1)准备工作

在/opt/module/flume/job目录下创建group2文件夹

cd group2/(2)创建flume-netcat-flume.conf

配置1个netcat source和1个channel、1个sink group(2个sink),分别输送给flume-flume-console1和flume-flume-console2。

编辑配置文件

vim flume-netcat-flume.conf添加如下内容

# Name the components on this agent

a1.sources = r1

a1.channels = c1

a1.sinkgroups = g1

a1.sinks = k1 k2

# Describe/configure the source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

a1.sinkgroups.g1.processor.type = failover

a1.sinkgroups.g1.processor.priority.k1 = 5

a1.sinkgroups.g1.processor.priority.k2 = 10

a1.sinkgroups.g1.processor.maxpenalty = 10000

# Describe the sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop101

a1.sinks.k1.port = 4141

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop101

a1.sinks.k2.port = 4142

# Describe the channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinkgroups.g1.sinks = k1 k2

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c1

(3)创建flume-flume-console1.conf

配置上级Flume输出的Source,输出是到本地控制台。

编辑配置文件

vim flume-flume-console1.conf添加如下内容

# Name the components on this agent

a2.sources = r1

a2.sinks = k1

a2.channels = c1

# Describe/configure the source

a2.sources.r1.type = avro

a2.sources.r1.bind = hadoop101

a2.sources.r1.port = 4141

# Describe the sink

a2.sinks.k1.type = logger

# Describe the channel

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

(4)创建flume-flume-console2.conf

配置上级Flume输出的Source,输出是到本地控制台。

编辑配置文件

vim flume-flume-console2.conf添加如下内容

# Name the components on this agent

a3.sources = r1

a3.sinks = k1

a3.channels = c2

# Describe/configure the source

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop101

a3.sources.r1.port = 4142

# Describe the sink

a3.sinks.k1.type = logger

# Describe the channel

a3.channels.c2.type = memory

a3.channels.c2.capacity = 1000

a3.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r1.channels = c2

a3.sinks.k1.channel = c2

(5)执行配置文件

分别开启对应配置文件:flume-flume-console2,flume-flume-console1,flume-netcat-flume。

bin/flume-ng agent --conf conf/ --name a3 --conf-file job/group2/flume-flume-console2.conf -Dflume.root.logger=INFO,console

bin/flume-ng agent --conf conf/ --name a2 --conf-file job/group2/flume-flume-console1.conf -Dflume.root.logger=INFO,console

bin/flume-ng agent --conf conf/ --name a1 --conf-file job/group2/flume-netcat-flume.conf

(6)使用netcat工具向本机的44444端口发送内容

nc localhost 44444(7)查看Flume2及Flume3的控制台打印日志

(8)将Flume2 kill,观察Flume3的控制台打印情况。

注:使用jps -ml查看Flume进程。