【强化学习】Policy Gradient

原文链接:https://www.yuque.com/yahei/hey-yahei/rl-policy_gradient

参考:

- 机器学习深度学习(李宏毅)- Deep Reinforcemen Learning3_1

- 机器学习深度学习(李宏毅)- Policy Gradient

- 机器学习深度学习(李宏毅)- Proximal Policy Optimization

策略梯度

策略梯度(Policy Gradient)是一种policy-based的RL算法。

基本形式

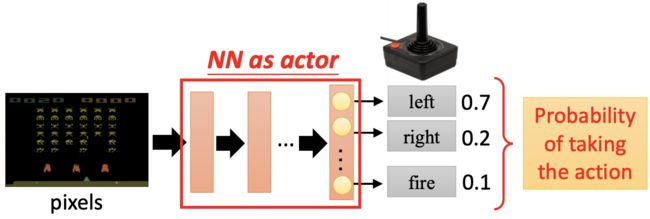

将游戏图像输入给神经网络,输出各个动作的决策概率,在预测阶段直接挑选概率最大的动作,在训练阶段则按照概率随机执行动作。

我们用采取某个动作之后的奖励总和(包括后续动作产生的奖励)来评估执行者actor的好坏,也即

R θ = ∑ t = 1 T r t R_{\theta}=\sum_{t=1}^{T} r_{t} Rθ=t=1∑Trt

其中, r t r_t rt是采取该动作后第 t t t个状态所获的奖励。

由于状态的复杂多变,以及每次采取动作的随机性,这里的 R θ R_\theta Rθ显然是一个随机变量,实际操作中我们使用它的期望 R ˉ θ \bar{R}_\theta Rˉθ来评估执行者 π θ ( s ) \pi_\theta(s) πθ(s)的好坏。

记一个动作序列(通常成为片段episode)为

τ = { τ 1 , τ 2 , . . . , τ T } \tau = \{\tau_1, \tau_2, ..., \tau_T\} τ={τ1,τ2,...,τT}

τ i = ( s i , a i , r i ) \tau_i = (s_i, a_i, r_i) τi=(si,ai,ri)表示该片段中第 i i i个状态 s i s_i si下采取动作 a i a_i ai后获得了奖励 r i r_i ri;

【这里强调一下上下标的含义,上标代表采样序号,下标代表本次采用的时间序号,如下文的 τ t n \tau_t^n τtn代表第 n n n次采样中,第 t t t个时间点的阶段(三元组 s t n , a t n , r t n s_t^n,a_t^n,r_t^n stn,atn,rtn);另外, r r r和 τ \tau τ长得可太像了,千万别看错!】

实际中我们几乎不可能遍历所有可能的动作序列,一般都是通过 N N N次采样的平均结果来估计期望,也即

R ˉ θ = ∑ τ R ( τ ) P ( τ ∣ θ ) ≈ 1 N ∑ n = 1 N R ( τ n ) \bar{R}_{\theta}=\sum_{\tau} R(\tau) P(\tau | \theta) \approx \frac{1}{N} \sum_{n=1}^{N} R\left(\tau^{n}\right) Rˉθ=τ∑R(τ)P(τ∣θ)≈N1n=1∑NR(τn)

我们的目标是最大化奖励期望,即

θ ∗ = arg max θ R ˉ θ \theta^{*}=\arg \max _{\theta} \bar{R}_{\theta} θ∗=argθmaxRˉθ

自然而然想到,可以用梯度上升法迭代更新权重

θ t + 1 ← θ t + η ∇ R ˉ θ t \theta^{t+1} \leftarrow \theta^{t}+\eta \nabla \bar{R}_{\theta^{t}} θt+1←θt+η∇Rˉθt

接下来考虑如何求解 ∇ R ˉ θ \nabla \bar{R}_{\theta} ∇Rˉθ,直接对 R θ ˉ \bar{R_\theta} Rθˉ求导

∇ R ˉ θ = ∑ τ R ( τ ) ∇ P ( τ ∣ θ ) \nabla \bar{R}_{\theta} =\sum_{\tau} R(\tau) \nabla P(\tau | \theta) ∇Rˉθ=τ∑R(τ)∇P(τ∣θ)

注意 R ( τ ) R(\tau) R(τ)跟 θ \theta θ无关,它不需要可导,即使是个黑盒都无关紧要;而

P ( τ ∣ θ ) = p ( s 1 ) ∏ t = 1 T p ( a t ∣ s t , θ ) p ( r t , s t + 1 ∣ s t , a t ) P(\tau|\theta) = p\left(s_{1}\right) \prod_{t=1}^{T} p\left(a_{t} | s_{t}, \theta\right) p\left(r_{t}, s_{t+1} | s_{t}, a_{t}\right) P(τ∣θ)=p(s1)t=1∏Tp(at∣st,θ)p(rt,st+1∣st,at)

这里 p ( s 1 ) p\left(s_{1}\right) p(s1)和 p ( r t , s t + 1 ∣ s t , a t ) p\left(r_{t}, s_{t+1} | s_{t}, a_{t}\right) p(rt,st+1∣st,at)都与 θ \theta θ无关,看起来可太碍事了,不如我们取个对数

l o g P ( τ ∣ θ ) = log p ( s 1 ) + ∑ t = 1 T log p ( a t ∣ s t , θ ) + log p ( r t , s t + 1 ∣ s t , a t ) log P(\tau|\theta) = \log p\left(s_{1}\right)+\sum_{t=1}^{T} \log p\left(a_{t} | s_{t}, \theta\right)+\log p\left(r_{t}, s_{t+1} | s_{t}, a_{t}\right) logP(τ∣θ)=logp(s1)+t=1∑Tlogp(at∣st,θ)+logp(rt,st+1∣st,at)

显然,

∇ log P ( τ ∣ θ ) = ∑ t = 1 T ∇ log p ( a t ∣ s t , θ ) \nabla \log P(\tau | \theta)=\sum_{t=1}^{T} \nabla \log p\left(a_{t} | s_{t}, \theta\right) ∇logP(τ∣θ)=t=1∑T∇logp(at∣st,θ)

这可干净多了。为了应用对数形式的梯度,那么将奖励梯度做一下简单变换,

∇ R ˉ θ = ∑ τ R ( τ ) ∇ P ( τ ∣ θ ) = ∑ τ R ( τ ) P ( τ ∣ θ ) ∇ P ( τ ∣ θ ) P ( τ ∣ θ ) = ∑ τ R ( τ ) P ( τ ∣ θ ) ∇ log P ( τ ∣ θ ) 【 dlog ( f ( x ) ) d x = 1 f ( x ) d f ( x ) d x 】 ≈ 1 N ∑ n = 1 N R ( τ n ) ∇ log P ( τ n ∣ θ ) 【 通 过 采 样 来 估 计 ∑ τ P ( τ ∣ θ ) 】 = 1 N ∑ n = 1 N R ( τ n ) ∑ t = 1 T n ∇ log p ( a t n ∣ s t n , θ ) 【 将 ∇ log P ( τ ∣ θ ) 代 入 】 = 1 N ∑ n = 1 N ∑ t = 1 T n R ( τ n ) ∇ log p ( a t n ∣ s t n , θ ) \begin{aligned} \nabla \bar{R}_{\theta} &=\sum_{\tau} R(\tau) \nabla P(\tau | \theta) \\ &=\sum_{\tau} R(\tau) P(\tau | \theta) \frac{\nabla P(\tau | \theta)}{P(\tau | \theta)} \\ &= \sum_{\tau} R(\tau) P(\tau | \theta) \nabla \log P(\tau | \theta) && 【\frac{\operatorname{dlog}(f(x))}{d x}=\frac{1}{f(x)} \frac{d f(x)}{d x}】\\ & \approx \frac{1}{N} \sum_{n=1}^{N} R\left(\tau^{n}\right) \nabla \log P\left(\tau^{n} | \theta\right) && 【通过采样来估计\sum_{\tau} P(\tau | \theta)】\\ &=\frac{1}{N} \sum_{n=1}^{N} R\left(\tau^{n}\right) \sum_{t=1}^{T_{n}} \nabla \log p\left(a_{t}^{n} | s_{t}^{n}, \theta\right) && 【将\nabla \log P(\tau | \theta)代入】\\ &=\frac{1}{N} \sum_{n=1}^{N} \sum_{t=1}^{T_{n}} R\left(\tau^{n}\right) \nabla \log p\left(a_{t}^{n} | s_{t}^{n}, \theta\right) \end{aligned} ∇Rˉθ=τ∑R(τ)∇P(τ∣θ)=τ∑R(τ)P(τ∣θ)P(τ∣θ)∇P(τ∣θ)=τ∑R(τ)P(τ∣θ)∇logP(τ∣θ)≈N1n=1∑NR(τn)∇logP(τn∣θ)=N1n=1∑NR(τn)t=1∑Tn∇logp(atn∣stn,θ)=N1n=1∑Nt=1∑TnR(τn)∇logp(atn∣stn,θ)【dxdlog(f(x))=f(x)1dxdf(x)】【通过采样来估计τ∑P(τ∣θ)】【将∇logP(τ∣θ)代入】

为什么要使用对数呢?

除了前边提到的求导形式简单之外,还有一个理由——首先考虑原始的 ∇ p ( a t n ∣ s t n , θ ) \nabla p\left(a_{t}^{n} | s_{t}^{n}, \theta\right) ∇p(atn∣stn,θ),

假设某种相对极端的情况,在一个片段中频繁采取动作a,那么在训练时会倾向于增加动作a的概率,即使动作b的奖励要高于动作a(但动作b的采用概率很低),这显然是不合理的,而按照前述,对数形式有

log p ( a t n ∣ s t n , θ ) = ∇ p ( a t n ∣ s t n , θ ) p ( a t n ∣ s t n , θ ) \log p\left(a_{t}^{n} | s_{t}^{n}, \theta\right) = \frac{\nabla p\left(a_{t}^{n} | s_{t}^{n}, \theta\right)}{p\left(a_{t}^{n} | s_{t}^{n}, \theta\right)} logp(atn∣stn,θ)=p(atn∣stn,θ)∇p(atn∣stn,θ)

这相当于用概率 p ( a t n ∣ s t n , θ ) p\left(a_{t}^{n} | s_{t}^{n}, \theta\right) p(atn∣stn,θ)对原始的梯度 ∇ p ( a t n ∣ s t n , θ ) \nabla p\left(a_{t}^{n} | s_{t}^{n}, \theta\right) ∇p(atn∣stn,θ)做了规范化,从而避免了前边提到的极端情况。

两点改进

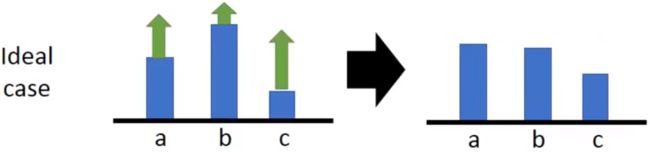

接下来我们看看 R ( τ n ) R\left(\tau^{n}\right) R(τn),有些任务可能并没有惩罚,也就是 R ( τ n ) ≥ 0 R\left(\tau^{n}\right) \geq 0 R(τn)≥0;即使任务有惩罚,但也有可能出现一个片段中所有奖励都是正值的情况,那么此时所有采样涉及的动作概率都会增加——但不用担心,由于所有动作的概率之和约束为1,所以概率“增加”较少的动作会被其他同样“增加”概率的动作挤兑,实际上概率是下降了。

但这只考虑了大家都被采样到的情况,假如有某个动作很倒霉,它在当前片段中一次都没有被采样,但它的概率依旧被降低了,这明显是不合理的。

所以我们可以为奖励引入一个baseline,让reward有正有负,从而缓解这样的问题。

∇ R ˉ θ ≈ 1 N ∑ n = 1 N ∑ t = 1 T n ( R ( τ n ) − b ) ∇ log p ( a t n ∣ s t n , θ ) \nabla \bar{R}_{\theta} \approx \frac{1}{N} \sum_{n=1}^{N} \sum_{t=1}^{T_{n}}\left(R\left(\tau^{n}\right)-b\right) \nabla \log p\left(a_{t}^{n} | s_{t}^{n}, \theta\right) ∇Rˉθ≈N1n=1∑Nt=1∑Tn(R(τn)−b)∇logp(atn∣stn,θ)

baseline可以手工设计;也可以取奖励的期望,即 b = E [ R ( τ ) ] b = E[R(\tau)] b=E[R(τ)]

再来讨论权重 ( R ( τ n ) − b ) \left(R\left(\tau^{n}\right)-b\right) (R(τn)−b)的合理性。

考虑一个很小的片段 τ = { ( s 1 , a 1 , + 5 ) , ( s 2 , a 2 , 0 ) , ( s 3 , a 3 , − 2 ) } \tau = \{ (s_1, a_1, +5), (s_2, a_2, 0), (s_3, a_3, -2) \} τ={(s1,a1,+5),(s2,a2,0),(s3,a3,−2)},可以算出它的奖励为 R ( τ ) = + 3 R(\tau)=+3 R(τ)=+3,这样一来 ∇ log p ( a 1 ∣ s 1 , θ ) , ∇ log p ( a 2 ∣ s 2 , θ ) , ∇ log p ( a 3 ∣ s 3 , θ ) \nabla \log p\left(a_1 | s_1, \theta\right), \nabla \log p\left(a_2 | s_2, \theta\right), \nabla \log p\left(a_3 | s_3, \theta\right) ∇logp(a1∣s1,θ),∇logp(a2∣s2,θ),∇logp(a3∣s3,θ)的权重都是+3。可是总奖励 R ( τ ) R(\tau) R(τ)的正值贡献主要来源于 τ 1 = ( s 1 , a 1 , + 5 ) \tau_1 = (s_1, a_1, +5) τ1=(s1,a1,+5),但我们又以相同的力度鼓励 τ 2 = ( s 2 , a 2 , 0 ) \tau_2 = (s_2, a_2, 0) τ2=(s2,a2,0)甚至 τ 3 = ( s 3 , a 3 , − 2 ) \tau_3 = (s_3, a_3, -2) τ3=(s3,a3,−2),这显然是有问题的。更合理的做法应当是统计当前阶段之后的奖励之和来作为自己的权重,而不是整个片段的总奖励,也即

R ( τ t n ) = ∑ t ′ = t T n r t ′ n R(\tau^n_t) = \sum^{T_n}_{t'=t}r_{t'}^n R(τtn)=t′=t∑Tnrt′n

而且,为了鼓励尽早取得奖励(或者反过来说,为了减弱遥远未来的奖励的重要性),可以引入一个超参数折扣因子(discount factor) γ \gamma γ,奖励值进一步改造为

R ( τ t n ) = ∑ t ′ = t T n γ t ′ − t r t ′ n , γ < 1 R(\tau^n_t) = \sum^{T_n}_{t'=t} \gamma^{t'-t} r_{t'}^n, \gamma < 1 R(τtn)=t′=t∑Tnγt′−trt′n,γ<1

最终变为

∇ R ˉ θ ≈ 1 N ∑ n = 1 N ∑ t = 1 T n ( R ( τ t n ) − b ) ∇ log p ( a t n ∣ s t n , θ ) = 1 N ∑ n = 1 N ∑ t = 1 T n ( ∑ t ′ = t T n γ t ′ − t r t ′ n − b ) ∇ log p ( a t n ∣ s t n , θ ) , γ < 1 \begin{aligned} \nabla \bar{R}_{\theta} &\approx \frac{1}{N} \sum_{n=1}^{N} \sum_{t=1}^{T_{n}}\left(R\left(\tau^{n}_t\right)-b\right) \nabla \log p\left(a_{t}^{n} | s_{t}^{n}, \theta\right) \\ &= \frac{1}{N} \sum_{n=1}^{N} \sum_{t=1}^{T_{n}}\left(\sum^{T_n}_{t'=t} \gamma^{t'-t} r_{t'}^n - b \right) \nabla \log p\left(a_{t}^{n} | s_{t}^{n}, \theta\right), \gamma < 1 \end{aligned} ∇Rˉθ≈N1n=1∑Nt=1∑Tn(R(τtn)−b)∇logp(atn∣stn,θ)=N1n=1∑Nt=1∑Tn(t′=t∑Tnγt′−trt′n−b)∇logp(atn∣stn,θ),γ<1

事实上, ( R ( τ t n ) − b ) \left(R\left(\tau^{n}_t\right)-b\right) (R(τtn)−b)这一项被称为Advantage Function,记为 A θ ( s t , a t ) A^\theta(s_t, a_t) Aθ(st,at),甚至可以训练一个critic来替代这里的手工设计,也即之后会讲的Value-based方法。

Off-Policy

如果用于采样的模型跟实际训练的模型是同一个模型,则称为On-Policy;如果不是同一个模型,则称为Off-Policy。为什么好好的不用同一个模型呢?这是因为我们可以用之前的模型进行采样获得很多数据,而用这些数据来做多次迭代,每迭代一定部署后再用训练模型的参数去更新采样模型,从而有效加快强化学习的训练过程。

分布修正

由于经过迭代,采样的模型的采样结果跟实际训练的模型的采样是会有差距的,因此需要引入一些措施来修正。

原始的梯度为

∇ R ˉ θ = E τ ∼ p θ ( τ ) [ R ( τ ) ∇ log p θ ( τ ) ] \nabla \bar{R}_{\theta}=E_{\tau \sim p_{\theta}(\tau)}\left[R(\tau) \nabla \log p_{\theta}(\tau)\right] ∇Rˉθ=Eτ∼pθ(τ)[R(τ)∇logpθ(τ)]

记训练模型的样本分布、采样模型的样本分布分别为 p ( x ) , q ( x ) p(x), q(x) p(x),q(x)

E x ∼ p [ f ( x ) ] = ∫ f ( x ) p ( x ) d x = ∫ f ( x ) p ( x ) q ( x ) q ( x ) d x = E x ∼ q [ f ( x ) p ( x ) q ( x ) ] \begin{aligned} E_{x \sim p}[f(x)] &= \int f(x) p(x) d x \\ &=\int f(x) \frac{p(x)}{q(x)} q(x) d x \\ &= E_{x \sim q}[f(x)\frac{p(x)}{q(x)}] \end{aligned} Ex∼p[f(x)]=∫f(x)p(x)dx=∫f(x)q(x)p(x)q(x)dx=Ex∼q[f(x)q(x)p(x)]

修正后的梯度可以表示为

∇ R ˉ θ = E τ ∼ p θ ′ ( τ ) [ p θ ( τ ) p θ ′ ( τ ) R ( τ ) ∇ log p θ ( τ ) ] \nabla \bar{R}_{\theta}=E_{\tau \sim p_{\theta^{\prime}}(\tau)}\left[\frac{p_{\theta}(\tau)}{p_{\theta^{\prime}}(\tau)} R(\tau) \nabla \log p_{\theta}(\tau)\right] ∇Rˉθ=Eτ∼pθ′(τ)[pθ′(τ)pθ(τ)R(τ)∇logpθ(τ)]

也即用 π θ ′ \pi_{\theta'} πθ′采样的数据来训练 π θ \pi_\theta πθ,并且给奖励乘以一个 p θ ( τ ) p θ ′ ( τ ) \frac{p_\theta(\tau)}{p_{\theta'}(\tau)} pθ′(τ)pθ(τ)重要性因子作为修正(称为Importance Sample),修正后的梯度,可以保证采样数据的样本上期望是一致的。

展开来的话

∇ R ˉ θ = E τ ∼ p θ ′ ( τ ) [ p θ ( τ ) p θ ′ ( τ ) R ( τ ) ∇ log p θ ( τ ) ] = E ( s t , a t ) ∼ π θ [ A θ ( s t , a t ) ∇ log p θ ( a t n ∣ s t n ) ] = E ( s t , a t ) ∼ π θ ′ [ P θ ( s t , a t ) P θ ′ ( s t , a t ) A θ ′ ( s t , a t ) ∇ log p θ ( a t n ∣ s t n ) ] = E ( s t , a t ) ∼ π θ ′ [ p θ ( a t ∣ s t ) p θ ′ ( a t ∣ s t ) p θ ( s t ) p θ ′ ( s t ) A θ ′ ( s t , a t ) ∇ log p θ ( a t n ∣ s t n ) ] ( . . . p θ ( s t ) p θ ′ ( s t ) 的 实 际 值 不 好 获 得 , 但 一 般 认 为 环 境 是 θ 无 关 的 , 所 以 不 妨 假 设 其 值 为 1... ) = E ( s t , a t ) ∼ π θ ′ [ p θ ( a t ∣ s t ) p θ ′ ( a t ∣ s t ) A θ ′ ( s t , a t ) ∇ log p θ ( a t n ∣ s t n ) ] \begin{aligned} \nabla \bar{R}_{\theta} &= E_{\tau \sim p_{\theta^{\prime}}(\tau)}\left[\frac{p_{\theta}(\tau)}{p_{\theta^{\prime}}(\tau)} R(\tau) \nabla \log p_{\theta}(\tau)\right] \\ &=E_{\left(s_{t}, a_{t}\right) \sim \pi_{\theta}}\left[A^{\theta}\left(s_{t}, a_{t}\right) \nabla \log p_{\theta}\left(a_{t}^{n} | s_{t}^{n}\right)\right] \\ &= E_{\left(s_{t}, a_{t}\right) \sim \pi_{\theta^{\prime}}}\left[\frac{P_{\theta}\left(s_{t}, a_{t}\right)}{P_{\theta'}\left(s_{t}, a_{t}\right)} A^{\theta'}\left(s_{t}, a_{t}\right) \nabla \log p_{\theta}\left(a_{t}^{n} | s_{t}^{n}\right)\right] \\ &= E_{\left(s_{t}, a_{t}\right) \sim \pi_{\theta^{\prime}}}\left[\frac{p_{\theta}\left(a_{t} | s_{t}\right)}{p_{\theta^{\prime}}\left(a_{t} | s_{t}\right)} \frac{p_{\theta}\left(s_{t}\right)}{p_{\theta^{\prime}}\left(s_{t}\right)} A^{\theta^{\prime}}\left(s_{t}, a_{t}\right) \nabla \log p_{\theta}\left(a_{t}^{n} | s_{t}^{n}\right)\right] \\ &(...\frac{p_{\theta}\left(s_{t}\right)}{p_{\theta^{\prime}}\left(s_{t}\right)}的实际值不好获得,但一般认为环境是\theta无关的,所以不妨假设其值为1...) \\ &= E_{\left(s_{t}, a_{t}\right) \sim \pi_{\theta^{\prime}}}\left[\frac{p_{\theta}\left(a_{t} | s_{t}\right)}{p_{\theta^{\prime}}\left(a_{t} | s_{t}\right)} A^{\theta^{\prime}}\left(s_{t}, a_{t}\right) \nabla \log p_{\theta}\left(a_{t}^{n} | s_{t}^{n}\right)\right] \end{aligned} ∇Rˉθ=Eτ∼pθ′(τ)[pθ′(τ)pθ(τ)R(τ)∇logpθ(τ)]=E(st,at)∼πθ[Aθ(st,at)∇logpθ(atn∣stn)]=E(st,at)∼πθ′[Pθ′(st,at)Pθ(st,at)Aθ′(st,at)∇logpθ(atn∣stn)]=E(st,at)∼πθ′[pθ′(at∣st)pθ(at∣st)pθ′(st)pθ(st)Aθ′(st,at)∇logpθ(atn∣stn)](...pθ′(st)pθ(st)的实际值不好获得,但一般认为环境是θ无关的,所以不妨假设其值为1...)=E(st,at)∼πθ′[pθ′(at∣st)pθ(at∣st)Aθ′(st,at)∇logpθ(atn∣stn)]

根据 ∇ f ( x ) = f ( x ) ∇ log f ( x ) \nabla f(x) = f(x) \nabla \log f(x) ∇f(x)=f(x)∇logf(x)可以由梯度反推出目标函数

J θ ′ ( θ ) = E ( s t , a t ) ∼ π θ ′ [ p θ ( a t ∣ s t ) p θ ′ ( a t ∣ s t ) A θ ′ ( s t , a t ) ] J^{\theta^{\prime}}(\theta)=E_{\left(s_{t}, a_{t}\right) \sim \pi_{\theta^{\prime}}}\left[\frac{p_{\theta}\left(a_{t} | s_{t}\right)}{p_{\theta^{\prime}}\left(a_{t} | s_{t}\right)} A^{\theta^{\prime}}\left(s_{t}, a_{t}\right)\right] Jθ′(θ)=E(st,at)∼πθ′[pθ′(at∣st)pθ(at∣st)Aθ′(st,at)]

有了修正,是不是就可以为所欲为了呢?其实也不是,从方差上看

Var x ∼ p [ f ( x ) ] = E x ∼ p [ f ( x ) 2 ] − ( E x ∼ p [ f ( x ) ] ) 2 \operatorname{Var}_{x \sim p}[f(x)]=E_{x \sim p}\left[f(x)^{2}\right]-\left(E_{x \sim p}[f(x)]\right)^{2} Varx∼p[f(x)]=Ex∼p[f(x)2]−(Ex∼p[f(x)])2

而

Var x ∼ q [ f ( x ) p ( x ) q ( x ) ] = E x ∼ q [ ( f ( x ) p ( x ) q ( x ) ) 2 ] − ( E x ∼ q [ f ( x ) p ( x ) q ( x ) ] ) 2 = E x ∼ q [ f ( x ) 2 p ( x ) q ( x ) p ( x ) q ( x ) ] − ( E x ∼ p [ f ( x ) ] ) 2 = E x ∼ p [ f ( x ) 2 p ( x ) q ( x ) ] − ( E x ∼ p [ f ( x ) ] ) 2 \begin{aligned} \operatorname{Var}_{x \sim q}\left[f(x) \frac{p(x)}{q(x)}\right] &= E_{x \sim q}\left[\left(f(x) \frac{p(x)}{q(x)}\right)^{2}\right]-\left(E_{x \sim q}\left[f(x) \frac{p(x)}{q(x)}\right]\right)^{2} \\ &= E_{x \sim q}\left[f(x)^{2} \frac{p(x)}{q(x)} \frac{p(x)}{q(x)}\right]-\left(E_{x \sim p}\left[f(x) \right]\right)^{2} \\ &= E_{x \sim p}\left[f(x)^{2} \frac{p(x)}{q(x)}\right]-\left(E_{x \sim p}\left[f(x) \right]\right)^{2} \end{aligned} Varx∼q[f(x)q(x)p(x)]=Ex∼q[(f(x)q(x)p(x))2]−(Ex∼q[f(x)q(x)p(x)])2=Ex∼q[f(x)2q(x)p(x)q(x)p(x)]−(Ex∼p[f(x)])2=Ex∼p[f(x)2q(x)p(x)]−(Ex∼p[f(x)])2

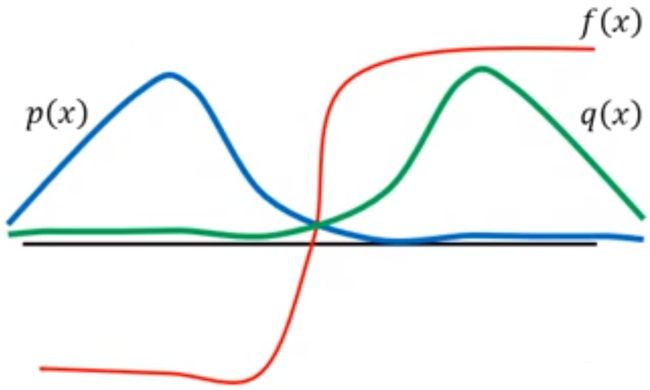

显然方差是不完全相同的,而且随着 p ( x ) , q ( x ) p(x), q(x) p(x),q(x)差异变大,方差的差异也会随之变大。

举个直观点的例子,

因为 p ( x ) p(x) p(x)在左半部分概率比较高,而且这部分 f ( x ) < 0 f(x) < 0 f(x)<0,显然 E x ∼ p [ f ( x ) ] E_{x \sim p}[f(x)] Ex∼p[f(x)]是负数;

因为 q ( x ) q(x) q(x)在右半部分概率比较高,所以有可能采样点都在右侧,此时 E x ∼ q [ f ( x ) p ( x ) q ( x ) ] ≈ 1 N ∑ i = 1 N f ( x i ) p ( x i ) q ( x i ) E_{x \sim q}[f(x)\frac{p(x)}{q(x)}] \approx \frac{1}{N}\sum^N_{i=1} f(x_i) \frac{p(x_i)}{q(x_i)} Ex∼q[f(x)q(x)p(x)]≈N1i=1∑Nf(xi)q(xi)p(xi)算出来却是正数,这下可差得远了。

所以需要采取一些额外措施,来避免 p ( x ) , q ( x ) p(x),q(x) p(x),q(x)相差太大。

TRPO和PPO

原始的目标函数为

J θ ′ ( θ ) = E ( s t , a t ) ∼ π θ ′ [ p θ ( a t ∣ s t ) p θ ′ ( a t ∣ s t ) A θ ′ ( s t , a t ) ] J^{\theta^{\prime}}(\theta)=E_{\left(s_{t}, a_{t}\right) \sim \pi_{\theta^{\prime}}}\left[\frac{p_{\theta}\left(a_{t} | s_{t}\right)}{p_{\theta^{\prime}}\left(a_{t} | s_{t}\right)} A^{\theta^{\prime}}\left(s_{t}, a_{t}\right)\right] Jθ′(θ)=E(st,at)∼πθ′[pθ′(at∣st)pθ(at∣st)Aθ′(st,at)]

**信任区域策略优化(Trust Region Policy Optimization, TRPO)**对目标函数做了一些约束,要求采样模型和训练模型在输出分布上的差异不能太大,

J T R P O θ ′ ( θ ) = E ( s t , a t ) ∼ π θ ′ [ p θ ( a t ∣ s t ) p θ ′ ( a t ∣ s t ) A θ ′ ( s t , a t ) ] , K L ( θ , θ ′ ) < δ J_{T R P O}^{\theta^{\prime}}(\theta)=E_{\left(s_{t}, a_{t}\right) \sim \pi_{\theta^{\prime}}}\left[\frac{p_{\theta}\left(a_{t} | s_{t}\right)}{p_{\theta^{\prime}}\left(a_{t} | s_{t}\right)} A^{\theta^{\prime}}\left(s_{t}, a_{t}\right)\right], K L\left(\theta, \theta^{\prime}\right)<\delta JTRPOθ′(θ)=E(st,at)∼πθ′[pθ′(at∣st)pθ(at∣st)Aθ′(st,at)],KL(θ,θ′)<δ

注意:这里 K L ( θ , θ ′ ) KL(\theta, \theta') KL(θ,θ′)指的不是参数分布的KL散度,而是模型输出的分布,下文的PPO也是如此。

但带条件约束的目标函数在实际操作中并不好处理;

邻近策略优化(Proximal Policy Optimization, PPO)将该约束体现在了计算公式中去,直接用它来做惩罚,

J P P O θ ′ ( θ ) = E ( s t , a t ) ∼ π θ ′ [ p θ ( a t ∣ s t ) p θ ′ ( a t ∣ s t ) A θ ′ ( s t , a t ) ] − β K L ( θ , θ ′ ) J_{P P O}^{\theta^{\prime}}(\theta) = E_{\left(s_{t}, a_{t}\right) \sim \pi_{\theta^{\prime}}}\left[\frac{p_{\theta}\left(a_{t} | s_{t}\right)}{p_{\theta^{\prime}}\left(a_{t} | s_{t}\right)} A^{\theta^{\prime}}\left(s_{t}, a_{t}\right)\right]-\beta K L\left(\theta, \theta^{\prime}\right) JPPOθ′(θ)=E(st,at)∼πθ′[pθ′(at∣st)pθ(at∣st)Aθ′(st,at)]−βKL(θ,θ′)

同时也有一种更简洁的形式,通常称为PPO2,

J P P O 2 θ k ( θ ) = ∑ ( s t , a t ) min ( p θ ( a t ∣ s t ) p θ k ( a t ∣ s t ) A θ k ( s t , a t ) , clip ( p θ ( a t ∣ s t ) p θ k ( a t ∣ s t ) , 1 − ε , 1 + ε ) A θ k ( s t , a t ) ) J_{P P O 2}^{\theta^{k}}(\theta) = \sum_{\left(s_{t}, a_{t}\right)} \min \left(\frac{p_{\theta}\left(a_{t} | s_{t}\right)}{p_{\theta^{k}}\left(a_{t} | s_{t}\right)} A^{\theta^{k}}\left(s_{t}, a_{t}\right) , \text { clip }\left(\frac{p_{\theta}\left(a_{t} | s_{t}\right)}{p_{\theta^{k}}\left(a_{t} | s_{t}\right)}, 1-\varepsilon, 1+\varepsilon\right) A^{\theta^{k}}\left(s_{t}, a_{t}\right) \right) JPPO2θk(θ)=(st,at)∑min(pθk(at∣st)pθ(at∣st)Aθk(st,at), clip (pθk(at∣st)pθ(at∣st),1−ε,1+ε)Aθk(st,at))

看起来复杂,实际上是加了一个自适应的一个权重,