强化学习笔记(六)策略梯度法(Policy Gradient)及Pytorch实现

强化学习笔记(六)策略梯度法(Policy Gradient)及Pytorch实现

- Q1:Policy-Based方法相比Value-Based的优劣在哪?

- Q2:如何直观地理解Policy-Based模型?

- Q3:似然技巧(Likelihood Ratios)

- Q4:基于Pytorch的蒙特卡罗策略梯度Reinforce算法

这节对应UCL课程第七讲,我决定把Actor-Critic放到下一节学习。之前所学习的方法都是Value-Based,算是一种间接方法。我们先算出价值函数,再去做决策。我们使用的策略都是确定性策略,类似一条路走到黑。在面对一个确定的状态时,我们会采用动作价值函数最大的动作,而不会考虑其它,即 π ( a ∣ s ) = 1 \pi(a|s)=1 π(a∣s)=1。而Policy-Based是一种直接的方法,我们直接去评估策略的好坏,然后进行选择。并且在执行的时候,策略不再是Deterministic了,而遵循我们算出的概率分布。

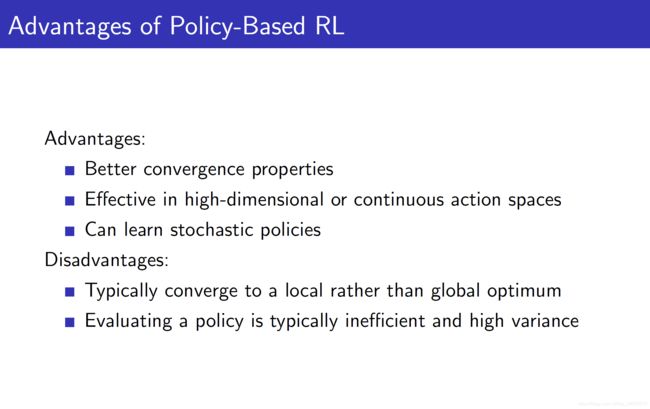

Q1:Policy-Based方法相比Value-Based的优劣在哪?

Q2:如何直观地理解Policy-Based模型?

Value-based输入状态,输出的是各动作价值,然后选动作价值最大的策略;Policy-based输入状态,输出的直接是策略 π ( a ∣ s ) \pi(a|s) π(a∣s),以概率的形式呈现,且 ∑ a π ( a ∣ s ) = 1 \sum\limits_{a}\pi(a|s)=1 a∑π(a∣s)=1.

但在寻优的过程中我们必须先建立一个优化函数或者有一个收敛目标,像Value-Based是使价值函数逐渐收敛,Monte Carlo使用收获值来进行偏移,时序差分用TD Target,这些都是老生常谈了,最终价值函数会收敛到一个固定值。所以我们必须先建立一个策略梯度的优化目标 J ( θ ) J(\theta) J(θ). 这个 θ \theta θ就是生成策略 π θ ( a ∣ s ) \pi_{\theta}(a|s) πθ(a∣s)的权重,类比于DQN中的权重 w \textbf w w. 直观来说,可以看成就是个输入为状态量,输出为价值或者策略的神经网络。

总结一下:

- 输入是状态量,输出是最优策略(即各个动作实施的概率)的模型就是Policy-based模型,它的输出直接告诉你怎么做,而不像Value-Based需要价值函数去引导。

- 怎么去更新这个网络:优化目标 J ( θ ) J(\theta) J(θ). 它可以有多种形式,比如每一时间步的平均奖励,平均价值等。

Q3:似然技巧(Likelihood Ratios)

∇ θ π θ ( s , a ) = π θ ( s , a ) ∇ θ π θ ( s , a ) π θ ( s , a ) = π θ ( s , a ) ∇ θ log π θ ( s , a ) \begin{aligned} \nabla_{\theta} \pi_{\theta}(s, a) &=\pi_{\theta}(s, a) \frac{\nabla_{\theta} \pi_{\theta}(s, a)}{\pi_{\theta}(s, a)} \\ &=\pi_{\theta}(s, a) \nabla_{\theta} \log \pi_{\theta}(s, a) \end{aligned} ∇θπθ(s,a)=πθ(s,a)πθ(s,a)∇θπθ(s,a)=πθ(s,a)∇θlogπθ(s,a)

其中, ∇ θ log π θ ( s , a ) \nabla_{\theta} \log \pi_{\theta}(s, a) ∇θlogπθ(s,a)叫做分数( Score Function \textbf {Score Function} Score Function)。这个操作让期望的计算更加简单。

策略函数可以选择Softmax或者高斯分布。对数化梯度后,确实方便计算了。使用似然比可以计算one-step MDP的策略梯度:

J ( θ ) = E π θ [ r ] = ∑ s ∈ S d ( s ) ∑ a ∈ A π θ ( s , a ) R s , a ∇ θ J ( θ ) = ∑ s ∈ S d ( s ) ∑ a ∈ A π θ ( s , a ) ∇ θ log π θ ( s , a ) R s , a = E π θ [ ∇ θ log π θ ( s , a ) r ] \begin{aligned} J(\theta) &=\mathbb{E}_{\pi_{\theta}}[r] \\ &=\sum_{s \in \mathcal{S}} d(s) \sum_{a \in \mathcal{A}} \pi_{\theta}(s, a) \mathcal{R}_{s, a} \\ \nabla_{\theta} J(\theta) &=\sum_{s \in \mathcal{S}} d(s) \sum_{a \in \mathcal{A}} \pi_{\theta}(s, a) \nabla_{\theta} \log \pi_{\theta}(s, a) \mathcal{R}_{s, a} \\ &=\mathbb{E}_{\pi_{\theta}}\left[\nabla_{\theta} \log \pi_{\theta}(s, a) r\right] \end{aligned} J(θ)∇θJ(θ)=Eπθ[r]=s∈S∑d(s)a∈A∑πθ(s,a)Rs,a=s∈S∑d(s)a∈A∑πθ(s,a)∇θlogπθ(s,a)Rs,a=Eπθ[∇θlogπθ(s,a)r]

其中 E π θ E_{\pi \theta} Eπθ是权重为 θ \theta θ计算策略 π \pi π时产生的目标期望,它可以代替式子中的 ∑ s ∈ S d ( s ) ∑ a ∈ A π θ ( s , a ) \sum_{s \in \mathcal{S}} d(s) \sum_{a \in \mathcal{A}} \pi_{\theta}(s, a) ∑s∈Sd(s)∑a∈Aπθ(s,a). (之前一直不明白加期望的式子怎么拆开的)

因此可以看到,如果想要提高目标函数,得到更多奖励,就要朝着由分数(Score Function)乘以奖励的乘积方向前进。

对于n-step MDP,只需要将即时奖励换成价值函数或长期奖励。

∇ θ J ( θ ) = E π θ [ ∇ θ log π θ ( s , a ) Q π θ ( s , a ) ] \nabla_{\theta} J(\theta)=\mathbb{E}_{\pi_{\theta}}\left[\nabla_{\theta} \log \pi_{\theta}(s, a) Q^{\pi_{\theta}}(s, a)\right] ∇θJ(θ)=Eπθ[∇θlogπθ(s,a)Qπθ(s,a)]

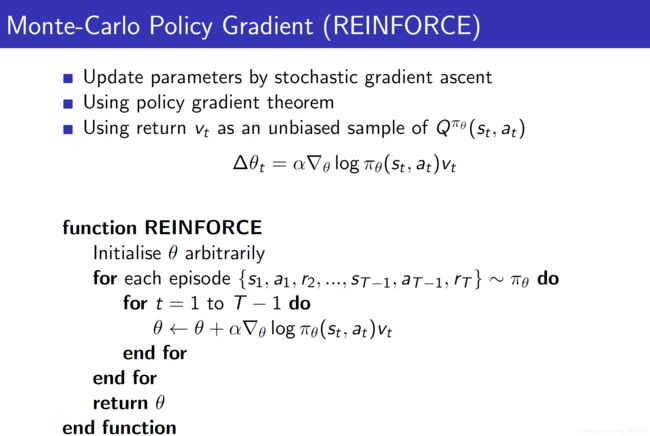

Q4:基于Pytorch的蒙特卡罗策略梯度Reinforce算法

先注意几个点:

- Q π θ ( s t , a t ) Q^{\pi \theta}(s_{t}, a_{t}) Qπθ(st,at)这里用收获 v t v_{t} vt来表示。因为一次训练更新只采样一个蒙卡序列,所以 v t = G t v_{t}=G_{t} vt=Gt.

- 我们使用的目标函数是 J a v V ( θ ) = ∑ s d π θ ( s ) V π θ ( s ) J_{a v V}(\theta)=\sum_{s} d^{\pi_{\theta}}(s) V^{\pi_{\theta}}(s) JavV(θ)=∑sdπθ(s)Vπθ(s),即使用平均价值。理论已经证明(详见策略梯度论文的附录证明)三种目标策略形式得出来的求导都是 E π θ [ ∇ θ log π θ ( s , a ) Q π θ ( s , a ) ] \mathbb{E}_{\pi_{\theta}}\left[\nabla_{\theta} \log \pi_{\theta}(s, a) Q^{\pi_{\theta}}(s, a)\right] Eπθ[∇θlogπθ(s,a)Qπθ(s,a)]. 而 d π θ ( s ) d^{\pi_{\theta}}(s) dπθ(s)状态分布就使用策略分布近似,即 J a v V ( θ ) = ∑ s π θ ( s , a ) V π θ ( s ) J_{a v V}(\theta)=\sum_{s} \pi_{\theta}(s,a) V^{\pi_{\theta}}(s) JavV(θ)=∑sπθ(s,a)Vπθ(s)。 这一段是我分析代码后个人的理解,不知是否正确。

代码仍然是参考了刘老师的https://github.com/ljpzzz/machinelearning/blob/master/reinforcement-learning/policy_gradient.py

我只是改成了Pytorch搭建网络,并添加了CUDA训练。

"""

@ Author: Peter Xiao

@ Date: 2020.7.20

@ Filename: PG.py

@ Brief: 使用 蒙特卡洛策略梯度Reinforce训练CartPole-v0

"""

import gym

import torch

import torch.nn as nn

import torch.nn.functional as F

import numpy as np

import random

import time

from collections import deque

# Hyper Parameters for PG Network

GAMMA = 0.95 # discount factor

LR = 0.01 # learning rate

# Use GPU

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# torch.backends.cudnn.enabled = False # 非确定性算法

class PGNetwork(nn.Module):

def __init__(self, state_dim, action_dim):

super(PGNetwork, self).__init__()

self.fc1 = nn.Linear(state_dim, 20)

self.fc2 = nn.Linear(20, action_dim)

def forward(self, x):

out = F.relu(self.fc1(x))

out = self.fc2(out)

return out

def initialize_weights(self):

for m in self.modules():

nn.init.normal_(m.weight.data, 0, 0.1)

nn.init.constant_(m.bias.data, 0.01)

# m.bias.data.zero_()

class PG(object):

# dqn Agent

def __init__(self, env): # 初始化

# 状态空间和动作空间的维度

self.state_dim = env.observation_space.shape[0]

self.action_dim = env.action_space.n

# init N Monte Carlo transitions in one game

self.ep_obs, self.ep_as, self.ep_rs = [], [], []

# init network parameters

self.network = PGNetwork(state_dim=self.state_dim, action_dim=self.action_dim).to(device)

self.optimizer = torch.optim.Adam(self.network.parameters(), lr=LR)

# init some parameters

self.time_step = 0

def choose_action(self, observation):

observation = torch.FloatTensor(observation).to(device)

network_output = self.network.forward(observation)

with torch.no_grad():

prob_weights = F.softmax(network_output, dim=0).cuda().data.cpu().numpy()

# prob_weights = F.softmax(network_output, dim=0).detach().numpy()

action = np.random.choice(range(prob_weights.shape[0]),

p=prob_weights) # select action w.r.t the actions prob

return action

# 将状态,动作,奖励这一个transition保存到三个列表中

def store_transition(self, s, a, r):

self.ep_obs.append(s)

self.ep_as.append(a)

self.ep_rs.append(r)

def learn(self):

self.time_step += 1

# Step 1: 计算每一步的状态价值

discounted_ep_rs = np.zeros_like(self.ep_rs)

running_add = 0

# 注意这里是从后往前算的,所以式子还不太一样。算出每一步的状态价值

# 前面的价值的计算可以利用后面的价值作为中间结果,简化计算;从前往后也可以

for t in reversed(range(0, len(self.ep_rs))):

running_add = running_add * GAMMA + self.ep_rs[t]

discounted_ep_rs[t] = running_add

discounted_ep_rs -= np.mean(discounted_ep_rs) # 减均值

discounted_ep_rs /= np.std(discounted_ep_rs) # 除以标准差

discounted_ep_rs = torch.FloatTensor(discounted_ep_rs).to(device)

# Step 2: 前向传播

softmax_input = self.network.forward(torch.FloatTensor(self.ep_obs).to(device))

# all_act_prob = F.softmax(softmax_input, dim=0).detach().numpy()

neg_log_prob = F.cross_entropy(input=softmax_input, target=torch.LongTensor(self.ep_as).to(device), reduction='none')

# Step 3: 反向传播

loss = torch.mean(neg_log_prob * discounted_ep_rs)

self.optimizer.zero_grad()

loss.backward()

self.optimizer.step()

# ---------------------------------------------------------

# Hyper Parameters

ENV_NAME = 'CartPole-v0'

EPISODE = 3000 # Episode limitation

STEP = 300 # Step limitation in an episode

TEST = 10 # The number of experiment test every 100 episode

def main():

# initialize OpenAI Gym env and dqn agent

env = gym.make(ENV_NAME)

agent = PG(env)

for episode in range(EPISODE):

# initialize task

state = env.reset()

# Train

# 只采一盘?N个完整序列

for step in range(STEP):

action = agent.choose_action(state) # softmax概率选择action

next_state, reward, done, _ = env.step(action)

agent.store_transition(state, action, reward) # 新函数 存取这个transition

state = next_state

if done:

# print("stick for ",step, " steps")

agent.learn() # 更新策略网络

break

# Test every 100 episodes

if episode % 100 == 0:

total_reward = 0

for i in range(TEST):

state = env.reset()

for j in range(STEP):

env.render()

action = agent.choose_action(state) # direct action for test

state, reward, done, _ = env.step(action)

total_reward += reward

if done:

break

ave_reward = total_reward/TEST

print ('episode: ', episode, 'Evaluation Average Reward:', ave_reward)

if __name__ == '__main__':

time_start = time.time()

main()

time_end = time.time()

print('The total time is ', time_end - time_start)