Kubernetes deployment approach + SSL + etcd + flannel

Three officially supported deployment methods

- minikube

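minikube is a tool that quickly runs a single-node Kubernetes locally. It is only intended for trying out K8s or as a test environment for day-to-day development

Deployment guide: https://kubernetes.io/docs/setup/minkube/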

- kubeadm

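kubeadm is also a tool. It provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster

Deployment guide: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

- Binary packages

Download the release binaries from the official site and deploy each component by hand to assemble a Kubernetes cluster

Download: https://github.com/kubernetes/kubernetes/releases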

https://github.com/kubernetes/kubernetes/releases?after=v1.13.1

Servers

Initial environment setup

1. Stop NetworkManager, flush iptables, disable SELinux, and set the hostname

master01:192.168.49.205

[root@localhost ~]# hostnamectl set-hostname master1

[root@localhost ~]# su

[root@master1 ~]# systemctl stop NetworkManager

[root@master1 ~]# systemctl disable NetworkManager

Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.

[root@master1 ~]# setenforce 0

[root@master1 ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

[root@master1 ~]# iptables -F

node01:192.168.49.129

[root@node01 ~]# systemctl stop NetworkManager

[root@node01 ~]# systemctl disable NetworkManager

Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.

[root@node01 ~]# setenforce 0

[root@node01 ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

[root@node01 ~]# iptables -F

node02:192.168.49.130

[root@localhost ~]# hostnamectl set-hostname node02

[root@localhost ~]# su

[root@node02 ~]# systemctl stop NetworkManager

[root@node02 ~]# systemctl disable NetworkManager

Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.

Removed symlink /etc/systemd/system/network-online.target.wants/NetworkManager-wait-online.service.

[root@node02 ~]# setenforce 0

[root@node02 ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

[root@node02 ~]# iptables -F

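2. Create the CA certificates; communication between the components must be secured with certificates issued by the CA

Create a temporary directory and mount the share that holds the installation packages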

[root@master1 k8s]# mkdir /abc

[root@master1 k8s]# mount -t cifs //192.168.56.1/anzhuangbao/ /abc -o username=anonymous,vers=2.0

Password for anonymous@//192.168.56.1/anzhuangbao/:

[root@master1 k8s]# cp /abc/k8s/etcd* .

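3. etcd-cert.sh creates the CA certificates for etcd

expiry: the certificates are valid for 10 years

usages include key encipherment (the key may be used to encipher other keys, such as session keys)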
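The contents of ca-config.json are not shown in this post. A minimal sketch consistent with the notes above (10-year expiry, key encipherment) and with the -profile=www passed to cfssl later when signing the server certificate might look like this. The profile name comes from that later command; the exact usage list is an assumption.

```bash
#!/bin/bash
# Sketch of ca-config.json for cfssl (assumed contents, not the original file).
# 87600h = 3650 days, roughly 10 years.
# "www" matches the -profile=www used when signing server.pem.
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF
```

Both server auth and client auth are included because the same server.pem is also used for peer traffic (--peer-cert-file), where each etcd member acts as a TLS client towards the others.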

The script writes ca-config.json, ca-csr.json and server-csr.json with cat heredocs.

4. etcd.sh creates the startup script and the configuration file

2380 is the port etcd members use to communicate with each other

2379 is the port etcd exposes to clients

cat etcd.sh

#!/bin/bash

# example: ./etcd.sh etcd01 192.168.1.10 etcd02=https://192.168.1.11:2380,etcd03=https://192.168.1.12:2380

ETCD_NAME=$1

ETCD_IP=$2

ETCD_CLUSTER=$3

WORK_DIR=/opt/etcd

cat <<EOF >$WORK_DIR/cfg/etcd

#[Member]

ETCD_NAME="${ETCD_NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=${WORK_DIR}/cfg/etcd

ExecStart=${WORK_DIR}/bin/etcd \

--name=\${ETCD_NAME} \

--data-dir=\${ETCD_DATA_DIR} \

--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=\${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=${WORK_DIR}/ssl/server.pem \

--key-file=${WORK_DIR}/ssl/server-key.pem \

--peer-cert-file=${WORK_DIR}/ssl/server.pem \

--peer-key-file=${WORK_DIR}/ssl/server-key.pem \

--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \

--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd

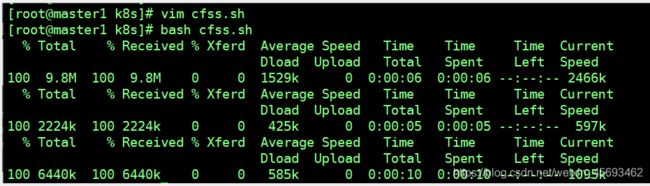

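5. Download the official cfssl tools

cfssl: the certificate generation tool

cfssljson: takes the JSON output of cfssl and writes it out as certificate and key files

cfssl-certinfo: displays certificate information

-o: (curl option) save the download to the given path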

vim cfss.sh

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl*

6. Create a temporary directory for the etcd certificates

[root@master1 k8s]# mkdir etcd-cert

[root@master1 k8s]# mv etcd-cert.sh etcd-cert

7. Define the CA configuration

[root@master1 k8s]# cd etcd-cert/

[root@master1 etcd-cert]# ls

etcd-cert.sh

[root@master1 etcd-cert]# cat > ca-config.json <<EOF

...

EOF

8. Create the CA certificate signing request
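The CSR for the CA is not shown either. A sketch in the format cfssl gencert -initca expects is below; the CN and the "names" values are placeholders (assumptions), to be replaced with your own organization details.

```bash
#!/bin/bash
# Sketch of ca-csr.json; CN and "names" are placeholder values.
cat > ca-csr.json <<EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF
```

Step 9 then turns this CSR into ca.pem and ca-key.pem with cfssl gencert -initca ca-csr.json | cfssljson -bare ca -.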

[root@master1 etcd-cert]# cat > ca-csr.json <<EOF

...

EOF

9. Generate the certificates; this produces ca-key.pem and ca.pem

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

[root@master1 etcd-cert]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem etcd-cert.sh

10. Define the certificate used to authenticate communication between the three etcd nodes
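A sketch of server-csr.json for this cluster is shown below. The "hosts" list uses the three node IPs from this walkthrough. CN and "names" are placeholders (assumptions).

```bash
#!/bin/bash
# Sketch of server-csr.json: "hosts" lists every address the certificate is valid for,
# here the three etcd members (master1, node01, node02). CN and "names" are placeholders.
cat > server-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "192.168.49.205",
    "192.168.49.129",
    "192.168.49.130"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF
```

Every IP that clients or peers use to reach etcd over TLS has to appear in "hosts". Otherwise, certificate verification fails for connections to that address.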

cat > server-csr.json <<EOF

...

EOF

11. Generate the etcd server certificate and key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

12. Deploy the etcd database cluster

https://github.com/etcd-io/etcd/releases

I downloaded it in advance and just copy it over locally

[root@master1 etcd-cert]# cp /abc/k8s/etcd-v3.3.10-linux-amd64.tar.gz /root/k8s/

[root@master1 etcd-cert]# cd ..

[root@master1 k8s]# pwd

/root/k8s

[root@master1 k8s]# ls

etcd-cert etcd.sh etcd-v3.3.10-linux-amd64.tar.gz

[root@master1 k8s]# tar xf etcd-v3.3.10-linux-amd64.tar.gz

[root@master1 k8s]# ls

etcd-cert etcd.sh etcd-v3.3.10-linux-amd64 etcd-v3.3.10-linux-amd64.tar.gz

[root@master1 k8s]# cd etcd-v3.3.10-linux-amd64/

[root@master1 etcd-v3.3.10-linux-amd64]# ls

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

13. Create the etcd working directory, with subdirectories cfg for configuration files, bin for binaries and ssl for certificates

[root@master1 etcd-v3.3.10-linux-amd64]# mkdir /k8s/etcd/{cfg,bin,ssl} -p

[root@master1 etcd-v3.3.10-linux-amd64]# cd /k8s

[root@master1 k8s]# tree .

.

└── etcd

├── bin

├── cfg

└── ssl

4 directories, 0 files

14. Copy the certificate files and binaries into place

[root@master1 k8s]# mv /root/k8s/etcd-v3.3.10-linux-amd64/etcd* /k8s/etcd/bin/

[root@master1 k8s]# cp /root/k8s/etcd-cert/*.pem /k8s/etcd/ssl/

[root@master1 k8s]# tree .

15. Edit the etcd configuration file and startup script

[root@master1 ~]# cd /k8s/

[root@master1 k8s]# tree .

vim etcd.sh

#!/bin/bash

# example: ./etcd.sh etcd01 192.168.49.205 etcd02=https://192.168.49.129:2380,etcd03=https://192.168.49.130:2380

ETCD_NAME=$1

ETCD_IP=$2

ETCD_CLUSTER=$3

WORK_DIR=/k8s/etcd

cat <<EOF >$WORK_DIR/cfg/etcd

#[Member]

ETCD_NAME="${ETCD_NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=${WORK_DIR}/cfg/etcd

ExecStart=${WORK_DIR}/bin/etcd \

--name=\${ETCD_NAME} \

--data-dir=\${ETCD_DATA_DIR} \

--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=\${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=${WORK_DIR}/ssl/server.pem \

--key-file=${WORK_DIR}/ssl/server-key.pem \

--peer-cert-file=${WORK_DIR}/ssl/server.pem \

--peer-key-file=${WORK_DIR}/ssl/server-key.pem \

--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \

--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd

chmod +x etcd.sh

sh etcd.sh

This run reports an error. Ignore it and carry on, because the other two nodes have not been set up yet

cd /k8s

tree .

[root@master1 k8s]# ll /usr/lib/systemd/system/ | grep etcd

-rw-r--r--. 1 root root 923 Apr 30 10:07 etcd.service

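16. The first run generates the configuration file and installs the startup script under systemd

Ports: 2379 serves external clients and 2380 carries internal cluster traffic. The 65536 in the unit file is LimitNOFILE, the maximum number of open file descriptors, not a number of ports

The service now waits while it looks for the other etcd members. If it cannot find them, it exits after about 5 minutes by default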

bash etcd.sh etcd01 192.168.49.205 etcd02=https://192.168.49.129:2380,etcd03=https://192.168.49.130:2380

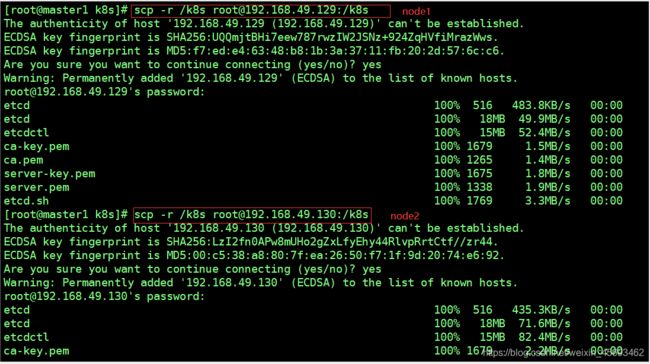

Copy the certificates to the other two nodes

[root@master1 ~]# cd /root/k8s/

[root@master1 k8s]# ls

cfss.sh etcd- etcd-cert etcd.sh etcd-v3.3.10-linux-amd64 etcd-v3.3.10-linux-amd64.tar.gz

[root@master1 k8s]# pwd

/root/k8s

[root@master1 k8s]# ./etcd.sh etcd01 192.168.49.205 etcd02=https://192.168.49.129:2380,etcd03=https://192.168.49.130:2380

17. Copy the certificates to the other two nodes

18. Copy the startup script to the other two nodes

scp /usr/lib/systemd/system/etcd.service root@192.168.49.129:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service root@192.168.49.130:/usr/lib/systemd/system/

19. Now that the files are copied over, some parameters in the /k8s/etcd/cfg/etcd configuration file must be changed on each node
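The values that differ per node are the member name and the node's own IP in the four URL settings. ETCD_INITIAL_CLUSTER must still list all three members. As a sketch, the hypothetical helper gen_etcd_cfg below prints the file for one member. It uses the name-to-IP mapping from the etcd.sh invocation on master1 (etcd02 = node01, etcd03 = node02).

```bash
#!/bin/bash
# Hypothetical helper: print the /k8s/etcd/cfg/etcd contents for one member.
# Member names and IPs follow the etcd.sh invocation used on master1.
INITIAL_CLUSTER="etcd01=https://192.168.49.205:2380,etcd02=https://192.168.49.129:2380,etcd03=https://192.168.49.130:2380"

gen_etcd_cfg() {
  local name=$1 ip=$2
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${INITIAL_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Written to the current directory for review first;
# on node01 it would then be copied to /k8s/etcd/cfg/etcd.
gen_etcd_cfg etcd02 192.168.49.129 > etcd02.cfg
```

On node02 the equivalent call would be gen_etcd_cfg etcd03 192.168.49.130.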

20. Start the service on the node

[root@node01 k8s]# vim /k8s/etcd/cfg/etcd

[root@node01 k8s]# systemctl start etcd

[root@node01 k8s]# systemctl status etcd

21. Now re-run the script on the master node, master1

./etcd.sh etcd01 192.168.49.205 etcd02=https://192.168.49.129:2380,etcd03=https://192.168.49.130:2380

Check the cluster health

/k8s/etcd/bin/etcdctl \

--ca-file=/k8s/etcd/ssl/ca.pem \

--cert-file=/k8s/etcd/ssl/server.pem --key-file=/k8s/etcd/ssl/server-key.pem \

--endpoints="https://192.168.49.205:2379,https://192.168.49.129:2379,https://192.168.49.130:2379" \

cluster-health
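The same TLS flags and endpoint list are repeated in every etcdctl call in this walkthrough. A small wrapper can define them once. This is a hypothetical convenience function, not part of etcd. The flags are the v2-API ones used here, because etcdctl in etcd v3.3.x defaults to ETCDCTL_API=2.

```bash
#!/bin/bash
# Hypothetical wrapper: TLS flags and endpoints written once, extra args passed through.
NODES=(192.168.49.205 192.168.49.129 192.168.49.130)
ENDPOINTS=$(printf 'https://%s:2379,' "${NODES[@]}")
ENDPOINTS=${ENDPOINTS%,}   # drop the trailing comma

etcdctl_tls() {
  /k8s/etcd/bin/etcdctl \
    --ca-file=/k8s/etcd/ssl/ca.pem \
    --cert-file=/k8s/etcd/ssl/server.pem \
    --key-file=/k8s/etcd/ssl/server-key.pem \
    --endpoints="${ENDPOINTS}" \
    "$@"
}
```

After sourcing this, the health check becomes etcdctl_tls cluster-health, and reading the flannel key becomes etcdctl_tls get /coreos.com/network/config.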

Install docker on the nodes

22. Only node01 is shown here; repeat the same steps on node02

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

setenforce 0

23. Install docker-ce

[root@node01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@node01 ~]# cd /etc/yum.repos.d/ && yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@node01 yum.repos.d]# ls

CentOS-Base.repo CentOS-Debuginfo.repo CentOS-Media.repo CentOS-Vault.repo

CentOS-CR.repo CentOS-fasttrack.repo CentOS-Sources.repo docker-ce.repo

[root@node01 yum.repos.d]# yum install -y docker-ce

[root@node01 yum.repos.d]# systemctl start docker

[root@node01 yum.repos.d]# systemctl enable docker

[root@node01 yum.repos.d]# tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://fk2yrsh1.mirror.aliyuncs.com"]

}

EOF

[root@node01 yum.repos.d]# echo "net.ipv4.ip_forward=1" >> /etc/sysctl.conf

[root@node01 yum.repos.d]# sysctl -p

net.ipv4.ip_forward = 1

[root@node01 yum.repos.d]# systemctl restart network

[root@node01 yum.repos.d]# systemctl restart docker

/k8s/etcd/bin/etcdctl \

--ca-file=/k8s/etcd/ssl/ca.pem \

--cert-file=/k8s/etcd/ssl/server.pem --key-file=/k8s/etcd/ssl/server-key.pem \

--endpoints="https://192.168.49.205:2379,https://192.168.49.129:2379,https://192.168.49.130:2379" \

set /coreos.com/network/config '{ "network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

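Install flannel

flannel is a network plugin; another common one is Calico, which supports BGP

overlay network: a virtual network layered on top of the underlying network; hosts in it are connected through virtual links (tunnels)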

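vxlan: wraps the original layer-2 frame in a UDP packet whose outer IP/MAC headers belong to the underlying network and sends it across that network. At the destination, the tunnel endpoint decapsulates it and delivers the inner frame to its target address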
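The encapsulation described above costs space in every packet. The worked example below adds up the VXLAN overhead on an IPv4 underlay, assuming the common 1500-byte link MTU. The resulting 1450 is the MTU that flannel's vxlan backend typically gives flannel.1 on such a network.

```bash
#!/bin/bash
# Worked example: VXLAN overhead relative to the underlay MTU (max IP packet size).
# The outer Ethernet header is not counted, since the MTU limits the IP packet only.
OUTER_IP=20    # outer IPv4 header (no options)
OUTER_UDP=8    # outer UDP header
VXLAN_HDR=8    # VXLAN header
INNER_ETH=14   # original Ethernet header, now carried as payload
OVERHEAD=$((OUTER_IP + OUTER_UDP + VXLAN_HDR + INNER_ETH))
LINK_MTU=${LINK_MTU:-1500}   # assumed underlay MTU
echo "overhead=${OVERHEAD} bytes, inner MTU=$((LINK_MTU - OVERHEAD))"
```

Running it prints overhead=50 bytes, inner MTU=1450. Containers must stay within the smaller MTU, or their packets will be fragmented or dropped once encapsulated.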

24. Write the subnet range to be allocated into etcd for flannel to use

[root@master1 k8s]# /k8s/etcd/bin/etcdctl --ca-file=/k8s/etcd/ssl/ca.pem --cert-file=/k8s/etcd/ssl/server.pem --key-file=/k8s/etcd/ssl/server-key.pem --endpoints="https://192.168.49.205:2379,https://192.168.49.129:2379,https://192.168.49.130:2379" set /coreos.com/network/config '{ "network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

{ "network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}
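The config above sets only the overall network. Per flannel's configuration docs, SubnetLen defaults to 24 when Network is larger than a /22, so each host leases a /24 out of 172.17.0.0/16. The counting below rests on that default. It computes capacity only and does not reflect which subnets flannel reserves.

```bash
#!/bin/bash
# Address arithmetic for "network": "172.17.0.0/16" with per-host /24 leases.
NETWORK_PREFIX=16   # from the flannel config written to etcd
SUBNET_LEN=24       # flannel's default per-host subnet length (not set explicitly above)
HOST_SUBNETS=$((1 << (SUBNET_LEN - NETWORK_PREFIX)))   # how many /24s fit in the /16
ADDRS_PER_SUBNET=$((1 << (32 - SUBNET_LEN)))          # addresses in each /24
echo "host subnets: ${HOST_SUBNETS}, addresses per subnet: ${ADDRS_PER_SUBNET}"
```

Because each node gets its own /24, containers on node01 and node02 end up in different subnets, and flannel routes between those subnets over the vxlan tunnel.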

25. Read back the written value; the other nodes can read it too

/k8s/etcd/bin/etcdctl \

--ca-file=/k8s/etcd/ssl/ca.pem \

--cert-file=/k8s/etcd/ssl/server.pem --key-file=/k8s/etcd/ssl/server-key.pem \

--endpoints="https://192.168.49.205:2379,https://192.168.49.129:2379,https://192.168.49.130:2379" \

get /coreos.com/network/config

26. Copy in the binary package; flannel is installed on the node machines

Every node that runs workloads needs flannel

[root@master1 /]# cp /abc/k8s/flannel-v0.10.0-linux-amd64.tar.gz /root/k8s/

[root@master1 /]# cd /root/k8s/

[root@master1 k8s]# ls

cfss.sh etcd-cert etcd-v3.3.10-linux-amd64 flannel-v0.10.0-linux-amd64.tar.gz

etcd- etcd.sh etcd-v3.3.10-linux-amd64.tar.gz

[root@master1 k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.49.129:/opt/

root@192.168.49.129's password:

flannel-v0.10.0-linux-amd64.tar.gz 100% 9479KB 39.7MB/s 00:00

[root@master1 k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.49.130:/opt/

root@192.168.49.130's password:

flannel-v0.10.0-linux-amd64.tar.gz

27. Deploy and configure flannel: write the flannel startup script and register it with systemd

Using node01 as the example

[root@node01 yum.repos.d]# cd /opt

[root@node01 opt]# tar xf flannel-v0.10.0-linux-amd64.tar.gz

[root@node01 opt]# ls

containerd flanneld flannel-v0.10.0-linux-amd64.tar.gz mk-docker-opts.sh README.md rh

Create the flannel working directories

[root@node01 opt]# mkdir /k8s/flannel/{cfg,bin,ssl} -p

[root@node01 opt]# mv mk-docker-opts.sh /k8s/flannel/bin/

[root@node01 opt]# mv flanneld /k8s/flannel/bin/

Do this on every node

vim flannel.sh

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/k8s/flannel/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/k8s/etcd/ssl/ca.pem \

-etcd-certfile=/k8s/etcd/ssl/server.pem \

-etcd-keyfile=/k8s/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/k8s/flannel/cfg/flanneld

ExecStart=/k8s/flannel/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/k8s/flannel/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

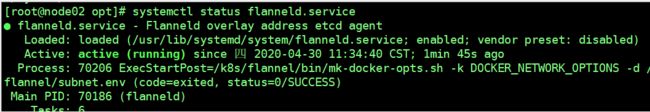

28. Enable flannel networking, pointing it at the etcd IP:port endpoints

bash flannel.sh https://192.168.49.205:2379,https://192.168.49.129:2379,https://192.168.49.130:2379

29. Configure docker to use the subnet generated by flannel

Using node01 as the example; the other nodes need the same change

This makes docker use flannel's subnet

vim /usr/lib/systemd/system/docker.service

Insert below line 13:

EnvironmentFile=/run/flannel/subnet.env

Change line 15 to:

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock
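DOCKER_NETWORK_OPTIONS comes from /run/flannel/subnet.env, which the mk-docker-opts.sh call in flanneld.service writes from the subnet flanneld leased. The function below is not that script. It is a simplified, hypothetical re-creation of the idea. It assumes flanneld runs with --ip-masq (as in the unit above), so Docker's own masquerading is switched off. The FLANNEL_SUBNET value is an example only.

```bash
#!/bin/bash
# Simplified, hypothetical re-creation of the options mk-docker-opts.sh derives (not the real script).
FLANNEL_SUBNET=${FLANNEL_SUBNET:-172.17.42.1/24}   # example lease for one node
FLANNEL_MTU=${FLANNEL_MTU:-1450}                   # vxlan MTU on a 1500-byte link
FLANNEL_IPMASQ=${FLANNEL_IPMASQ:-true}             # flanneld.service passes --ip-masq

docker_network_options() {
  local ipmasq=true
  # flanneld already masquerades outbound traffic, so Docker should not do it again.
  if [ "$FLANNEL_IPMASQ" = true ]; then
    ipmasq=false
  fi
  echo "--bip=${FLANNEL_SUBNET} --ip-masq=${ipmasq} --mtu=${FLANNEL_MTU}"
}

echo "DOCKER_NETWORK_OPTIONS=$(docker_network_options)"
```

--bip gives docker0 an address inside the node's flannel subnet. As a result, containers on each node draw addresses from a different range, which flannel can then route between nodes.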

30. Reload systemd and restart docker so the flannel settings take effect

[root@node01 flannel]# systemctl daemon-reload

[root@node01 flannel]# systemctl restart docker

31. Check the flannel IP address assigned to node01
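Once you have read the address off the node (for example with ip addr show flannel.1), it should lie inside the 172.17.0.0/16 network written to etcd. The helpers ip_to_int and in_cidr below are hypothetical. They are a sketch of the usual mask-and-compare CIDR membership check in pure bash.

```bash
#!/bin/bash
# Hypothetical helpers: convert dotted IPv4 to an integer and test CIDR membership.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP NETWORK/PREFIX -> exit status 0 if IP is inside the block
in_cidr() {
  local ip=$1 net=${2%/*} prefix=${2#*/}
  # Keep the top $prefix bits of a 32-bit value.
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example, in_cidr 172.17.42.0 172.17.0.0/16 && echo inside prints inside, while the same check on an address outside the network returns a non-zero status.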

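32. Containers on different nodes can now communicate with each other

To test, create a container on each of the two nodes and ping between them

node01 pings node02

node02 pings node01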