正文之前

因为不断的扩充了辅助类,所以改进了下脚本,没有原先那么巨细,但是感觉好了不少。我在想要不要下次直接开一个文件来记录上次更新的文件以及时间。然后再写一个Java或者Python的程序来自动输出要上传的文件名字?感觉是个大工程。现在还可以,以后再说吧!

正文

echo "OK!NOW I WILL UPLOAD YOUR CHANGE TO GITHUB!"

time=$(date "+%Y-%m-%d %H:%M")

echo "${time}"

cd /Users/zhangzhaobo/Documents/Graduation-Design/

sudo cp -a /Users/zhangzhaobo/IdeaProjects/Graduation_Design/src/ReadData.* /Users/zhangzhaobo/Documents/Graduation-Design/

sudo cp -a /Users/zhangzhaobo/IdeaProjects/Graduation_Design/src/ZZB_JCS.* /Users/zhangzhaobo/Documents/Graduation-Design/

sudo cp -a /Users/zhangzhaobo/IdeaProjects/Graduation_Design/src/data.txt /Users/zhangzhaobo/Documents/Graduation-Design/data.txt

sudo cp -a /Users/zhangzhaobo/IdeaProjects/Graduation_Design/src/Mysql* /Users/zhangzhaobo/Documents/Graduation-Design/

sudo cp -a /Users/zhangzhaobo/IdeaProjects/Graduation_Design/mysql* /Users/zhangzhaobo/Documents/Graduation-Design/

sudo javac /Users/zhangzhaobo/Documents/Graduation-Design/ReadData.java

sudo javac /Users/zhangzhaobo/Documents/Graduation-Design/ZZB_JCS.java

git add ReadData.* ZZB_JCS.* data.txt Mysql*

git commit -m "$time $1"

git push origin master

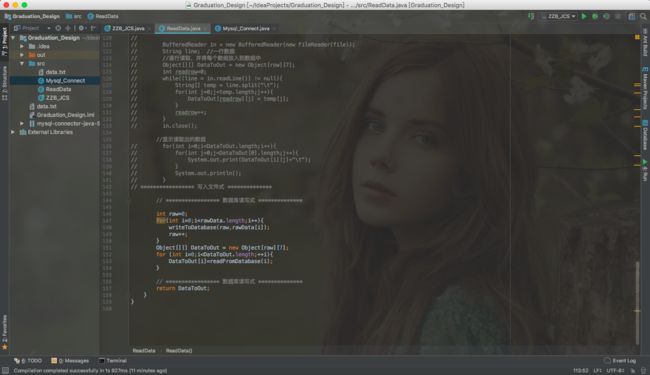

别的就不说了,先把数据读写的程序丢出来。这个数据读写其实我是想要到时候如果有人给我一个文本,那就GG,所以还要看看读文本存进数据库咋搞!另外!有没有人能告诉我!去哪儿找机械设备的运行数据啊!!!我很苦恼啊啊!

数据库连接程序

//*************************** 数据库连接程序 ***************************

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.SQLException;

import java.sql.Statement;

public class Mysql_Connect {

//此处查看网络才知道。要求SSL,所以就酱紫咯:https://zhidao.baidu.com/question/2056521203295428667.html

private static String url = "jdbc:mysql://127.0.0.1:3306/Graduation_Design?useUnicode=true&characterEncoding=GBK&useSSL=true";

private static String user = "root";

private static String password = "zzb1184827350";

private static String Database="192.168.2.127:3306/Graduation_Design";

private Statement statement;

private Connection conn;

public void setUser(String user){

this.user=user;

}

public void setPassword(String p){

this.password=p;

}

public void setDatabase(String database){

this.Database=database;

this.url="jdbc:mysql:/"+Database+"?useUnicode=true&characterEncoding=GBK&useSSL=true";

}

public void setDatabase(){

this.url="jdbc:mysql:/192.168.2.127:3306/Shop_User?useUnicode=true&characterEncoding=GBK&useSSL=true";

}

public Statement getStatement() {

return this.statement;

}

public void Connect() {

try {

String driver = "com.mysql.jdbc.Driver";

Class.forName(driver);

conn = DriverManager.getConnection(url, user, password);

if (!conn.isClosed()){

}

else {

System.out.println("\n\nFailed to connect to the Database!");

}

this.statement = conn.createStatement();

} catch (ClassNotFoundException e) {

System.out.println("Sorry,can`t find the Driver!");

e.printStackTrace();

} catch (Exception e) {

e.printStackTrace();

}

}

public void Dis_Connect() throws SQLException {

try {

conn.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

数据库读写程序!

//*************************** 数据库读写程序 ***************************

/* *********************

* Author : HustWolf --- 张照博

* Time : 2018.3-2018.5

* Address : HUST

* Version : 3.0

********************* */

/* *******************

* 这是从数据库或者是文本文件读取数据的时候用的

* 其实我觉得如果可以每一次读一条数据,然后处理一条会比较好

* 但是算了,数据量不大的话,这个样子也不会增加太多时间的!

******************* */

import java.io.*;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

public class ReadData {

protected Mysql_Connect mysql=new Mysql_Connect();

public void writeToDatabase(int id,Object[] data_array) {

try {

mysql.Connect();

Statement statement=mysql.getStatement();

String INSERT = "INSERT INTO watermelon(id,色泽,根蒂,敲声,纹理,脐部,触感,category) VALUES( " + id + " , ' " + data_array[0] + "' , ' " + data_array[1] + "' , ' " + data_array[2] + "' , ' " + data_array[3] + "' , ' " + data_array[4] + "' , ' " + data_array[5] + " ', ' " + data_array[6] + "' )";

boolean insert_ok = statement.execute(INSERT);

if (insert_ok) {

System.out.println("Insert Success!");

}

statement.close();

mysql.Dis_Connect();

} catch (SQLException e) {

e.printStackTrace();

} catch (Exception e) {

e.printStackTrace();

}

}

public Object[] readFromDatabase(int id) {

Object[] DataToOut = new Object[7];

try {

mysql.Connect();

Statement statement=mysql.getStatement();

String GETDATA = "SELECT 色泽,根蒂,敲声,纹理,脐部,触感,category FROM watermelon WHERE id="+id;

ResultSet select_ok = statement.executeQuery(GETDATA);

if(select_ok.next()) {

DataToOut[0]=select_ok.getObject("色泽");

DataToOut[1]=select_ok.getObject("根蒂");

DataToOut[2]=select_ok.getObject("敲声");

DataToOut[3]=select_ok.getObject("纹理");

DataToOut[4]=select_ok.getObject("脐部");

DataToOut[5]=select_ok.getObject("触感");

DataToOut[6]=select_ok.getObject("category");

}

statement.close();

mysql.Dis_Connect();

} catch (SQLException e) {

e.printStackTrace();

} catch (Exception e) {

e.printStackTrace();

}

return DataToOut;

}

public Object[][] ReadData() throws IOException {

Object[][] rawData = new Object [][]{

{"青绿","蜷缩","浊响","清晰","凹陷","硬滑","是"},

{"乌黑","蜷缩","沉闷","清晰","凹陷","硬滑","是"},

{"乌黑","蜷缩","浊响","清晰","凹陷","硬滑","是"},

{"青绿","蜷缩","沉闷","清晰","凹陷","硬滑","是"},

{"浅白","蜷缩","浊响","清晰","凹陷","硬滑","是"},

{"青绿","稍蜷","浊响","清晰","稍凹","软粘","是"},

{"乌黑","稍蜷","浊响","稍糊","稍凹","软粘","是"},

{"乌黑","稍蜷","浊响","清晰","稍凹","硬滑","是"},

{"乌黑","稍蜷","沉闷","稍糊","稍凹","硬滑","否"},

{"青绿","硬挺","清脆","清晰","平坦","软粘","否"},

{"浅白","硬挺","清脆","模糊","平坦","硬滑","否"},

{"浅白","蜷缩","浊响","模糊","平坦","软粘","否"},

{"青绿","稍蜷","浊响","稍糊","凹陷","硬滑","否"},

{"浅白","稍蜷","沉闷","稍糊","凹陷","硬滑","否"},

{"乌黑","稍蜷","浊响","清晰","稍凹","软粘","否"},

{"浅白","蜷缩","浊响","模糊","平坦","硬滑","否"},

{"青绿","蜷缩","沉闷","稍糊","稍凹","硬滑","否"},

// { "<30 ", "High ", "No ", "Fair ", "0" },

// { "<30 ", "High ", "No ", "Excellent", "0" },

// { "30-40", "High ", "No ", "Fair ", "1" },

// { ">40 ", "Medium", "No ", "Fair ", "1" },

// { ">40 ", "Low ", "Yes", "Fair ", "1" },

// { ">40 ", "Low ", "Yes", "Excellent", "0" },

// { "30-40", "Low ", "Yes", "Excellent", "1" },

// { "<30 ", "Medium", "No ", "Fair ", "0" },

// { "<30 ", "Low ", "Yes", "Fair ", "1" },

// { ">40 ", "Medium", "Yes", "Fair ", "1" },

// { "<30 ", "Medium", "Yes", "Excellent", "1" },

// { "30-40", "Medium", "No ", "Excellent", "1" },

// { "30-40", "High ", "Yes", "Fair ", "1" },

// { "<30 " , "Medium", "No ", "Excellent", "1" },

// { ">40 ", "Medium", "No ", "Excellent", "0" }

};

// ***************** 写入文件式 **************

// File file = new File("data.txt"); //存放数组数据的文件

//

// FileWriter DataToTXT = new FileWriter(file); //文件写入流

// int row=0;

// //将数组中的数据写入到文件中。每行各数据之间TAB间隔

// for(int i=0;i最大头!决策树生成程序!

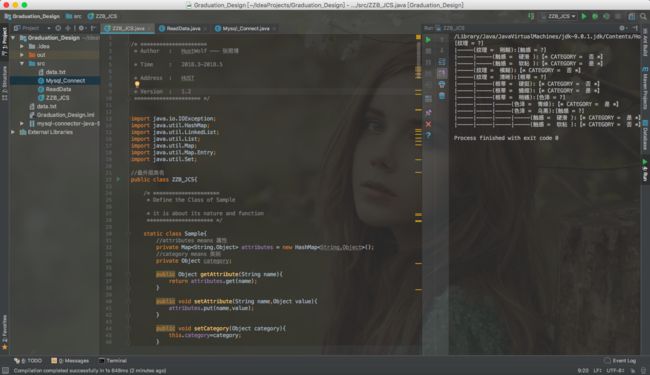

主要是照搬的网上的啦!后面再自己慢慢适合的吧!大神写的很棒啊啊!

/* *********************

* Author : HustWolf --- 张照博

* Time : 2018.3-2018.5

* Address : HUST

* Version : 1.0

********************* */

import java.io.IOException;

import java.util.HashMap;

import java.util.LinkedList;

import java.util.List;

import java.util.Map;

import java.util.Map.Entry;

import java.util.Set;

//最外层类名

public class ZZB_JCS{

/* *********************

* Define the Class of Sample

* it is about its nature and function

********************* */

static class Sample{

//attributes means 属性

private Map attributes = new HashMap();

//category means 类别

private Object category;

public Object getAttribute(String name){

return attributes.get(name);

}

public void setAttribute(String name,Object value){

attributes.put(name,value);

}

public void setCategory(Object category){

this.category=category;

}

public String toString(){

return attributes.toString();

}

}

/* *********************

* this is the function to read the sample

* just like decoding the data

********************* */

// 此处需要改造为读取外部数据!并且能够进行分解,改造为可读取的形式

static Map> readSample(String[] attribute_Names) throws IOException {

//样本属性及其分类,暂时先在代码里面写了。后面需要数据库或者是文件读取

ReadData data = new ReadData();

Object[][] rawData = data.ReadData();

//最终组合出一个包含所有的样本的Map

Map> sample_set = new HashMap>();

//读取每一排的数据

//分解后读取样本属性及其分类,然后利用这些数据构造一个Sample对象

//然后按照样本最后的0,1进行二分类划分样本集,

for (Object[] row:rawData) {

//新建一个Sample对象,没处理一次加入Map中,最后一起返回

Sample sample = new Sample();

int i=0;

//每次处理一排数据,构成一个样本中各项属性的值

for (int n=row.length-1; i samples = sample_set.get(row[i]);

//现在整体样本集中查询,有的话就返回value,而如果这个类别还没有样本,那么就添加一下

if(samples == null){

samples = new LinkedList();

sample_set.put(row[i],samples);

}

//不管是当前分类的样本集中是否为空,都要加上把现在分离出来的样本丢进去。

//此处基本只有前几次分类没有完毕的时候才会进入if,后面各个分类都有了样本就不会为空了。

samples.add(sample);

}

//最后返回的是一个每一个类别一个链表的Map,串着该类别的所有样本 (类别 --> 此类样本)

return sample_set;

}

/* *********************

* this is the class of the decision-tree

* 决策树(非叶结点),决策树中的每个非叶结点都引导了一棵决策树

* 每个非叶结点包含一个分支属性和多个分支,分支属性的每个值对应一个分支,该分支引导了一棵子决策树

********************* */

static class Tree{

private String attribute;

private Map children = new HashMap();

public Tree(String attribute){

this.attribute=attribute;

}

public String getAttribute(){

return attribute;

}

public Object getChild(Object attrValue){

return children.get(attrValue);

}

public void setChild(Object attrValue,Object child){

children.put(attrValue,child);

}

public Set 另外要注意,这个因为用到了数据库,所以需要加载依赖文件包!具体看这儿:

解决方案! https://blog.csdn.net/sakura_yuan/article/details/51730493

最后的结果简直是美到爆炸!好么??爆炸!!

/Library/Java/JavaVirtualMachines/jdk-9.0.1.jdk/Contents/Home/bin/java "-javaagent:/Applications/IntelliJ IDEA.app/Contents/lib/idea_rt.jar=57631:/Applications/IntelliJ IDEA.app/Contents/bin" -Dfile.encoding=UTF-8 -classpath /Users/zhangzhaobo/IdeaProjects/Graduation_Design/out/production/Graduation_Design:/Users/zhangzhaobo/IdeaProjects/Graduation_Design/mysql-connector-java-5.1.44-bin.jar ZZB_JCS

[纹理 = ?]

|-----(纹理 = 稍糊):[触感 = ?]

|-----|-----(触感 = 硬滑 ):【* CATEGORY = 否 *】

|-----|-----(触感 = 软粘 ):【* CATEGORY = 是 *】

|-----(纹理 = 模糊):【* CATEGORY = 否 *】

|-----(纹理 = 清晰):[根蒂 = ?]

|-----|-----(根蒂 = 硬挺):【* CATEGORY = 否 *】

|-----|-----(根蒂 = 蜷缩):【* CATEGORY = 是 *】

|-----|-----(根蒂 = 稍蜷):[色泽 = ?]

|-----|-----|-----(色泽 = 青绿):【* CATEGORY = 是 *】

|-----|-----|-----(色泽 = 乌黑):[触感 = ?]

|-----|-----|-----|-----(触感 = 硬滑 ):【* CATEGORY = 是 *】

|-----|-----|-----|-----(触感 = 软粘 ):【* CATEGORY = 否 *】

Process finished with exit code 0

正文之后

今天早起(八点半?)吃到了西边百景园的早饭,油条和汤包,虽然贵了点,但是好吃啊!希望以后天天有早饭吃!阿西吧!整完了,吃午饭去!