GDAL & Python | Chapter 3. Reading and writing vector data

Chapter 3. Reading and writing vector data

This chapter covers

- Understanding vector data

- Introducing OGR

- Reading vector data

- Creating new vector datasets

- Updating existing datasets

They seem to be rare these days, but you’ve probably seen a paper roadmap designed to be folded up and kept in your car. Unlike the more recent web maps that we’re used to using, these maps don’t use aerial imagery. Instead, features on the maps are all drawn as geometric objects—namely, points, lines, and polygons. These types of data, where the geographic features are all distinct objects, are called vector datasets.

Unless you only plan to look at maps that someone else has made, you’ll need to know how to read and write these types of data. If you want to work with existing data in any way, whether you’re summarizing, editing, deriving new data, or performing sophisticated spatial analyses, you need to read it in from a file first. You also need to write any new or modified data back out to a disk. For example, if you had a nationwide city dataset but needed to analyze only data from cities with 100,000 people or more, you could extract those cities out of your original dataset and run your analysis on them while ignoring the smaller towns. Optionally, you could also save the smaller dataset to a new file for later use.

In this chapter you’ll learn basic ideas behind vector data and how to use the OGR library to read, write, and edit these types of datasets.

3.1. Introduction to vector data

At its most basic, vector data are data in which geographic features are represented as discrete geometries—specifically, points, lines, and polygons. Geographic features that have distinct boundaries, such as cities, work well as vector data, but continuous data, such as elevation, don’t. It would be difficult to draw a single polygon around all areas with the same elevation, at least if you were in a mountainous area. You could, however, use polygons to differentiate between different elevation ranges. For example, polygons showing subalpine zones for a region would be a good proxy for an elevation range, but you’d lose much of the detailed elevation data within those polygons. Many types of data are excellent candidates for a vector representation, though, such as features in the roadmap mentioned earlier. Roads are represented as lines, counties and states are polygons, and depending on the scale of the map, cities are drawn as either points or polygons. In fact, all of the features on the map are probably represented as points, lines, or polygons.

The type of geometry used to draw a feature can be dependent on scale, however. Figure 3.1 shows an example of this. On the map of New York State, cities are shown as points, major roads as lines, and counties as polygons. A map of a smaller area, such as New York City, will symbolize features differently. In this case, roads are still lines, but the city and its boroughs are polygons instead of points. Now points would be used to represent features such as libraries or police stations.

Figure 3.1. An example of how scale changes the geometries used to draw certain features. New York City is a point on the state map, but is made of several polygons on the city map.

You can imagine many other examples of geographic data that lend themselves to being represented this way. Anything that can be described with a single set of coordinates, such as latitude and longitude, can be represented as a point. This includes cities, restaurants, mountain peaks, weather stations, and geocache locations. In addition to their x and y coordinates (such as latitude and longitude), points can have a third z coordinate that represents elevation.

Geographic areas with closed boundaries can be represented as polygons. Examples are states, lakes, congressional districts, zip codes, and land ownership, along with many of the same features that can be symbolized as points such as cities and parks. Other features that could be represented as polygons, but probably not as points, include countries, continents, and oceans.

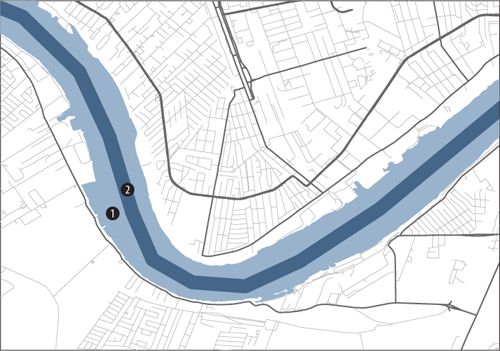

Linear features, such roads, rivers, power lines, and bus routes, all lend themselves to being characterized as lines. Once again, however, scale can make a difference. For example, a map of New Orleans could show the Mississippi River as a polygon rather than a line because it’s so wide. This would also allow the map to show the irregular banks of the river, rather than just a smooth line, as shown in figure 3.2.

Figure 3.2. The difference between using polygon ![]() and line

and line ![]() geometries to represent the Mississippi River. The polygon shows the details along the banks, while the line doesn’t.

geometries to represent the Mississippi River. The polygon shows the details along the banks, while the line doesn’t.

Vector data is more than geometries, however. Each one of these features also has associated attributes. These attributes can relate directly to the geometry itself, such as the area or perimeter of a polygon, or length of a line, but other attributes may be present as well. Figure 3.3 shows a simple example of a states dataset that stores the state name, abbreviation, population, and other data along with each feature. As you can see from the figure, these attributes can be of various types. They can be numeric, such as the city population or road speed limit, strings like city or road names, or dates such as the date the land parcel was purchased or last appraised. Certain types of vector data also support BLOBs (binary large objects), which can be used to store binary data such as photographs.

Figure 3.3. An attribute table for a dataset containing state boundaries within the United States. Each state polygon has an associated row in the data table with several attributes, including state name and population in 2010.

It should be clear by now that this type of data is well suited for making maps, but some reasons might not be so obvious. One example is how well it scales when drawing. If you’re familiar with web graphics, you probably know that vector graphics such as SVG (scalable vector graphics) work much better than raster graphics such as PNG when displayed at different scales. Even if you know nothing about SVG, you’ve surely seen an image on a website that’s pixelated and ugly. That’s a raster graphic displayed at a higher resolution than it was designed for. This doesn’t happen with vector graphics, and the exact same principle applies to vector GIS data. It always looks smooth, no matter the scale.

That doesn’t mean that scale is irrelevant, though. As you saw earlier, scale affects the type of geometry used to represent a geographic feature, but it also affects the resolution you should use for a feature. A simple way to think of resolution is to equate it to detail. The higher the resolution, the more detail can be shown. For example, a map of the United States wouldn’t show all of the individual San Juan Islands off the coast of Washington State, and in fact, the dataset wouldn’t even need to include them. A map of only Washington State, however, would definitely need a higher-resolution dataset that includes the islands, as seen in figure 3.4. Keep in mind that resolution isn’t important only for display, but also for analysis. For example, the two maps of Washington would provide extremely different measurements for coastline length.

Figure 3.4. An example showing the difference that resolution makes. The dataset shown with the thick outline has a lower resolution than the one shown with shading. Notice the difference in the amount of detail available in the two datasets.

THE COASTLINE PARADOX

Have you ever thought about how to measure the coastline of a landmass? As first pointed out by the English mathematician Lewis Fry Richardson, this isn’t as easy as you might think, because the final measurement depends totally on scale. For example, think about a wild section of coastline with multiple headlands, with a road running along beside it. Imagine that you drive along that road and use your car’s odometer to measure the distance, and then you get out of the car and walk back the way you came. But when on foot, you walk out along the edges of the headlands and follow other curves in the coast that the road doesn’t. It should be easy to imagine that you’d walk farther than you drove because you took more detours. The same principle applies when measuring the entire coastline, because you can measure more variation if you measure in smaller increments. In fact, measuring the coast of Great Britain in 50-km increments instead of 100-km increments increases the final measurement by about 600 km. You can see another example of this, using part of Washington State, in figure 3.3. If you were to measure all of the twists and turns in the higher-resolution dataset, you’d get a longer coastline measurement than if you measured the lower-resolution coastline shown by the dark line, which doesn’t even include many of the islands.

As mentioned previously, vector data isn’t only for making maps. In fact, I couldn’t make a pretty map if my life depended on it, but I do know a little bit more about data analysis. One common type of vector data analysis is to measure relationships between geographic features, typically by overlaying them on one another to determine their spatial relationship. For example, you could determine if two features overlap spatially and what that area of overlap is. Figure 3.5 shows the New Orleans city boundaries overlaid on a wetlands dataset. You could use this information to determine where wetlands exist within the city of New Orleans and how much of the city’s area is or isn’t wetland.

Figure 3.5. An example of a vector overlay operation. The dark outline is the City of New Orleans boundary, and the darker land areas are wetlands. These two datasets could be used to determine the percentage of land area within the New Orleans boundary that is wetlands.

Another aspect of spatial relationships is the distance between two features. You could find the distance between two weather stations, or all of the sandwich shops within one mile of your office. I helped out with a study a few years ago in which the researchers needed both distances and spatial relationships. They needed to know how far GPS-collared deer traveled between readings, but also the direction of travel and how they interacted with man-made features such as roads. One question in particular was if they crossed the roads, and if so, how often.

Speaking of roads, vector datasets also do a good job of representing networks, such as road networks. A properly configured road network can be used to find routes and drive times between two locations, similar to the results you see on various web-mapping sites. Businesses can also use information like this to provide services. For example, a pizza joint might use network analysis to determine which parts of town they can reach within a 15-minute drive to set their delivery area.

As with other types of data, you have multiple ways to store vector data. Similar to the way you can store a photograph as a JPEG, PNG, TIFF, bitmap, or one of many other file types, many different file formats can be used for storing vector data. I’ll talk more about the possibilities in the next chapter, but for now I’ll briefly mention a few common formats, several of which we’ll use in this chapter.

Shapefiles are a popular format for storing vector data. A shapefile isn’t made of a single file, however. In fact, this format requires a minimum of three binary files, each of which serves a different purpose. Geometry information is stored in .shp and .shx files, and attribute values are stored in a .dbf file. Additionally, other data, such as indexes or spatial reference information, can be stored in even more files. Generally you don’t need to know anything about these files, but you do need to make sure that they’re all kept together in the same folder.

Another widely used format, especially for web-mapping applications, is GeoJSON. These are plain text files that you can open up and look at in any text editor. Unlike a shapefile, a GeoJSON dataset consists of one file that stores all required information.

Vector data can also be stored in relational databases, which allows for multiuser access as well as various types of indexing. Two of the most common options for this are spatial extensions built for widely used database systems. The PostGIS extension runs on top of PostgreSQL, and SpatiaLite works with SQLite databases. Another popular database format is the Esri file geodatabase, which is completely different in that it isn’t part of an existing database system.

3.2. Introduction to OGR

The OGR Simple Features Library is part of the Geospatial Data Abstraction Library (GDAL), an extremely popular open source library for reading and writing spatial data. The OGR portion of GDAL is the part that provides the ability to read and write many different vector data formats. OGR also allows you to create and manipulate geometries; edit attribute values; filter vector data based on attribute values or spatial location; and it also offers data analysis capabilities. In short, if you want to use GDAL to work with vector data, OGR is what you need to learn about, and you will, in the next four chapters.

The GDAL library was originally written in C and C++, but it has bindings for several other languages, including Python, so there’s an interface to the GDAL/OGR library from Python, not that the code was rewritten in Python. Therefore, to use GDAL with Python, you need to install both the GDAL library and the Python bindings for it. If you haven’t yet done this, please see appendix A for detailed installation instructions.

NOTE

What does the OGR acronym stand for, anyway? It used to stand for OpenGIS Simple Features Reference Implementation, but because OGR isn’t fully compliant with the OpenGIS Simple Features specification, the name was changed and now the OGR part of it doesn’t stand for anything and is only historical in nature.

Several functions used in this chapter are from the ospybook Python module available for download at www.manning.com/books/geoprocessing-with-python. You’ll want to install this module, too. The sample datasets are available from the same site.

Before you start working with OGR, it’s useful to look at how various objects in the OGR universe are related to each other, as shown in figure 3.6. If you don’t understand this hierarchy, then the steps required to read and write data won’t make much sense. When you use OGR to open a data source, such as a shapefile, GeoJSON file, SpatiaLite, or PostGIS database, you’ll have a DataSource object. This data source can have one or more child Layer objects, one for each dataset contained in the data source. Many vector data formats, such as the shapefile examples used in this chapter, can only contain one dataset. But others, such as SpatiaLite, can contain multiple datasets, and you’ll see examples of this in the next chapter. Regardless of how many datasets are in a data source, each one is considered a layer by OGR. Even several of my students, who use GIS regularly for their classes and research, get confused by this if they mostly use shapefiles, because it’s counterintuitive to them that something called a layer sits between the data source and the actual data.

Figure 3.6. The OGR class structure. Each data source can have multiple layers, each layer can have multiple features, and each feature contains a geometry and one or more attributes.

And speaking of the actual data, each layer contains a collection of Feature objects that holds the geometries and their attributes. If you load vector data into a GIS, such as QGIS, and then look at the attribute table, you’ll see something similar to figure 3.7. Each row in the table corresponds to a feature, such as the feature representing Afghanistan. Each column corresponds to an attribute field, and in this case two of the attributes are SOVEREIGNT and TYPE. Although you can open data tables that don’t have any spatial information or geometries associated with the features, we’ll work with datasets that do have geometries. As you can see in figure 3.7, the geometries don’t show up in the attribute table in QGIS, although other GIS software packages, such as ArcGIS, do show a shape column in the attribute table.

Figure 3.7. An example of an attribute table shown in QGIS. Each row corresponds to a feature, and each column is an attribute field.

The first step to accessing any vector data is to open the data source. For this, you need to have an appropriate driver that tells OGR how to work with your data format. The GDAL/OGR website lists more than 70 vector formats that OGR is capable of reading, although it can’t write to all of them. Each one of these has its own driver. It’s likely that your version of OGR doesn’t support all of those listed, but you can always compile it yourself if you need something that’s missing (note that this is easier said than done in many cases). See www.gdal.org/ogr_formats.html for the list of all available formats and specific details pertaining to each one.

DEFINITION

A driver is a translator for a specific data format, such as Geo-JSON or shapefile. It tells OGR how to read and write that particular format. If no driver for a format is compiled into OGR, then OGR can’t work with it.

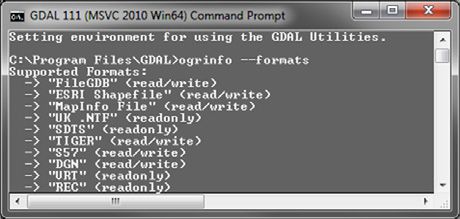

If you aren’t sure if your installation of GDAL/OGR supports a particular data format, you can use the ogrinfo command-line utility to find out which drivers are available. The location of this utility on your computer depends on your operating system and how you installed GDAL, so you might need to refer back to appendix A. If you aren’t used to using a command line, you may be tempted to double-click the ogrinfo executable file, but that won’t get you anywhere useful. Instead, you need to run ogrinfo from a terminal window or Windows command prompt. At any rate, once you find the executable, you’ll want to run it with the --formats option. Figure 3.8 shows an example of running it on my Windows 7 machine, although I’ve cut off most of the output.

Figure 3.8. An example of running the ogrinfo utility from a GDAL command prompt on a Windows computer

As you can see, ogrinfo not only tells you which drivers are included with your version of OGR, but also whether it can write to each one as well as read from it.

TIP

Information about vector formats supported by OGR can be found at www.gdal.org/ogr_formats.html.

You can also determine which drivers are available using Python. In fact, let’s try it. Start by opening up your favorite Python interactive environment. I’ll use IDLE (figure 3.9) because it’s the one that’s packaged with Python, but you can use whichever one you’re comfortable with. The first thing you need to do is import the ogr module so that you can use it. This module lives inside the osgeo package, which was installed when you installed the Python bindings for GDAL. All of the modules in this package are named with lowercase letters, which is how you need to refer to them in Python. Once you’ve imported ogr, then you can use ogr.GetDriverByName to find a specific driver:

窗体顶端

1

2

from osgeo import ogr

driver = ogr.GetDriverByName('GeoJSON')

窗体底端

Figure 3.9. Sample Python interactive session showing how to get drivers

Use the name from the Code column on the OGR Vector Formats webpage. If you get a valid driver and print it out, you’ll see information about where the object is stored in memory. The important thing is that there was something for it to print out because it means you successfully found a driver. If you pass an invalid name, or the name of a missing driver, the function will return None. See figure 3.9 for examples.

A function called print_drivers in the ospybook module will also print out a list of available drivers. This is shown in figure 3.9.

3.3. Reading vector data

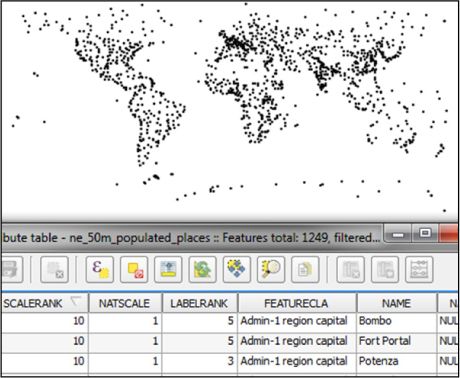

Now that you know what formats are available to work with, it’s time to read data. You’ll start with a cities shapefile, the ne_50m_populated_places.shp dataset in the global subfolder of your osgeopy-data folder. Feel free to open it up in QGIS and look. Not only will you see the cities shown in figure 3.10, but you’ll also see that the attribute table contains a collection of fields, most of which aren’t visible in the screenshot.

Figure 3.10. The geometries and attributes from ne_50m_populated_places.shp as seen in QGIS

Listing 3.1 shows a little script that prints out the names, populations, and coordinates for the first 10 features in this dataset. Don’t worry if it doesn’t make much sense at first glance because we’ll go over it in excruciating detail in a moment. The file is included with the source code for this chapter, so if you want to try it out, you can open it in IDLE, change the filename in the third line of code to match your setup, and then choose Run Module under the Run menu.

Listing 3.1. Printing data from the first ten features in a shapefile

The basic outline is simple. The first thing you do is open the shapefile and make sure that the result of that operation isn’t equal to None, because that would mean the data source couldn’t be opened. I tend to call this variable ds, short for data source. After making sure the file is opened, you retrieve the first layer from the data source. Then you iterate through the first 10 features in the layer and for each one, get the geometry object, its coordinates, and the NAME and POP_MAX attribute values. Then you print the information about the feature before moving on to the next one. When done, you delete the ds variable to force the file to close.

If you successfully ran the code, you should have 10 lines of output that look something like this, although you won’t have the parentheses if using Python 3:

窗体顶端

1

2

3

4

('Bombo', 75000, 32.533299524864844, 0.5832991056146284)

('Fort Portal', 42670, 30.27500161597942, 0.671004121125236)

('Clermont-Ferrand', 233050, 3.080008095928406, 45.779982115759424)

窗体底端

Let’s look at this in a little more detail. You open a data source by passing the filename and an optional update flag to the Open function. This is a standalone function in the OGR module, so you prefix the function name with the module name so that Python can find it. If the second parameter isn’t provided it defaults to 0, which will open the file in read-only mode. You could have passed 1 or True to open it in update, or edit, mode instead.

If the file can’t be opened, then the Open function returns None, so the next thing you do is check for this and print out an error message and quit if needed. I like to check for this so I can solve the problem immediately and in the manner of my choosing (quitting, in this case) instead of waiting for the script to crash when it tries to use the nonexistent data source. Change the filename in listing 3.1 to a bogus one and run the script if you want to see this behavior in action:

窗体顶端

1

2

3

4

5

fn = r'D:\osgeopy-data\global\ne_50m_populated_places.shp'

ds = ogr.Open(fn, 0)

if ds is None:

sys.exit('Could not open {0}.'.format(fn))

lyr = ds.GetLayer(0)

窗体底端

Remember that data sources are made of one or more layers that hold the data, so after opening the data source you need to get the layer from it. Data sources have a function called GetLayer that takes either a layer index or a layer name and returns the corresponding Layer object inside that particular data source. Layer indexes start at 0, so the first layer has index 0, the second has index 1, and so on. If you don’t provide any parameters to GetLayer, then it returns the first layer in the data source. The shapefile only has one layer, so the index isn’t technically needed in this case.

Now you want to get the data out of your layer. Recall that each layer is made of one or more features, with each feature representing a geographic object. The geometries and attribute values are attached to these features, so you need to look at them to get your data. The second half of the code in listing 3.1 loops through the first 10 features in the layer and prints information about each one. Here’s the interesting part of it again:

窗体顶端

1

2

3

4

5

6

7

for feat in lyr:

pt = feat.geometry()

x = pt.GetX()

y = pt.GetY()

name = feat.GetField('NAME')

pop = feat.GetField('POP_MAX')

print(name, pop, x, y)

窗体底端

The layer is a collection of features that you can iterate over with a for loop. Each time through the loop, the feat variable will be the next feature in the layer, and the loop will iterate over all features in the layer before stopping. You don’t want to print out all 1,249 features, though, so you force it to stop after the first 10.

The first thing you do inside the loop is get the geometry from the feature and stick it in a variable called pt. Once you have the geometry, you grab its x and y coordinates and store them in variables to use later.

Next you retrieve the values from the NAME and POP_MAX fields and store those in variables as well. The GetField function takes either an attribute name or index and returns the value of that field. Once you have the attributes, you print out all of the information you gathered about the current feature.

One thing you should be aware of is that the GetField function returns data that’s the same data type as that in the underlying dataset. In this example, the value in the name variable is a string, but the value stored in pop is a number. If you want the data in another format, check out appendix B to see a list of functions that return values as a specific type. For example, if you wanted pop to be a string so that you could concatenate it to another string, you could use GetFieldAsString.

窗体顶端

1

pop = feat.GetFieldAsString('POP_MAX')

窗体底端

Note that not all data formats support all field types, and not all data can successfully be converted between types, so you should test things thoroughly before relying on these automatic conversions. Not only are these functions useful for converting data between types, but you can also use them to make data types more evident in your code. For example, if you use GetFieldAsInteger, then it’s obvious to anyone reading your code that the value is an integer.

3.3.1. Accessing specific features

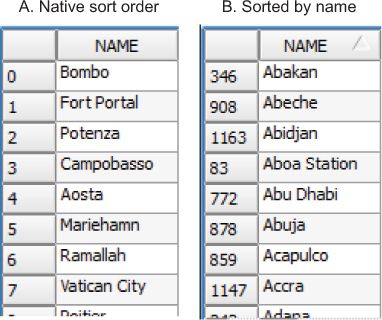

Sometimes you don’t need every feature, so you have no reason to iterate through all of them as you’ve done so far. One powerful method of limiting features to a subset is to select them by attribute value or spatial extent, and you’ll do that in chapter 5. Another way is to look at features with specific offsets, also called feature IDs (FIDs). The offset is the position that the feature is at in the dataset, starting with zero. It depends entirely on the position of the feature in the file and has nothing to do with the sort order in memory. For example, if you open the ne_50m_populated_places shapefile in QGIS and look at the attribute table, it would show Bombo as the first record in the table, as in figure 3.11A. See the numbers in the left-most column? Those are the offset values. Now try sorting the table by name by clicking on the NAME column header, as shown in figure 3.11B. Now the first record shown in the table is the one for Abakan, but it has an offset of 346. As you can see, that left-most column isn’t a row number like you see in spreadsheets, where the row numbers are always in the right order no matter how you sort the data. These numbers represent the order in the file instead.

Figure 3.11. The attribute table for the ne_50m_populated_places shapefile. Table A shows the native sort order, with the FIDs in order. Table B has been sorted by city name, and the FIDs are no longer ordered sequentially.

If you know the offset of the feature you want, you can ask for that feature by FID. To get the feature for Vatican City, you use GetFeature(7).

You can also get the total number of features with GetFeatureCount, so you could grab the last feature in the layer like this:

窗体顶端

1

2

3

4

>>> num_features = lyr.GetFeatureCount()

>>> last_feature = lyr.GetFeature(num_features - 1)

>>> last_feature.NAME

'Hong Kong'

窗体底端

You have to subtract one from the total number of features because the first index is zero. If you had tried to get the feature at index num_features, you’d have gotten an error message saying that the feature ID was out of the available range. This snippet also shows an alternate way of retrieving an attribute value from a feature, instead of using GetField, but it only works if you know the names beforehand so that you can hardcode them into your script.

The current feature

Another important point is that the functions that return features keep track of which feature was last accessed; this is the current feature. When you first get the layer object, it has no current feature. But if you start iterating through features, the first time through the loop, the current feature is the one with an FID of zero. The second time through the loop, the current feature is the one with offset 1, and so on. If you use GetFeature to get the one with an FID of 5, that’s now the current feature, and if you then call GetNextFeature or start a loop, the next feature returned will be the one with offset 6. Yes, you read that right. If you iterate through the features in the layer, it doesn’t start at the first one if you’ve already set the current feature.

Based on what you’ve learned so far, what do you think would happen if you iterated through all of the features and printed out their names and populations, but then later tried to iterate through a second time to print out their names and coordinates? If you guessed that no coordinates would print out, you were right. The first loop stops when it runs out of features, so the current feature is pointing past the last one and isn’t reset to the beginning (see figure 3.12). No next feature is there when the second loop starts, so nothing happens. How do you get the current feature to point to the beginning again? You wouldn’t want to use a FID of zero, because if you tried to iterate through them all, the first feature would be skipped. To solve this problem, use the layer.ResetReading() function, which sets the current feature pointer to a location before the first feature, similar to when you first opened the layer.

Figure 3.12. The location of the current feature pointer at various times

3.3.2. Viewing your data

Before we continue, you might find it useful to know about functions in the ospybook module that will help you visualize your data without opening it in another software program. These don’t allow the level of interaction with the data that a GIS does, so opening it in QGIS is still a much better option for exploring the data in any depth.

Viewing attributes

You can print out attribute values to your screen using the print_attributes function, which looks like this:

窗体顶端

1

print_attributes(lyr_or_fn, [n], [fields], [geom], [reset])

窗体底端

- lyr_or_fn is either a layer object or the path to a data source. If it’s a data source, the first layer will be used.

- n is an optional number of records to print. The default is to print them all.

- fields is an optional list of attribute fields to include in the printout. The default is to include them all.

- geom is an optional Boolean flag indicating whether the geometry type is printed. The default is True.

- reset is an optional Boolean flag indicating whether the layer should be reset to the first record before printing. The default is True.

For example, to print out the name and population for the first three cities in the populated places shapefile, you could do something like this from a Python interactive window:

窗体顶端

1

2

3

4

5

6

7

8

9

>>> import ospybook as pb

>>> fn = r'D:\osgeopy-data\global\ne_50m_populated_places.shp'

>>> pb.print_attributes(fn, 3, ['NAME', 'POP_MAX'])

FID Geometry NAME POP_MAX

0 POINT (32.533, 0.583) Bombo 75000

1 POINT (30.275, 0.671) Fort Portal 42670

2 POINT (15.799, 40.642) Potenza 69060

3 of 1249 features

窗体底端

Normally, you must provide arguments to functions in the order they’re listed, but if you want to provide an optional argument without specifying values for earlier optional parameters, you can use keywords to specify which parameter you mean. For example, If you wanted to set geom to False without specifying a list of fields, you could do it like this:

窗体顶端

1

pb.print_attributes(fn, 3, geom=False)

窗体底端

This function works well for viewing small numbers of attributes, but you’ll probably regret using it to print all attributes of a large file.

Plotting spatial data

The ospybook module also contains convenience classes to help you visualize your data spatially, although you’ll learn how to do it yourself in the last chapter. To use these, you must have the matplotlib Python module installed. To plot your data, you need to create a new instance of the VectorPlotter class and pass a Boolean parameter to the constructor indicating if you want to use interactive mode. If interactive, the data will be drawn immediately when you plot it. If not interactive, you’ll need to call draw after plotting the data, and everything will be drawn at once. Either way, once you’ve created this object, you can use it to plot your data with the plot method:

窗体顶端

1

plot(self, geom_or_lyr, [symbol], [name], [kwargs])

窗体底端

- geom_or_lyr is a geometry, layer, or path to a data source. If a data source, the first layer will be drawn.

- symbol is an optional pyplot symbol to draw the geometries with.

- name is an optional name to assign to the data so it can be accessed later.

- kwargs are optional pyplot drawing parameters that are specified by keyword (you’ll see the abbreviation kwargs used often for an indeterminate number of keyword arguments).

The plot function can optionally use parameters from the pyplot interface in matplotlib. You’ll see a few used in this book, but to see more you can read the pyplot documentation at http://matplotlib.org/1.5.0/api/pyplot_summary.html. Let’s start with an example that plots the populated places shapefile on top of country outlines:

窗体顶端

1

2

3

4

5

6

>>> import os

>>> os.chdir(r'D:\osgeopy-data\global')

>>> from ospybook.vectorplotter import VectorPlotter

>>> vp = VectorPlotter(True)

>>> vp.plot('ne_50m_admin_0_countries.shp', fill=False)

>>> vp.plot('ne_50m_populated_places.shp', 'bo')

窗体底端

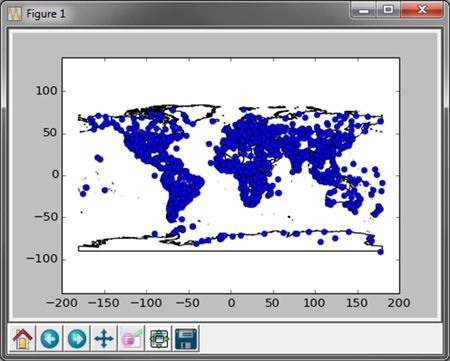

The first thing you do is use the built-in os module to change your working directory, which allows you to use filenames later instead of typing the entire path. Then you pass True to VectorPlotter to create an interactive plotter. The fill pyplot parameter causes the countries shapefile to be drawn as hollow polygons, and the 'bo' symbol for populated places means blue circles. This results in a plot that looks like figure 3.13.

Figure 3.13. The output from plotting the global populated places shapefile on top of the country outlines

You don’t need to do anything special if you want to use this in a script, but you should know that when the plotter isn’t created with interactive mode, it will stop script execution until you close the window that pops up. I’ve also discovered that depending on the environment I’m running the script from, sometimes it closes itself automatically if I created it with interactive mode, so I never get the chance to view it. Because of this, if I’m using a VectorPlotter in a script instead of a Python interactive window, I usually create it using non-interactive mode and call draw at the end of the script. The source code for this chapter has examples of this.

3.4. Getting metadata about the data

Sometimes you also need to know general information about a dataset, such as the number of features, spatial extent, geometry type, spatial reference system, or the names and types of attribute fields. For example, say you want to display your data on top of a Google map. You need to make sure that your data use the same spatial reference system as Google, and you need to know the spatial extent so that you can have your map zoom to the correct part of the world. Because different geometry types have different drawing options, you also need to know geometry types to define the symbology for your features.

You’ve already seen how to get some of these, such as the number of features in a layer with GetFeatureCount. Remember that this applies to the layer and not the data source, because each layer in a data source can have a different number of features, geometry type, spatial extent, or attributes.

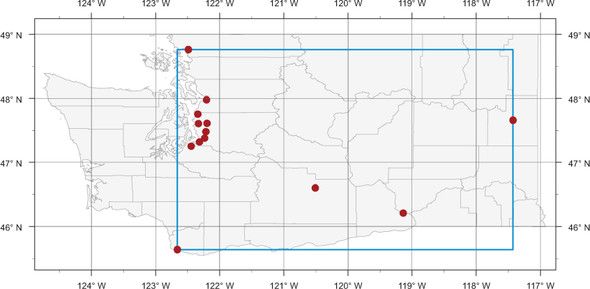

The spatial extent of a layer is the rectangle constructed from the minimum and maximum bounding coordinates in all directions. Figure 3.14 shows the Washington large_cities file and its extent. You can get these bounding coordinates from a layer object with the GetExtent function, which returns a tuple of numbers as (min_x, max_x, min_y, max_y). Here’s an example:

窗体顶端

1

2

3

4

5

6

>>> ds = ogr.Open(r'D:\osgeopy-data\Washington\large_cities.geojson')

>>> lyr = ds.GetLayer(0)

>>> extent = lyr.GetExtent()

>>> print(extent)

(-122.66148376464844, -117.4260482788086, 45.638729095458984,

48.759552001953125)

窗体底端

Figure 3.14. Here you can see the spatial extent of the large_cities dataset. The minimum and maximum longitude (x) values are approximately -122.7 and -117.4, respectively. The minimum and maximum latitude (y) values are approximately 45.6 and 48.8.

Compare these numbers to those in figure 3.14 to better understand what’s returned in the extent tuple.

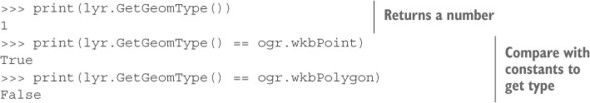

You can also get the geometry type from the layer object, but there’s a catch. The GetGeomType function returns an integer instead of a human-readable string. But how is that useful? The OGR module has a number of constants, shown in table 3.1, which are basically unchangeable variables with descriptive names and numeric values. You can compare the value you get with GetGeomType to one of these constants in order to check if it’s that geometry type. For example, the constant for point geometries is wkbPoint and the one for polygons is wkbPolygon, so continuing with the previous example, you could find out if large_cities.shp is a point or polygon shapefile like this:

Table 3.1. Common geometry type constants. You can find more in appendix B.

| Geometry type |

OGR constant |

| Point |

wkbPoint |

| Mulitpoint |

wkbMultiPoint |

| Line |

wkbLineString |

| Multiline |

wkbMultiLineString |

| Polygon |

wkbPolygon |

| Multipolygon |

wkbMultiPolygon |

| Unknown geometry type |

wkbUnknown |

| No geometry |

wkbNone |

If the layer has geometries of varying types, such as a mixture of points and polygons, GetGeomType will return wkbUnknown.

NOTE

The wkb prefix on the OGR geometry constants stands for well-known binary (WKB), which is a standard binary representation used to exchange geometries between different software packages. Because it’s binary, it isn’t human-readable, but a well-known text (WKT) format does exist that is readable.

Sometimes you’d rather have a human-readable string, however, and you can get this from one of the feature geometries. The following example grabs the first feature in the layer, gets the geometry object from that feature, and then prints the name of the geometry:

窗体顶端

1

2

3

>>> feat = lyr.GetFeature(0)

>>> print(feat.geometry().GetGeometryName())

POINT

窗体底端

Another useful piece of data you can get from the layer object is the spatial reference system, which describes the coordinate system that the dataset uses. Your GPS unit probably shows unprojected, or geographic, coordinates by default. These are the latitude and longitude coordinates that we’re all familiar with. These geographic coordinates can be converted to many other types of coordinate systems, however, and if you don’t know which of these systems a dataset uses, then you have no way of knowing where on the earth the coordinates refer to. Obviously, this is a crucial bit of metadata, and I’ll talk more about it in chapter 8. For now, you only need to know that you can get this information. If you print it out, you’ll get a string that describes the reference system in WKT format, like that shown in listing 3.2.

Listing 3.2. Example of well-known text representation of a spatial reference system

1

2

3

4

5

6

7

8

9

10

11

12

13

>>> print(lyr.GetSpatialRef())

GEOGCS["NAD83",

DATUM["North_American_Datum_1983",

SPHEROID["GRS 1980",6378137,298.257222101,

AUTHORITY["EPSG","7019"]],

TOWGS84[0,0,0,0,0,0,0],

AUTHORITY["EPSG","6269"]],

PRIMEM["Greenwich",0,

AUTHORITY["EPSG","8901"]],

UNIT["degree",0.0174532925199433,

AUTHORITY["EPSG","9122"]],

AUTHORITY["EPSG","4269"]]

Depending on your GIS experience, this output may or may not mean much to you. Don’t worry if it makes no sense now, because you’ll learn all about it later.

Last, you can also get information about the attribute fields attached to the layer. The easiest way to do this is to use the schema property on the layer object to get a list of FieldDefn objects. Each of these contains information such as the attribute column name and data type. Here’s an example of printing out the name and data type of each field:

窗体顶端

1

2

3

4

5

6

7

>>> for field in lyr.schema:

... print(field.name, field.GetTypeName())

...

CITIESX020 Integer

FEATURE String

NAME String

窗体底端

Part of this output was left out in the interest of space, but you can run the code yourself to see the rest of the fields in the layer. You’ll learn more about working with FieldDefn objects in section 3.5.2.

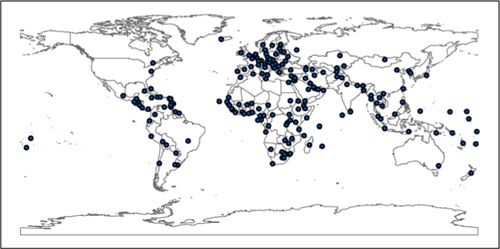

3.5. Writing vector data

Reading data is definitely useful, but you’ll probably need to edit existing or create new datasets. Listing 3.3 shows how to create a new shapefile that contains only the features corresponding to capital cities in the global populated places shapefile. The output will look like the cities in figure 3.15.

Figure 3.15. Capital cities with country outlines for reference

Listing 3.3. Exporting capital cities to a new shapefile

In this example you open up a folder instead of a shapefile as the data source. A nice feature of the shapefile driver is that it will treat a folder as a data source if a majority of the files in the folder are shapefiles, and each shapefile is treated as a layer. Notice that you pass 1 as the second parameter to Open, which will allow you to create a new layer (shapefile) in the folder. You pass the shapefile name, without the extension, to GetLayer to get the populated places shapefile as a layer. Even though you open it differently here than in listing 3.1, you can use it in exactly the same way.

Because OGR won’t overwrite existing layers, you check to see if the output layer already exists, and delete it if it did. Obviously you wouldn’t want to do this if you didn’t want the layer overwritten, but in this case you can overwrite data as you test different things.

Then you create a new layer to store your output data in. The only required parameter for CreateLayer is a name for the layer, which should be unique within the data source. You do have, however, several optional parameters that you should set when possible:

窗体顶端

1

CreateLayer(name, [srs], [geom_type], [options])

窗体底端

- name is the name of the layer to create.

- srs is the spatial reference system that the layer will use. The default is None, meaning that no spatial reference system will be assigned.

- geom_type is a geometry type constant from table 3.1 that specifies the type of geometry the layer will hold. The default is wkbUnknown.

- options is an optional list of layer-creation options, which only applies to certain vector format types.

The first of these optional parameters is the spatial reference, which defaults to None if not provided. Remember that without spatial reference information, it’s extremely difficult to figure out where the features are on the planet. Sometimes the spatial reference is implicit in the data; for example, KML only supports unprojected coordinates using the WGS 84 datum, but you should set this if possible. In this case, you copy the spatial reference information from the original shapefile to the new one. We’ll discuss spatial reference systems and how to use them in more detail in chapter 8.

The second optional parameter is one of the OGR geometry type constants from either table 3.1 or appendix B. This specifies the type of geometries that the layer will contain. If not provided, it defaults to ogr.wkbUnknown, although in many cases this will be updated to the correct value after you add features to the layer and it can be determined from them.

The last optional parameter is a list of layer-creation option strings in the form of option=value. These are documented for each driver on the OGR formats webpage. Not all vector data formats have layer-creation options, and even if a format does have options, you’re under no obligation to use them.

You use the following code to create a new point shapefile called capital_cities.shp that uses the same spatial reference system as the populated places shapefile. You do one more thing, though. The schema property on the input layer returns a list of attribute field definitions for that layer, and you pass that list to CreateFields to create the exact same set of attribute fields in the new layer:

窗体顶端

1

2

3

4

out_lyr = ds.CreateLayer('capital_cities',

in_lyr.GetSpatialRef(),

ogr.wkbPoint)

out_lyr.CreateFields(in_lyr.schema)

窗体底端

Now, to add a feature to a layer, you need to create a dummy feature that you add the geometry and attributes to, and then you insert that into the layer. The next step is to create this blank feature. Creating a feature requires a feature definition that contains information about the geometry type and all of the attribute fields, and this is used to create an empty feature with the same fields and geometry type. You need to get the feature definition from the layer you plan to add features to, but you must do it after you’ve added, deleted, or updated any fields. If you get the feature definition first, and then change the fields in any way, the definition will be out of date. This means that a feature you try to insert based on this outdated definition will not match reality, as seen in figure 3.16. This will cause Python to die a horrible death, and you definitely don’t want that.

Figure 3.16. Always get feature definitions after making changes to fields, or the definition will not match reality.

窗体顶端

1

2

out_defn = out_lyr.GetLayerDefn()

out_feat = ogr.Feature(out_defn)

窗体底端

Now that you have a feature to put information into, it’s time to start looping through the input dataset. For each feature, you check to see if its FEATURECLA attribute was equal to 'Admin-0 capital', which means it’s a capital city. If it is, then you copy the geometry from it into the dummy feature. Then you loop through all of the fields in the attribute table and copy the values from the input feature into the output feature. This works because you create the fields in the new shapefile based on the fields in the original, so they’re in the same order in both shapefiles. If they were in different orders, you’d have to use their names to access them, but you can use indexes here because you know that they match:

窗体顶端

1

2

3

4

5

6

7

8

for in_feat in in_lyr:

if in_feat.GetField('FEATURECLA') == 'Admin-0 capital':

geom = in_feat.geometry()

out_feat.SetGeometry(geom)

for i in range(in_feat.GetFieldCount()):

value = in_feat.GetField(i)

out_feat.SetField(i, value)

out_lyr.CreateFeature(out_feat)

窗体底端

Once you copy all of the attribute fields over, you insert the feature into the layer using CreateFeature. This function saves a copy of the feature, including all of the information you add to it, to the layer. The feature object can then be reused, and whatever you do to it won’t affect the data that have already been added to the layer. This way you don’t have the overhead of creating multiple features, because you can create a single one and keep editing its data each time you want to add a new feature to the layer.

You delete the ds variable at the end of the script, which forces the files to close and all of your edits to be written to disk. Deleting the layer variable doesn’t do the trick; you must close the data source. If you wanted to keep the data source open, you could call SyncToDisk on either the layer or data source object instead, like this:

窗体顶端

1

ds.SyncToDisk()

窗体底端

WARNING

You must close your files or call SyncToDisk to flush your edits to disk. If you don’t do this, and your interactive environment still has your data source open, you’ll be disappointed to find an empty dataset.

It’s always a good idea to carefully inspect your output to make sure you get the results you want. The best way would be to open it in QGIS, or you could get a good idea by plotting it from Python (figure 3.17):

窗体顶端

1

2

3

>>> vp = VectorPlotter(True)

>>> vp.plot('ne_50m_admin_0_countries.shp', fill=False)

>>> vp.plot('capital_cities.shp', 'bo')

窗体底端

Figure 3.17. The result of plotting the new capital cities shapefile on top of country outlines

Let’s return to the topic of adding attribute values for a moment. You might be wondering if multiple functions exist for setting attribute field values as with retrieving values. The answer is generally no. Most data will be converted to the correct type for you, but you may not like the results if a conversion isn’t possible. For example, pretend for a minute that you made a mistake and inserted the population into the Name field, and the name into the Population field. Do you think that the population could be converted to a string and successfully inserted into the Name field? How about converting the country name to a number so it could go in the Population field? Well, converting a number to a string works fine, but converting a string to a number is problematic. The string "3578" can be translated into the number 3578, but what about the string "Russia"? If you try it in a Python interactive window by typing int('Russia'), you’ll get an error, but OGR will insert a zero into the Population field instead of crashing. Sometimes this behavior is to your advantage because you don’t need to convert data before inserting it in a feature, but it can also be a problem if you mistakenly try to insert the wrong type of data into a field.

3.5.1. Creating new data sources

You used an existing data source in listing 3.3, but sometimes you’ll need to create new ones. Fortunately, it’s not difficult. Perhaps the most important part is that you use the correct driver. It’s the driver that does the work here, and each driver only knows how to work with one vector format, so using the correct one is essential. For example, the GeoJSON driver won’t create a shapefile, even if you ask it to create a file with an .shp extension. As shown in figure 3.18, the output will have an .shp extension, but it will still be a GeoJSON file at heart.

Figure 3.18. Using the GeoJSON driver to create a file with an .shp extension will still create a GeoJSON file, not a shapefile.

You have a couple of ways to get the required driver. The first is to get the driver from a dataset that you’ve already opened, which will allow you to create a new data source using the same vector data format as the existing data source. In this example, the driver variable will hold the ESRI shapefile driver:

窗体顶端

1

2

ds = ogr.Open(r'D:\osgeopy-data\global\ne_50m_admin_0_countries.shp')

driver = ds.GetDriver()

窗体底端

The second way to get a driver object is to use the OGR function GetDriverByName and pass it the short name of the driver. Remember that these names are available on the OGR website, by using the ogrinfo utility that comes with GDAL/OGR, or the print_drivers function available in the code accompanying this book. This example will get the GeoJSON driver:

窗体顶端

1

json_driver = ogr.GetDriverByName('GeoJSON')

窗体底端

Once you have a driver object, you can use it to create an empty data source by providing the data source name. This new data source is automatically open for writing, and you can add layers to it the way you did in listing 3.3. If the data source can’t be created, then CreateDataSource returns None, so you need to check for this condition:

窗体顶端

1

2

3

json_ds = json_driver.CreateDataSource(json_fn)

if json_ds is None:

sys.exit('Could not create {0}.'.format(json_fn))

窗体底端

A few data formats have creation options that you can use when creating a data source, although these aren’t required. Like layer-creation options, these parameters are documented on the OGR website. Don’t confuse the two, because data source and layer-creation options are two different things. Both types are passed as a list of strings, however. Let’s see how you’d use a data source–creation option to create a full-fledged SpatiaLite data source instead of SQLite. This will fail if your version of OGR wasn’t built with SpatiaLite support, though:

窗体顶端

1

2

3

driver = ogr.GetDriverByName('SQLite')

ds = driver.CreateDataSource(r'D:\ osgeopy-data\global\earth.sqlite',

['SPATIALITE=yes'])

窗体底端

Another thing to be aware of when creating new data sources is that you can’t overwrite an existing data source. If a chance exists that your code might legitimately try to overwrite a dataset, then you’ll need to delete the old one before attempting to create the new one. One way to deal with this would be to use the Python os.path.exists function to see if a file already exists before you attempt to create a data source; or you could wait and deal with it if your original attempt fails, either after checking for None or by using a try/except block. Either way, you should use the driver to delete the existing source instead of using a Python built-in function. Why? Because the driver will make sure that all required files are deleted. For example, if you’re deleting a shapefile, the shapefile driver will delete the .shp, .dbf, .shx, and any other optional files that may be present. If you were using the Python built-in module to delete the shapefile, you’d have to make sure your code checked for all of these files. Here’s an example of one way to deal with an existing data source:

窗体顶端

1

2

3

4

5

if os.path.exists(json_fn):

json_driver.DeleteDataSource(json_fn)

json_ds = json_driver.CreateDataSource(json_fn)

if json_ds is None:

sys.exit('Could not create {0}.'.format(json_fn))

窗体底端

TIP

If you try to create a shapefile as a data source rather than a layer (where the data source is the containing folder), and the shapefile already exists, you’ll get an odd error message saying that the shapefile isn’t a directory.

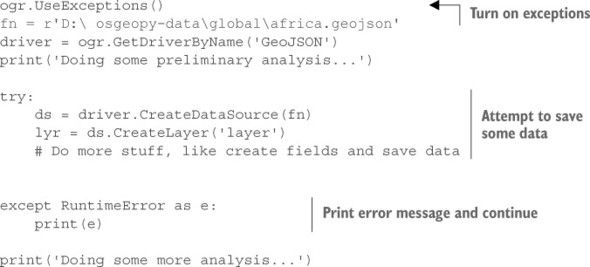

USING OGR EXCEPTIONS

By default, OGR doesn’t raise an error if it has a problem, such as failing to create a new data source. This is why you check for None, but Python programmers generally expect an error to be raised instead. You can enable this behavior if you’d like, by calling ogr.UseExceptions() at the beginning of your code. Although most of the time this works as anticipated, I’ve discovered that it doesn’t always raise an error when I expect. For example, no error is raised if OGR fails to open a data source. However, in instances where it does raise an error, you don’t need to check for None before continuing. Using exceptions also gives you flexibility with handling errors.

For example, here’s a contrived situation where I’m pretending to process data, then I want to save some temporary data to a GeoJSON file, and then I want to keep processing something else. If I can’t create the temporary file, I want to skip that step and go on to the next bit of data processing rather than crashing. Here’s the sample code:

Suppose that the africa.geojson file already exists. This code doesn’t check for that, so you know it will fail when you call CreateDataSource. If you weren’t using OGR exceptions, this script would fail at that point and never get to the last print statement. But because you’re using exceptions, you’ll get an error message saying that the file couldn’t be created, and then it will continue on to the last print statement, and the output will look like this:

窗体顶端

1

2

3

Doing some preliminary analysis...

The GeoJSON driver does not overwrite existing files.

Doing some more analysis...

窗体底端

Try it out yourself and comment out the first line, and watch how the behavior changes.

3.5.2. Creating new fields

You saw in listing 3.3 how to copy attribute field definitions from one layer to another, but you can also define your own custom fields. Several different field types are available, but not all are supported by all data formats. This is another situation when the online documentation for the various formats will come in handy, so hopefully you’ve bookmarked that page.

To add a field to a layer, you need a FieldDefn object that contains the important information about the field, such as name, data type, width, and precision. The schema property you used in listing 3.3 returns a list of these, one for each field in the layer. You can create your own, however, by providing the name and data type for the new field to the FieldDefn constructor. The data type is one of the constants from table 3.2.

Table 3.2. Field type constants. There are more shown in appendix B, but I have been unable to make them work in Python.

| Field data type |

OGR constant |

| Integer |

OFTInteger |

| List of integers |

OFTIntegerList |

| Floating point number |

OFTReal |

| List of floating point numbers |

OFTRealList |

| String |

OFTString |

| List of strings |

OFTStringList |

| Date |

OFTDate |

| Time of day |

OFTTime |

| Date and time |

OFTDateTime |

After you create a basic field definition, but before you use it to add a field to the layer, you can add other constraints such as floating-point precision or field width, although I’ve noticed that these don’t always have an effect, depending on the driver being used. For example, I haven’t been able to set a precision in a GeoJSON file, and I’ve also discovered that you must set a field width if you want to set field precision in a shapefile. This example would create two fields to hold x and y coordinates with a precision of 3:

You might have noticed that you don’t create two different field definition objects here. Once you’ve used the field definition to create a field in the layer, you can change the definition’s attributes and reuse it to create another field, which makes this easier because you want two fields that were identical except in name.

Also, sometimes the field width will be ignored if it’s too small for the data provided. For example, if you create a string field with a width of 6, but then try to insert a value that’s 11 characters long, in certain cases the width of the field would increase to hold the entire string. This isn’t always possible, however, and it’s best to be specific about what you want rather than hope something like this will conveniently happen.

3.6. Updating existing data

Sometimes you need to update existing data rather than create an entirely new dataset. Whether this is possible, and which edits are supported, depends on the format of the data. For example, you can’t edit GeoJSON files, but many different edits are allowed on shapefiles. We’ll discuss getting information about what’s supported in the next chapter.

3.6.1. Changing the layer definition

Depending on the type of data you’re working with, you can edit the layer definition by adding new fields, deleting existing ones, or changing field properties such as name. As with adding new fields, you need a field definition to change a field. Once you have a field definition that you’re happy with, you use the AlterFieldDefn function to replace the existing field with the new one:

窗体顶端

1

AlterFieldDefn(iField, field_def, nFlags)

窗体底端

- iField is the index of the field you want to change. A field name won’t work in this case.

- field_def is the new field definition object.

- nFlags is an integer that is the sum of one or more of the constants shown in table 3.3.

Table 3.3. Flags used to specify which properties of a field definition can be changed. To use more than one, simply add them together

| Field properties that need to change |

OGR constant |

| Field name only |

ALTER_NAME_FLAG |

| Field type only |

ALTER_TYPE_FLAG |

| Field width and/or precision only |

ALTER_WIDTH_PRECISION_FLAG |

| All of the above |

ALTER_ALL_FLAG |

To change a field’s properties, you need to create a field definition containing the new properties, find the index of the existing field, and decide which constants from table 3.3 to use to ensure your changes take effect. To change the name of a field from 'Name' to 'City_Name', you might do something like this:

窗体顶端

1

2

3

i = lyr.GetLayerDefn().GetFieldIndex('Name')

fld_defn = ogr.FieldDefn('City_Name', ogr.OFTString)

lyr.AlterFieldDefn(i, fld_defn, ogr.ALTER_NAME_FLAG)

窗体底端

If you needed to change multiple properties, such as both the name and the precision of a floating-point attribute field, you’d pass the sum of ALTER_NAME_FLAG and ALTER_WIDTH_PRECISION_FLAG, like this:

窗体顶端

1

2

3

4

5

6

7

8

9

lyr_defn = lyr.GetLayerDefn()

i = lyr_defn.GetFieldIndex('X')

width = lyr_defn.GetFieldDefn(i).GetWidth()

fld_defn = ogr.FieldDefn('X_coord', ogr.OFTReal)

fld_defn.SetWidth(width)

fld_defn.SetPrecision(4)

flag = ogr.ALTER_NAME_FLAG + ogr.ALTER_WIDTH_PRECISION_FLAG

lyr.AlterFieldDefn(i, fld_defn, flag)

窗体底端

Notice that you use the original field width when creating the new field definition. I found out the hard way that if you don’t set the width large enough to hold the original data, then the results will be incorrect. To get around the problem, use the original width. For the precision change to take effect, all records must be rewritten. Making the precision larger than it was won’t give you more precision, however, because data can’t be created from thin air. The precision can be decreased, however.

Instead of summing up flag values, you could cheat and just use ALTER_ALL_FLAG. Only do this if your new field definition is exactly what you want the field to look like after editing, however. The other flags limit what can change, but this one doesn’t. For example, if your field definition has a different data type than the original field but you pass ALTER_NAME_FLAG, then the data type will not change, but it will if you pass ALTER_ALL_FLAG.

3.6.2. Adding, updating, and deleting features

Adding new features to existing layers is exactly the same as adding them to brand-new layers. Create an empty feature based on the layer definition, populate it, and insert it into the layer. Updating features is much the same, except you work with features that already exist in the layer instead of blank ones. Find the feature you want to edit, make the desired changes, and then update the information in the layer by passing the updated feature to SetFeature instead of CreateFeature. For example, you could do something like this to add a unique ID value to each feature in a layer:

窗体顶端

1

2

3

4

5

6

lyr.CreateField(ogr.FieldDefn('ID', ogr.OFTInteger))

n = 1

for feat in lyr:

feat.SetField('ID', n)

lyr.SetFeature(feat)

n += 1

窗体底端

First you add an ID field, and then you iterate through the features and set the ID equal to the value of the n variable. Because you increment n each time through the loop, each feature has a unique ID value. Last, you update the feature in the layer by passing it to SetFeature.

Deleting features is even easier. All you need to know is the FID of the feature you want to get rid of. If you don’t know that number off the top of your head, or through another means, you can get it from the feature itself, like this:

窗体顶端

1

2

3

for feat in lyr:

if feat.GetField('City_Name') == 'Seattle':

lyr.DeleteFeature(feat.GetFID())

窗体底端

For each feature in the layer, you check to see if its 'City_Name' attribute is equal to 'Seattle', and if it is, you retrieve the FID from the feature itself and then pass that number to DeleteFeature.

Certain formats don’t completely kill the feature at this point, however. You may not see it, but sometimes the feature has only been marked for deletion instead of totally thrown out, so it’s still lurking in the shadows. Because of this, you won’t see any other features get assigned that FID, and it also means that if you’ve deleted many features, there may be a lot of needlessly used space in your file. See figure 3.19 for a simple example. Deleting these features will reclaim this space. If you have much experience with relational databases, you should be familiar with this idea. It’s similar to running Compact and Repair on a Microsoft Access database or using VACUUM on a PostgreSQL database.

Figure 3.19. The effect of vacuuming or repacking a database. Notice that the FID values change.

How to go about reclaiming this space, or determining if it needs to be done, is dependent on the vector data format being used. Here are examples for doing it for shapefiles and SQLite:

In both cases, you need to open the data source and then execute a SQL statement on it that compacts the database. For shapefiles you need to know the name of the layer, so if the layer is called "cities", then the SQL would be "REPACK cities".

Another issue with shapefiles is that they don’t update their metadata for spatial extent when existing features are modified or deleted. If you edit existing geometries or delete features, you can ensure that the spatial extent gets updated by calling this:

窗体顶端

1

ds.ExecuteSQL('RECOMPUTE EXTENT ON ' + lyr.GetName())

窗体底端

This isn’t necessary if you insert features, however, because those extent changes are tracked. It’s also not necessary if there’s no chance that your edits change the layer’s extent.

3.7. Summary

- Vector data formats are most appropriate for features that can be characterized as a point, line, or polygon.

- Each geographic feature in a vector dataset can have attribute data, such as name or population, attached to it.

- The type of geometry used to model a given feature may change depending on scale. A city could be represented as a point on a map of an entire country, but as a polygon on a map of a smaller area, such as a county.

- Vector datasets excel for measuring relationships between geographic features such as distances or overlaps.

- You can use OGR to read and write many different types of vector data, but which ones depend on which drivers have been compiled into your version of GDAL/OGR.

- Data sources can contain one or more layers (depending on data format), and in turn, layers can contain one or more features. Each feature has a geometry and a variable number of attribute fields.

- Newly created data sources are automatically opened for writing. If you want to edit existing data, remember to open the data source for writing.

- Remember to make changes to the layer, such as adding or deleting fields, before getting the layer definition and creating a feature for adding or updating data.