Spring Boot + MyBatis (MyBatis-Plus) + Druid: multiple data sources

Introducing and using Druid

Before using Druid, let's take a quick look at what it is.

Druid is a database connection pool, widely regarded as one of the best available. Developers favor it for its features, performance, and extensibility.

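Druid was developed at Alibaba and is billed as "a connection pool built for monitoring". It has been deployed in more than 600 Alibaba applications and has been through more than a year of demanding, large-scale production use.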

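Druid is also more than a connection pool. Its core consists of three parts:

- A plugin system based on the Filter-Chain pattern.
- DruidDataSource, an efficient and manageable database connection pool.
- SQLParser, an SQL parser.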

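Druid's main features:

- An efficient, powerful, and highly extensible database connection pool.
- Monitoring of database access performance.
- Database password encryption.
- SQL execution logging.
- Extending JDBC.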

That's enough introduction; see the official documentation for details.

Now let's look at how to use Druid.

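First, the Maven dependency. For Druid itself only one jar is needed (the MyBatis, PageHelper, and MySQL driver dependencies are assumed to be in the project already):

```xml
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>druid</artifactId>
    <version>1.1.8</version>
</dependency>
```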

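For configuration, you mainly need to add the following to application.properties or application.yml.

Note: because I'm using two data sources here, the layout differs slightly from a single-data-source setup. The Druid settings are explained in the comments below, so I won't repeat them here.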

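application.yml

```yaml
server:
  port: 8080
  servlet:
    context-path: /equaker

spring:
  # Primary (master) data source
  master:
    datasource:
      type: com.alibaba.druid.pool.DruidDataSource
      name: master
      url: jdbc:mysql://localhost:3306/wdz?useUnicode=true&characterEncoding=utf-8
      username: root
      password: 123456
      driver-class-name: com.mysql.jdbc.Driver
  # Secondary (cluster) data source
  cluster:
    datasource:
      type: com.alibaba.druid.pool.DruidDataSource
      name: cluster
      url: jdbc:mysql://localhost:3306/yufu?useUnicode=true&characterEncoding=utf-8
      username: root
      password: 123456
      driver-class-name: com.mysql.jdbc.Driver
  datasource:
    druid:
      # Supplementary pool settings, applied to all of the data sources above
      # Initial size, minimum idle, and maximum active connections
      initialSize: 5
      minIdle: 5
      maxActive: 20
      # Maximum time to wait for a connection, in milliseconds
      maxWait: 60000
      # How often the evictor checks for idle connections to close, in milliseconds
      timeBetweenEvictionRunsMillis: 60000
      # Minimum time a connection must sit idle in the pool before it can be evicted, in milliseconds
      minEvictableIdleTimeMillis: 30000
      validationQuery: SELECT 1
      # In seconds; note that this key is not read by the config classes below, so it has no effect here
      validationQueryTimeout: 10000
      testWhileIdle: true
      testOnBorrow: false
      testOnReturn: false
      # Enable PSCache and set its size per connection
      poolPreparedStatements: true
      maxPoolPreparedStatementPerConnectionSize: 20
      filters: stat,wall
      # Enable mergeSql and slow-SQL logging through connectionProperties
      connectionProperties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000
      # Merge monitoring data from multiple DruidDataSources
      # (also not read by the config classes below)
      useGlobalDataSourceStat: true

mybatis:
  # The mapper XML files are already set in MasterDataSourceConfig/ClusterDataSourceConfig, so no need to configure them here
  # mapper-locations: classpath:master/*Mapper.xml
  # Note: mybatis.* settings are consumed by MyBatis's auto-configured SqlSessionFactory;
  # with the custom factories defined below they are likely ignored, so set them on each SqlSessionFactoryBean if you rely on them
  type-aliases-package: com.equaker.model
  configuration:
    database-id: db1

# pagehelper
pagehelper:
  helperDialect: mysql
  reasonable: true
  supportMethodsArguments: true
  params: count=countSql

logging:
  level:
    com.equaker.mapper: debug
```

With the configuration file in place, we can now write the Druid-related classes.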
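Before moving on to the classes, here is a toy model of how minIdle, minEvictableIdleTimeMillis, and timeBetweenEvictionRunsMillis from the druid block interact. It is a simplified sketch under stated assumptions, not Druid's actual shrink code. Every timeBetweenEvictionRunsMillis (60 s here), the evictor may close connections that have been idle for at least minEvictableIdleTimeMillis (30 s here). It never closes so many that fewer than minIdle (5) remain. `EvictionSketch` and `evictableCount` are hypothetical names.

```java
/**
 * Toy model of one idle-connection eviction run.
 * Hypothetical and simplified; this is not Druid's actual shrink code.
 */
public class EvictionSketch {

    /**
     * Returns how many connections one eviction run would close.
     *
     * @param idleMillis idle time of each pooled connection, longest-idle first
     */
    public static int evictableCount(long[] idleMillis, int minIdle, long minEvictableIdleTimeMillis) {
        // Never shrink below minIdle pooled connections.
        int maxToEvict = Math.max(0, idleMillis.length - minIdle);
        int evicted = 0;
        for (long idle : idleMillis) {
            if (evicted >= maxToEvict) {
                break;
            }
            // Only connections idle for at least minEvictableIdleTimeMillis qualify.
            if (idle >= minEvictableIdleTimeMillis) {
                evicted++;
            }
        }
        return evicted;
    }

    public static void main(String[] args) {
        // Values from application.yml: minIdle = 5, minEvictableIdleTimeMillis = 30000
        long[] idle = {90_000, 75_000, 61_000, 45_000, 31_000, 12_000, 5_000, 1_000};
        // Five connections are old enough, but only 8 - 5 = 3 may be closed.
        System.out.println(evictableCount(idle, 5, 30_000)); // prints 3
    }
}
```

The check only runs once per timeBetweenEvictionRunsMillis. An idle connection can therefore linger for roughly 30 s + 60 s before it is actually closed.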

First comes MasterDataSourceConfig.java, the configuration class for the default (primary) data source.

MasterDataSourceConfig.java

```java
package com.equaker.druid;

import com.alibaba.druid.pool.DruidDataSource;
import org.apache.ibatis.session.SqlSessionFactory;
import org.mybatis.spring.SqlSessionFactoryBean;
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;
import org.springframework.core.io.support.PathMatchingResourcePatternResolver;
import org.springframework.jdbc.datasource.DataSourceTransactionManager;

import javax.sql.DataSource;
import java.sql.SQLException;

/**
 * Primary (master) data source.
 * Mapper interfaces under PACKAGE are bound to masterSqlSessionFactory.
 */
@Configuration
@MapperScan(basePackages = MasterDataSourceConfig.PACKAGE, sqlSessionFactoryRef = "masterSqlSessionFactory")
public class MasterDataSourceConfig {

    static final String PACKAGE = "com.equaker.mapper.master";
    static final String MAPPER_LOCATION = "classpath:mapper/master/*.xml";

    // Connection settings specific to the master data source
    @Value("${spring.master.datasource.url}")
    private String url;
    @Value("${spring.master.datasource.username}")
    private String username;
    @Value("${spring.master.datasource.password}")
    private String password;
    @Value("${spring.master.datasource.driver-class-name}")
    private String driverClassName;
    @Value("${spring.master.datasource.name}")
    private String name;

    // Pool settings shared by all data sources (spring.datasource.druid.*)
    @Value("${spring.datasource.druid.initialSize}")
    private int initialSize;
    @Value("${spring.datasource.druid.minIdle}")
    private int minIdle;
    @Value("${spring.datasource.druid.maxActive}")
    private int maxActive;
    @Value("${spring.datasource.druid.maxWait}")
    private int maxWait;
    @Value("${spring.datasource.druid.timeBetweenEvictionRunsMillis}")
    private int timeBetweenEvictionRunsMillis;
    @Value("${spring.datasource.druid.minEvictableIdleTimeMillis}")
    private int minEvictableIdleTimeMillis;
    @Value("${spring.datasource.druid.validationQuery}")
    private String validationQuery;
    @Value("${spring.datasource.druid.testWhileIdle}")
    private boolean testWhileIdle;
    @Value("${spring.datasource.druid.testOnBorrow}")
    private boolean testOnBorrow;
    @Value("${spring.datasource.druid.testOnReturn}")
    private boolean testOnReturn;
    @Value("${spring.datasource.druid.poolPreparedStatements}")
    private boolean poolPreparedStatements;
    @Value("${spring.datasource.druid.maxPoolPreparedStatementPerConnectionSize}")
    private int maxPoolPreparedStatementPerConnectionSize;
    @Value("${spring.datasource.druid.filters}")
    private String filters;
    // The leading "$" is required; without it Spring injects the literal text instead of the YAML value
    @Value("${spring.datasource.druid.connectionProperties}")
    private String connectionProperties;

    @Bean(name = "masterDataSource")
    @Primary
    public DataSource masterDataSource() {
        DruidDataSource dataSource = new DruidDataSource();
        dataSource.setName(name);
        dataSource.setUrl(url);
        dataSource.setUsername(username);
        dataSource.setPassword(password);
        dataSource.setDriverClassName(driverClassName);
        // Pool settings
        dataSource.setInitialSize(initialSize);
        dataSource.setMinIdle(minIdle);
        dataSource.setMaxActive(maxActive);
        dataSource.setMaxWait(maxWait);
        dataSource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);
        dataSource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);
        dataSource.setValidationQuery(validationQuery);
        dataSource.setTestWhileIdle(testWhileIdle);
        dataSource.setTestOnBorrow(testOnBorrow);
        dataSource.setTestOnReturn(testOnReturn);
        dataSource.setPoolPreparedStatements(poolPreparedStatements);
        dataSource.setMaxPoolPreparedStatementPerConnectionSize(maxPoolPreparedStatementPerConnectionSize);
        try {
            dataSource.setFilters(filters);
        } catch (SQLException e) {
            // Fail fast instead of silently starting without the stat/wall filters
            throw new IllegalStateException("Invalid Druid filters: " + filters, e);
        }
        dataSource.setConnectionProperties(connectionProperties);
        return dataSource;
    }

    @Bean(name = "masterTransactionManager")
    @Primary
    public DataSourceTransactionManager masterTransactionManager() {
        // @Configuration classes are proxied, so this call returns the masterDataSource singleton
        return new DataSourceTransactionManager(masterDataSource());
    }

    @Bean(name = "masterSqlSessionFactory")
    @Primary
    public SqlSessionFactory masterSqlSessionFactory(@Qualifier("masterDataSource") DataSource masterDataSource)
            throws Exception {
        final SqlSessionFactoryBean sessionFactory = new SqlSessionFactoryBean();
        sessionFactory.setDataSource(masterDataSource);
        // Load only this data source's mapper XML files
        sessionFactory.setMapperLocations(new PathMatchingResourcePatternResolver()
                .getResources(MasterDataSourceConfig.MAPPER_LOCATION));
        return sessionFactory.getObject();
    }
}
```
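The connectionProperties field is injected as one string, `druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000`, and handed to Druid, which treats it as `;`-separated `key=value` pairs. The placeholder must keep its leading `$`. Written as `@Value("{spring.datasource.druid.connectionProperties}")`, Spring injects that literal text instead, and the stat settings are silently lost. The sketch below shows both cases. It assumes the `key=value;key=value` format and mirrors it, but it is not Druid's own parsing code; `ConnectionPropertiesSketch` is a hypothetical name.

```java
import java.util.Properties;

/**
 * Sketch of splitting a "k1=v1;k2=v2" connectionProperties string into Properties.
 * Hypothetical helper that mirrors the format Druid expects; not Druid's code.
 */
public class ConnectionPropertiesSketch {

    public static Properties parse(String connectionProperties) {
        Properties props = new Properties();
        if (connectionProperties == null || connectionProperties.trim().isEmpty()) {
            return props;
        }
        for (String entry : connectionProperties.split(";")) {
            if (entry.isEmpty()) {
                continue;
            }
            int pos = entry.indexOf('=');
            if (pos > 0) {
                props.setProperty(entry.substring(0, pos), entry.substring(pos + 1));
            } else {
                // No "=" (or nothing before it): the whole entry becomes a key with an empty value.
                props.setProperty(entry, "");
            }
        }
        return props;
    }

    public static void main(String[] args) {
        Properties ok = parse("druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000");
        System.out.println(ok.getProperty("druid.stat.slowSqlMillis")); // 5000

        // What @Value injects when the "$" is missing: a literal string, so no stat settings.
        Properties broken = parse("{spring.datasource.druid.connectionProperties}");
        System.out.println(broken.getProperty("druid.stat.mergeSql")); // null
    }
}
```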

ClusterDataSourceConfig.java

```java
package com.equaker.druid;

import com.alibaba.druid.pool.DruidDataSource;
import org.apache.ibatis.session.SqlSessionFactory;
import org.mybatis.spring.SqlSessionFactoryBean;
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.io.support.PathMatchingResourcePatternResolver;
import org.springframework.jdbc.datasource.DataSourceTransactionManager;

import javax.sql.DataSource;
import java.sql.SQLException;

/**
 * Secondary (cluster) data source. No @Primary here: the master beans take precedence.
 * Mapper interfaces under PACKAGE are bound to clusterSqlSessionFactory.
 */
@Configuration
@MapperScan(basePackages = ClusterDataSourceConfig.PACKAGE, sqlSessionFactoryRef = "clusterSqlSessionFactory")
public class ClusterDataSourceConfig {

    static final String PACKAGE = "com.equaker.mapper.cluster";
    static final String MAPPER_LOCATION = "classpath:mapper/cluster/*.xml";

    // Connection settings specific to the cluster data source
    @Value("${spring.cluster.datasource.url}")
    private String url;
    @Value("${spring.cluster.datasource.username}")
    private String username;
    @Value("${spring.cluster.datasource.password}")
    private String password;
    @Value("${spring.cluster.datasource.driver-class-name}")
    private String driverClassName;
    @Value("${spring.cluster.datasource.name}")
    private String name;

    // Pool settings shared by all data sources (spring.datasource.druid.*)
    @Value("${spring.datasource.druid.initialSize}")
    private int initialSize;
    @Value("${spring.datasource.druid.minIdle}")
    private int minIdle;
    @Value("${spring.datasource.druid.maxActive}")
    private int maxActive;
    @Value("${spring.datasource.druid.maxWait}")
    private int maxWait;
    @Value("${spring.datasource.druid.timeBetweenEvictionRunsMillis}")
    private int timeBetweenEvictionRunsMillis;
    @Value("${spring.datasource.druid.minEvictableIdleTimeMillis}")
    private int minEvictableIdleTimeMillis;
    @Value("${spring.datasource.druid.validationQuery}")
    private String validationQuery;
    @Value("${spring.datasource.druid.testWhileIdle}")
    private boolean testWhileIdle;
    @Value("${spring.datasource.druid.testOnBorrow}")
    private boolean testOnBorrow;
    @Value("${spring.datasource.druid.testOnReturn}")
    private boolean testOnReturn;
    @Value("${spring.datasource.druid.poolPreparedStatements}")
    private boolean poolPreparedStatements;
    @Value("${spring.datasource.druid.maxPoolPreparedStatementPerConnectionSize}")
    private int maxPoolPreparedStatementPerConnectionSize;
    @Value("${spring.datasource.druid.filters}")
    private String filters;
    // The leading "$" is required; without it Spring injects the literal text instead of the YAML value
    @Value("${spring.datasource.druid.connectionProperties}")
    private String connectionProperties;

    @Bean(name = "clusterDataSource")
    public DataSource clusterDataSource() {
        DruidDataSource dataSource = new DruidDataSource();
        dataSource.setName(name);
        dataSource.setUrl(url);
        dataSource.setUsername(username);
        dataSource.setPassword(password);
        dataSource.setDriverClassName(driverClassName);
        // Pool settings
        dataSource.setInitialSize(initialSize);
        dataSource.setMinIdle(minIdle);
        dataSource.setMaxActive(maxActive);
        dataSource.setMaxWait(maxWait);
        dataSource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);
        dataSource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);
        dataSource.setValidationQuery(validationQuery);
        dataSource.setTestWhileIdle(testWhileIdle);
        dataSource.setTestOnBorrow(testOnBorrow);
        dataSource.setTestOnReturn(testOnReturn);
        dataSource.setPoolPreparedStatements(poolPreparedStatements);
        dataSource.setMaxPoolPreparedStatementPerConnectionSize(maxPoolPreparedStatementPerConnectionSize);
        try {
            dataSource.setFilters(filters);
        } catch (SQLException e) {
            // Fail fast instead of silently starting without the stat/wall filters
            throw new IllegalStateException("Invalid Druid filters: " + filters, e);
        }
        dataSource.setConnectionProperties(connectionProperties);
        return dataSource;
    }

    @Bean(name = "clusterTransactionManager")
    public DataSourceTransactionManager clusterTransactionManager() {
        // @Configuration classes are proxied, so this call returns the clusterDataSource singleton
        return new DataSourceTransactionManager(clusterDataSource());
    }

    @Bean(name = "clusterSqlSessionFactory")
    public SqlSessionFactory clusterSqlSessionFactory(@Qualifier("clusterDataSource") DataSource clusterDataSource)
            throws Exception {
        final SqlSessionFactoryBean sessionFactory = new SqlSessionFactoryBean();
        sessionFactory.setDataSource(clusterDataSource);
        // Load only this data source's mapper XML files
        sessionFactory.setMapperLocations(new PathMatchingResourcePatternResolver()
                .getResources(ClusterDataSourceConfig.MAPPER_LOCATION));
        return sessionFactory.getObject();
    }
}
```
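Both classes give their pools maxActive=20 and maxWait=60000. As a rough mental model, a bounded pool behaves like a fair semaphore with maxActive permits. Once all permits are taken, a caller waits up to maxWait milliseconds and then gives up; in Druid that failure surfaces as an SQLException from getConnection(). This is not Druid's implementation, which uses its own locking. `BoundedPoolSketch` is a hypothetical name, and the "connections" are just permits.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

/**
 * Toy model of maxActive and maxWait (hypothetical; Druid uses its own locks, not a Semaphore).
 */
public class BoundedPoolSketch {

    private final Semaphore permits;
    private final long maxWaitMillis;

    public BoundedPoolSketch(int maxActive, long maxWaitMillis) {
        // Fair semaphore: waiting callers are served in arrival order.
        this.permits = new Semaphore(maxActive, true);
        this.maxWaitMillis = maxWaitMillis;
    }

    /** Tries to take a "connection"; returns false if none frees up within maxWait. */
    public boolean borrow() {
        try {
            return permits.tryAcquire(maxWaitMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    /** Returns a previously borrowed "connection" to the pool. */
    public void giveBack() {
        permits.release();
    }

    public static void main(String[] args) {
        // Small values so the demo is quick; application.yml uses maxActive = 20, maxWait = 60000.
        BoundedPoolSketch pool = new BoundedPoolSketch(2, 100);
        System.out.println(pool.borrow()); // true
        System.out.println(pool.borrow()); // true
        System.out.println(pool.borrow()); // false, after waiting about 100 ms
        pool.giveBack();
        System.out.println(pool.borrow()); // true
    }
}
```

Each DruidDataSource has its own pool. With the shared settings above, the two data sources together may therefore open up to 40 connections.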

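A note on two of the annotations used above:

- @Primary: when several beans of the same type are candidates, the one marked @Primary is preferred. With multiple data sources, mark exactly one of them as the primary data source with @Primary; otherwise injection points that expect a single DataSource cannot decide which one to use.
- @MapperScan: scans the mapper interfaces in the given package and registers them as Spring beans. Its sqlSessionFactoryRef attribute names the SqlSessionFactory bean those mappers use. This is what ties each mapper package to its own data source.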
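To make the role of sqlSessionFactoryRef concrete, here is a toy lookup table that mirrors the two @MapperScan declarations. It is hypothetical and not MyBatis-Spring code. A mapper interface is bound to the SqlSessionFactory of the package it lives in. That is why EQMapper ends up on the wdz database and UserMapper on yufu.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Toy model of the two @MapperScan declarations: basePackages -> sqlSessionFactoryRef.
 * Hypothetical illustration only; not MyBatis-Spring's scanning code.
 */
public class MapperRoutingSketch {

    static final Map<String, String> SCANS = new LinkedHashMap<>();

    static {
        SCANS.put("com.equaker.mapper.master", "masterSqlSessionFactory");
        SCANS.put("com.equaker.mapper.cluster", "clusterSqlSessionFactory");
    }

    /** Returns the SqlSessionFactory bean a mapper interface would use, or null if it is not scanned. */
    public static String factoryFor(String mapperClassName) {
        for (Map.Entry<String, String> scan : SCANS.entrySet()) {
            // Match the package or a sub-package, not a sibling such as "...masterx".
            if (mapperClassName.startsWith(scan.getKey() + ".")) {
                return scan.getValue();
            }
        }
        return null;
    }

    public static void main(String[] args) {
        System.out.println(factoryFor("com.equaker.mapper.master.EQMapper"));    // masterSqlSessionFactory
        System.out.println(factoryFor("com.equaker.mapper.cluster.UserMapper")); // clusterSqlSessionFactory
    }
}
```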

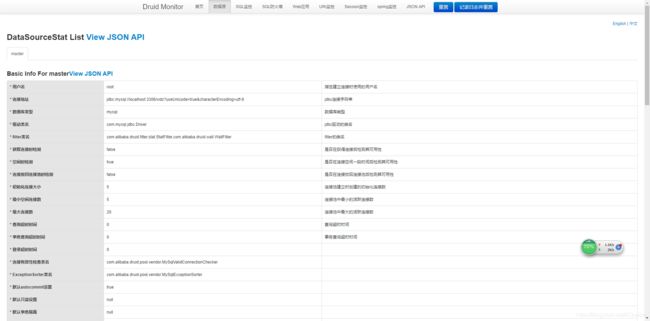

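With the configuration above, Druid can already be used as a connection pool. But Druid is more than a pool: it is also a monitoring tool, with a built-in web console that clearly shows SQL-related statistics.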
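The SQL statistics become much more useful with `druid.stat.mergeSql=true`, which the connectionProperties setting above turns on. Statements that differ only in their literal values are then counted as a single entry. The sketch below illustrates that idea with two regular expressions. It is a simplification: Druid normalizes SQL with its own parser, and these regexes ignore comments and other edge cases. `MergeSqlSketch` is a hypothetical name.

```java
import java.util.regex.Pattern;

/**
 * Crude illustration of SQL merging: replace literals with '?' so similar statements share a key.
 * Hypothetical; Druid does this with its SQL parser, not with regexes.
 */
public class MergeSqlSketch {

    // Single-quoted string literal; '' inside the quotes is an escaped quote.
    private static final Pattern STRING_LITERAL = Pattern.compile("'(?:[^']|'')*'");
    // Standalone integer or decimal (digits inside identifiers such as t1 are left alone).
    private static final Pattern NUMBER_LITERAL = Pattern.compile("\\b\\d+(?:\\.\\d+)?\\b");

    /** Replaces literals with '?' and collapses whitespace. */
    public static String normalize(String sql) {
        String s = STRING_LITERAL.matcher(sql).replaceAll("?");
        s = NUMBER_LITERAL.matcher(s).replaceAll("?");
        return s.trim().replaceAll("\\s+", " ");
    }

    public static void main(String[] args) {
        System.out.println(normalize("SELECT * FROM user WHERE id = 1"));
        System.out.println(normalize("SELECT * FROM user WHERE id = 42"));
        // both print: SELECT * FROM user WHERE id = ?
    }
}
```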

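To use Druid's monitoring in Spring Boot, you only need to register a StatViewServlet and a WebStatFilter, which set up the console and the request-filtering rules. Both can be declared in one class with @Configuration and @Bean.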

To make it easier to follow, the relevant settings are explained in the code comments, so I won't repeat them here.

The code is as follows:

DruidConfiguration.java

```java
package com.equaker.druid;

import com.alibaba.druid.support.http.StatViewServlet;
import com.alibaba.druid.support.http.WebStatFilter;
import org.springframework.boot.web.servlet.FilterRegistrationBean;
import org.springframework.boot.web.servlet.ServletRegistrationBean;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * Web console for monitoring the Druid data sources.
 */
@Configuration
public class DruidConfiguration {

    @Bean
    public ServletRegistrationBean<StatViewServlet> druidStatViewServlet() {
        // Register the console servlet under /druid/*
        ServletRegistrationBean<StatViewServlet> servletRegistrationBean =
                new ServletRegistrationBean<>(new StatViewServlet(), "/druid/*");
        // IP whitelist (empty means everyone may access; separate multiple IPs with commas)
        servletRegistrationBean.addInitParameter("allow", "127.0.0.1");
        // IP blacklist (if an IP appears in both lists, deny takes precedence over allow)
        servletRegistrationBean.addInitParameter("deny", "127.0.0.2");
        // Console login username and password
        servletRegistrationBean.addInitParameter("loginUsername", "equaker");
        servletRegistrationBean.addInitParameter("loginPassword", "123456");
        // Whether the statistics can be reset from the console
        servletRegistrationBean.addInitParameter("resetEnable", "false");
        return servletRegistrationBean;
    }

    @Bean
    public FilterRegistrationBean<WebStatFilter> druidStatFilter() {
        FilterRegistrationBean<WebStatFilter> filterRegistrationBean =
                new FilterRegistrationBean<>(new WebStatFilter());
        // Collect web request statistics for every URL
        filterRegistrationBean.addUrlPatterns("/*");
        // Requests that should NOT be counted (static resources and the console itself)
        filterRegistrationBean.addInitParameter("exclusions",
                "*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*");
        System.out.println("Druid initialized successfully!");
        return filterRegistrationBean;
    }
}
```
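The exclusions parameter keeps static files and the console's own pages out of the web statistics. Here is a sketch of how such patterns can be matched, assuming these semantics: a leading `*` means "ends with", a trailing `*` means "starts with", and anything else must match exactly. It also assumes the context path (`/equaker` here) is stripped before matching. This reflects our understanding of WebStatFilter, not its source; `ExclusionSketch` is a hypothetical name.

```java
/**
 * Sketch of exclusion-pattern matching for request URIs (hypothetical; assumed semantics).
 */
public class ExclusionSketch {

    public static boolean isExcluded(String requestUri, String contextPath, String exclusions) {
        String path = requestUri;
        // Patterns are relative to the application, so drop the context path first.
        if (contextPath != null && !contextPath.isEmpty() && path.startsWith(contextPath)) {
            path = path.substring(contextPath.length());
            if (!path.startsWith("/")) {
                path = "/" + path;
            }
        }
        for (String raw : exclusions.split(",")) {
            String pattern = raw.trim();
            if (pattern.isEmpty()) {
                continue;
            }
            if (pattern.startsWith("*")) {
                // "*.js": suffix match
                if (path.endsWith(pattern.substring(1))) {
                    return true;
                }
            } else if (pattern.endsWith("*")) {
                // "/druid/*": prefix match
                if (path.startsWith(pattern.substring(0, pattern.length() - 1))) {
                    return true;
                }
            } else if (path.equals(pattern)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String exclusions = "*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*";
        System.out.println(isExcluded("/equaker/druid/index.html", "/equaker", exclusions)); // true
        System.out.println(isExcluded("/equaker/demo/save", "/equaker", exclusions));        // false
    }
}
```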

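Once this is written, start the application and open http://127.0.0.1:8080/equaker/druid/index.html in a browser (the port and context path come from application.yml). Log in with the username and password set above to reach the web console.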
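Whether the console opens at all depends on the allow and deny parameters set in DruidConfiguration. Below is a simplified model of the rule stated in its comments: deny beats allow, and an empty allow list admits every address that is not denied. It assumes exact IP matches only; Druid's own matching may also accept address ranges, which this hypothetical `IpAccessSketch` does not handle. With allow set to 127.0.0.1, the console is reachable only from the machine running the application.

```java
import java.util.HashSet;
import java.util.Set;

/**
 * Simplified model of the StatViewServlet allow/deny rule (hypothetical; exact IPs only).
 */
public class IpAccessSketch {

    private static Set<String> parseList(String csv) {
        Set<String> ips = new HashSet<>();
        if (csv == null) {
            return ips;
        }
        for (String ip : csv.split(",")) {
            if (!ip.trim().isEmpty()) {
                ips.add(ip.trim());
            }
        }
        return ips;
    }

    public static boolean isPermitted(String remoteIp, String allow, String deny) {
        // deny wins when an address appears in both lists
        if (parseList(deny).contains(remoteIp)) {
            return false;
        }
        Set<String> allowed = parseList(allow);
        // an empty allow list admits every address that is not denied
        return allowed.isEmpty() || allowed.contains(remoteIp);
    }

    public static void main(String[] args) {
        System.out.println(isPermitted("127.0.0.1", "127.0.0.1", "127.0.0.2"));    // true
        System.out.println(isPermitted("192.168.1.10", "127.0.0.1", "127.0.0.2")); // false
    }
}
```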

The console looks like this:

DemoController.java:

```java
package com.equaker.controller;

import com.equaker.mapper.cluster.UserMapper;
import com.equaker.mapper.master.EQMapper;
import com.equaker.model.EQ;
import com.equaker.model.User;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Controller;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.ResponseBody;

import java.math.BigDecimal;
import java.util.Date;
import java.util.HashMap;
import java.util.Map;

@Controller
@RequestMapping("demo")
public class DemoController {

    @Autowired
    private EQMapper eqMapper;

    @Autowired
    private UserMapper userMapper;

    @RequestMapping("/save")
    @ResponseBody
    public EQ save(){
        EQ eq = new EQ();
        eq.setcAmount(new BigDecimal(10));
        eq.setcCardno("1");
        eq.setcDatetime(new Date());
        eq.setcGcode("1");
        eq.setcGname("sdq");
        eq.setcId("1");
        eq.setcQtty(new BigDecimal(10));
        eq.setcSname("ss");
        eq.setcStoreId("1");
        eqMapper.insert(eq);
        return eq;
    }

    @RequestMapping("/saveUser")
    @ResponseBody
    public User saveUser(){
        User user = new User();
        user.setName("sdq");
        user.setAge(23);
        user.setCreateTime(new Date());
        user.setUpdateTime(new Date());
        userMapper.save(user);
        return user;
    }
}
```

Startup class:

Because the data sources are configured manually, Spring Boot's default data-source auto-configuration is excluded in the startup class.

```java
package com.equaker.fhcrm;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.autoconfigure.jdbc.DataSourceAutoConfiguration;
import org.springframework.context.annotation.ComponentScan;

@SpringBootApplication(exclude = {DataSourceAutoConfiguration.class})
// The application class lives in com.equaker.fhcrm, so scan the whole com.equaker tree
@ComponentScan(basePackages = {"com.equaker"})
// Not needed: each data source config class declares its own @MapperScan
//@MapperScan(basePackages = {"com.equaker.mapper"})
public class FhCrmApplication {

    public static void main(String[] args) {
        SpringApplication.run(FhCrmApplication.class, args);
    }
}
```

Visit http://localhost:8080/equaker/demo/save: the master data source is initialized and used. OK.

Visit http://localhost:8080/equaker/demo/saveUser: the cluster data source is initialized and used. OK.

Attention! Attention! Attention!

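1. Each data source configuration class already specifies its mapper interfaces and mapper XML files. You therefore do not need @MapperScan on the startup class, and you do not need to set the mapper XML location in application.yml. Otherwise you will get warnings like these: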

```
2018-12-17 11:06:14.294 WARN 36624 --- [ main] o.m.s.mapper.ClassPathMapperScanner : Skipping MapperFactoryBean with name 'userMapper' and 'com.equaker.mapper.cluster.UserMapper' mapperInterface. Bean already defined with the same name!
2018-12-17 11:06:14.294 WARN 36624 --- [ main] o.m.s.mapper.ClassPathMapperScanner : Skipping MapperFactoryBean with name 'EQMapper' and 'com.equaker.mapper.master.EQMapper' mapperInterface. Bean already defined with the same name!
2018-12-17 11:06:14.294 WARN 36624 --- [ main] o.m.s.mapper.ClassPathMapperScanner : No MyBatis mapper was found in '[com.equaker.mapper]' package. Please check your configuration.
```

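2. To configure multiple data sources with MyBatis-Plus, change this line where the session factory is built:

```java
final SqlSessionFactoryBean sessionFactory = new SqlSessionFactoryBean();
```

to:

```java
final MybatisSqlSessionFactoryBean sessionFactory = new MybatisSqlSessionFactoryBean();
```

That's it, and you can see why: MybatisSqlSessionFactoryBean builds MyBatis-Plus's own configuration, which is what registers the built-in BaseMapper methods.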
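The warnings in point 1 come from overlapping scans. A `@MapperScan("com.equaker.mapper")` on the startup class covers the same interfaces as the two per-data-source scans, so MyBatis-Spring skips the duplicates. Here is a small check for that situation, assuming you list every base package by hand. `ScanOverlapSketch` is a hypothetical helper, not part of MyBatis.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/**
 * Flags @MapperScan base packages where one scan would also cover the other (hypothetical helper).
 */
public class ScanOverlapSketch {

    /** True if child is parent itself or one of its sub-packages. */
    public static boolean contains(String parent, String child) {
        return child.equals(parent) || child.startsWith(parent + ".");
    }

    /** Lists every overlapping pair of base packages. */
    public static List<String> overlaps(List<String> basePackages) {
        List<String> found = new ArrayList<>();
        for (int i = 0; i < basePackages.size(); i++) {
            for (int j = i + 1; j < basePackages.size(); j++) {
                String a = basePackages.get(i);
                String b = basePackages.get(j);
                if (contains(a, b) || contains(b, a)) {
                    found.add(a + " <-> " + b);
                }
            }
        }
        return found;
    }

    public static void main(String[] args) {
        // The two config classes plus the commented-out @MapperScan on the startup class.
        List<String> scans = Arrays.asList(
                "com.equaker.mapper.master", "com.equaker.mapper.cluster", "com.equaker.mapper");
        overlaps(scans).forEach(System.out::println);
        // com.equaker.mapper.master <-> com.equaker.mapper
        // com.equaker.mapper.cluster <-> com.equaker.mapper
    }
}
```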