Decoding video frames to JPG images with FFmpeg and saving them locally

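I once had a requirement to decode a video into one image per second so that the user could swipe to pick a point in time, like the editing screen for posting a 10-second video to WeChat Moments. At first I used the MediaMetadataRetriever class to grab the images, but for high-resolution video (1920×1080) each image took 0.7 to 0.8 seconds, which was far too slow. Next I batch-decoded the video into individual frames with FFmpeg commands, but that was still slow, at about 0.5 seconds per image. In the end I stayed with FFmpeg, but called it through the NDK instead of the command line: decoding the video in C and converting the frames to images turned out to be much faster, at around 4 to 5 images per second. C really is fast. Here is a write-up.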

Source code:

http://download.csdn.net/download/qq_28284547/10031785

GitHub: https://github.com/kui92/FFmpegTools

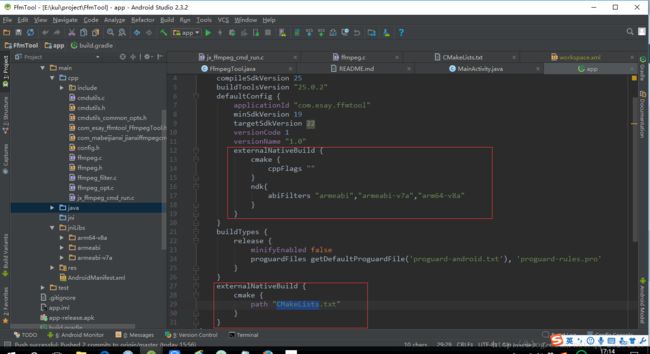

Create the project, and make sure to tick the option to include C++ support.

I found prebuilt FFmpeg libraries online and copied them straight into my project.

CMakeLists.txt:

```cmake
cmake_minimum_required(VERSION 3.4.1)

include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/include)

add_library( # Sets the name of the library.
             jxffmpegrun

             # Sets the library as a shared library.
             SHARED

             # Provides a relative path to your source file(s).
             src/main/cpp/cmdutils.c
             src/main/cpp/ffmpeg.c
             src/main/cpp/ffmpeg_filter.c
             src/main/cpp/ffmpeg_opt.c
             src/main/cpp/jx_ffmpeg_cmd_run.c )

# Prebuilt FFmpeg libraries copied into src/main/jniLibs/<ABI>/
add_library(avcodec SHARED IMPORTED)
add_library(avfilter SHARED IMPORTED)
add_library(avformat SHARED IMPORTED)
add_library(avutil SHARED IMPORTED)
add_library(swresample SHARED IMPORTED)
add_library(swscale SHARED IMPORTED)
add_library(fdk-aac SHARED IMPORTED)

set_target_properties(avcodec PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libavcodec.so)
set_target_properties(avfilter PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libavfilter.so)
set_target_properties(avformat PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libavformat.so)
set_target_properties(avutil PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libavutil.so)
set_target_properties(swresample PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libswresample.so)
set_target_properties(swscale PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libswscale.so)
set_target_properties(fdk-aac PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libfdk-aac.so)

target_link_libraries( # Specifies the target library.
                       jxffmpegrun
                       # NDK system libraries; log provides __android_log_print
                       log
                       android
                       fdk-aac
                       avcodec
                       avfilter
                       avformat
                       avutil
                       swresample
                       swscale )
```

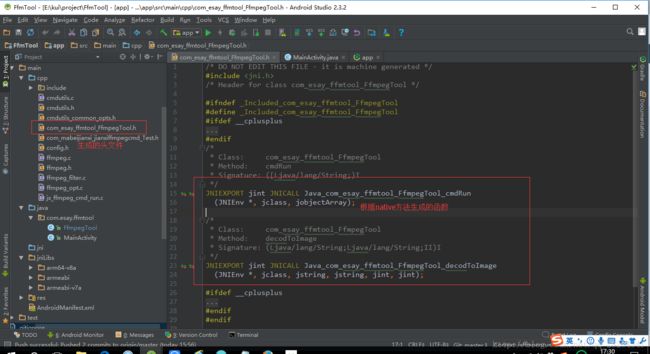

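Create the FfmpegTool class, declare the native method in it, and generate the JNI header from it (if you haven't generated one before, it is easy to look up; `javah`, or `javac -h` on JDK 8 and later, does the job). The C entry point receives a `jclass`, so the Java method is static; a matching declaration in package `com.esay.ffmtool` would be `public static native int decodToImage(String in, String dir, int startTime, int num);`.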
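The C function name has to match the Java side exactly: for the short form, JNI looks up `Java_`, then the fully qualified class name with `.` replaced by `_`, then `_` and the method name, which is how `com.esay.ffmtool.FfmpegTool.decodToImage` becomes `Java_com_esay_ffmtool_FfmpegTool_decodToImage`. The sketch below reproduces that mangling in plain C so you can check a name before a `UnsatisfiedLinkError` does it for you. `jni_symbol_name` is a hypothetical helper for illustration; it assumes ASCII names and escapes only `.`, `/` and `_` (as `_1`), not the other escapes (`;`, `[`, non-ASCII) or the long form used for overloaded methods.

```c
#include <assert.h>
#include <string.h>

/* Appends s to out (current length *len, capacity cap). Returns -1 if it does not fit. */
static int append_raw(char *out, size_t cap, size_t *len, const char *s) {
    size_t n = strlen(s);
    if (*len + n + 1 > cap) {
        return -1;
    }
    memcpy(out + *len, s, n + 1); /* copies the terminating '\0' too */
    *len += n;
    return 0;
}

/* Appends name with JNI short-name escaping: '.' and '/' become '_',
 * a literal '_' becomes "_1". Other JNI escapes are NOT handled. */
static int append_mangled(char *out, size_t cap, size_t *len, const char *name) {
    char single[2] = {0, 0};
    for (const char *p = name; *p != '\0'; ++p) {
        const char *piece;
        if (*p == '.' || *p == '/') {
            piece = "_";
        } else if (*p == '_') {
            piece = "_1";
        } else {
            single[0] = *p;
            piece = single;
        }
        if (append_raw(out, cap, len, piece) != 0) {
            return -1;
        }
    }
    return 0;
}

/* Hypothetical helper: writes "Java_<mangled class>_<mangled method>" into out.
 * Returns 0 on success, -1 if out (cap bytes) is too small. */
int jni_symbol_name(const char *class_name, const char *method, char *out, size_t cap) {
    size_t len = 0;
    assert(out != NULL && cap > 0);
    out[0] = '\0';
    if (append_raw(out, cap, &len, "Java_") != 0 ||
        append_mangled(out, cap, &len, class_name) != 0 ||
        append_raw(out, cap, &len, "_") != 0 ||
        append_mangled(out, cap, &len, method) != 0) {
        return -1;
    }
    return 0;
}
```

For example, `jni_symbol_name("com.esay.ffmtool.FfmpegTool", "decodToImage", buf, sizeof buf)` fills `buf` with the exact name used by the entry point in this article. An underscore in a package name is where hand-written names usually go wrong, since it must appear as `_1`.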

```c
// Implementation of the native method
#include <jni.h>
#include <stdio.h>
#include <android/log.h>
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include "com_esay_ffmtool_FfmpegTool.h"  // JNI header generated in the previous step

#define LOG_TAG "videoplayer"
#define LOGD(...) __android_log_print(ANDROID_LOG_DEBUG, LOG_TAG, __VA_ARGS__)

/**
 * Saves an AVFrame (YUV420) as a JPEG image named "<path>temp<iIndex>.jpg".
 * path is used as a plain prefix, so it must end with '/'.
 *
 * @param width  width of the YUV420 frame
 * @param height height of the YUV420 frame
 * @return 0 on success, -1 on failure
 */
int MyWriteJPEG(AVFrame *pFrame, const char *path, int width, int height, int iIndex) {
    int result = -1;
    int codec_opened = 0;
    AVCodecContext *pCodecCtx = NULL;
    AVCodec *pCodec = NULL;
    AVPacket pkt;
    char out_file[1000] = {0};  // output file path

    LOGD("path:%s", path);
    snprintf(out_file, sizeof(out_file), "%stemp%d.jpg", path, iIndex);
    LOGD("out_file:%s", out_file);

    // Allocate the output AVFormatContext
    AVFormatContext *pFormatCtx = avformat_alloc_context();
    if (pFormatCtx == NULL) {
        return -1;
    }
    // Use the MJPEG muxer: each encoded frame is a standalone JPEG
    pFormatCtx->oformat = av_guess_format("mjpeg", NULL, NULL);

    // Create and open an AVIOContext for the output file
    if (avio_open(&pFormatCtx->pb, out_file, AVIO_FLAG_WRITE) < 0) {
        LOGD("Couldn't open output file.");
        avformat_free_context(pFormatCtx);
        return -1;
    }

    // Create a new stream
    AVStream *pAVStream = avformat_new_stream(pFormatCtx, 0);
    if (pAVStream == NULL) {
        goto end;
    }

    // Describe the stream: MJPEG encoder, full-range YUV 4:2:0
    pCodecCtx = pAVStream->codec;
    pCodecCtx->codec_id = pFormatCtx->oformat->video_codec;
    pCodecCtx->codec_type = AVMEDIA_TYPE_VIDEO;
    pCodecCtx->pix_fmt = AV_PIX_FMT_YUVJ420P;
    pCodecCtx->width = width;
    pCodecCtx->height = height;
    pCodecCtx->time_base.num = 1;
    pCodecCtx->time_base.den = 25;

    // Dump the output format information (through av_log)
    av_dump_format(pFormatCtx, 0, out_file, 1);

    // Find the encoder
    pCodec = avcodec_find_encoder(pCodecCtx->codec_id);
    if (!pCodec) {
        LOGD("Codec not found.");
        goto end;
    }
    // Open pCodecCtx with the encoder pCodec
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        LOGD("Could not open codec.");
        goto end;
    }
    codec_opened = 1;

    // Write the header
    if (avformat_write_header(pFormatCtx, NULL) < 0) {
        goto end;
    }

    // Encode: give the AVPacket more than enough room for one JPEG
    int y_size = pCodecCtx->width * pCodecCtx->height;
    av_new_packet(&pkt, y_size * 3);
    int got_picture = 0;
    if (avcodec_encode_video2(pCodecCtx, &pkt, pFrame, &got_picture) < 0) {
        LOGD("Encode Error.\n");
        av_packet_unref(&pkt);
        goto end;
    }
    if (got_picture == 1) {
        pkt.stream_index = pAVStream->index;
        av_write_frame(pFormatCtx, &pkt);
    }
    av_packet_unref(&pkt);

    // Write the trailer
    av_write_trailer(pFormatCtx);
    LOGD("Encode Successful.\n");
    result = 0;

end:
    if (codec_opened) {
        avcodec_close(pCodecCtx);
    }
    avio_close(pFormatCtx->pb);
    avformat_free_context(pFormatCtx);
    return result;
}
```
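`MyWriteJPEG` builds the file name with a plain `"%stemp%d.jpg"` format, so the directory passed in from Java must already end with `/`; otherwise `/sdcard/frames` and index 3 produce `/sdcard/framestemp3.jpg`. If you would rather accept either form, a small sketch follows. `build_frame_path` is a hypothetical helper, not part of the original source; it adds the separator only when it is missing and reports truncation instead of silently cutting the name.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: writes "<dir>/temp<index>.jpg" into out.
 * Returns 0 on success, -1 if the result does not fit in cap bytes. */
int build_frame_path(char *out, size_t cap, const char *dir, int index) {
    assert(out != NULL && dir != NULL);
    size_t dir_len = strlen(dir);
    /* Add a separator only if dir is non-empty and has no trailing '/'. */
    const char *sep = (dir_len == 0 || dir[dir_len - 1] == '/') ? "" : "/";
    int n = snprintf(out, cap, "%s%stemp%d.jpg", dir, sep, index);
    /* snprintf returns the length it needed; n >= cap means the name was cut. */
    if (n < 0 || (size_t) n >= cap) {
        return -1;
    }
    return 0;
}
```

Inside `MyWriteJPEG`, a call like `build_frame_path(out_file, sizeof(out_file), path, iIndex)` could take the place of the `snprintf` line, returning early on `-1`.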

```c
/**
 * Entry point of the native method: starting at startTime seconds, saves
 * num frames (one per second) as JPEG files in the directory dir.
 */
JNIEXPORT jint JNICALL Java_com_esay_ffmtool_FfmpegTool_decodToImage
        (JNIEnv *env, jclass mclass, jstring in, jstring dir, jint startTime, jint num) {
    int result = -1;
    int codec_opened = 0;
    int videoStream = -1;
    int frameFinished = 0;
    int64_t count = startTime;
    const char *input = NULL;
    const char *parent = NULL;
    AVFormatContext *pFormatCtx = NULL;
    AVCodecContext *pCodecCtx = NULL;
    AVCodec *pCodec = NULL;
    AVFrame *pFrame = NULL;
    AVPacket packet;

    // Standard JNI string access (the original used a jstringTostring helper not shown here)
    input = (*env)->GetStringUTFChars(env, in, NULL);
    if (input == NULL) {
        goto end;
    }
    parent = (*env)->GetStringUTFChars(env, dir, NULL);
    if (parent == NULL) {
        goto end;
    }
    LOGD("input:%s", input);
    LOGD("parent:%s", parent);

    av_register_all();

    // Open video file (on failure FFmpeg frees the context and sets it to NULL)
    if (avformat_open_input(&pFormatCtx, input, NULL, NULL) != 0) {
        LOGD("Couldn't open file:%s\n", input);
        goto end;
    }
    // Retrieve stream information
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGD("Couldn't find stream information.");
        goto end;
    }

    // Find the first video stream
    for (unsigned int i = 0; i < pFormatCtx->nb_streams; i++) {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoStream = (int) i;
            break;
        }
    }
    if (videoStream == -1) {
        LOGD("Didn't find a video stream.");
        goto end;
    }

    // Get the codec context of the video stream and open its decoder
    pCodecCtx = pFormatCtx->streams[videoStream]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        LOGD("Codec not found.");
        goto end;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        LOGD("Could not open codec.");
        goto end;
    }
    codec_opened = 1;

    // Allocate video frame
    pFrame = av_frame_alloc();
    if (pFrame == NULL) {
        LOGD("Could not allocate video frame.");
        goto end;
    }

    LOGD("start_time:%lld", (long long) pFormatCtx->start_time);
    LOGD("sample_aspect_ratio:%d/%d",
         pFormatCtx->streams[videoStream]->sample_aspect_ratio.num,
         pFormatCtx->streams[videoStream]->sample_aspect_ratio.den);

    // With stream_index -1 the timestamp is in AV_TIME_BASE (microsecond) units
    av_seek_frame(pFormatCtx, -1, count * AV_TIME_BASE, AVSEEK_FLAG_FRAME);

    while (av_read_frame(pFormatCtx, &packet) >= 0) {
        if (packet.stream_index == videoStream) {
            // Decode video frame; a single packet does not always produce a frame
            avcodec_decode_video2(pCodecCtx, pFrame, &frameFinished, &packet);
            if (frameFinished) {
                if (count >= startTime + num) {
                    av_packet_unref(&packet);
                    LOGD("break:");
                    break;
                }
                MyWriteJPEG(pFrame, parent, pCodecCtx->width, pCodecCtx->height, (int) count);
                ++count;
                // Jump to the next second and drop frames buffered from the old position
                av_seek_frame(pFormatCtx, -1, count * AV_TIME_BASE, AVSEEK_FLAG_FRAME);
                avcodec_flush_buffers(pCodecCtx);
            }
        }
        av_packet_unref(&packet);
    }
    result = 0;

end:
    av_frame_free(&pFrame);             // Free the YUV frame
    if (codec_opened) {
        avcodec_close(pCodecCtx);       // Close the codec
    }
    avformat_close_input(&pFormatCtx);  // Close the video file
    if (parent != NULL) {
        (*env)->ReleaseStringUTFChars(env, dir, parent);
    }
    if (input != NULL) {
        (*env)->ReleaseStringUTFChars(env, in, input);
    }
    return result;
}
```
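Both `av_seek_frame` calls pass stream index `-1`, in which case FFmpeg expects the timestamp in `AV_TIME_BASE` units (microseconds), hence `count * AV_TIME_BASE`. If you seek on `videoStream` instead, the target has to be converted to that stream's `time_base`, which FFmpeg does with `av_rescale_q(ts, AV_TIME_BASE_Q, stream->time_base)`. The plain-C sketch below only illustrates that arithmetic; `Rational`, `rescale_ts` and `seek_target_us` are hypothetical stand-ins, and unlike `av_rescale_q` the sketch does not guard against overflow and accepts only non-negative timestamps.

```c
#include <assert.h>
#include <stdint.h>

/* Same value as FFmpeg's AV_TIME_BASE: 1,000,000 units per second. */
#define SKETCH_TIME_BASE 1000000

/* Hypothetical stand-in for AVRational: one unit lasts num / den seconds. */
typedef struct {
    int num;
    int den;
} Rational;

/* Seek target for the n-th saved frame (startTime + n seconds), in the
 * microsecond units av_seek_frame() expects when stream_index is -1. */
int64_t seek_target_us(int start_time_s, int n) {
    return ((int64_t) start_time_s + n) * SKETCH_TIME_BASE;
}

/* Converts ts from units of `from` to units of `to`, rounding to the
 * nearest integer (halves round up). Sketch only: no overflow checks,
 * and ts must be non-negative. */
int64_t rescale_ts(int64_t ts, Rational from, Rational to) {
    assert(ts >= 0 && from.num >= 0 && from.den > 0 && to.num > 0 && to.den > 0);
    int64_t num = ts * from.num * (int64_t) to.den;
    int64_t den = (int64_t) from.den * to.num;
    return (num + den / 2) / den;
}
```

For example, the target for the 6th frame with `startTime = 0` is `seek_target_us(0, 5)`, that is 5,000,000 µs; for a stream with a `time_base` of 1/90000 (common for MPEG-TS) the same instant is 450,000 ticks.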

Load the .so libraries by adding the following code at the top of the FfmpegTool class:

```java
static {
    System.loadLibrary("avutil");
    System.loadLibrary("fdk-aac");
    System.loadLibrary("avcodec");
    System.loadLibrary("avformat");
    System.loadLibrary("swscale");
    System.loadLibrary("swresample");
    System.loadLibrary("avfilter");
    System.loadLibrary("jxffmpegrun");
}
```

To test it, add a button to MainActivity that runs the method below. Note that FfmpegTool.decodToImage is blocking and can take a long time, so call it from a background thread.

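```java
public void click2(View view) {
    String path = "directory to save the images in (ending with '/')";
    String video = "path to the video";
    // decodToImage blocks, so run it off the UI thread
    new Thread(() -> FfmpegTool.decodToImage(video, path, 0, 60)).start();
}
```

Once again, the source code: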

http://download.csdn.net/download/qq_28284547/10031785

GitHub: https://github.com/kui92/FFmpegTools