SpringCloud Zipkin Call Chain Monitoring

1. Zipkin server

1.1 Add dependencies

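    <properties>
        <zipkin.version>2.8.4</zipkin.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>io.zipkin.java</groupId>
            <artifactId>zipkin-autoconfigure-ui</artifactId>
            <version>${zipkin.version}</version>
        </dependency>
        <dependency>
            <groupId>io.zipkin.java</groupId>
            <artifactId>zipkin-server</artifactId>
            <version>${zipkin.version}</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-log4j2</artifactId>
        </dependency>
        <dependency>
            <groupId>io.zipkin.java</groupId>
            <artifactId>zipkin-autoconfigure-collector-rabbitmq</artifactId>
            <version>${zipkin.version}</version>
        </dependency>
        <dependency>
            <groupId>io.zipkin.java</groupId>
            <artifactId>zipkin-autoconfigure-storage-elasticsearch-http</artifactId>
            <version>${zipkin.version}</version>
        </dependency>
    </dependencies>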

1.2 Configuration file

Elasticsearch is used to store the trace data.

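Sleuth's default sampling algorithm is based on reservoir sampling. The implementing class is PercentageBasedSampler (in Sleuth 2.x, which reads the spring.sleuth.sampler.probability property used here, the corresponding class is ProbabilityBasedSampler). The default sampling rate is 0.1, i.e. 10%. You can change it with spring.sleuth.sampler.probability; the value must be between 0 and 1, and 1 means every request is sampled.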
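To make the reservoir idea concrete, here is a hypothetical plain-Java sketch (class and method names are made up; it is not Sleuth's or Brave's source). Reservoir sampling (Algorithm R) picks which probability × 100 of 100 slots mean "sample", and a counter then cycles through the slots, so with probability 0.1 exactly 10 of every 100 consecutive requests are kept.

```java
import java.util.BitSet;
import java.util.Random;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration only: names and layout are assumptions, not Sleuth's source.
final class ReservoirSamplerSketch {
    private static final int SLOTS = 100;
    private final BitSet decisions;
    private final AtomicInteger counter = new AtomicInteger();

    ReservoirSamplerSketch(float probability, long seed) {
        if (probability < 0f || probability > 1f) {
            throw new IllegalArgumentException("probability must be between 0 and 1");
        }
        int sampledSlots = Math.round(probability * SLOTS);
        this.decisions = randomBitSet(SLOTS, sampledSlots, new Random(seed));
    }

    // Reservoir sampling (Algorithm R): pick k distinct slots out of `size` uniformly at random.
    static BitSet randomBitSet(int size, int k, Random random) {
        int[] reservoir = new int[k];
        for (int i = 0; i < size; i++) {
            if (i < k) {
                reservoir[i] = i;              // fill the reservoir with the first k slots
            } else {
                int j = random.nextInt(i + 1); // keep slot i with probability k / (i + 1)
                if (j < k) {
                    reservoir[j] = i;
                }
            }
        }
        BitSet bits = new BitSet(size);
        for (int slot : reservoir) {
            bits.set(slot);
        }
        return bits;
    }

    // Cycles through the 100 slots, so exactly k of every 100 consecutive calls return true.
    boolean isSampled() {
        int slot = Math.floorMod(counter.getAndIncrement(), SLOTS);
        return decisions.get(slot);
    }

    public static void main(String[] args) {
        ReservoirSamplerSketch sampler = new ReservoirSamplerSketch(0.1f, 42L);
        int sampled = 0;
        for (int i = 0; i < 1000; i++) {
            if (sampler.isSampled()) {
                sampled++;
            }
        }
        System.out.println("sampled " + sampled + " of 1000 requests"); // sampled 100 of 1000 requests
    }
}
```

Fixing the decision table up front keeps the per-request cost to one counter increment and one bit lookup, which is why this style of sampler suits low-overhead instrumentation.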

    spring:
      application:
        name: cloud-zipkin-server
      sleuth:
        enabled: false # this application does not use Sleuth itself
        sampler:
          probability: 1 # sampling rate; 0.1 is recommended, because collecting 100% may overwhelm the storage
    server:
      port: 9411
    zipkin:
      storage:
        StorageComponent: elasticsearch
        type: elasticsearch
        elasticsearch:
          cluster: e58ww0P
          hosts: http://localhost:9200
          index: zipkin
          index-shards: 5
          index-replicas: 1

1.3 Add the @EnableZipkinServer annotation

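    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.annotation.Bean;
    // @EnableZipkinServer comes from the zipkin-server artifact; its package differs
    // between Zipkin versions, so import it from the zipkin-server jar you depend on.

    @EnableZipkinServer
    @SpringBootApplication
    public class YunfeiCloudZipkinServerApplication {

        public static void main(String[] args) {
            SpringApplication.run(YunfeiCloudZipkinServerApplication.class, args);
        }

        // Custom tags provider that avoids the Prometheus tag-key conflict described in 1.4
        @Bean
        public DemoTagsProvider demoTagsProvider() {
            return new DemoTagsProvider();
        }
    }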

1.4 Fixing the error reported when accessing the server

java.lang.IllegalArgumentException: Prometheus requires that all meters with the same name have the same set of tag keys. There is already an existing meter containing tag keys [method, status, uri]. The meter you are attempting to register has keys [exception, method, status, uri].

You can ignore this error: the server still collects the trace data. If you want to get rid of it, there are two options: a) set management.metrics.web.server.auto-time-requests to false (it defaults to true); b) override DefaultWebMvcTagsProvider, or implement the WebMvcTagsProvider interface modeled on DefaultWebMvcTagsProvider, and simply remove WebMvcTags.exception(exception) from its getTags() method.

    import io.micrometer.core.instrument.Tag;
    import io.micrometer.core.instrument.Tags;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.boot.actuate.metrics.web.servlet.WebMvcTags;
    import org.springframework.boot.actuate.metrics.web.servlet.WebMvcTagsProvider;

    import javax.servlet.http.HttpServletRequest;
    import javax.servlet.http.HttpServletResponse;

    /**
     * Same tags as DefaultWebMvcTagsProvider, minus WebMvcTags.exception(exception),
     * so the web request meters keep the tag keys [method, status, uri].
     */
    public class DemoTagsProvider implements WebMvcTagsProvider {

        static Logger logger = LoggerFactory.getLogger(DemoTagsProvider.class);

        @Override
        public Iterable<Tag> getTags(HttpServletRequest request, HttpServletResponse response, Object handler,
                Throwable exception) {
            // The exception tag is intentionally left out
            return Tags.of(WebMvcTags.method(request), WebMvcTags.uri(request, response),
                    WebMvcTags.status(response));
        }

        @Override
        public Iterable<Tag> getLongRequestTags(HttpServletRequest request, Object handler) {
            return Tags.of(WebMvcTags.method(request), WebMvcTags.uri(request, null));
        }
    }
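The error itself states the rule behind it: Prometheus requires every meter with the same name to carry the same set of tag keys, so a registration that adds an `exception` key next to an existing [method, status, uri] meter is rejected. The hypothetical mini-registry below (class and method names are made up; it is not Micrometer's API) reproduces only that rule, to show why dropping the exception tag, as DemoTagsProvider does, makes the key sets match again.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical mini-registry mimicking the Prometheus tag-key rule; not Micrometer's API.
final class TagKeyConsistencyCheck {
    private final Map<String, Set<String>> keysByMeterName = new HashMap<>();

    // Accepts a registration only if its tag keys match the first registration with that name.
    void register(String meterName, Map<String, String> tags) {
        Set<String> keys = new TreeSet<>(tags.keySet()); // sorted, e.g. [method, status, uri]
        Set<String> existing = keysByMeterName.putIfAbsent(meterName, keys);
        if (existing != null && !existing.equals(keys)) {
            throw new IllegalArgumentException("Meter '" + meterName + "' already has tag keys "
                    + existing + "; the new registration has " + keys);
        }
    }

    // Builds a tag map from alternating key/value arguments.
    static Map<String, String> tags(String... keyValues) {
        Map<String, String> map = new LinkedHashMap<>();
        for (int i = 0; i + 1 < keyValues.length; i += 2) {
            map.put(keyValues[i], keyValues[i + 1]);
        }
        return map;
    }

    public static void main(String[] args) {
        TagKeyConsistencyCheck registry = new TagKeyConsistencyCheck();
        // First registration: tag keys [method, status, uri]
        registry.register("http.server.requests", tags("method", "GET", "status", "200", "uri", "/zipkin"));
        try {
            // Second registration also carries "exception", so the key sets differ
            registry.register("http.server.requests",
                    tags("exception", "None", "method", "GET", "status", "200", "uri", "/zipkin"));
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
        // Without the exception tag the key set matches and the registration is accepted
        registry.register("http.server.requests", tags("method", "GET", "status", "500", "uri", "/zipkin"));
        System.out.println("consistent registration accepted");
    }
}
```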

2. Create the Zipkin Sleuth client

2.1 Add dependencies

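    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-sleuth</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-sleuth-zipkin</artifactId>
    </dependency>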

2.2 Configuration file

    spring:
      application:
        name: cloud-sleuth-client
      zipkin:
        base-url: http://localhost:9411 # http://<Zipkin server IP>:<port>
      sleuth:
        sampler:
          probability: 1 # sampling rate; 0.1 is recommended, because collecting 100% may overwhelm the storage
    server:
      port: 9412
    eureka:
      client:
        service-url:
          defaultZone: http://${eureka.host:localhost}:${eureka.port:8761}/eureka/
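The base-url setting tells spring-cloud-sleuth-zipkin where to send finished spans; the reporter delivers them to the Zipkin server over HTTP. As a rough, hypothetical illustration (class and method names are made up, the trace and span IDs are sample values, and Java 11+ is assumed for `java.net.http`), the sketch below posts one span in Zipkin's v2 JSON format to `<base-url>/api/v2/spans`. Sleuth's real reporter batches and encodes spans on its own; this only shows the shape of the exchange.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical sketch of reporting one span by hand; not Sleuth's reporter.
final class ZipkinSpanReporterSketch {

    // Appends the v2 span-collection path to the configured base URL.
    static URI spansEndpoint(String baseUrl) {
        String trimmed = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        return URI.create(trimmed + "/api/v2/spans");
    }

    // One span in Zipkin v2 JSON; timestamp and duration are in microseconds.
    static String spanJson(String traceId, String spanId, String serviceName, String name,
                           long timestampMicros, long durationMicros) {
        return "[{\"traceId\":\"" + traceId + "\",\"id\":\"" + spanId + "\",\"kind\":\"SERVER\","
                + "\"name\":\"" + name + "\",\"timestamp\":" + timestampMicros
                + ",\"duration\":" + durationMicros
                + ",\"localEndpoint\":{\"serviceName\":\"" + serviceName + "\"}}]";
    }

    public static void main(String[] args) throws Exception {
        long nowMicros = System.currentTimeMillis() * 1000L;
        String body = spanJson("463ac35c9f6413ad", "463ac35c9f6413ad", "cloud-sleuth-client",
                "get /testlog", nowMicros, 1500L);
        HttpRequest request = HttpRequest.newBuilder(spansEndpoint("http://localhost:9411"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // Zipkin normally answers 202 Accepted when it takes the spans
        System.out.println("Zipkin answered HTTP " + response.statusCode());
    }
}
```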

2.3 Test endpoint

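    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class TestController {

        protected static final Logger logger = LoggerFactory.getLogger(TestController.class);

        // Simple endpoint used to generate a trace
        @GetMapping("/testLog")
        public String infoLog() {
            return "testLog";
        }
    }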

Access from a browser: http://localhost:9412/testLog

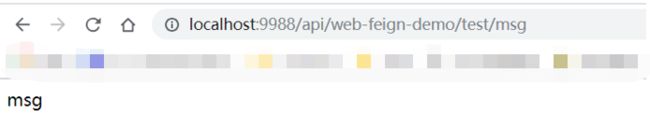

Access through the gateway: http://localhost:9988/api/web-feign-demo/test/msg

2.4 Result screenshots

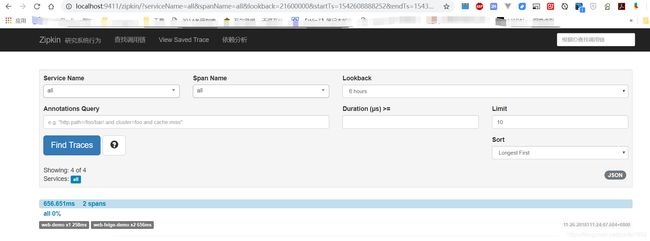

Open http://localhost:9411 to see the call chain data.
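Besides the web UI, the same data can be read from the Zipkin server's HTTP API. A minimal sketch, assuming the server from section 1 on localhost:9411, the client service name cloud-sleuth-client from 2.2, and Java 11+; the class name is hypothetical. It lists the known service names via `/api/v2/services` and then fetches up to 10 recent traces via `/api/v2/traces`.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical helper for reading traces from the Zipkin v2 HTTP API.
final class ZipkinTraceQuerySketch {

    // Builds /api/v2/traces?serviceName=...&limit=... with the service name URL-encoded.
    static URI tracesUri(String baseUrl, String serviceName, int limit) {
        String query = "serviceName=" + URLEncoder.encode(serviceName, StandardCharsets.UTF_8)
                + "&limit=" + limit;
        return URI.create(baseUrl + "/api/v2/traces?" + query);
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        URI[] queries = {
                URI.create("http://localhost:9411/api/v2/services"),
                tracesUri("http://localhost:9411", "cloud-sleuth-client", 10)
        };
        for (URI uri : queries) {
            HttpResponse<String> response = client.send(HttpRequest.newBuilder(uri).GET().build(),
                    HttpResponse.BodyHandlers.ofString());
            System.out.println(uri + " -> HTTP " + response.statusCode());
            System.out.println(response.body()); // JSON array: service names, or traces (each a list of spans)
        }
    }
}
```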

3. zipkin-dependencies

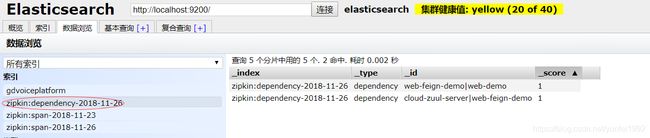

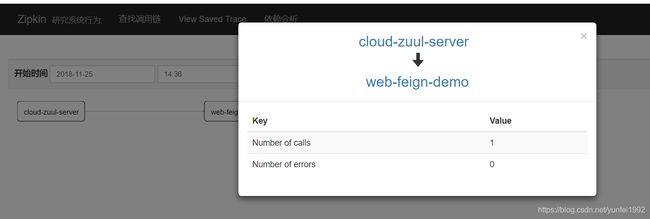

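With Elasticsearch as the trace storage, the dependency analysis view shows no data. zipkin-dependencies, a separate Spark job, has to be run on its own: it reads the stored spans from Elasticsearch, derives the links between services and saves this dependency data. Run it as a scheduled task so the data stays current.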
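After zipkin-dependencies has run, one way to confirm that links were written is to query the server's `/api/v2/dependencies` endpoint, whose `endTs` and `lookback` parameters are both epoch milliseconds. A hypothetical sketch (class name made up), assuming the server on localhost:9411, a 24-hour window and Java 11+:

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical check that dependency links exist for the last 24 hours.
final class ZipkinDependenciesCheckSketch {
    static final long ONE_DAY_MILLIS = 24L * 60 * 60 * 1000;

    // endTs and lookback are both in epoch milliseconds.
    static URI dependenciesUri(String baseUrl, long endTsMillis, long lookbackMillis) {
        return URI.create(baseUrl + "/api/v2/dependencies?endTs=" + endTsMillis
                + "&lookback=" + lookbackMillis);
    }

    public static void main(String[] args) throws Exception {
        URI uri = dependenciesUri("http://localhost:9411", System.currentTimeMillis(), ONE_DAY_MILLIS);
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(HttpRequest.newBuilder(uri).GET().build(), HttpResponse.BodyHandlers.ofString());
        // An empty JSON array "[]" means no dependency links exist for this window yet
        System.out.println("HTTP " + response.statusCode() + ": " + response.body());
    }
}
```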

3.1 Windows startup script

zipkin.bat

    @echo off
    SET STORAGE_TYPE=elasticsearch
    SET ES_HOSTS=localhost:9200
    java -jar E:\dev\zipkin-dependencies-2.0.3.jar
    pause

zipkin-dependencies download: https://www.mvnjar.com/io.zipkin.dependencies/zipkin-dependencies/2.0.3/detail.html
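Each run of zipkin-dependencies processes the stored traces and then exits, so it has to be started repeatedly, for example with Windows Task Scheduler. As an alternative sketch, a small Java launcher could rerun the jar with a ScheduledExecutorService. The class name and the hourly interval are assumptions; the jar path and the STORAGE_TYPE/ES_HOSTS variables are taken from zipkin.bat.

```java
import java.io.IOException;
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical launcher that reruns zipkin-dependencies periodically.
final class ZipkinDependenciesScheduler {

    // Same command and environment variables as zipkin.bat.
    static ProcessBuilder jobProcess(String jarPath, String esHosts) {
        ProcessBuilder pb = new ProcessBuilder(List.of("java", "-jar", jarPath));
        pb.environment().put("STORAGE_TYPE", "elasticsearch");
        pb.environment().put("ES_HOSTS", esHosts);
        pb.inheritIO(); // show the job's output in this console
        return pb;
    }

    public static void main(String[] args) {
        ProcessBuilder job = jobProcess("E:\\dev\\zipkin-dependencies-2.0.3.jar", "localhost:9200");
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        // Run immediately, then once per hour (the interval is an arbitrary choice)
        scheduler.scheduleAtFixedRate(() -> {
            try {
                int exitCode = job.start().waitFor();
                System.out.println("zipkin-dependencies exited with " + exitCode);
            } catch (IOException e) {
                System.err.println("could not start zipkin-dependencies: " + e.getMessage());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, 0, 1, TimeUnit.HOURS);
    }
}
```

Catching the exceptions inside the task matters: if a scheduled task throws, ScheduledExecutorService silently cancels all later runs.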

3.2 Run screenshot

Run zipkin.bat to start the job.