zookeeper 安装的三种模式

Zookeeper安装

zookeeper的安装分为三种模式:单机模式、集群模式和伪集群模式。

- 单机模式

首先,从Apache官网下载一个Zookeeper稳定版本,本次教程采用的是zookeeper-3.4.9版本。

http://apache.fayea.com/zookeeper/zookeeper-3.4.9/

|

然后解压zookeeper-3.4.9.tar.gz文件到安装目录下:

tar -zxvf zookeepre-3.4.9.tar.gz

zookeeper要求Java运行环境,并且需要jdk版本1.6以上。为了以后的操作方便,可以对zookeeper的环境变量进行配置(该步骤可忽略)。方法如下,在/etc/profile文件中加入以下内容:

#Set Zookeeper Environment

export ZOOKEEPER_HOME=/root/zookeeper-3.4.9

export PATH=$ZOOKEEPER_HOME/bin;$ZOOKEEPER_HOME/conf

Zookeeper服务器包含在单个jar文件中(本环境下为 zookeeper-3.4.9.jar),安装此服务需要用户自己创建一个配置文件。默认配置文件路径为 Zookeeper-3.4.9/conf/目录下,文件名为zoo.cfg。进入conf/目录下可以看到一个zoo_sample.cfg文件,可供参考。通过以下代码在conf目录下创建zoo.cfg文件:

gedit zoo.cfg

在文件中输入以下内容并保存

tickTime=2000dataDir=/home/jxwch/hadoop/data/zookeeper

dataLogDir=/home/jxwch/hadoop/dataLog/zookeeper

clientPort=2181

在这个文件中,各个语句的含义:

tickTime : 服务器与客户端之间交互的基本时间单元(ms)

dataDir : 保存zookeeper数据路径

dataLogDir : 保存zookeeper日志路径,当此配置不存在时默认路径与dataDir一致

clientPort : 客户端访问zookeeper时经过服务器端时的端口号

使用单机模式时需要注意,在这种配置方式下,如果zookeeper服务器出现故障,zookeeper服务将会停止。

- 集群模式

zookeeper最主要的应用场景是集群,下面介绍如何在一个集群上部署一个zookeeper。只要集群上的大多数zookeeper服务启动了,那么总的zookeeper服务便是可用的。另外,最好使用奇数台服务器。如歌zookeeper拥有5台服务器,那么在最多2台服务器出现故障后,整个服务还可以正常使用。

之后的操作和单机模式的安装类似,我们同样需要Java环境,下载最新版的zookeeper并配置相应的环境变量。不同之处在于每台机器上的conf/zoo.cfg配置文件的参数设置不同,用户可以参考下面的配置:

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/home/jxwch/server1/data

dataLogDir=/home/jxwch/server1/dataLog

clientPort=2181

server.1=zoo1:2888:3888

server.2=zoo2:2888:3888

server.3=zoo3:2888:3888

maxClientCnxns=60

在这个配置文件中,新出现的语句的含义:

initLimit : 此配置表示允许follower连接并同步到leader的初始化时间,它以tickTime的倍数来表示。当超过设置倍数的tickTime时间,则连接失败。

syncLimit : Leader服务器与follower服务器之间信息同步允许的最大时间间隔,如果超过次间隔,默认follower服务器与leader服务器之间断开链接。

maxClientCnxns : 限制连接到zookeeper服务器客户端的数量

server.id=host:port:port : 表示了不同的zookeeper服务器的自身标识,作为集群的一部分,每一台服务器应该知道其他服务器的信息。用户可以从“server.id=host:port:port” 中读取到相关信息。在服务器的data(dataDir参数所指定的目录)下创建一个文件名为myid的文件,这个文件的内容只有一行,指定的是自身的id值。比如,服务器“1”应该在myid文件中写入“1”。这个id必须在集群环境中服务器标识中是唯一的,且大小在1~255之间。这一样配置中,zoo1代表第一台服务器的IP地址。第一个端口号(port)是从follower连接到leader机器的端口,第二个端口是用来进行leader选举时所用的端口。所以,在集群配置过程中有三个非常重要的端口:clientPort:2181、port:2888、port:3888。

- 伪集群模式

伪集群模式就是在单机环境下模拟集群的Zookeeper服务。

在zookeeper集群配置文件中,clientPort参数用来设置客户端连接zookeeper服务器的端口。server.1=IP1:2888:3888中,IP1指的是组成Zookeeper服务器的IP地址,2888为组成zookeeper服务器之间的通信端口,3888为用来选举leader的端口。由于伪集群模式中,我们使用的是同一台服务器,也就是说,需要在单台机器上运行多个zookeeper实例,所以我们必须要保证多个zookeeper实例的配置文件的client端口不能冲突。

下面简单介绍一下如何在单台机器上建立伪集群模式。首先将zookeeper-3.4.9.tar.gz分别解压到server1,server2,server3目录下:

tar -zxvf zookeeper-3.4.9.tar.gz /home/jxwch/server1

tar -zxvf zookeeper-3.4.9.tar.gz /home/jxwch/server2

tar -zxvf zookeeper-3.4.9.tar.gz /home/jxwch/server3

|

然后在server1/data/目录下创建文件myid文件并写入“1”,同样在server2/data/,目录下创建文件myid并写入“2”,server3进行同样的操作。

下面分别展示在server1/conf/、server2/conf/、server3/conf/目录下的zoo.cfg文件:

server1/conf/zoo.cfg文件

# Server 1

# The number of milliseconds of each tick

# 服务器与客户端之间交互的基本时间单元(ms)

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

# 此配置表示允许follower连接并同步到leader的初始化时间,它以tickTime的倍数来表示。当超过设置倍数的tickTime时间,则连接失败。

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

# Leader服务器与follower服务器之间信息同步允许的最大时间间隔,如果超过次间隔,默认follower服务器与leader服务器之间断开链接

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

# 保存zookeeper数据,日志路径

dataDir=/home/jxwch/server1/data

dataLogDir=/home/jxwch/server1/dataLog

# the port at which the clients will connect

# 客户端与zookeeper相互交互的端口

clientPort=2181

server.1= 127.0.0.1:2888:3888

server.2= 127.0.0.1:2889:3889

server.3= 127.0.0.1:2890:3890

#server.A=B:C:D 其中A是一个数字,代表这是第几号服务器;B是服务器的IP地址;C表示服务器与群集中的“领导者”交换信息的端口;当领导者失效后,D表示用来执行选举时服务器相互通信的端口。

# the maximum number of client connections.

# increase this if you need to handle more clients

# 限制连接到zookeeper服务器客户端的数量

maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server2/conf/zoo.cfg文件

# Server 2

# The number of milliseconds of each tick

# 服务器与客户端之间交互的基本时间单元(ms)

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

# zookeeper所能接受的客户端数量

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

# 服务器与客户端之间请求和应答的时间间隔

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

# 保存zookeeper数据,日志路径

dataDir=/home/jxwch/server2/data

dataLogDir=/home/jxwch/server2/dataLog

# the port at which the clients will connect

# 客户端与zookeeper相互交互的端口

clientPort=2182

server.1= 127.0.0.1:2888:3888

server.2= 127.0.0.1:2889:3889

server.3= 127.0.0.1:2890:3890

#server.A=B:C:D 其中A是一个数字,代表这是第几号服务器;B是服务器的IP地址;C表示服务器与群集中的“领导者”交换信息的端口;当领导者失效后,D表示用来执行选举时服务器相互通信的端口。

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server3/conf/zoo.cfg文件

# Server 3

# The number of milliseconds of each tick

# 服务器与客户端之间交互的基本时间单元(ms)

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

# zookeeper所能接受的客户端数量

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

# 服务器与客户端之间请求和应答的时间间隔

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

# 保存zookeeper数据,日志路径

dataDir=/home/jxwch/server3/data

dataLogDir=/home/jxwch/server3/dataLog

# the port at which the clients will connect

# 客户端与zookeeper相互交互的端口

clientPort=2183

server.1= 127.0.0.1:2888:3888

server.2= 127.0.0.1:2889:3889

server.3= 127.0.0.1:2890:3890

#server.A=B:C:D 其中A是一个数字,代表这是第几号服务器;B是服务器的IP地址;C表示服务器与群集中的“领导者”交换信息的端口;当领导者失效后,D表示用来执行选举时服务器相互通信的端口。

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

从上述三个代码清单可以发现,除了clientPort不同之外,dataDir和dataLogDir也不同。另外,不要忘记dataDir所对应的目录中创建的myid文件来指定对应的zookeeper服务器实例。

Zookeeper伪集群模式运行

当上述伪集群环境安装成功后就可以测试是否安装成功啦,我们可以尝尝鲜:

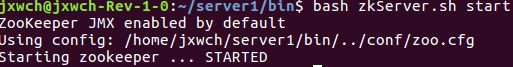

首先启动server1服务器:

cd server1/bin

bash zkServer.sh start

此时出现以下提示信息,表示启动成功:

打开zookeeper.out文件:

2017-02-23 16:17:46,419 [myid:] - INFO [main:QuorumPeerConfig@124] - Reading configuration from: /home/jxwch/server1/bin/../conf/zoo.cfg

2017-02-23 16:17:46,496 [myid:] - INFO [main:QuorumPeer$QuorumServer@149] - Resolved hostname: 127.0.0.1 to address: /127.0.0.1

2017-02-23 16:17:46,496 [myid:] - INFO [main:QuorumPeer$QuorumServer@149] - Resolved hostname: 127.0.0.1 to address: /127.0.0.1

2017-02-23 16:17:46,497 [myid:] - INFO [main:QuorumPeer$QuorumServer@149] - Resolved hostname: 127.0.0.1 to address: /127.0.0.1

2017-02-23 16:17:46,497 [myid:] - INFO [main:QuorumPeerConfig@352] - Defaulting to majority quorums

2017-02-23 16:17:46,511 [myid:1] - INFO [main:DatadirCleanupManager@78] - autopurge.snapRetainCount set to 3

2017-02-23 16:17:46,511 [myid:1] - INFO [main:DatadirCleanupManager@79] - autopurge.purgeInterval set to 0

2017-02-23 16:17:46,511 [myid:1] - INFO [main:DatadirCleanupManager@101] - Purge task is not scheduled.

2017-02-23 16:17:46,525 [myid:1] - INFO [main:QuorumPeerMain@127] - Starting quorum peer

2017-02-23 16:17:46,631 [myid:1] - INFO [main:NIOServerCnxnFactory@89] - binding to port 0.0.0.0/0.0.0.0:2181

2017-02-23 16:17:46,664 [myid:1] - INFO [main:QuorumPeer@1019] - tickTime set to 2000

2017-02-23 16:17:46,664 [myid:1] - INFO [main:QuorumPeer@1039] - minSessionTimeout set to -1

2017-02-23 16:17:46,664 [myid:1] - INFO [main:QuorumPeer@1050] - maxSessionTimeout set to -1

2017-02-23 16:17:46,665 [myid:1] - INFO [main:QuorumPeer@1065] - initLimit set to 10

2017-02-23 16:17:46,771 [myid:1] - INFO [main:FileSnap@83] - Reading snapshot /home/jxwch/server1/data/version-2/snapshot.400000015

2017-02-23 16:17:46,897 [myid:1] - INFO [ListenerThread:QuorumCnxManager$Listener@534] - My election bind port: /127.0.0.1:3888

2017-02-23 16:17:46,913 [myid:1] - INFO [QuorumPeer[myid=1]/0:0:0:0:0:0:0:0:2181:QuorumPeer@774] - LOOKING

2017-02-23 16:17:46,915 [myid:1] - INFO [QuorumPeer[myid=1]/0:0:0:0:0:0:0:0:2181:FastLeaderElection@818] - New election. My id = 1, proposed zxid=0x500000026

2017-02-23 16:17:46,922 [myid:1] - INFO [WorkerReceiver[myid=1]:FastLeaderElection@600] - Notification: 1 (message format version), 1 (n.leader), 0x500000026 (n.zxid), 0x1 (n.round), LOOKING (n.state), 1 (n.sid), 0x5 (n.peerEpoch) LOOKING (my state)

2017-02-23 16:17:46,940 [myid:1] - WARN [WorkerSender[myid=1]:QuorumCnxManager@400] - Cannot open channel to 2 at election address /127.0.0.1:3889

java.net.ConnectException: 拒绝连接

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:339)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:200)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:182)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:579)

at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(QuorumCnxManager.java:381)

at org.apache.zookeeper.server.quorum.QuorumCnxManager.toSend(QuorumCnxManager.java:354)

at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.process(FastLeaderElection.java:452)

at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.run(FastLeaderElection.java:433)

at java.lang.Thread.run(Thread.java:745)

2017-02-23 16:17:46,943 [myid:1] - INFO [WorkerSender[myid=1]:QuorumPeer$QuorumServer@149] - Resolved hostname: 127.0.0.1 to address: /127.0.0.1

2017-02-23 16:17:46,944 [myid:1] - WARN [WorkerSender[myid=1]:QuorumCnxManager@400] - Cannot open channel to 3 at election address /127.0.0.1:3890

java.net.ConnectException: 拒绝连接

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:339)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:200)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:182)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:579)

at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(QuorumCnxManager.java:381)

at org.apache.zookeeper.server.quorum.QuorumCnxManager.toSend(QuorumCnxManager.java:354)

at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.process(FastLeaderElection.java:452)

at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.run(FastLeaderElection.java:433)

at java.lang.Thread.run(Thread.java:745)

2017-02-23 16:17:46,944 [myid:1] - INFO [WorkerSender[myid=1]:QuorumPeer$QuorumServer@149] - Resolved hostname: 127.0.0.1 to address: /127.0.0.1

产生上述两条Waring信息是因为zookeeper服务的每个实例都拥有全局的配置信息,他们在启动的时候需要随时随地的进行leader选举,此时server1就需要和其他两个zookeeper实例进行通信,但是,另外两个zookeeper实例还没有启动起来,因此将会产生上述所示的提示信息。当我们用同样的方式启动server2和server3后就不会再有这样的警告信息了。

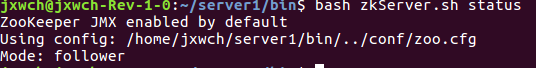

当三台服务器均成功启动后切换至server1/bin/目录下执行以下命令:

bash zkServer.sh status

终端出现以下提示信息:

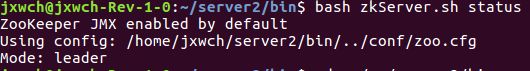

说明server1服务器此时处于follower模式,同样切换至server2/bin目录下执行相同的命令,返回如下结果:

说明server2被选举为leader。

参考资料

- Zookeeper系列之三:zookeeper的安装

- Zookeeper系列之五:zookeeper的运行

Zookeeper常用命令

Zookeeper四字命令

ZooKeeper配置参数详解