Running Spark Locally and on Hadoop

Having just installed Hadoop and Spark, I was eager to try them out. As usual, we will use the small WordCount application for the experiment.

First, try pyspark in local mode:

$ pyspark

The shell starts up.

>>> sc.master

u'local[*]'

As you can see, the master is local.

>>> text = sc.textFile("shakespeare.txt")

>>> from operator import add

>>> def token(text):

...     return text.split()

...

>>> words = text.flatMap(token)

>>> wc = words.map(lambda x:(x,1))

>>> counts = wc.reduceByKey(add)

>>> counts.saveAsTextFile('wc')

With no extra configuration, that is all it takes.
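To make it clearer what the three RDD steps compute, here is a minimal pure-Python sketch of the same pipeline. It does not use Spark at all; `word_count` and the sample lines are made up for illustration, and only `token` and `add` come from the session above.

```python
from collections import defaultdict
from operator import add


def token(text):
    # Same tokenizer as in the shell session: split on any whitespace.
    return text.split()


def word_count(lines):
    # flatMap: every line yields zero or more words, flattened into one stream.
    words = (word for line in lines for word in token(line))
    # map: pair each word with an initial count of 1.
    pairs = ((word, 1) for word in words)
    # reduceByKey(add): combine the values of identical keys with add.
    counts = defaultdict(int)
    for word, n in pairs:
        counts[word] = add(counts[word], n)
    return dict(counts)


sample = ["to be or not", "to be"]  # made-up input, not from shakespeare.txt
result = word_count(sample)
print(sorted(result.items()))  # [('be', 2), ('not', 1), ('or', 1), ('to', 2)]
```

Spark does the same thing, except that the lines are spread over partitions and the per-key reduction happens in parallel.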

Running Spark on Hadoop requires some additional configuration:

Copy the following two template files:

$ cd $SPARK_HOME/conf

$ cp spark-env.sh.template spark-env.sh

$ cp slaves.template slaves

$ vim spark-env.sh

Add the following:

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export HADOOP_HOME=/srv/hadoop

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export SPARK_LOCAL_IP=127.0.0.1

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

$ vim slaves

Add:

localhost 127.0.0.1
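A typo in spark-env.sh is easy to miss, so here is a small sketch that checks whether a file's text contains the five exports listed above. The helper names `parse_exports` and `missing_exports` are my own, and the parser only understands plain `export NAME=VALUE` lines; it is not a shell interpreter.

```python
REQUIRED = (
    "JAVA_HOME",
    "HADOOP_HOME",
    "HADOOP_CONF_DIR",
    "SPARK_LOCAL_IP",
    "YARN_CONF_DIR",
)


def parse_exports(text):
    # Collect NAME=VALUE pairs from lines of the form "export NAME=VALUE".
    # Values are kept verbatim; variables such as $HADOOP_HOME are not expanded.
    exports = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("export "):
            continue
        name, sep, value = line[len("export "):].partition("=")
        if sep:
            exports[name.strip()] = value.strip()
    return exports


def missing_exports(text, required=REQUIRED):
    # Return the required names that the file does not export, in order.
    found = parse_exports(text)
    return [name for name in required if name not in found]


# Deliberately incomplete sample to show what a miss looks like.
sample_env = """\
export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64
export HADOOP_HOME=/srv/hadoop
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
"""
print(missing_exports(sample_env))  # ['SPARK_LOCAL_IP', 'YARN_CONF_DIR']
```

To check the real file, read it with `open(path).read()` and pass the text in.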

By the way, for the yarn-site.xml settings, see my earlier post on configuring Hadoop; otherwise you may run into memory errors.

Now the Hadoop services can be started:

$ $HADOOP_HOME/sbin/start-dfs.sh

$ $HADOOP_HOME/sbin/start-yarn.sh

$ jps

5666 DataNode

6089 ResourceManager

5930 SecondaryNameNode

6250 NodeManager

5502 NameNode

6607 Jps

Everything is up and running.
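Reading the jps listing by eye works on a single machine, but the check is easy to script. The following is a rough sketch that takes jps output as text and reports which of the five daemons shown above are missing; the function names are hypothetical.

```python
EXPECTED = {
    "NameNode",
    "DataNode",
    "SecondaryNameNode",
    "ResourceManager",
    "NodeManager",
}


def running_daemons(jps_output):
    # Each useful jps line looks like "<pid> <name>"; keep only the names.
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing_daemons(jps_output, expected=EXPECTED):
    # Sorted list of expected daemons that do not appear in the jps output.
    return sorted(expected - running_daemons(jps_output))


jps_text = """\
5666 DataNode
6089 ResourceManager
5930 SecondaryNameNode
6250 NodeManager
5502 NameNode
6607 Jps
"""
print(missing_daemons(jps_text))  # [] for the listing shown above
```

On a live machine the text could come from `subprocess.run(["jps"], capture_output=True, text=True).stdout`.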

An earlier post already covered uploading the file to HDFS; if you are not sure how, look back at that post.

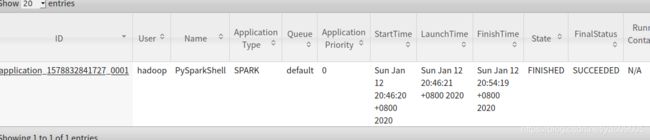

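Start pyspark again, this time in yarn-client mode. In this mode the driver runs inside the client process, which suits cases where you want results right away or want to work interactively. The other mode, yarn-cluster, suits long-running jobs or jobs that need no user intervention; there the driver runs inside the ApplicationMaster.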

$ pyspark --master yarn --deploy-mode client

>>> sc.master

u'yarn'
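The interactive shell only makes sense in client mode, so trying cluster mode means submitting a script with spark-submit instead. As a small illustration of how the two modes differ on the command line, this sketch builds the argument list; `submit_command` and the script name `wordcount.py` are hypothetical, while `--master yarn` and `--deploy-mode` are the same options used with pyspark above.

```python
import shlex


def submit_command(script, deploy_mode="client"):
    # Build a spark-submit command line for YARN in the given deploy mode.
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")
    return ["spark-submit", "--master", "yarn", "--deploy-mode", deploy_mode, script]


# wordcount.py is a hypothetical script name used only for illustration.
cmd = submit_command("wordcount.py", "cluster")
print(" ".join(shlex.quote(part) for part in cmd))
# spark-submit --master yarn --deploy-mode cluster wordcount.py
```

The list form can be passed straight to `subprocess.run(cmd)` without going through a shell.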

Next, enter the same code as before.

This time, though, the file is read from HDFS, so the path has to use the hdfs:// scheme:

>>> text = sc.textFile("hdfs://localhost:9000/user/hadoop/shakespeare.txt")

>>> from operator import add

>>> def token(text):

...     return text.split()

...

>>> words = text.flatMap(token)

>>> wc = words.map(lambda x:(x,1))

>>> counts = wc.reduceByKey(add)

>>> counts.saveAsTextFile("wc")
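The input path in this session is a full URI rather than a bare file name. A quick sketch with the standard library shows the pieces it is made of; the host and port presumably have to match the NameNode address from your own Hadoop configuration, so treat `localhost:9000` as specific to this setup.

```python
from urllib.parse import urlsplit

uri = "hdfs://localhost:9000/user/hadoop/shakespeare.txt"
parts = urlsplit(uri)

print(parts.scheme)    # hdfs, the filesystem scheme
print(parts.hostname)  # localhost, the NameNode host
print(parts.port)      # 9000, the NameNode port
print(parts.path)      # /user/hadoop/shakespeare.txt, the path inside HDFS
```

The output path "wc", by contrast, has no scheme and no leading slash, so it is left to the cluster's defaults to decide where it lands.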

Let's check the result:

$ hadoop fs -ls /user/hadoop/wc

Found 3 items

-rw-r--r-- 1 hadoop supergroup 0 2020-01-12 18:24 /user/hadoop/wc/_SUCCESS

-rw-r--r-- 1 hadoop supergroup 3074551 2020-01-12 18:24 /user/hadoop/wc/part-00000

-rw-r--r-- 1 hadoop supergroup 3085307 2020-01-12 18:24 /user/hadoop/wc/part-00001

$ hadoop fs -tail wc/part-00000 | less

u'winterstale@145208', 1)

(u'muchadoaboutnothing@65485', 1)

(u'midsummersnightsdream@99', 1)

(u'hamlet@147754', 1)

(u'tamingoftheshrew@36231', 1)

(u"'ld", 1)

(u'roars', 3)

(u'2kinghenryvi@83454', 1)

(u'kinghenryv@109398', 1)

(u'juliuscaesar@101945', 1)

(u'twogentlemenofverona@2385', 1)

(u'hamlet@107567', 1)

(u'hamlet@7588', 1)

(u"unmaster'd", 1)

(u'kinghenryviii@23697', 1)

(u'lance', 4)

(u'coriolanus@72688', 1)

(u'cymbeline@145819', 1)

(u"serpent's", 8)

(u'asyoulikeit@43360', 1)

(u'antonyandcleopatra@16482', 1)

(u'prolixious', 1)

(u'cymbeline@137535', 1)

(u'loveslabourslost@37179', 1)

(u'antonyandcleopatra@153227', 1)

(u'muchadoaboutnothing@75680', 1)

(u'SCROOP]', 1)

(u'kinghenryviii@9720', 1)

(u'prenez', 1)

(u'garb', 2)

(u'roar!', 1)

(u'Craves', 1)

(u'twelfthnight@27306', 1)

(u'palace!', 1)

(u'tempest@83776', 1)

(u'SELD', 1)

(u"Howsoe'er", 1)

(u'belied,', 1)

(u'twogentlemenofverona@74060', 1)

(u'timonofathens@55279', 1)

(u"warrant's", 1)

(u'vane', 2)

(u'roar;', 1)

(u'MOTH]', 7)

(u'belied.', 1)

(u'roar?', 2)
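Each complete line of that output is a Python tuple literal, so it can be read back without writing a custom parser. Here is a minimal sketch, assuming the lines have already been fetched as text; `parse_counts` is a hypothetical helper and the sample is a handful of lines copied from the listing above. The first line of the listing lacks its opening parenthesis, probably because -tail starts mid-line, so incomplete lines are skipped.

```python
import ast


def parse_counts(lines):
    # Each complete line is a tuple literal such as (u'roars', 3).
    counts = {}
    for line in lines:
        line = line.strip()
        if not (line.startswith("(") and line.endswith(")")):
            continue  # skip blank or truncated lines
        word, n = ast.literal_eval(line)
        counts[word] = n
    return counts


sample = [
    "(u'roars', 3)",
    "(u\"serpent's\", 8)",
    "(u'MOTH]', 7)",
    "u'winterstale@145208', 1)",  # truncated first line, skipped
]
counts = parse_counts(sample)
top = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
print(top)  # [("serpent's", 8), ('MOTH]', 7), ('roars', 3)]
```

`ast.literal_eval` only evaluates literals, so it is a safer choice than `eval` for text that comes out of a file.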