canal source code analysis (2): data flow, part 2

I. MySQL internal command operations

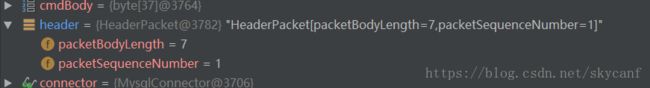

1) When a packet arrives from MySQL, first read its header.

```java
header = PacketManager.readHeader(connector.getChannel(), 4);

public static HeaderPacket readHeader(SocketChannel ch, int len) throws IOException {
    HeaderPacket header = new HeaderPacket();
    header.fromBytes(ch.read(len));
    return header;
}

public void fromBytes(byte[] data) {
    if (data == null || data.length != 4) {
        throw new IllegalArgumentException("invalid header data. It can't be null and the length must be 4 byte.");
    }
    // bytes 0..2: body length as a 24-bit little-endian integer
    this.packetBodyLength = (data[0] & 0xFF) | ((data[1] & 0xFF) << 8) | ((data[2] & 0xFF) << 16);
    // byte 3: packet sequence number
    this.setPacketSequenceNumber(data[3]);
}
```
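The header layout is easier to see when decoding and encoding are shown side by side. Below is a minimal sketch that does both for the 4-byte packet header. PacketHeaderSketch is a hypothetical class and is not part of canal. The original passes data[3] through as a signed byte, but this sketch masks the sequence number to the range 0 to 255.

```java
// Hypothetical helper mirroring HeaderPacket.fromBytes; not part of canal.
final class PacketHeaderSketch {
    final int bodyLength; // 24-bit little-endian length from bytes 0..2
    final int sequence;   // unsigned sequence number from byte 3

    private PacketHeaderSketch(int bodyLength, int sequence) {
        this.bodyLength = bodyLength;
        this.sequence = sequence;
    }

    static PacketHeaderSketch parse(byte[] data) {
        if (data == null || data.length != 4) {
            throw new IllegalArgumentException("header must be exactly 4 bytes");
        }
        int len = (data[0] & 0xFF) | ((data[1] & 0xFF) << 8) | ((data[2] & 0xFF) << 16);
        int seq = data[3] & 0xFF;
        return new PacketHeaderSketch(len, seq);
    }

    // Reverse direction: build a header for a given body length and sequence number.
    static byte[] encode(int bodyLength, int sequence) {
        return new byte[] {
            (byte) bodyLength, (byte) (bodyLength >>> 8), (byte) (bodyLength >>> 16), (byte) sequence
        };
    }
}
```

For example, the header bytes 07 00 00 02 decode to a 7-byte body with sequence number 2.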

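The header read is exactly four bytes. Bytes 0 to 2 hold the body length as a 24-bit little-endian integer, and byte 3 holds the packet sequence number.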

2) Use the header to determine the body length, then read the body:

```java
byte[] body = PacketManager.readBytes(connector.getChannel(), header.getPacketBodyLength());

public static byte[] readBytes(SocketChannel ch, int len) throws IOException {
    return ch.read(len);
}
```

For a body length of 7, read() pulls 7 bytes from input into data, filling indices 0 to 6:

```java
public byte[] read(int readSize) throws IOException {
    InputStream input = this.input;
    byte[] data = new byte[readSize];
    int remain = readSize;
    if (input == null) {
        throw new SocketException("Socket already closed.");
    }
    // keep reading until readSize bytes have arrived
    while (remain > 0) {
        try {
            int read = input.read(data, readSize - remain, remain);
            if (read > -1) {
                remain -= read;
            } else {
                throw new IOException("EOF encountered.");
            }
        } catch (SocketTimeoutException te) {
            // on a read timeout, retry unless the thread has been interrupted
            if (Thread.interrupted()) {
                throw new ClosedByInterruptException();
            }
        }
    }
    return data;
}
```
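read() loops because one InputStream.read call can return fewer bytes than requested. The minimal sketch below applies the same idea to a plain InputStream. ReadFullySketch is hypothetical. It omits the original's SocketTimeoutException retry, so a timeout simply propagates.

```java
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;

// Hypothetical helper; mirrors the read loop above without the timeout retry.
final class ReadFullySketch {
    // Reads exactly `size` bytes, looping because read() may return a short count.
    static byte[] readExactly(InputStream input, int size) throws IOException {
        byte[] data = new byte[size];
        int remain = size;
        while (remain > 0) {
            int n = input.read(data, size - remain, remain);
            if (n < 0) {
                throw new EOFException("EOF after " + (size - remain) + " of " + size + " bytes");
            }
            remain -= n;
        }
        return data;
    }
}
```

The JDK's DataInputStream.readFully does the same job. The explicit loop is shown only to match the structure of read().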

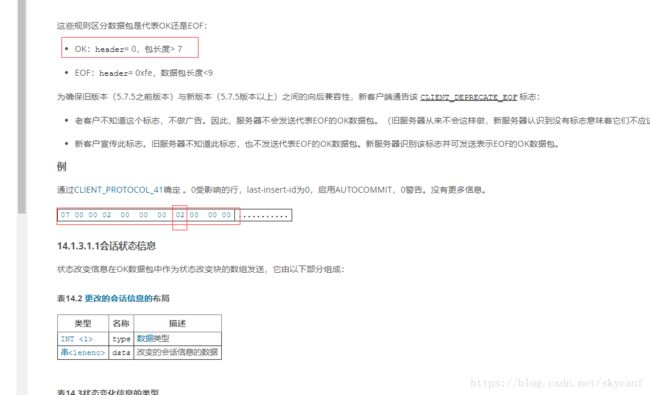

3) Take the resulting data and interpret it according to the format of the OK packet in the MySQL protocol.
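Step 3 can be illustrated with a rough sketch. It classifies a response payload (packet header already removed) by its first byte. 0x00 marks an OK packet, whose fields are affected rows and last insert id as length-encoded integers, then 2-byte status flags and a 2-byte warning count. 0xFF marks an error packet and 0xFE an EOF packet. The sketch assumes the protocol 4.1 layout (CLIENT_PROTOCOL_41), and ResponsePacketSketch is a hypothetical name.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical sketch of OK / ERR / EOF classification; not canal code.
final class ResponsePacketSketch {
    // Reads a length-encoded integer at pos[0] and advances pos[0] past it.
    static long readLenEncInt(byte[] p, int[] pos) {
        int first = p[pos[0]++] & 0xFF;
        int extra;
        if (first < 0xFB) {
            return first;                 // 1-byte value
        } else if (first == 0xFC) {
            extra = 2;
        } else if (first == 0xFD) {
            extra = 3;
        } else if (first == 0xFE) {
            extra = 8;
        } else {
            throw new IllegalArgumentException("not a length-encoded integer: " + first);
        }
        long value = 0;
        for (int i = 0; i < extra; i++) { // little-endian
            value |= (long) (p[pos[0]++] & 0xFF) << (8 * i);
        }
        return value;
    }

    static int readUint16(byte[] p, int[] pos) {
        int v = (p[pos[0]] & 0xFF) | ((p[pos[0] + 1] & 0xFF) << 8);
        pos[0] += 2;
        return v;
    }

    static String describe(byte[] payload) {
        int marker = payload[0] & 0xFF;
        int[] pos = {1};
        if (marker == 0x00) {
            long affectedRows = readLenEncInt(payload, pos);
            long lastInsertId = readLenEncInt(payload, pos);
            int status = readUint16(payload, pos);
            int warnings = readUint16(payload, pos);
            return "OK affectedRows=" + affectedRows + " lastInsertId=" + lastInsertId
                    + " status=" + status + " warnings=" + warnings;
        } else if (marker == 0xFF) {
            int errno = readUint16(payload, pos);
            // '#' then a 5-character SQL state, then the message text
            String sqlState = new String(payload, pos[0] + 1, 5, StandardCharsets.US_ASCII);
            String message = new String(payload, pos[0] + 6, payload.length - pos[0] - 6, StandardCharsets.UTF_8);
            return "ERR errno=" + errno + " sqlstate=" + sqlState + " message=" + message;
        } else if (marker == 0xFE) {
            return "EOF";
        }
        return "UNKNOWN marker=" + marker;
    }
}
```

For example, the 7-byte body 00 00 00 02 00 00 00 describes an OK packet with status 2 (autocommit) and no warnings.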

4) Once the OK packet has been received, move on to the next step.

II. Routine MySQL statements (DML, DDL):

1) First initialize a buffer (new buffer(32)) and a channel.

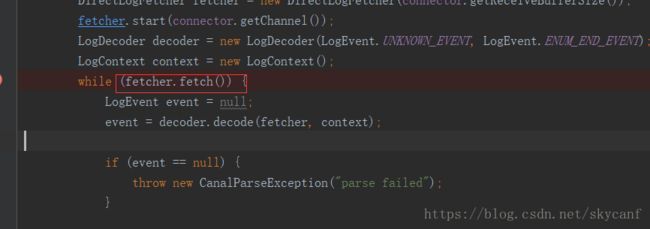

Then receive the data sent by MySQL:

```java
public boolean fetch() throws IOException {
    try {
        // Fetching packet header from input.
        if (!fetch0(0, NET_HEADER_SIZE)) {
            logger.warn("Reached end of input stream while fetching header");
            return false;
        }

        // Fetching the first packet(may a multi-packet).
        int netlen = getUint24(PACKET_LEN_OFFSET); // body length
        int netnum = getUint8(PACKET_SEQ_OFFSET);  // sequence number
        if (!fetch0(NET_HEADER_SIZE, netlen)) {    // read the body
            logger.warn("Reached end of input stream: packet #" + netnum + ", len = " + netlen);
            return false;
        }

        // Detecting error code.
        final int mark = getUint8(NET_HEADER_SIZE);
        if (mark != 0) {
            if (mark == 255) { // error from master
                // Indicates an error, for example trying to fetch from wrong binlog position.
                position = NET_HEADER_SIZE + 1;
                final int errno = getInt16();
                String sqlstate = forward(1).getFixString(SQLSTATE_LENGTH);
                String errmsg = getFixString(limit - position);
                throw new IOException("Received error packet:" + " errno = " + errno + ", sqlstate = " + sqlstate
                                      + " errmsg = " + errmsg);
            } else if (mark == 254) {
                // Indicates end of stream. It's not clear when this would be sent.
                logger.warn("Received EOF packet from server, apparent"
                            + " master disconnected. It's may be duplicate slaveId , check instance config");
                return false;
            } else {
                // Should not happen.
                throw new IOException("Unexpected response " + mark + " while fetching binlog: packet #" + netnum
                                      + ", len = " + netlen);
            }
        }

        // if mysql is in semi mode
        if (issemi) {
            // parse semi mark
            int semimark = getUint8(NET_HEADER_SIZE + 1);
            int semival = getUint8(NET_HEADER_SIZE + 2);
            this.semival = semival;
        }

        // The first packet is a multi-packet, concatenate the packets.
        while (netlen == MAX_PACKET_LENGTH) {
            if (!fetch0(0, NET_HEADER_SIZE)) {
                logger.warn("Reached end of input stream while fetching header");
                return false;
            }

            netlen = getUint24(PACKET_LEN_OFFSET);
            netnum = getUint8(PACKET_SEQ_OFFSET);
            if (!fetch0(limit, netlen)) {
                logger.warn("Reached end of input stream: packet #" + netnum + ", len = " + netlen);
                return false;
            }
        }

        // Preparing buffer variables to decoding.
        if (issemi) {
            origin = NET_HEADER_SIZE + 3;
        } else {
            origin = NET_HEADER_SIZE + 1;
        }
        position = origin;
        limit -= origin;
        return true;
    } catch (SocketTimeoutException e) {
        close(); /* Do cleanup */
        logger.error("Socket timeout expired, closing connection", e);
        throw e;
    } catch (InterruptedIOException e) {
        close(); /* Do cleanup */
        logger.info("I/O interrupted while reading from client socket", e);
        throw e;
    } catch (ClosedByInterruptException e) {
        close(); /* Do cleanup */
        logger.info("I/O interrupted while reading from client socket", e);
        throw e;
    } catch (IOException e) {
        close(); /* Do cleanup */
        logger.error("I/O error while reading from client socket", e);
        throw e;
    }
}
```
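The while (netlen == MAX_PACKET_LENGTH) loop exists because MySQL splits a payload larger than 2^24 - 1 bytes across several packets. A packet whose length equals that maximum means another packet follows. Below is a minimal sketch of the reassembly over a plain InputStream. MultiPacketSketch is hypothetical. The extra maxPacketLength parameter exists only so that small inputs can exercise the continuation path.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;

// Hypothetical sketch of multi-packet reassembly; not canal code.
final class MultiPacketSketch {
    static final int MAX_PACKET_LENGTH = 0xFFFFFF; // 2^24 - 1, the largest body one packet can carry

    static byte[] readPayload(InputStream in) throws IOException {
        return readPayload(in, MAX_PACKET_LENGTH);
    }

    static byte[] readPayload(InputStream in, int maxPacketLength) throws IOException {
        DataInputStream data = new DataInputStream(in);
        ByteArrayOutputStream payload = new ByteArrayOutputStream();
        byte[] header = new byte[4];
        int len;
        do {
            data.readFully(header); // 3-byte little-endian length + 1-byte sequence number
            len = (header[0] & 0xFF) | ((header[1] & 0xFF) << 8) | ((header[2] & 0xFF) << 16);
            byte[] body = new byte[len];
            data.readFully(body);
            payload.write(body, 0, len);
        } while (len == maxPacketLength); // a full-size packet means another one follows
        return payload.toByteArray();
    }
}
```

When a payload is an exact multiple of the maximum, the sender appends a trailing packet of length 0. The do-while loop reads that empty packet and then stops.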

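The code above relies on a few key fields:

limit: the amount of valid data in the buffer. At the end of fetch(), origin is subtracted from it, so it then covers only the event data that starts at origin.

position: works as in NIO and advances after every read or write. For example, in a 10-byte buffer, reading 3 bytes moves position to 3, and reading 4 more moves it to 3 + 4 = 7.

origin: a fixed marker for where the event data begins. It is NET_HEADER_SIZE + 1, which skips the 4-byte packet header and the 1-byte status marker. In semi-sync mode it is NET_HEADER_SIZE + 3, which also skips the two semi-sync bytes.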
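Since position behaves like the position of java.nio.ByteBuffer, the JDK class can replay the example above. This is only an analogy, because canal's LogBuffer is its own class. PositionSketch is a hypothetical helper.

```java
import java.nio.ByteBuffer;

// Hypothetical helper illustrating how position advances with each relative read.
final class PositionSketch {
    static int positionAfterReads(int capacity, int... reads) {
        ByteBuffer buf = ByteBuffer.allocate(capacity); // position 0, limit = capacity
        for (int n : reads) {
            buf.get(new byte[n]); // relative bulk get: position += n
        }
        return buf.position();
    }
}
```

positionAfterReads(10, 3, 4) gives 7, matching the text. Reading past the limit would throw BufferUnderflowException.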

2) Decode the packet

```java
public LogEvent decode(LogBuffer buffer, LogContext context) throws IOException {
    final int limit = buffer.limit(); // length of the data fetched into the buffer

    // require a complete common event header (its size is fixed by the MySQL binlog format)
    if (limit >= FormatDescriptionLogEvent.LOG_EVENT_HEADER_LEN) {
        LogHeader header = new LogHeader(buffer, context.getFormatDescription());

        final int len = header.getEventLen();
        if (limit >= len) {
            LogEvent event;

            /* Checking binary-log's header */
            if (handleSet.get(header.getType())) {
                buffer.limit(len);
                try {
                    /* Decoding binary-log to event */
                    event = decode(buffer, header, context);
                    if (event != null) {
                        event.setSemival(buffer.semival);
                    }
                } catch (IOException e) {
                    if (logger.isWarnEnabled()) {
                        logger.warn("Decoding " + LogEvent.getTypeName(header.getType()) + " failed from: "
                                    + context.getLogPosition(), e);
                    }
                    throw e;
                } finally {
                    buffer.limit(limit); /* Restore limit */
                }
            } else {
                /* Ignore unsupported binary-log. */
                event = new UnknownLogEvent(header);
            }

            /* consume this binary-log. */
            buffer.consume(len);
            return event;
        }
    }

    /* Rewind buffer's position to 0. */
    buffer.rewind();
    return null;
}
```

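decode() first takes the body length (limit). It then checks that the buffer holds at least a full common event header (LOG_EVENT_HEADER_LEN, 19 bytes in binlog format v4) and a complete event of eventLen bytes. If both checks pass, it decodes the event, or wraps an unsupported type in UnknownLogEvent, and consumes len bytes. Otherwise it rewinds the buffer and returns null.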
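The same framing rule can be sketched on a plain byte array that holds back-to-back events. Read event_len at offset 9 of each 19-byte common header, emit each complete event, and stop at an incomplete tail. Stopping at the tail corresponds to decode() returning null. EventFramingSketch is a hypothetical class and ignores checksums.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of the "enough bytes for a header, then for the whole event" check.
final class EventFramingSketch {
    static final int LOG_EVENT_HEADER_LEN = 19; // common header size in binlog v4
    static final int EVENT_LEN_OFFSET = 9;      // event_len field inside the common header

    static List<byte[]> split(byte[] buf) {
        List<byte[]> events = new ArrayList<>();
        int pos = 0;
        while (buf.length - pos >= LOG_EVENT_HEADER_LEN) {
            int p = pos + EVENT_LEN_OFFSET;
            long eventLen = (buf[p] & 0xFFL) | ((buf[p + 1] & 0xFFL) << 8)
                    | ((buf[p + 2] & 0xFFL) << 16) | ((buf[p + 3] & 0xFFL) << 24);
            if (eventLen < LOG_EVENT_HEADER_LEN) {
                throw new IllegalArgumentException("corrupt event_len " + eventLen);
            }
            if (buf.length - pos < eventLen) {
                break; // incomplete event: wait for more data, like decode() returning null
            }
            events.add(Arrays.copyOfRange(buf, pos, pos + (int) eventLen));
            pos += (int) eventLen; // like buffer.consume(len)
        }
        return events;
    }
}
```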

```java
public LogHeader(LogBuffer buffer, FormatDescriptionLogEvent descriptionEvent) {
    when = buffer.getUint32();
    type = buffer.getUint8(); // LogEvent.EVENT_TYPE_OFFSET;
    serverId = buffer.getUint32(); // LogEvent.SERVER_ID_OFFSET;
    eventLen = (int) buffer.getUint32(); // LogEvent.EVENT_LEN_OFFSET;

    if (descriptionEvent.binlogVersion == 1) {
        logPos = 0;
        flags = 0;
        return;
    }

    /* 4.0 or newer */
    logPos = buffer.getUint32(); // LogEvent.LOG_POS_OFFSET
    /*
     * If the log is 4.0 (so here it can only be a 4.0 relay log read by the
     * SQL thread or a 4.0 master binlog read by the I/O thread), log_pos is
     * the beginning of the event: we transform it into the end of the
     * event, which is more useful. But how do you know that the log is 4.0:
     * you know it if description_event is version 3 *and* you are not
     * reading a Format_desc (remember that mysqlbinlog starts by assuming
     * that 5.0 logs are in 4.0 format, until it finds a Format_desc).
     */
    if (descriptionEvent.binlogVersion == 3 && type < LogEvent.FORMAT_DESCRIPTION_EVENT && logPos != 0) {
        /*
         * If log_pos=0, don't change it. log_pos==0 is a marker to mean
         * "don't change rli->group_master_log_pos" (see
         * inc_group_relay_log_pos()). As it is unreal log_pos, adding the
         * event len's is nonsense. For example, a fake Rotate event should
         * not have its log_pos (which is 0) changed or it will modify
         * Exec_master_log_pos in SHOW SLAVE STATUS, displaying a nonsense
         * value of (a non-zero offset which does not exist in the master's
         * binlog, so which will cause problems if the user uses this value
         * in CHANGE MASTER).
         */
        logPos += eventLen; /* purecov: inspected */
    }

    flags = buffer.getUint16(); // LogEvent.FLAGS_OFFSET
    if ((type == LogEvent.FORMAT_DESCRIPTION_EVENT) || (type == LogEvent.ROTATE_EVENT)) {
        /*
         * These events always have a header which stops here (i.e. their
         * header is FROZEN).
         */
        /*
         * Initialization to zero of all other Log_event members as they're
         * not specified. Currently there are no such members; in the future
         * there will be an event UID (but Format_description and Rotate
         * don't need this UID, as they are not propagated through
         * --log-slave-updates (remember the UID is used to not play a query
         * twice when you have two masters which are slaves of a 3rd
         * master). Then we are done.
         */
        if (type == LogEvent.FORMAT_DESCRIPTION_EVENT) {
            int commonHeaderLen = buffer.getUint8(FormatDescriptionLogEvent.LOG_EVENT_MINIMAL_HEADER_LEN
                                                  + FormatDescriptionLogEvent.ST_COMMON_HEADER_LEN_OFFSET);
            buffer.position(commonHeaderLen + FormatDescriptionLogEvent.ST_SERVER_VER_OFFSET);
            String serverVersion = buffer.getFixString(FormatDescriptionLogEvent.ST_SERVER_VER_LEN); // ST_SERVER_VER_OFFSET
            int[] versionSplit = new int[] { 0, 0, 0 };
            FormatDescriptionLogEvent.doServerVersionSplit(serverVersion, versionSplit);
            checksumAlg = LogEvent.BINLOG_CHECKSUM_ALG_UNDEF;
            if (FormatDescriptionLogEvent.versionProduct(versionSplit) >= FormatDescriptionLogEvent.checksumVersionProduct) {
                buffer.position(eventLen - LogEvent.BINLOG_CHECKSUM_LEN - LogEvent.BINLOG_CHECKSUM_ALG_DESC_LEN);
                checksumAlg = buffer.getUint8();
            }

            processCheckSum(buffer);
        }
        return;
    }

    /*
     * CRC verification by SQL and Show-Binlog-Events master side. The
     * caller has to provide @description_event->checksum_alg to be the last
     * seen FD's (A) descriptor. If event is FD the descriptor is in it.
     * Notice, FD of the binlog can be only in one instance and therefore
     * Show-Binlog-Events executing master side thread needs just to know
     * the only FD's (A) value - whereas RL can contain more. In the RL
     * case, the alg is kept in FD_e (@description_event) which is reset to
     * the newer read-out event after its execution with possibly new alg
     * descriptor. Therefore in a typical sequence of RL: {FD_s^0, FD_m,
     * E_m^1} E_m^1 will be verified with (A) of FD_m. See legends
     * definition on MYSQL_BIN_LOG::relay_log_checksum_alg docs lines
     * (log.h). Notice, a pre-checksum FD version forces alg :=
     * BINLOG_CHECKSUM_ALG_UNDEF.
     */
    checksumAlg = descriptionEvent.getHeader().checksumAlg; // fetch checksum alg
    processCheckSum(buffer);

    /* otherwise, go on with reading the header from buf (nothing now) */
}
```

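The type field of the common header tells which kind of event MySQL has sent. It is the byte at offset 4, immediately after the 4-byte timestamp.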
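The sketch below reads the whole 19-byte v4 common header with java.nio.ByteBuffer in little-endian order. It follows the same field order as LogHeader: timestamp, type, server_id, event_len, log_pos, flags. CommonHeaderSketch is hypothetical. It assumes a v4 header and skips the binlogVersion checks that LogHeader performs.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical sketch of the binlog v4 common header layout.
final class CommonHeaderSketch {
    final long timestamp;
    final int type;
    final long serverId;
    final long eventLen;
    final long logPos;
    final int flags;

    CommonHeaderSketch(byte[] event) {
        ByteBuffer b = ByteBuffer.wrap(event).order(ByteOrder.LITTLE_ENDIAN);
        timestamp = b.getInt() & 0xFFFFFFFFL; // offset 0, 4 bytes
        type = b.get() & 0xFF;                // offset 4, 1 byte: the event type
        serverId = b.getInt() & 0xFFFFFFFFL;  // offset 5, 4 bytes
        eventLen = b.getInt() & 0xFFFFFFFFL;  // offset 9, 4 bytes
        logPos = b.getInt() & 0xFFFFFFFFL;    // offset 13, 4 bytes: end position of this event
        flags = b.getShort() & 0xFFFF;        // offset 17, 2 bytes
    }
}
```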

2.1) For the type of the first event canal receives and the rules for parsing it, see https://blog.csdn.net/skycanf/article/details/80742046

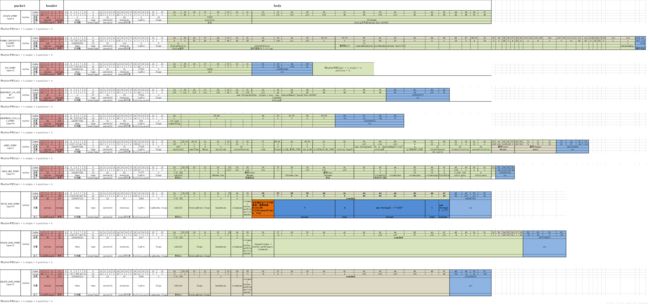

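The events MySQL pushes fall mainly into the following types. Each is received as the abstract LogEvent class, and the matching implementation is invoked through the strategy pattern: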

Here are two examples first:

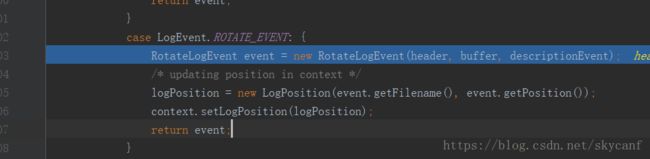

2.1) The first event, a Rotate event, mainly establishes the binlog file name and the current position.
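A Rotate event body consists of an 8-byte position followed by the binlog file name. The sketch below assumes the event bytes begin with the 19-byte common header. Whether a 4-byte CRC32 trailer is present depends on the checksum setting, so the sketch takes it as a flag. RotateEventSketch is a hypothetical class.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch of the Rotate event body: position (8 bytes) + file name.
final class RotateEventSketch {
    static final int COMMON_HEADER_LEN = 19;
    static final int CHECKSUM_LEN = 4; // CRC32 trailer, present only when checksums are enabled

    final long position;
    final String fileName;

    RotateEventSketch(byte[] event, boolean hasChecksum) {
        ByteBuffer b = ByteBuffer.wrap(event).order(ByteOrder.LITTLE_ENDIAN);
        b.position(COMMON_HEADER_LEN);
        position = b.getLong(); // where to start reading in the named binlog file
        int nameLen = event.length - b.position() - (hasChecksum ? CHECKSUM_LEN : 0);
        fileName = new String(event, b.position(), nameLen, StandardCharsets.UTF_8);
    }
}
```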

Result:

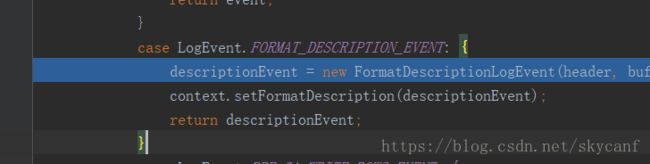

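2.2) The second event decoded is the Format Description event. It establishes the binlog version, the server version string, and the timestamp.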
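The Format Description event body begins with four fields:

- binlog_version (2 bytes)
- server_version (50 bytes, NUL-padded)
- create_timestamp (4 bytes)
- common header length (1 byte), the value LogHeader reads via ST_COMMON_HEADER_LEN_OFFSET

The sketch below reads those fields. FormatDescriptionSketch is hypothetical and stops before the post-header length array and the checksum fields.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch of the fixed part of a Format Description event body.
final class FormatDescriptionSketch {
    static final int COMMON_HEADER_LEN = 19;
    static final int SERVER_VER_LEN = 50;

    final int binlogVersion;
    final String serverVersion;
    final long createTimestamp;
    final int commonHeaderLen;

    FormatDescriptionSketch(byte[] event) {
        ByteBuffer b = ByteBuffer.wrap(event).order(ByteOrder.LITTLE_ENDIAN);
        b.position(COMMON_HEADER_LEN);
        binlogVersion = b.getShort() & 0xFFFF;      // 2 bytes
        byte[] ver = new byte[SERVER_VER_LEN];
        b.get(ver);                                 // 50 bytes, NUL-padded
        int end = 0;
        while (end < ver.length && ver[end] != 0) {
            end++;
        }
        serverVersion = new String(ver, 0, end, StandardCharsets.US_ASCII);
        createTimestamp = b.getInt() & 0xFFFFFFFFL; // 4 bytes
        commonHeaderLen = b.get() & 0xFF;           // 1 byte
    }
}
```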

III. Event filtering in sink

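For each decoded event:

```java
event.setSemival(buffer.semival); // semi-synchronous replication flag
buffer.limit(limit);              // restore the buffer limit
buffer.consume(len);              // drop this event's bytes from the buffer

if (!func.sink(event)) {
    break;
}
```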
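The fetch, decode, sink cycle that these lines belong to can be sketched with JDK functional interfaces. Everything in the sketch is a stand-in. An Iterator plays the fetcher, a Function plays the decoder, and a Predicate plays the sink. Skipping null results is a simplification made here, not necessarily canal's behavior. DumpLoopSketch is hypothetical.

```java
import java.util.Iterator;
import java.util.function.Function;
import java.util.function.Predicate;

// Hypothetical sketch of the dump loop: fetch a packet, decode it, hand the event to the sink.
final class DumpLoopSketch {
    static <P, E> int run(Iterator<P> fetcher, Function<P, E> decoder, Predicate<E> sink) {
        int delivered = 0;
        while (fetcher.hasNext()) {            // stands in for fetcher.fetch()
            E event = decoder.apply(fetcher.next());
            if (event == null) {
                continue;                      // simplification: ignore undecodable input
            }
            delivered++;
            if (!sink.test(event)) {           // sink returned false: stop the loop
                break;
            }
        }
        return delivered;
    }
}
```

Because the sink returns a boolean, it can stop the dump (for example when the parser is no longer running) without throwing an exception.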

```java
public boolean sink(EVENT event) {
    try {
        CanalEntry.Entry entry = parseAndProfilingIfNecessary(event, false);

        if (!running) {
            return false;
        }

        if (entry != null) {
            exception = null; // normal data is flowing, so clear the exception
            transactionBuffer.add(entry);
            // record the corresponding position
            this.lastPosition = buildLastPosition(entry);
            // record when data was last received
            lastEntryTime = System.currentTimeMillis();
        }
        return running;
    } // ... the catch clause is not included in this excerpt
}
```

```java
protected CanalEntry.Entry parseAndProfilingIfNecessary(EVENT bod, boolean isSeek) throws Exception {
    long startTs = -1;
    boolean enabled = getProfilingEnabled();
    if (enabled) {
        startTs = System.currentTimeMillis();
    }
    CanalEntry.Entry event = binlogParser.parse(bod, isSeek);
    if (enabled) {
        this.parsingInterval = System.currentTimeMillis() - startTs;
    }

    if (parsedEventCount.incrementAndGet() < 0) {
        parsedEventCount.set(0);
    }
    return event;
}
```
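Below is a generic sketch of the same pattern. It times the parse only when profiling is enabled, and it resets the event counter if the counter overflows to a negative value. ProfilingSketch is hypothetical. It uses System.nanoTime, which is monotonic, where the original uses System.currentTimeMillis.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Function;

// Hypothetical sketch of optional profiling around a parse function.
final class ProfilingSketch<T, R> {
    private final Function<T, R> parser; // stands in for binlogParser.parse
    private final boolean enabled;       // stands in for getProfilingEnabled()
    private final AtomicLong parsedCount = new AtomicLong();
    private volatile long lastIntervalMillis = -1;

    ProfilingSketch(Function<T, R> parser, boolean enabled) {
        this.parser = parser;
        this.enabled = enabled;
    }

    R parse(T input) {
        long start = enabled ? System.nanoTime() : 0L;
        R result = parser.apply(input);
        if (enabled) {
            lastIntervalMillis = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
        }
        // reset to 0 instead of staying negative after the counter overflows
        if (parsedCount.incrementAndGet() < 0) {
            parsedCount.set(0);
        }
        return result;
    }

    long parsedCount() {
        return parsedCount.get();
    }

    long lastIntervalMillis() {
        return lastIntervalMillis;
    }
}
```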

sink filters and dispatches events through binlogParser.parse, using these rules:

```java
@Override
public Entry parse(LogEvent logEvent, boolean isSeek) throws CanalParseException {
    if (logEvent == null || logEvent instanceof UnknownLogEvent) {
        return null;
    }

    int eventType = logEvent.getHeader().getType();
    switch (eventType) {
        case LogEvent.ROTATE_EVENT:
            binlogFileName = ((RotateLogEvent) logEvent).getFilename();
            break;
        case LogEvent.QUERY_EVENT:
            return parseQueryEvent((QueryLogEvent) logEvent, isSeek);
        case LogEvent.XID_EVENT:
            return parseXidEvent((XidLogEvent) logEvent);
        case LogEvent.TABLE_MAP_EVENT:
            break;
        case LogEvent.WRITE_ROWS_EVENT_V1:
        case LogEvent.WRITE_ROWS_EVENT:
            return parseRowsEvent((WriteRowsLogEvent) logEvent);
        case LogEvent.UPDATE_ROWS_EVENT_V1:
        case LogEvent.UPDATE_ROWS_EVENT:
            return parseRowsEvent((UpdateRowsLogEvent) logEvent);
        case LogEvent.DELETE_ROWS_EVENT_V1:
        case LogEvent.DELETE_ROWS_EVENT:
            return parseRowsEvent((DeleteRowsLogEvent) logEvent);
        case LogEvent.ROWS_QUERY_LOG_EVENT:
            return parseRowsQueryEvent((RowsQueryLogEvent) logEvent);
        case LogEvent.ANNOTATE_ROWS_EVENT:
            return parseAnnotateRowsEvent((AnnotateRowsEvent) logEvent);
        case LogEvent.USER_VAR_EVENT:
            return parseUserVarLogEvent((UserVarLogEvent) logEvent);
        case LogEvent.INTVAR_EVENT:
            return parseIntrvarLogEvent((IntvarLogEvent) logEvent);
        case LogEvent.RAND_EVENT:
            return parseRandLogEvent((RandLogEvent) logEvent);
        case LogEvent.GTID_LOG_EVENT:
            return parseGTIDLogEvent((GtidLogEvent) logEvent);
        default:
            break;
    }

    return null;
}
```
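The dispatch can be summarized as a plain switch over the numeric type codes. The values below are the MySQL binlog event type codes, which canal's LogEvent constants share. The returned labels are descriptive only. EventRouteSketch is hypothetical, and it covers only the types that appear in the trace below.

```java
// Hypothetical summary of parse() dispatch by numeric event type.
final class EventRouteSketch {
    static final int QUERY_EVENT = 2;
    static final int ROTATE_EVENT = 4;
    static final int FORMAT_DESCRIPTION_EVENT = 15;
    static final int XID_EVENT = 16;
    static final int TABLE_MAP_EVENT = 19;
    static final int WRITE_ROWS_EVENT_V1 = 23;
    static final int UPDATE_ROWS_EVENT_V1 = 24;
    static final int DELETE_ROWS_EVENT_V1 = 25;
    static final int WRITE_ROWS_EVENT = 30;
    static final int UPDATE_ROWS_EVENT = 31;
    static final int DELETE_ROWS_EVENT = 32;
    static final int GTID_LOG_EVENT = 33;

    // Returns a label for the kind of entry parse() builds, or null when it builds none.
    static String route(int type) {
        switch (type) {
            case QUERY_EVENT:
                return "query";
            case XID_EVENT:
                return "transaction end";
            case WRITE_ROWS_EVENT_V1:
            case WRITE_ROWS_EVENT:
                return "insert rows";
            case UPDATE_ROWS_EVENT_V1:
            case UPDATE_ROWS_EVENT:
                return "update rows";
            case DELETE_ROWS_EVENT_V1:
            case DELETE_ROWS_EVENT:
                return "delete rows";
            case GTID_LOG_EVENT:
                return "gtid";
            case ROTATE_EVENT:             // parse() only records the new binlog file name
            case TABLE_MAP_EVENT:          // parse() just breaks
            case FORMAT_DESCRIPTION_EVENT: // falls through to default in parse()
            default:
                return null;
        }
    }
}
```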

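3.1 event = 0 (UNKNOWN_EVENT)

3.2 event = 4 (ROTATE_EVENT)

3.3 event = 15 (FORMAT_DESCRIPTION_EVENT): returns null

3.4 event = 16 (XID_EVENT)

3.5 event = 34 (ANONYMOUS_GTID_LOG_EVENT): returns null

3.6 event = 2 (QUERY_EVENT): returns the database and table name, creation time, character set, a storeValue of 0, and the execution time

3.7 event = 19 (TABLE_MAP_EVENT): break, returns null

3.8 event = 30 (WRITE_ROWS_EVENT, insert)

3.9 event = 31 (UPDATE_ROWS_EVENT, update)

3.10 event = 34 (ANONYMOUS_GTID_LOG_EVENT)

3.11 event = 32 (DELETE_ROWS_EVENT, delete)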

The next article covers the filtering applied to each event.