I. Preparation

1. Planning

Domain name: node1.test.com IP: 172.16.12.101 hostname: node1

Domain name: node2.test.com IP: 172.16.12.102 hostname: node2

NFS server: IP: 172.16.12.103 hostname: nfs

Virtual IP (VIP): IP: 172.16.12.100

2. Install httpd on the node servers

[root@node1 ~]# yum -y install httpd

[root@node1 ~]# echo "node1.test.com" > /var/www/html/index.html //add a test page at /var/www/html/index.html

[root@node1 ~]# service httpd stop //stop the httpd service

[root@node1 ~]# chkconfig httpd off //do not start at boot; the cluster will manage httpd

Install NFS on the NFS server

[root@nfs ~]# yum install -y rpcbind nfs-utils

[root@nfs ~]# mkdir /web

[root@nfs ~]# chmod 777 /web

[root@nfs ~]# vim /etc/exports //add the following lines

/web 172.16.12.101(rw,sync,no_root_squash)

/web 172.16.12.102(rw,sync,no_root_squash)

[root@nfs ~]# service nfs restart
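The two export lines differ only in the client address, so they can be generated from the node list. The following is a minimal sketch, assuming a POSIX shell; `gen_exports` is a hypothetical helper (not part of nfs-utils), and it only prints the lines rather than modifying /etc/exports.

```shell
#!/bin/sh
# Hypothetical helper: print one /etc/exports line per client IP.
# Usage: gen_exports <export_dir> <client_ip>...
gen_exports() {
    dir=$1
    shift
    for ip in "$@"; do
        # Same options as in the article: rw, sync, no_root_squash
        printf '%s %s(rw,sync,no_root_squash)\n' "$dir" "$ip"
    done
}

# Print the entries for the two cluster nodes
gen_exports /web 172.16.12.101 172.16.12.102
```

After the lines are in /etc/exports, `exportfs -r` re-exports the directories, and `showmount -e 172.16.12.103` run from a node should list /web.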

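3. No STONITH resources are defined because this setup has no STONITH device, so disable the stonith-enabled property first.

Also, with only two nodes the cluster cannot satisfy the quorum voting rule once one node is down, so ignore quorum for now.

[root@node1 ~]# crm

crm(live)# configure

crm(live)configure# property stonith-enabled=false //disable STONITH

crm(live)configure# property no-quorum-policy=ignore //ignore loss of quorum

crm(live)configure# verify //check the configuration; no output means it is valid

crm(live)configure# commit //commit; the changes are written to the cluster configuration, and can be changed later with the edit command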
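The same two properties can also be kept in a file and loaded in one step instead of being typed interactively. This is a sketch under assumptions: `CRM_FILE` and `APPLY` are illustrative variable names (not crmsh settings), and the script only touches a live cluster when `APPLY=1` is set. It relies on the `load update` subcommand of `crm configure`; check that your crmsh version provides it.

```shell
#!/bin/sh
# Write the two cluster properties to a file that crm can load in one step.
# CRM_FILE and APPLY are illustrative names, not crmsh settings.
CRM_FILE=${CRM_FILE:-./cluster-base.crm}

cat > "$CRM_FILE" <<'EOF'
property stonith-enabled=false
property no-quorum-policy=ignore
EOF

# Show what would be loaded
cat "$CRM_FILE"

# Only touch a live cluster when explicitly asked to.
if [ "${APPLY:-0}" = "1" ]; then
    crm configure load update "$CRM_FILE"
fi
```

Keeping the settings in a file makes it easy to review them or apply them again on a rebuilt cluster.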

4. Define the resources

crm(live)configure# primitive webvip ocf:heartbeat:IPaddr params ip=172.16.12.100 op monitor interval=30s timeout=20s on-fail=restart

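//Defines a primitive resource named webvip. The resource agent class and provider are ocf:heartbeat, and the agent is IPaddr. params introduces the agent parameters and op introduces an operation: here monitor checks the resource every 30s with a 20s timeout, and on-fail=restart restarts the resource when the check fails.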

crm(live)configure# verify

crm(live)configure# commit

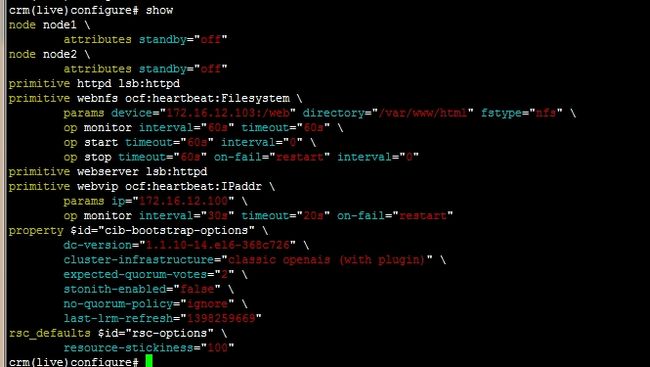

crm(live)configure# show

node node1 \

attributes standby="off"

node node2 \

attributes standby="off"

primitive httpd lsb:httpd

primitive webvip ocf:heartbeat:IPaddr \

params ip="172.16.12.100" \

op monitor interval="30s" timeout="20s" on-fail="restart"

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore" \

last-lrm-refresh="1398259669"

rsc_defaults $id="rsc-options" \

resource-stickiness="100"

5. Define a filesystem mount resource

First enter ra to find the resource agent used for filesystems.

crm(live)# configure

crm(live)configure# ra

crm(live)configure ra# classes

lsb

ocf / heartbeat pacemaker

service

stonith

crm(live)configure ra# list ocf

CTDB ClusterMon Dummy Filesystem HealthCPU HealthSMART IPaddr IPaddr2 IPsrcaddr LVM MailTo Route SendArp Squid Stateful SysInfo SystemHealth VirtualDomain Xinetd apache conntrackd controld dhcpd ethmonitor exportfs mysql mysql-proxy named nfsserver nginx pgsql ping pingd postfix remote rsyncd rsyslog slapd symlink tomcat

crm(live)configure ra# providers Filesystem

heartbeat

This shows that the Filesystem resource agent is provided by ocf:heartbeat.

View the parameters of this resource agent:

crm(live)configure ra# meta ocf:heartbeat:Filesystem

Manages filesystem mounts (ocf:heartbeat:Filesystem)

Resource script for Filesystem. It manages a Filesystem on a shared storage medium.

The standard monitor operation of depth 0 (also known as probe) ... ...

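6. Define the NFS resource

crm(live)configure# primitive webnfs ocf:heartbeat:Filesystem params device="172.16.12.103:/web" directory="/var/www/html" fstype="nfs" op monitor interval=60s timeout=60s op start timeout=60s op stop timeout=60s on-fail=restart

//Defines a primitive resource named webnfs. The resource agent class and provider are ocf:heartbeat, and the agent is Filesystem. The params are device (the NFS share), directory (the mount point) and fstype (the filesystem type). op monitor defines a check that runs every 60s with a 60s timeout; op start and op stop set the start and stop timeouts to 60s; on-fail=restart restarts the resource on failure.

crm(live)configure# verify //check the configuration

crm(live)configure# commit //commit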
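Before handing the mount to the cluster, it can help to confirm by hand that the share mounts with the same three parameters. The sketch below only prints the corresponding `mount` command; `manual_mount_cmd` is a hypothetical helper, and the assumption is that the Filesystem agent's device, directory and fstype map onto a plain `mount -t` call for this simple NFS case.

```shell
#!/bin/sh
# The three params of the webnfs resource, as used in the article.
device="172.16.12.103:/web"
directory="/var/www/html"
fstype="nfs"

# Hypothetical helper: print (not run) the manual mount command that
# corresponds to those params, for a quick check by hand.
manual_mount_cmd() {
    printf 'mount -t %s %s %s\n' "$fstype" "$device" "$directory"
}

manual_mount_cmd
```

If you run the printed command on a node, unmount the directory again with `umount /var/www/html` before the cluster starts the webnfs resource, so the two do not compete for the mount point.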

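Define the web resource

crm(live)configure# primitive webserver lsb:httpd //defines a primitive resource named webserver; the resource agent class is lsb, the agent is httpd, and there are no other parameters

crm(live)configure# verify

crm(live)configure# commit

crm(live)configure# show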

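7. Resource groups and resource constraints:

Often several resources must run together on the same node. There are two ways to achieve this.

One is to define a resource group: adding the VIP and httpd to the same group makes them run on the same node.

The other is to define resource constraints that keep the resources on the same node. We start with the first method, the resource group.

Define the resource group:

A group binds several resources together so that they run as a unit.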

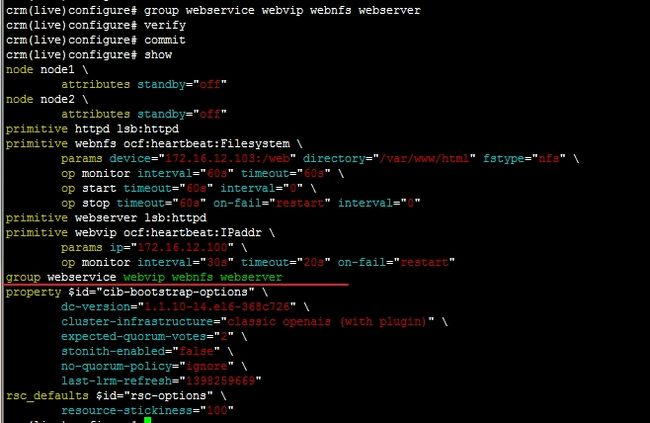

crm(live)configure# group webservice webvip webnfs webserver //defines a resource group named webservice

crm(live)configure# verify

crm(live)configure# commit

crm(live)configure# show
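A group keeps its members on one node and starts them in the listed order, which is roughly what a chain of pairwise colocation and order constraints expresses. The sketch below prints such a chain for comparison. `group_as_constraints` and the generated constraint names are hypothetical, and the output is an approximation of group behaviour for illustration, not something Pacemaker generates itself.

```shell
#!/bin/sh
# Hypothetical helper: print the pairwise constraints that approximate
# "group <name> r1 r2 r3 ..." for resources given in start order.
group_as_constraints() {
    prev=$1
    shift
    for rsc in "$@"; do
        # Place each member with its predecessor...
        printf 'colocation %s-with-%s inf: %s %s\n' "$rsc" "$prev" "$rsc" "$prev"
        # ...and start it only after the predecessor is up.
        printf 'order %s-then-%s mandatory: %s %s\n' "$prev" "$rsc" "$prev" "$rsc"
        prev=$rsc
    done
}

group_as_constraints webvip webnfs webserver
```

For three resources this prints four lines: two colocation constraints and two order constraints. The group form is shorter, while explicit constraints give finer control over scores.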

Another way to view the resources now in effect is status:

crm(live)configure# cd

crm(live)# status

Last updated: Wed Apr 30 17:05:42 2014

Last change: Wed Apr 30 16:56:02 2014 via cibadmin on node1

Stack: classic openais (with plugin)

Current DC: node1 - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

4 Resources configured

Online: [ node1 node2 ]

httpd (lsb:httpd): Started node1

Resource Group: webservice //the whole group runs on node2

webvip (ocf::heartbeat:IPaddr): Started node2

webnfs (ocf::heartbeat:Filesystem): Started node2

webserver (lsb:httpd): Started node2

Simulate a failure of node2 and see whether the resources move:

crm(live)node# standby node2

crm(live)node# cd

crm(live)# status

Last updated: Wed Apr 30 17:09:35 2014

Last change: Wed Apr 30 17:08:37 2014 via crm_attribute on node1

Stack: classic openais (with plugin)

Current DC: node2 - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

4 Resources configured

Node node2: standby

Online: [ node1 ]

httpd (lsb:httpd): Started node1

Resource Group: webservice //all resources in the group now run on node1; note: if your output differs, restarting corosync on node1 should make it display correctly

webvip (ocf::heartbeat:IPaddr): Started node1

webnfs (ocf::heartbeat:Filesystem): Started node1

webserver (lsb:httpd): Started node1

Define resource constraints:

First bring node2 back online:

crm(live)# node

crm(live)node# online node2

crm(live)node# cd

crm(live)# status

Last updated: Wed Apr 30 17:14:43 2014

Last change: Wed Apr 30 17:14:27 2014 via crm_attribute on node2

Stack: classic openais (with plugin)

Current DC: node2 - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

4 Resources configured

Online: [ node1 node2 ]

httpd (lsb:httpd): Started node1

Resource Group: webservice

webvip (ocf::heartbeat:IPaddr): Started node1

webnfs (ocf::heartbeat:Filesystem): Started node1

webserver (lsb:httpd): Started node1

Stop the group, then delete it:

crm(live)# resource

crm(live)resource# stop webservice

crm(live)resource# cd

crm(live)# configure

crm(live)configure# delete webservice

crm(live)configure# verify

crm(live)configure# commit

Define the colocation constraint:

crm(live)configure# colocation webserver-with-webnfs-webvip inf: webvip webnfs webserver //defines a constraint named webserver-with-webnfs-webvip; inf: is the score (inf means the resources must always stay together; a numeric value is also allowed)

crm(live)configure# verify

crm(live)configure# commit

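8. Define the resource start order:

crm(live)configure# order vip_before_webnfs_before_webserver mandatory: webvip webnfs webserver

//order: the ordering constraint command; vip_before_webnfs_before_webserver: the constraint name; mandatory: the kind of ordering (three kinds are available: Mandatory, Optional and Serialize); webvip webnfs webserver: the resource names. The order in which they are written matters, because the resources are started in the order listed.

crm(live)configure# verify

crm(live)configure# commit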

9. Define the location constraint:

crm(live)configure# location webvip_and_webnfs_and_webserver webvip 500: node2

//location: the location constraint command; webvip_and_webnfs_and_webserver: the constraint name; webvip 500: node2: which resource gets what score on which node

crm(live)configure# verify

crm(live)configure# commit

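10. Define default resource properties:

crm(live)configure# rsc_defaults resource-stickiness=100 //default stickiness of 100

crm(live)configure# verify

crm(live)configure# commit

Explanation: resource-stickiness sets the default stickiness of every resource in the cluster, that is, how strongly a resource prefers to stay on the node where it is currently running. Three resources are defined here (webvip, webnfs and webserver), each with a stickiness of 100, which adds up to 300. The location constraint defined earlier gives node2 a score of 500. Because 500 is greater than 300, the resources switch back to node2 when node2 goes down and later comes back online.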
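The comparison in this explanation can be written out as a small calculation. This is a simplified model of the article's reasoning only, not Pacemaker's actual placement algorithm; `failback_decision` is a hypothetical helper, and the second call uses a made-up stickiness of 200 to show the opposite outcome.

```shell
#!/bin/sh
# Simplified model of the article's reasoning, NOT Pacemaker's real
# placement algorithm: compare the summed stickiness of the resources
# against the location score of the preferred node.
# Usage: failback_decision <stickiness> <resource_count> <location_score>
failback_decision() {
    stay=$(( $1 * $2 ))   # total pull toward the current node
    move=$3               # pull toward the preferred node
    if [ "$move" -gt "$stay" ]; then
        echo "move"
    else
        echo "stay"
    fi
}

failback_decision 100 3 500   # article's values: 300 vs 500
failback_decision 200 3 500   # hypothetical values: 600 vs 500
```

With the article's values the model answers "move"; with the higher stickiness it answers "stay". Raising stickiness above the location score is therefore one way to avoid automatic failback.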

Test:

1. All resources are now running on node2

crm(live)configure# cd

crm(live)# status

Last updated: Wed Apr 30 17:36:14 2014

Last change: Wed Apr 30 17:29:53 2014 via cibadmin on node1

Stack: classic openais (with plugin)

Current DC: node2 - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

4 Resources configured

Online: [ node1 node2 ]

httpd (lsb:httpd): Started node1

webvip (ocf::heartbeat:IPaddr): Started node2

webnfs (ocf::heartbeat:Filesystem): Started node2

webserver (lsb:httpd): Started node2

2. Simulate a failure of node2

crm(live)# cd

crm(live)# node

crm(live)node# standby node2

crm(live)node# cd

crm(live)# status

Last updated: Wed Apr 30 17:37:49 2014

Last change: Wed Apr 30 17:37:44 2014 via crm_attribute on node1

Stack: classic openais (with plugin)

Current DC: node2 - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

4 Resources configured

Node node2: standby

Online: [ node1 ]

httpd (lsb:httpd): Started node1

webvip (ocf::heartbeat:IPaddr): Started node1

webnfs (ocf::heartbeat:Filesystem): Started node1

webserver (lsb:httpd): Started node1

3. Bring node2 back online and see whether the resources return to node2

crm(live)# cd

crm(live)# node

crm(live)node# online node2

crm(live)node# cd

crm(live)# status

Because node2 has the higher score, the resources moved back to node2.
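To confirm where each resource ended up without reading the whole status listing, the "Started" lines can be filtered out. This is a minimal sketch: `started_on` is a hypothetical helper, it assumes the status line format shown in this article (`name (agent): Started node`), and the sample input is copied from the status output of test 1.

```shell
#!/bin/sh
# Hypothetical helper: read `crm status` text on stdin and print
# "<resource> <node>" for every line of the form
# "name (agent): Started node".
started_on() {
    # NF >= 2 guards against empty lines before looking at $(NF-1)
    awk 'NF >= 2 && $(NF-1) == "Started" { print $1, $NF }'
}

# Sample input copied from the status output of test 1.
started_on <<'EOF'
Online: [ node1 node2 ]
webvip (ocf::heartbeat:IPaddr): Started node2
webnfs (ocf::heartbeat:Filesystem): Started node2
webserver (lsb:httpd): Started node2
EOF
```

On a live cluster the same filter can be fed directly, for example `crm status | started_on`, after bringing node2 back online.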