20. Python Web Scraping: Using CrawlSpider to Crawl Every Page of the 凤凰周刊 (Phoenix Weekly) News Column

CrawlSpider: a class, and a subclass of Spider

Ways to crawl an entire site:

- With Spider: issue every request manually

- With CrawlSpider

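Common LinkExtractor parameters:

- allow: URLs that match the given regular expression(s) are extracted; if it is empty, every URL matches.

- deny: URLs that match the given regular expression(s) are never extracted (takes precedence over allow).

- allow_domains: the domains whose links will be extracted.

- deny_domains: the domains whose links will never be extracted.

- restrict_xpaths: XPath expressions that work together with allow to filter links; only URLs found inside the regions selected by the XPath are extracted.

Notes: when an extracted URL is incomplete (relative), CrawlSpider completes it automatically before sending the request. Do not define a parse method in a CrawlSpider, because the class uses parse internally to implement its own rule handling. The responses for the URLs extracted by a link extractor are handed to the callback for processing.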
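To make the interplay of allow and deny concrete, here is a minimal sketch that imitates the filtering with the standard library only. The helper `filter_links` and the sample URLs are hypothetical names made up for this illustration; this is not Scrapy's own code, just the same idea: an empty allow accepts everything, and deny always wins.

```python
import re


def filter_links(urls, allow=(), deny=()):
    """Keep URLs that match any allow pattern and no deny pattern.

    An empty allow accepts every URL; a deny match always rejects.
    Stdlib stand-in for illustration only, not Scrapy's implementation.
    """
    allow_res = [re.compile(p) for p in allow]
    deny_res = [re.compile(p) for p in deny]
    kept = []
    for url in urls:
        if allow_res and not any(r.search(url) for r in allow_res):
            continue  # allow is set and nothing matched
        if any(r.search(url) for r in deny_res):
            continue  # deny takes precedence over allow
        kept.append(url)
    return kept


urls = [
    "http://www.ifengweekly.com/list.php?lmid=5&page=1",
    "http://www.ifengweekly.com/list.php?lmid=5&page=2",
    "http://www.ifengweekly.com/list.php?lmid=39&page=1",
]
pages = filter_links(urls, allow=[r"lmid=5&page=\d+"], deny=[r"page=1$"])
print(pages)
```

Only the second URL survives: the first is allowed but then denied, and the third never matches allow.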

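Common spiders.Rule parameters:

- link_extractor: a LinkExtractor object that defines which links to extract.

- callback: the callback function that is invoked for each link obtained from link_extractor.

- follow: a boolean that specifies whether the links extracted from the response by this rule should be followed. If callback is None, follow defaults to True; otherwise it defaults to False.

- process_links: names the spider method that is called with the list of links obtained from link_extractor; it is mainly used to filter URLs.

- process_request: names the spider method that is called for every request extracted by this rule; it is used to filter requests.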
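As a rough illustration of what a process_links hook can look like, the sketch below drops pagination links beyond a page limit. Everything here is an assumption made for the example: the function name `limit_pages`, the `MAX_PAGE` cut-off, and the `SimpleNamespace` objects that stand in for Scrapy's link objects (only their `url` attribute is used).

```python
import re
from types import SimpleNamespace

MAX_PAGE = 3  # hypothetical cut-off, chosen only for this example


def limit_pages(links):
    """process_links-style hook: keep links whose page number is <= MAX_PAGE."""
    kept = []
    for link in links:
        match = re.search(r"page=(\d+)", link.url)
        # Links without a page number pass through untouched
        if match is None or int(match.group(1)) <= MAX_PAGE:
            kept.append(link)
    return kept


# Stand-ins for extracted links; only the .url attribute matters here
links = [
    SimpleNamespace(url=f"http://www.ifengweekly.com/list.php?lmid=5&page={n}")
    for n in range(1, 6)
]
kept_urls = [link.url for link in limit_pages(links)]
print(kept_urls)
```

In a real spider this would be written as a method taking (self, links), and its name would be passed to the rule through the process_links parameter.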

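Using CrawlSpider:

- Create a project

- cd xxx

- Create the spider file (CrawlSpider):

    - scrapy genspider -t crawl xxx www.yyy.com

- Link extractor:

    - Extracts links according to the specified rule (allow="regex")

- Rule parser:

    - Parses the links extracted by the link extractor according to the specified rule (callback)

- follow=True:

    - Keeps applying the link extractor to the pages behind the links it has already extracted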

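1. Requirement

Scrape the data from every page of the 凤凰周刊 (Phoenix Weekly) | 新闻资讯 (News) column.

[Preparation]

Create the project: scrapy startproject sunPro

Create the CrawlSpider file: scrapy genspider -t crawl sun www.xxx.com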

Edit the settings.py configuration file:

    LOG_LEVEL = 'ERROR'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    USER_AGENT = 'Mozilla/...

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

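2. Analysis

(1) Get the links to every page of the column

Use the browser's developer tools to inspect the attributes of the pagination tags.

Every pagination tag carries a link of the form '/list.php?lmid=5&page=num'.

So the regular expression is: lmid=5&page=\d+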

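Background: in a regular expression, \d+ matches one or more consecutive digits, so the pattern above covers page=1, page=2, and so on.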
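A quick way to check the pattern before putting it into a spider is to try it against a few sample links with the standard `re` module. The sample paths below are made up for illustration. Note that the column ID is part of the pattern, so a link from a different column (for example lmid=39) does not match.

```python
import re

PAGE_LINK = re.compile(r"lmid=5&page=\d+")

samples = [
    "/list.php?lmid=5&page=1",
    "/list.php?lmid=5&page=27",
    "/list.php?lmid=39&page=1",  # different column ID
    "/list.php?lmid=5&page=",    # no digits after page=
]

# search() looks for the pattern anywhere in the string
matches = {s: bool(PAGE_LINK.search(s)) for s in samples}
for sample, ok in matches.items():
    print(sample, ok)
```

The first two samples match and the last two do not, which is why the column ID in start_urls and in the allow pattern has to be the same.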

First, crawl all the list pages.

sun.py

    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule


    class SunSpider(CrawlSpider):
        name = 'sun'
        # allowed_domains = ['www.xxx.com']
        # The lmid here must be the same as in the allow pattern below
        start_urls = ['http://www.ifengweekly.com/list.php?lmid=5&page=1']

        # Link extractor: extracts links according to the specified rule (allow="regex")
        link = LinkExtractor(allow=r'lmid=5&page=\d+')

        # Rule parser: parses the extracted links with the specified callback.
        # follow=True makes the crawl reach every pagination page.
        rules = (
            Rule(link, callback='parse_item', follow=True),
        )

        def parse_item(self, response):
            print(response)

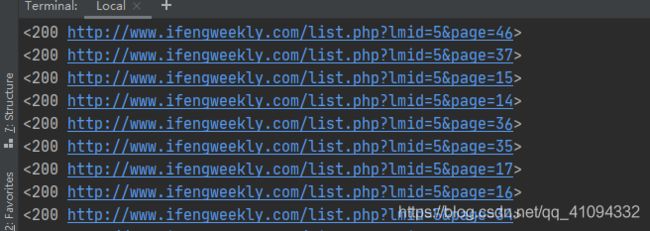

All pages were crawled successfully.

(2) Scrape the title and time

sun.py

    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule


    class SunSpider(CrawlSpider):
        name = 'sun'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['http://www.ifengweekly.com/list.php?lmid=5&page=1']

        # Link extractor: extracts links according to the specified rule (allow="regex")
        link = LinkExtractor(allow=r'lmid=5&page=\d+')

        # Rule parser: parses the extracted links with the specified callback
        rules = (
            Rule(link, callback='parse_item', follow=True),
        )

        def parse_item(self, response):
            # Each article on a list page sits in its own div.column block
            div_list = response.xpath('//*[@id="hot"]/div[@class="column"]')
            for div in div_list:
                title = div.xpath('./h1/a/text()').extract_first()
                time = div.xpath('./p/text()').extract_first()
                number = div.xpath('./p/a/text()').extract_first()
                print(title, time, number)

(3) Scrape the detail-page links

Locate where the link sits on the list page, then write a function that parses the detail page and hand the response for each detail URL to that function.

    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule


    class SunSpider(CrawlSpider):
        name = 'sun'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['http://www.ifengweekly.com/list.php?lmid=5&page=1']

        # Link extractor: extracts links according to the specified rule (allow="regex")
        link = LinkExtractor(allow=r'lmid=5&page=\d+')
        # Detail-page link extractor
        # detail_link = LinkExtractor(allow=r'')

        # Rule parser: parses the extracted links with the specified callback.
        # follow=True makes the crawl reach every pagination page.
        rules = (
            Rule(link, callback='parse_item', follow=True),
        )

        def parse_item(self, response):
            div_list = response.xpath('//*[@id="hot"]/div[@class="column"]')
            for div in div_list:
                title = div.xpath('./h1/a/text()').extract_first()
                time = div.xpath('./p/text()').extract_first()
                number = div.xpath('./p/a/text()').extract_first()
                # Build the absolute detail-page URL from the relative href
                detail_url = 'http://www.ifengweekly.com/' + div.xpath('./h1/a/@href').extract_first()
                # print(detail_url)
                yield scrapy.Request(url=detail_url, callback=self.parse_detail)

        def parse_detail(self, response):
            print(response)
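The spider above builds the detail URL by string concatenation, which works as long as every href is a relative path without a leading slash. As a hedged alternative, the sketch below compares concatenation with `urllib.parse.urljoin` from the standard library. The href values are hypothetical; the real site's link format may differ.

```python
from urllib.parse import urljoin

page_url = "http://www.ifengweekly.com/list.php?lmid=5&page=1"

# Hypothetical href shapes: relative, root-relative, and already absolute
hrefs = [
    "detil.php?id=1",
    "/detil.php?id=1",
    "http://www.ifengweekly.com/detil.php?id=1",
]

joined = [urljoin(page_url, h) for h in hrefs]
concatenated = ["http://www.ifengweekly.com/" + h for h in hrefs]

for href, a, b in zip(hrefs, joined, concatenated):
    print(href, "->", a, "|", b)
```

urljoin resolves all three shapes to the same absolute URL, while concatenation yields a double slash or a doubled host for the last two. Inside a Scrapy callback, response.urljoin(href) performs the same resolution against the URL of the current response.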

(4) Scrape the detail-page data

Write the code:

sun.py

    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule


    class SunSpider(CrawlSpider):
        name = 'sun'
        # allowed_domains = ['www.xxx.com']
        # The lmid here must be the same as in the allow pattern below
        start_urls = ['http://www.ifengweekly.com/list.php?lmid=5&page=1']

        # Link extractor: extracts links according to the specified rule (allow="regex")
        link = LinkExtractor(allow=r'lmid=5&page=\d+')
        # Detail-page link extractor
        # detail_link = LinkExtractor(allow=r'')

        # Rule parser: parses the extracted links with the specified callback.
        # follow=True makes the crawl reach every pagination page.
        rules = (
            Rule(link, callback='parse_item', follow=True),
        )

        def parse_item(self, response):
            div_list = response.xpath('//*[@id="hot"]/div[@class="column"]')
            for div in div_list:
                title = div.xpath('./h1/a/text()').extract_first()
                time = div.xpath('./p/text()').extract_first()
                number = div.xpath('./p/a/text()').extract_first()
                detail_url = 'http://www.ifengweekly.com/' + div.xpath('./h1/a/@href').extract_first()
                # print(detail_url)
                yield scrapy.Request(url=detail_url, callback=self.parse_detail)

        def parse_detail(self, response):
            # Collect every text node of the article body and join them into one string
            content = response.xpath('//*[@id="detil"]/div[3]/p//text()').extract()
            content = ''.join(content)
            print(content)

(5) Persist the data

Enable the pipeline in settings.py:

    ITEM_PIPELINES = {
        'sunPro.pipelines.SunproPipeline': 300,
    }

pipelines.py

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

    # useful for handling different item types with a single interface
    from itemadapter import ItemAdapter
    import os


    class SunproPipeline:
        def open_spider(self, spider):
            print('Starting crawl...')
            # Make sure the output directory exists before any item arrives
            os.makedirs('./news', exist_ok=True)

        def process_item(self, item, spider):
            title = item['title']
            time = item['time']
            number = item['number']
            content = item['content']
            file_name = './news/' + title + '.txt'
            # One file per article; the with block closes it right after writing
            with open(file_name, 'w', encoding='utf-8') as fp:
                fp.write(title + time + number + '\n' + content)
            print(title)
            # Return the item so that any later pipeline still receives it
            return item

        def close_spider(self, spider):
            print('Crawl finished.')
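The pipeline uses the article title directly as a file name, which can fail when a title contains characters such as / or ?. One possible safeguard is sketched below. The helper `safe_filename` and its `max_length` parameter are hypothetical additions for illustration, not part of the original project.

```python
import re


def safe_filename(title, max_length=100):
    """Replace characters that are invalid in file names on common systems."""
    cleaned = re.sub(r'[\\/:*?"<>|\r\n\t]', "_", title).strip()
    # Fall back to a fixed name when nothing usable is left
    return cleaned[:max_length] or "untitled"


print(safe_filename("A/B: what now?"))
print(safe_filename(""))
```

In process_item the file name would then be built as './news/' + safe_filename(title) + '.txt'.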

sun.py

    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule
    from sunPro.items import SunproItem
    import re


    class SunSpider(CrawlSpider):
        name = 'sun'
        # allowed_domains = ['www.xxx.com']
        # This version uses lmid=39 in both start_urls and the allow pattern
        start_urls = ['http://www.ifengweekly.com/list.php?lmid=39&page=1']

        # Link extractor: extracts links according to the specified rule (allow="regex")
        link = LinkExtractor(allow=r'lmid=39&page=\d+')
        # Detail-page link extractor
        # detail_link = LinkExtractor(allow=r'')

        # Rule parser: parses the extracted links with the specified callback.
        # follow=True makes the crawl reach every pagination page.
        rules = (
            Rule(link, callback='parse_item', follow=True),
        )

        def parse_item(self, response):
            div_list = response.xpath('//*[@id="hot"]/div[@class="column"]')
            for div in div_list:
                title = div.xpath('./h1/a/text()').extract_first()
                time = div.xpath('./p/text()').extract_first()
                number = div.xpath('./p/a/text()').extract_first()
                detail_url = 'http://www.ifengweekly.com/' + div.xpath('./h1/a/@href').extract_first()
                time = time.replace("\r", "")

                item = SunproItem()
                item['title'] = title
                item['time'] = time
                item['number'] = number
                # print(item)
                # print(detail_url)
                # Pass the partially filled item to the detail callback through meta
                yield scrapy.Request(url=detail_url, callback=self.parse_detail,
                                     meta={'item': item})

        def parse_detail(self, response):
            content = response.xpath('//*[@id="detil"]/div[@class="jfont"]/p//text()').extract()
            # Option 1: strip \r, \t and \n from each fragment with re
            # content = [re.sub(r"\r|\t|\n", "", i) for i in content]
            # content = [i for i in content if len(i) > 0]
            content = ''.join(content)
            # Option 2: chained replace, same effect as the re version above
            content = content.replace("\r", "").replace("\t", "").replace("\n", "")

            item = response.meta['item']
            item['content'] = content
            # print(item)
            yield item
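The comments in parse_detail state that the re-based cleanup and the chained replace give the same result. A small standalone check of that claim, using made-up text fragments in place of real page content:

```python
import re

# Made-up fragments standing in for the text nodes returned by extract()
fragments = ["\r\n\tFirst paragraph.", "\r\n", "Second\tparagraph.\n"]

# Approach 1: clean each fragment with re, drop the empty ones, then join
cleaned = [re.sub(r"\r|\t|\n", "", f) for f in fragments]
via_re = "".join(f for f in cleaned if len(f) > 0)

# Approach 2: join first, then chain str.replace
via_replace = (
    "".join(fragments).replace("\r", "").replace("\t", "").replace("\n", "")
)

print(via_re == via_replace)
print(via_re)
```

Both approaches remove the same three characters, so the joined text is identical. Note that neither inserts a separator between fragments, so adjacent paragraphs run together.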