Hadoop 2.8.3 Eclipse plugin

- Windows Server 2012 R2 Standard
- hadoop 2.8.3
- eclipse-jee-mars-2-win32-x86_64

- 从https://github.com/winghc/hadoop2x-eclipse-plugin下载源码到本地;

- 下载ant。

- 修改两个文件:

3.1. \hadoop2x-eclipse-plugin-master\ivy\libraries.properties

将里面的jar包版本改为hadoop对应的版本

3.2. \hadoop2x-eclipse-plugin-master\src\contrib\eclipse-plugin\build.xml,修改jar包名称,我把命令行中的参数直接写在这个文件中了。 - 命令行

cd到目录hadoop2x-eclipse-plugin-master\src\contrib\eclipse-plugin,执行ant jar命令:

- 将

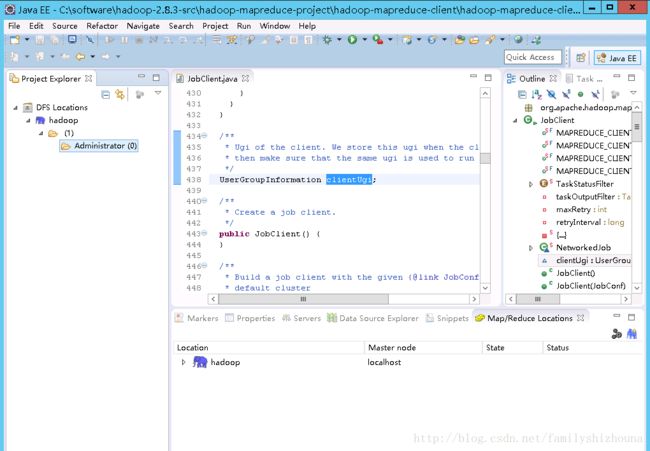

\hadoop2x-eclipse-plugin-master\build\contrib\eclipse-plugin\目录下的hadoop-eclipse-plugin-2.8.3.jar拷贝到\eclipse\plugin目录下; - 启动eclipse;会看到下面的图标:

- 然后点击windows->show view->MapReduce Tools->Map/Reduce Locations。

- 点击这个视图右上角的小象进行配置:

- 启动hadoop,在命令行输入:hadoop fs -mkdir /Administrator

(Adiministrator是我的用户名) - ok
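Step 3.1 is easy to get wrong by hand. Below is a minimal Python sketch (not part of the plugin project; the script name `check_versions.py` and its functions are hypothetical) that reads `libraries.properties` and reports artifacts whose jars exist in a Hadoop library directory such as `share\hadoop\common\lib`, but only with a different version. It assumes jars are named `<artifact>-<version>[-classifier].jar` and matches by file-name prefix, so treat it as a rough check; artifacts with no jar in that directory are skipped.

```python
import re
import sys
from pathlib import Path


def read_versions(properties_path):
    """Return {artifact: version} from `<artifact>.version=<value>` lines.

    Blank lines and '#' comment lines are skipped, as in libraries.properties.
    """
    versions = {}
    text = Path(properties_path).read_text(encoding="utf-8")
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        if key.endswith(".version"):
            versions[key[: -len(".version")]] = value
    return versions


def find_version_mismatches(versions, lib_dir):
    """Return {artifact: [jar stems]} for artifacts that have at least one jar
    in lib_dir starting with '<artifact>-', where none of those jars carries
    '-<version>' at the end of the name or right before a '-classifier'.

    Artifacts with no matching jar at all are skipped: many entries (ivy,
    junit, ...) are build-time only and do not ship with Hadoop.
    """
    stems = sorted(p.stem for p in Path(lib_dir).glob("*.jar"))
    mismatches = {}
    for artifact, version in versions.items():
        candidates = [s for s in stems if s.startswith(artifact + "-")]
        if not candidates:
            continue
        wanted = re.compile("-" + re.escape(version) + r"(?:-|$)")
        if not any(wanted.search(s) for s in candidates):
            mismatches[artifact] = candidates
    return mismatches


if __name__ == "__main__" and len(sys.argv) == 3:
    # python check_versions.py <libraries.properties> <hadoop.home>/share/hadoop/common/lib
    expected = read_versions(sys.argv[1])
    for name, jars in sorted(find_version_mismatches(expected, sys.argv[2]).items()):
        print(f"{name}: libraries.properties has {expected[name]}, lib has {jars}")
```

For example, `python check_versions.py ivy\libraries.properties c:\hadoop-2.8.3\share\hadoop\common\lib` would list each property whose version disagrees with the jars Hadoop actually ships.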

Plugin downloads:

- Eclipse Mars: http://download.csdn.net/download/familyshizhouna/10172408
- Eclipse Oxygen: http://download.csdn.net/download/familyshizhouna/10172755

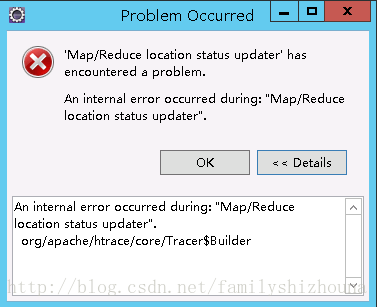

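At first the build failed. Following the error messages, I modified libraries.properties and build.xml, and the build then succeeded. The plugin showed up in Eclipse (Oxygen at that point), but the following error appeared in the Eclipse error log: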

```
!MESSAGE An internal error occurred during: "Map/Reduce location status updater".
!STACK 0
java.lang.NoClassDefFoundError: org/apache/htrace/core/Tracer$Builder
	at org.apache.hadoop.fs.FsTracer.get(FsTracer.java:42)
	at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2806)
	at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:100)
	at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2849)
	at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2831)
	at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:389)
	at org.apache.hadoop.fs.FileSystem.getLocal(FileSystem.java:360)
	at org.apache.hadoop.mapred.LocalJobRunner.<init>(LocalJobRunner.java:739)
	at org.apache.hadoop.mapred.LocalJobRunner.<init>(LocalJobRunner.java:734)
	at org.apache.hadoop.mapred.LocalClientProtocolProvider.create(LocalClientProtocolProvider.java:42)
	at org.apache.hadoop.mapreduce.Cluster.initialize(Cluster.java:121)
	at org.apache.hadoop.mapreduce.Cluster.<init>(Cluster.java:108)
	at org.apache.hadoop.mapreduce.Cluster.<init>(Cluster.java:101)
	at org.apache.hadoop.mapred.JobClient.init(JobClient.java:475)
	at org.apache.hadoop.mapred.JobClient.<init>(JobClient.java:454)
	at org.apache.hadoop.eclipse.server.HadoopServer.getJobClient(HadoopServer.java:488)
	at org.apache.hadoop.eclipse.server.HadoopServer$LocationStatusUpdater.run(HadoopServer.java:103)
	at org.eclipse.core.internal.jobs.Worker.run(Worker.java:56)
Caused by: java.lang.ClassNotFoundException: org.apache.htrace.core.Tracer$Builder cannot be found by org.apache.hadoop.eclipse_0.18.0
	at org.eclipse.osgi.internal.loader.BundleLoader.findClassInternal(BundleLoader.java:484)
	at org.eclipse.osgi.internal.loader.BundleLoader.findClass(BundleLoader.java:395)
	at org.eclipse.osgi.internal.loader.BundleLoader.findClass(BundleLoader.java:387)
	at org.eclipse.osgi.internal.loader.ModuleClassLoader.loadClass(ModuleClassLoader.java:150)
	at java.lang.ClassLoader.loadClass(Unknown Source)
	... 18 more
```

My reading of the error was that the htrace jar could not be found, but the plugin does contain that jar, and I did not know how to fix it.

If you have run into this problem and solved it, please let me know. Thanks!

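It turned out the jars were wrong. I modified the plugin package directly, rebuilt it, and then started Eclipse with `eclipse -clean -consolelog -debug` (`-clean` makes Eclipse discard its cached OSGi data, so the rebuilt plugin is picked up); after that it worked.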
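When a `NoClassDefFoundError` like the one above appears, it helps to find out which jar actually provides the class and whether that jar really ended up inside the plugin. The following is a minimal Python sketch using only the standard library (the script name `find_class.py` and its functions are hypothetical): it turns the class name from the error into a `.class` entry name and searches every jar under a directory, including jars packed inside another jar, such as the plugin's `lib/` folder.

```python
import io
import sys
import zipfile
from pathlib import Path


def class_entry(class_name):
    """Turn 'org.apache.htrace.core.Tracer$Builder' (or the slash form printed
    by NoClassDefFoundError) into the zip entry name of the .class file."""
    return class_name.replace(".", "/") + ".class"


def _search(zf, entry, label, hits):
    """Record `label` if `zf` contains `entry`, then descend into nested jars."""
    names = zf.namelist()
    if entry in names:
        hits.append(label)
    for name in names:
        if name.endswith(".jar"):
            try:
                with zipfile.ZipFile(io.BytesIO(zf.read(name))) as inner:
                    _search(inner, entry, f"{label}!/{name}", hits)
            except zipfile.BadZipFile:
                pass  # a nested .jar entry that is not a valid zip; ignore it


def jars_containing(class_name, directory):
    """List every jar under `directory` (recursively, including jars nested
    inside jars) that contains the given class."""
    entry = class_entry(class_name)
    hits = []
    for jar in sorted(Path(directory).rglob("*.jar")):
        if not jar.is_file():
            continue  # some tools unpack plugins into folders ending in .jar
        try:
            with zipfile.ZipFile(jar) as zf:
                _search(zf, entry, jar.name, hits)
        except zipfile.BadZipFile:
            continue  # skip files that end in .jar but are not zip archives
    return hits


if __name__ == "__main__" and len(sys.argv) == 3:
    # python find_class.py "org.apache.htrace.core.Tracer$Builder" C:\eclipse\plugins
    for hit in jars_containing(sys.argv[1], sys.argv[2]):
        print(hit)
```

Run against `%HADOOP_HOME%\share\hadoop\common\lib` it shows which Hadoop jar provides the class; run against the Eclipse `plugins` directory it shows whether the plugin jar carries it under `lib/`. Quote the class name, since `$` is special in some shells.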

Then I switched Eclipse versions and used Mars, which ran into a different problem:

```
!MESSAGE An internal error occurred during: "Map/Reduce location status updater".
!STACK 0
java.lang.NullPointerException
	at org.apache.hadoop.mapred.JobClient.getAllJobs(JobClient.java:851)
	at org.apache.hadoop.mapred.JobClient.jobsToComplete(JobClient.java:827)
	at org.apache.hadoop.eclipse.server.HadoopServer$LocationStatusUpdater.run(HadoopServer.java:119)
	at org.eclipse.core.internal.jobs.Worker.run(Worker.java:55)
```

This problem can be solved by following the approach in the reference below: `hadoop dfs -mkdir /Administrator`

Reference: http://blog.csdn.net/l1028386804/article/details/52665022
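The fix above is a single HDFS command that creates a directory named after the user account (here `Administrator`). A small Python sketch of the same step, assuming `hadoop` is on `PATH` and that the right name is the one `getpass.getuser()` returns; the script and helper names are hypothetical, and the sketch only runs the command when given `--run`.

```python
import getpass
import shutil
import subprocess
import sys


def mkdir_command(user=None):
    """Build the `hadoop fs -mkdir /<user>` command used in the steps above.

    With no argument the name comes from getpass.getuser(), which checks the
    LOGNAME, USER, LNAME and USERNAME environment variables in that order
    (on Windows usually only USERNAME is set, e.g. "Administrator").
    """
    return ["hadoop", "fs", "-mkdir", "/" + (user or getpass.getuser())]


def create_user_dir(user=None):
    """Run the command; needs a running cluster and `hadoop` on PATH."""
    hadoop = shutil.which("hadoop")  # on Windows this also finds hadoop.cmd via PATHEXT
    if hadoop is None:
        raise FileNotFoundError("'hadoop' was not found on PATH")
    cmd = mkdir_command(user)
    cmd[0] = hadoop
    return subprocess.run(cmd, check=True)


if __name__ == "__main__" and "--run" in sys.argv[1:]:
    create_user_dir()
```

Building the command from the login name keeps the directory name in step with the account Eclipse runs under, instead of hard-coding `Administrator`.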

libraries.properties

```properties
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

#This properties file lists the versions of the various artifacts used by hadoop and components.
#It drives ivy and the generation of a maven POM

# This is the version of hadoop we are generating
hadoop.version=2.8.3
#hadoop-gpl-compression.version=0.1.0

#These are the versions of our dependencies (in alphabetical order)
#apacheant.version=1.9.6
#ant-task.version=2.0.10
hadoop-ant.version=2.8.3
asm.version=3.2
avro.version=1.7.4
#aspectj.version=1.6.5
#aspectj.version=1.6.11
#checkstyle.version=4.2
commons-cli.version=1.2
commons-codec.version=1.4
commons-beanutils-core.version=1.8.0
commons-beanutils.version=1.7.0
commons-collections.version=3.2.2
commons-configuration.version=1.6
commons-compress.version=1.4.1
commons-daemon.version=1.0.13
commons-digester.version=1.8
commons-httpclient.version=3.1
commons-lang.version=2.6
commons-logging.version=1.1.3
#commons-logging-api.version=1.0.4
commons-math.version=2.2
commons-math3.version=3.1.1
#commons-el.version=1.0
#commons-fileupload.version=1.2
commons-io.version=2.4
commons-net.version=3.1
#core.version=3.1.1
#coreplugin.version=1.3.2
hsqldb.version=2.0.0
#htrace.version=4.0.1
htrace-core4.version=4.0.1
ivy.version=2.1.0
jasper.version=5.5.12
jackson.version=1.9.13
#not able to figureout the version of jsp & jsp-api version to get it resolved throught ivy
# but still declared here as we are going to have a local copy from the lib folder
#jsp.version=2.1
jsp-api.version=2.1
#jsp-api-2.1.version=6.1.14
#jsp-2.1.version=6.1.14
jets3t.version=0.9.0
jetty.version=6.1.26
jetty-util.version=6.1.26
jersey-core.version=1.9
jersey-json.version=1.9
jersey-server.version=1.9
junit.version=4.11
#jdeb.version=0.8
#jdiff.version=1.0.9
#json.version=1.0
json-smart.version=1.1.1
#kfs.version=0.1
log4j.version=1.2.17
#lucene-core.version=2.3.1
mockito-all.version=1.8.5
jsch.version=0.1.51
#oro.version=2.0.8
#rats-lib.version=0.5.1
#servlet.version=4.0.6
guice-servlet.version=3.0
servlet-api.version=2.5
slf4j-api.version=1.7.10
slf4j-log4j12.version=1.7.10
#wagon-http.version=1.0-beta-2
xmlenc.version=0.52
#xerces.version=2.9.1
xercesImpl.version=2.9.1
protobuf.version=2.5.0
guava.version=11.0.2
netty.version=3.6.2.Final
paranamer.version=2.3
xz.version=1.0
zookeeper.version=3.4.6
jsr305.version=3.0.0
snappy-java.version=1.0.4.1
hamcrest-core.version=1.3
```

build.xml

```xml
<project default="jar" name="eclipse-plugin">

  <property name="version" value="2.8.3"/>
  <property name="eclipse.home" value="C:/eclipse-jee-mars-2-win32-x86_64/eclipse"/>
  <property name="hadoop.home" value="c:/hadoop-2.8.3"/>

  <import file="../build-contrib.xml"/>

  <path id="eclipse-sdk-jars">
    <fileset dir="${eclipse.home}/plugins/">
      <include name="org.eclipse.ui*.jar"/>
      <include name="org.eclipse.jdt*.jar"/>
      <include name="org.eclipse.core*.jar"/>
      <include name="org.eclipse.equinox*.jar"/>
      <include name="org.eclipse.debug*.jar"/>
      <include name="org.eclipse.osgi*.jar"/>
      <include name="org.eclipse.swt*.jar"/>
      <include name="org.eclipse.jface*.jar"/>
      <include name="org.eclipse.team.cvs.ssh2*.jar"/>
      <include name="com.jcraft.jsch*.jar"/>
    </fileset>
  </path>

  <path id="hadoop-sdk-jars">
    <fileset dir="${hadoop.home}/share/hadoop/mapreduce">
      <include name="hadoop*.jar"/>
    </fileset>
    <fileset dir="${hadoop.home}/share/hadoop/hdfs">
      <include name="hadoop*.jar"/>
    </fileset>
    <fileset dir="${hadoop.home}/share/hadoop/common">
      <include name="hadoop*.jar"/>
    </fileset>
  </path>

  <path id="classpath">
    <pathelement location="${build.classes}"/>
    <path refid="eclipse-sdk-jars"/>
    <path refid="hadoop-sdk-jars"/>
  </path>

  <target name="check-contrib" unless="eclipse.home">
    <property name="skip.contrib" value="yes"/>
    <echo message="eclipse.home unset: skipping eclipse plugin"/>
  </target>

  <target name="compile" depends="init, ivy-retrieve-common" unless="skip.contrib">
    <echo message="contrib: ${name}"/>
    <javac
     encoding="${build.encoding}"
     srcdir="${src.dir}"
     includes="**/*.java"
     destdir="${build.classes}"
     debug="${javac.debug}"
     deprecation="${javac.deprecation}">
      <classpath refid="classpath"/>
    </javac>
  </target>

  <target name="jar" depends="compile" unless="skip.contrib">
    <mkdir dir="${build.dir}/lib"/>
    <copy todir="${build.dir}/lib/" verbose="true">
      <fileset dir="${hadoop.home}/share/hadoop/mapreduce">
        <include name="hadoop*.jar"/>
      </fileset>
    </copy>
    <copy todir="${build.dir}/lib/" verbose="true">
      <fileset dir="${hadoop.home}/share/hadoop/common">
        <include name="hadoop*.jar"/>
      </fileset>
    </copy>
    <copy todir="${build.dir}/lib/" verbose="true">
      <fileset dir="${hadoop.home}/share/hadoop/hdfs">
        <include name="hadoop*.jar"/>
      </fileset>
    </copy>
    <copy todir="${build.dir}/lib/" verbose="true">
      <fileset dir="${hadoop.home}/share/hadoop/yarn">
        <include name="hadoop*.jar"/>
      </fileset>
    </copy>
    <copy todir="${build.dir}/classes" verbose="true">
      <fileset dir="${root}/src/java">
        <include name="*.xml"/>
      </fileset>
    </copy>
    <copy file="${hadoop.home}/share/hadoop/common/lib/protobuf-java-${protobuf.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/log4j-${log4j.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/commons-cli-${commons-cli.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/commons-configuration-${commons-configuration.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/commons-lang-${commons-lang.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/commons-collections-${commons-collections.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/jackson-core-asl-${jackson.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/jackson-mapper-asl-${jackson.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/slf4j-log4j12-${slf4j-log4j12.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/slf4j-api-${slf4j-api.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/guava-${guava.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/hadoop-auth-${hadoop.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/commons-cli-${commons-cli.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/netty-${netty.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/htrace-core4-${htrace-core4.version}-incubating.jar" todir="${build.dir}/lib" verbose="true"/>
    <jar
      jarfile="${build.dir}/hadoop-${name}-${hadoop.version}.jar"
      manifest="${root}/META-INF/MANIFEST.MF">
      <manifest>
        <attribute name="Bundle-ClassPath"
          value="classes/,
 lib/hadoop-hdfs-client-${hadoop.version}.jar,
 lib/hadoop-mapreduce-client-core-${hadoop.version}.jar,
 lib/hadoop-mapreduce-client-common-${hadoop.version}.jar,
 lib/hadoop-mapreduce-client-jobclient-${hadoop.version}.jar,
 lib/hadoop-auth-${hadoop.version}.jar,
 lib/hadoop-common-${hadoop.version}.jar,
 lib/hadoop-hdfs-${hadoop.version}.jar,
 lib/protobuf-java-${protobuf.version}.jar,
 lib/log4j-${log4j.version}.jar,
 lib/commons-cli-${commons-cli.version}.jar,
 lib/commons-configuration-${commons-configuration.version}.jar,
 lib/httpclient-${httpclient.version}.jar,
 lib/commons-lang-${commons-lang.version}.jar,
 lib/commons-collections-${commons-collections.version}.jar,
 lib/jackson-core-asl-${jackson.version}.jar,
 lib/jackson-mapper-asl-${jackson.version}.jar,
 lib/slf4j-log4j12-${slf4j-log4j12.version}.jar,
 lib/slf4j-api-${slf4j-api.version}.jar,
 lib/guava-${guava.version}.jar,
 lib/netty-${netty.version}.jar,
 lib/servlet-api-${servlet-api.version}.jar,
 lib/htrace-core4-${htrace-core4.version}-incubating.jar,
 lib/commons-io-${commons-io.version}.jar"/>
      </manifest>
      <fileset dir="${build.dir}" includes="classes/ lib/"/>
      <fileset dir="${root}" includes="resources/ plugin.xml"/>
    </jar>
  </target>

</project>
```
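Reading the `jar` target, a few `Bundle-ClassPath` entries do not appear to be copied into `lib/`: `commons-io`, `servlet-api`, and `httpclient-${httpclient.version}` (the `httpclient.version` property does not appear in the libraries.properties above, so unless another imported file defines it, Ant leaves the placeholder unexpanded). Entries that are listed but not packaged are worth ruling out when chasing a missing class such as `Tracer$Builder`. The following minimal Python sketch (hypothetical script name `check_manifest.py`, standard library only) opens the built plugin jar, joins the wrapped manifest lines, and prints every `Bundle-ClassPath` entry that has no matching file or directory in the jar.

```python
import sys
import zipfile


def read_manifest(jar_path):
    """Parse the main section of META-INF/MANIFEST.MF into a dict.

    Manifest lines are limited to 72 bytes; a line that starts with a single
    space continues the previous value, so that space is dropped on joining.
    """
    with zipfile.ZipFile(jar_path) as zf:
        text = zf.read("META-INF/MANIFEST.MF").decode("utf-8")
    attrs, last = {}, None
    for line in text.splitlines():
        if not line:
            break  # an empty line ends the main section
        if line.startswith(" ") and last is not None:
            attrs[last] += line[1:]
        else:
            key, _, value = line.partition(":")
            last = key.strip()
            attrs[last] = value[1:] if value.startswith(" ") else value
    return attrs


def missing_bundle_classpath_entries(jar_path):
    """Return Bundle-ClassPath entries that have no matching entry in the jar."""
    value = read_manifest(jar_path).get("Bundle-ClassPath", "")
    # Entries are comma separated; drop surrounding whitespace and ';' parameters.
    entries = [e.split(";")[0].strip() for e in value.split(",")]
    with zipfile.ZipFile(jar_path) as zf:
        names = set(zf.namelist())
    missing = []
    for entry in entries:
        if not entry or entry == ".":
            continue
        if entry.endswith("/"):
            present = any(n.startswith(entry) for n in names)  # directory entry
        else:
            present = entry in names
        if not present:
            missing.append(entry)
    return missing


if __name__ == "__main__" and len(sys.argv) == 2:
    # python check_manifest.py build\contrib\eclipse-plugin\hadoop-eclipse-plugin-2.8.3.jar
    for entry in missing_bundle_classpath_entries(sys.argv[1]):
        print("missing:", entry)
```

Running it on the jar produced in step 4, before copying it into `\eclipse\plugins`, shows at a glance whether `lib/htrace-core4-4.0.1-incubating.jar` and the other listed jars were actually packaged.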