Linux Performance Optimization: Severe Redis Response Latency

Contents

Environment setup

Analyzing the problem

References

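In the previous case, MySQL was slow because an index was missing.

In addition, MyISAM relies mainly on the system cache to speed up disk I/O. If other applications run on the same system, the MyISAM engine can hardly make full use of that cache, because other programs may occupy it or it may even be dropped.

A better approach is to allocate memory inside the application and build a cache that you fully control, or to use a third-party cache such as Memcached or Redis.

Redis is one of the most widely used key-value stores, commonly used as a database, a cache, and a message broker. Redis keeps its data in memory, so persistence has to be configured to avoid losing data when the server fails, and that persistence can cause disk I/O performance problems.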

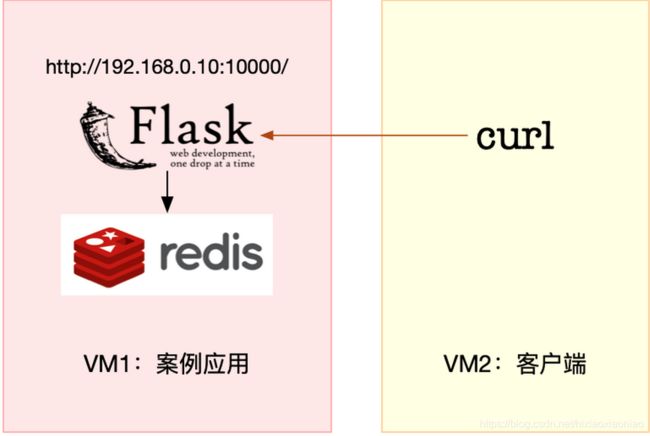

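Environment setup

The case consists of two parts: a Python application and Redis. The Python application is based on Flask. It uses Redis to manage the application's cache and exposes three HTTP endpoints:

- /, returns hello redis

- /init, inserts the specified number of cache entries; if no count is given, it defaults to 5000

  - Cache keys have the form uuid:<uuid>

  - Cache values are one of good, bad, or normal

- /get_cache/<type_name>, where type_name is one of good, bad, or normal (that is, it returns the list of keys that share the same value)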
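The shape of the data that /init creates can be pictured with a short sketch. The helper name `make_cache_entries` is hypothetical and a plain dict stands in for Redis; only the key format and the three possible values come from the description above.

```python
import random
import uuid

VALUES = ("good", "bad", "normal")


def make_cache_entries(count=5000, rng=None):
    """Build `count` entries shaped like the case's cache: uuid:<uuid> -> value."""
    rng = rng or random.Random()
    entries = {}
    for _ in range(count):
        key = "uuid:" + str(uuid.uuid4())  # 5-character prefix + 36-character UUID
        entries[key] = rng.choice(VALUES)
    return entries


entries = make_cache_entries(10, random.Random(0))
for key, value in list(entries.items())[:3]:
    print(key, value)
```

Each key is 41 characters long, which matches the `$41` length prefix visible in the GET requests captured by strace later in this article.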

Install the application and Redis by running the following commands:

cd redis-slow

make run

docker run --name=redis -itd -p 10000:80 feisky/redis-server

Unable to find image 'feisky/redis-server:latest' locally

Trying to pull repository docker.io/feisky/redis-server ...

latest: Pulling from docker.io/feisky/redis-server

cd784148e348: Already exists

48d4c7155ddc: Pull complete

6d908603dbe8: Pull complete

0b981e82e1e2: Pull complete

7074f4a1fd03: Pull complete

447ac2b250dc: Pull complete

b6d44ce71e94: Pull complete

Digest: sha256:a69d39256eb970ab0d87a70d53fa2666d0c32cbf68fb316ef016efd513806146

Status: Downloaded newer image for docker.io/feisky/redis-server:latest

5785cf3f87c786730f364399f656b02dc5f26ca863979a642bf347cb99ff0c33

docker run --name=app --network=container:redis -itd feisky/redis-app

Unable to find image 'feisky/redis-app:latest' locally

Trying to pull repository docker.io/feisky/redis-app ...

latest: Pulling from docker.io/feisky/redis-app

54f7e8ac135a: Already exists

d6341e30912f: Already exists

087a57faf949: Already exists

5d71636fb824: Already exists

0c1db9598990: Already exists

2eeb5ce9b924: Already exists

d3029c597b32: Pull complete

265a9c957eba: Pull complete

3bb7ae9463c5: Pull complete

b3198935e7ab: Pull complete

ca3ab58d03d9: Pull complete

Digest: sha256:ac281cbb37e35eccb880a58b75f244ef19a9a8704a43ae274c12e6576ed6082b

Status: Downloaded newer image for docker.io/feisky/redis-app:latest

c9c0d78952471656bdc2a9c6f1785d707532e65c307c090ce07dcbb27da66f33

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c9c0d7895247 feisky/redis-app "python /app.py" 52 seconds ago Up 52 seconds app

5785cf3f87c7 feisky/redis-server "docker-entrypoint..." About a minute ago Up About a minute 6379/tcp, 0.0.0.0:10000->80/tcp redis

Use curl to run a few tests and initialize the data:

# Test access

curl http://[IP]:10000/

hello redis

# Insert 5000 entries

curl http://[IP]:10000/init/5000

{"elapsed_seconds":11.59295916557312,"keys_initialized":5000}

# Query the cache endpoint; it takes about 4.5 seconds

curl http://[IP]:10000/get_cache

...

c120002","fdb33396-203d-11e9-bd87-0242ac120002","fe99d76a-203d-11e9-bd87-0242ac120002"],"elapsed_seconds":4.490397691726685,"type":"good"}

# To keep requests coming while we analyze, put this curl in a loop

while true; do curl http://[IP]:10000/get_cache; done

Analyzing the problem

Use top, iostat, and pidstat to observe how the system is running.

One redis-server process stands out with very high I/O usage.

top - 12:43:28 up 16 days, 2:26, 3 users, load average: 1.43, 0.54, 0.20

Tasks: 84 total, 3 running, 44 sleeping, 0 stopped, 0 zombie

%Cpu(s): 13.1 us, 11.3 sy, 0.0 ni, 0.0 id, 73.9 wa, 0.0 hi, 1.7 si, 0.0 st

KiB Mem : 1008936 total, 82300 free, 123712 used, 802924 buff/cache

KiB Swap: 0 total, 0 free, 0 used. 721308 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

8001 root 20 0 193264 27784 8884 S 15.7 2.8 0:16.61 python

7957 100 20 0 28352 9904 1984 D 8.0 1.0 0:08.33 redis-server

349 root 20 0 0 0 0 R 1.7 0.0 0:07.37 jbd2/vda1-8

iostat -x -d 1

Linux 4.20.0-1.el7.elrepo.x86_64 (iz2zege42v3jtvyj2oecuzz) 01/25/2019 _x86_64_ (1 CPU)

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 0.30 0.57 0.55 138.44 33.52 308.20 0.74 33.75 20.41 47.50 629.94 70.29

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 351.52 33.33 995.96 137.37 5393.94 10.75 1.04 0.72 0.48 0.72 0.98 101.01

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 381.63 0.00 1087.76 0.00 5873.47 10.80 1.02 0.71 0.00 0.71 0.94 102.04

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 390.72 0.00 1118.56 0.00 6041.24 10.80 1.03 0.71 0.00 0.71 0.92 103.09

pidstat -d 1

12:44:51 PM UID PID kB_rd/s kB_wr/s kB_ccwr/s Command

12:44:52 PM 0 349 0.00 4.00 0.00 jbd2/vda1-8

12:44:52 PM 100 7957 0.00 1456.00 0.00 redis-server

12:44:52 PM UID PID kB_rd/s kB_wr/s kB_ccwr/s Command

12:44:53 PM 100 7957 0.00 1303.09 0.00 redis-server

12:44:53 PM UID PID kB_rd/s kB_wr/s kB_ccwr/s Command

12:44:54 PM 0 349 0.00 4.08 0.00 jbd2/vda1-8

12:44:54 PM 0 419 0.00 12.24 0.00 systemd-journal

12:44:54 PM 100 7957 0.00 1302.04 0.00 redis-server

Tracing with strace shows a large number of read and write calls.

# -tt prints a timestamp for each call, -T prints the time spent in each call

strace -p 7957 -e trace=desc -ff -tt -T

[pid 7957] 12:48:58.580249 epoll_pwait(5, [{EPOLLIN, {u32=8, u64=8}}], 10128, 92, NULL, 8) = 1 <0.000019>

[pid 7957] 12:48:58.580301 read(8, "*2\r\n$3\r\nGET\r\n$41\r\nuuid:6b049e5e-"..., 16384) = 61 <0.000013>

[pid 7957] 12:48:58.580345 read(3, 0x7ffd970a80b7, 1) = -1 EAGAIN (Resource temporarily unavailable) <0.000011>

[pid 7957] 12:48:58.580380 write(8, "$3\r\nbad\r\n", 9) = 9 <0.000126>

[pid 7957] 12:48:58.580539 epoll_pwait(5, [{EPOLLIN, {u32=8, u64=8}}], 10128, 92, NULL, 8) = 1 <0.000017>

[pid 7957] 12:48:58.580587 read(8, "*2\r\n$3\r\nGET\r\n$41\r\nuuid:6860bbec-"..., 16384) = 61 <0.000012>

[pid 7957] 12:48:58.580630 read(3, 0x7ffd970a80b7, 1) = -1 EAGAIN (Resource temporarily unavailable) <0.000012>

[pid 7957] 12:48:58.580665 write(8, "$4\r\ngood\r\n", 10) = 10 <0.000126>

[pid 7957] 12:48:58.580824 epoll_pwait(5, [{EPOLLIN, {u32=8, u64=8}}], 10128, 92, NULL, 8) = 1 <0.000018>

[pid 7957] 12:48:58.580872 read(8, "*3\r\n$4\r\nSADD\r\n$4\r\ngood\r\n$36\r\n686"..., 16384) = 67 <0.000027>

[pid 7957] 12:48:58.580935 read(3, 0x7ffd970a80b7, 1) = -1 EAGAIN (Resource temporarily unavailable) <0.000011>

[pid 7957] 12:48:58.580972 write(7, "*3\r\n$4\r\nSADD\r\n$4\r\ngood\r\n$36\r\n686"..., 67) = 67 <0.000023>

[pid 7957] 12:48:58.581018 fdatasync(7) = 0 <0.001618>

[pid 7957] 12:48:58.582680 write(8, ":1\r\n", 4) = 4 <0.000131>

[pid 7957] 12:48:58.582851 epoll_pwait(5, [{EPOLLIN, {u32=8, u64=8}}], 10128, 90, NULL, 8) = 1 <0.000017>

[pid 7957] 12:48:58.582900 read(8, "*2\r\n$3\r\nGET\r\n$41\r\nuuid:68cdfdd8-"..., 16384) = 61 <0.000013>

[pid 7957] 12:48:58.582944 read(3, 0x7ffd970a80b7, 1) = -1 EAGAIN (Resource temporarily unavailable) <0.000011>

[pid 7957] 12:48:58.582979 write(8, "$4\r\ngood\r\n", 10) = 10 <0.000149>

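Analyze further by combining strace with lsof.

Several kinds of files are open here: pipes, eventpoll, a regular file, and TCP sockets.

Given the disk writes observed earlier, only the regular file on fd 7 can produce disk writes. The file it points to is

/data/appendonly.aof

The corresponding system calls are write and fdatasync.

Tracing again with strace confirms that fdatasync is being called on fd 7, that is, on /data/appendonly.aof.

Judging by the fdatasync calls and their timestamps, the call is made roughly every 3 ms on average, and each call takes about 2 ms.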
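The interval and duration can be checked directly from the trace rather than by eye. The sketch below is a minimal example that parses the fdatasync lines copied from the strace output in this article; the function name `summarize` and the regular expression are illustrative assumptions, not part of any tool.

```python
import re
from datetime import datetime

# fdatasync lines copied from the strace output shown in this article.
SAMPLE = """\
[pid 7957] 12:55:33.267216 fdatasync(7) = 0 <0.001888>
[pid 7957] 12:55:33.269724 fdatasync(7) = 0 <0.002024>
[pid 7957] 12:55:33.273431 fdatasync(7) = 0 <0.001935>
[pid 7957] 12:55:33.276447 fdatasync(7) = 0 <0.002140>
[pid 7957] 12:55:33.280856 fdatasync(7) = 0 <0.002174>
[pid 7957] 12:55:33.284771 fdatasync(7) = 0 <0.002113>
[pid 7957] 12:55:33.287465 fdatasync(7) = 0 <0.002371>
"""

# Captures the -tt timestamp and the -T duration of each successful call.
LINE_RE = re.compile(
    r"(\d{2}:\d{2}:\d{2}\.\d{6}) fdatasync\(\d+\) = 0 <(\d+\.\d+)>"
)


def summarize(text):
    """Return call count, average gap between calls, and average call time."""
    starts, durations = [], []
    for match in LINE_RE.finditer(text):
        starts.append(datetime.strptime(match.group(1), "%H:%M:%S.%f"))
        durations.append(float(match.group(2)))
    gaps = [(b - a).total_seconds() for a, b in zip(starts, starts[1:])]
    return {
        "calls": len(starts),
        "avg_interval_ms": 1000 * sum(gaps) / len(gaps),
        "avg_duration_ms": 1000 * sum(durations) / len(durations),
    }


print(summarize(SAMPLE))
```

Seven lines are a small sample, so the averages are only a rough indication; piping a longer strace capture into the same function would give a steadier figure.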

lsof -p 7957

redis-ser 7957 100 0u CHR 136,1 0t0 4 /1

lsof: no pwd entry for UID 100

redis-ser 7957 100 1u CHR 136,1 0t0 4 /1

lsof: no pwd entry for UID 100

redis-ser 7957 100 2u CHR 136,1 0t0 4 /1

lsof: no pwd entry for UID 100

redis-ser 7957 100 3r FIFO 0,12 0t0 963277 pipe

lsof: no pwd entry for UID 100

redis-ser 7957 100 4w FIFO 0,12 0t0 963277 pipe

lsof: no pwd entry for UID 100

redis-ser 7957 100 5u a_inode 0,13 0 7531 [eventpoll]

lsof: no pwd entry for UID 100

redis-ser 7957 100 6u sock 0,9 0t0 963279 protocol: TCP

lsof: no pwd entry for UID 100

redis-ser 7957 100 7w REG 253,1 11560065 1195079 /data/appendonly.aof

lsof: no pwd entry for UID 100

redis-ser 7957 100 8u sock 0,9 0t0 964360 protocol: TCP

strace -p 7957 -e trace=fdatasync -ff -tt -T

strace: Process 7957 attached with 4 threads

[pid 7957] 12:55:33.267216 fdatasync(7) = 0 <0.001888>

[pid 7957] 12:55:33.269724 fdatasync(7) = 0 <0.002024>

[pid 7957] 12:55:33.273431 fdatasync(7) = 0 <0.001935>

[pid 7957] 12:55:33.276447 fdatasync(7) = 0 <0.002140>

[pid 7957] 12:55:33.280856 fdatasync(7) = 0 <0.002174>

[pid 7957] 12:55:33.284771 fdatasync(7) = 0 <0.002113>

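[pid 7957] 12:55:33.287465 fdatasync(7) = 0 <0.002371>

Based on this analysis, a reasonable guess is that the appendonly and appendfsync options in the Redis persistence configuration are set unreasonably.

Staying in the terminal, run the following command to query both options with the Redis command-line tool: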

docker exec -it redis redis-cli config get 'append*'

1) "appendfsync"

2) "always"

3) "appendonly"

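4) "yes"

The result shows that

appendfsync is set to always

appendonly is set to yes

Redis offers two ways to persist data: snapshots and an append-only file.

- Snapshots: Redis generates a snapshot of the data at a configured interval and saves it to a file on disk. To avoid blocking the main process, Redis forks a child process that saves the snapshot. This method performs well, and both backup and recovery are much faster than with the append-only file. The drawback is that with a large data set, forking the child needs a lot of memory and saving the data takes a long time, so the interval has to be fairly long, for example at least 5 minutes. If a failure occurs, up to 5 minutes of data is lost.

- Append-only file: Redis persists each write in order by appending a record to the end of a file, so its persistence is safer. It also provides the appendfsync option to set the fsync policy and make sure written data reaches the disk.

The fsync policies for the append-only file are always, everysec, and no:

- always: fsync is called for every operation; this is the safest option

- everysec: fsync is called once per second, so even in the worst case only 1 second of data is lost

- no: flushing is left to the operating system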
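The cost difference between the three policies can be illustrated with a toy append-only log. This is a sketch under stated assumptions, not how Redis implements it: the class `AppendLog` and the helper `count_syncs` are hypothetical, `os.fsync` stands in for the fdatasync call seen in the trace, and the everysec branch is simplified to a synchronous check on each write.

```python
import os
import tempfile
import time


class AppendLog:
    """Toy append-only log that mimics the three appendfsync policies."""

    def __init__(self, path, policy="everysec", clock=time.monotonic):
        if policy not in ("always", "everysec", "no"):
            raise ValueError("unknown policy: " + policy)
        self.fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o644)
        self.policy = policy
        self.clock = clock
        self.last_sync = clock()
        self.sync_calls = 0

    def append(self, record):
        os.write(self.fd, record)
        now = self.clock()
        if self.policy == "always":
            self._sync(now)  # one sync per write
        elif self.policy == "everysec" and now - self.last_sync >= 1.0:
            self._sync(now)  # at most one sync per second
        # policy "no": leave flushing to the operating system

    def _sync(self, now):
        os.fsync(self.fd)
        self.sync_calls += 1
        self.last_sync = now

    def close(self):
        os.close(self.fd)


def count_syncs(policy, writes=100):
    """Append `writes` records under `policy` and return the number of syncs."""
    with tempfile.TemporaryDirectory() as tmp:
        log = AppendLog(os.path.join(tmp, "appendonly.aof"), policy)
        for _ in range(writes):
            log.append(b"*3\r\n$4\r\nSADD\r\n$4\r\ngood\r\n$1\r\nx\r\n")
        log.close()
        return log.sync_calls


for policy in ("always", "everysec", "no"):
    print(policy, count_syncs(policy))
```

With always, the number of syncs equals the number of writes, which is the pattern strace showed for fd 7. The `clock` parameter exists only so the once-per-second behavior can be exercised without waiting.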

This identifies the file Redis is writing to and explains where the heavy I/O comes from.

Next, look at what Redis is actually reading and writing.

strace -p 7957 -f -e trace=read,write

strace: Process 7957 attached with 4 threads

[pid 7957] write(8, ":1\r\n", 4) = 4

[pid 7957] read(8, "*4\r\n$4\r\nSCAN\r\n$4\r\n8049\r\n$5\r\nMATC"..., 16384) = 47

[pid 7957] read(3, 0x7ffd970a80b7, 1) = -1 EAGAIN (Resource temporarily unavailable)

[pid 7957] write(8, "*2\r\n$4\r\n1521\r\n*11\r\n$41\r\nuuid:6e3"..., 547) = 547

[pid 7957] read(8, "*2\r\n$3\r\nGET\r\n$41\r\nuuid:6e3c31ea-"..., 16384) = 61

[pid 7957] read(3, 0x7ffd970a80b7, 1) = -1 EAGAIN (Resource temporarily unavailable)

[pid 7957] write(8, "$4\r\ngood\r\n", 10) = 10

[pid 7957] read(8, "*3\r\n$4\r\nSADD\r\n$4\r\ngood\r\n$36\r\n6e3"..., 16384) = 67

[pid 7957] read(3, 0x7ffd970a80b7, 1) = -1 EAGAIN (Resource temporarily unavailable)

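[pid 7957] write(7, "*3\r\n$4\r\nSADD\r\n$4\r\ngood\r\n$36\r\n6e3"..., 67) = 67

fd=3 is a pipe.

fd=8 is a socket, and it is both read from and written to.

After reading GET uuid:... from the socket, Redis responds with good.

After reading SADD good ... from the socket, Redis responds with 1.

For Redis, SADD is a write operation, so Redis also saves it to appendonly.aof, the file used for persistence.

Looking at more strace output shows that whenever a GET returns good, a SADD follows. As a result, what should be a pure query makes Redis perform a large number of disk writes.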
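The strings in the strace output are requests in the Redis wire protocol: an array header (`*N`) followed by length-prefixed bulk strings (`$len`). A minimal parser makes the GET and SADD requests easier to tell apart. This is a sketch that handles only a single complete request; strace truncates the payloads, so the sample requests below use made-up placeholder values, and `WRITE_COMMANDS` is a small illustrative subset rather than Redis's real command table.

```python
def parse_resp_command(data):
    """Parse one complete RESP array of bulk strings into a list of bytes."""
    if not data.startswith(b"*"):
        raise ValueError("expected a RESP array")
    header, _, rest = data.partition(b"\r\n")
    count = int(header[1:])
    parts = []
    for _ in range(count):
        length_line, _, rest = rest.partition(b"\r\n")
        if not length_line.startswith(b"$"):
            raise ValueError("expected a bulk string")
        length = int(length_line[1:])
        parts.append(rest[:length])
        rest = rest[length + 2:]  # skip the payload and its trailing \r\n
    return parts


# Illustrative subset of commands that modify data (assumption, not exhaustive).
WRITE_COMMANDS = {b"SADD", b"SET", b"DEL"}


def is_write(command):
    """Return True if the parsed command would be appended to the AOF."""
    return command[0].upper() in WRITE_COMMANDS


# Placeholder requests shaped like the ones in the trace.
get_cmd = parse_resp_command(b"*2\r\n$3\r\nGET\r\n$10\r\nuuid:dummy\r\n")
sadd_cmd = parse_resp_command(b"*3\r\n$4\r\nSADD\r\n$4\r\ngood\r\n$5\r\ndummy\r\n")
print(get_cmd, is_write(get_cmd))
print(sadd_cmd, is_write(sadd_cmd))
```

Only the second request is a write, which is why the trace shows a write to fd 7 after each SADD but not after a GET.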

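This explains why Redis writes to disk. Before drawing a final conclusion, confirm that the Redis client behind TCP socket fd 8 really is the case application.

Adding the -i option to lsof shows the TCP connection behind each socket. Because Redis and the Python application both run in containers, we have to enter the container's network namespace to see the complete TCP connections.

# The commands below use the nsenter tool to enter a container's namespace; install it with

docker run --rm -v /usr/local/bin:/target jpetazzo/nsenter

Then run the following commands:

# The two containers share one network namespace, so entering the app container's network namespace is enough

PID=$(docker inspect --format {{.State.Pid}} app)

# -i shows network socket information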

nsenter --target $PID --net -- lsof -i

lsof: no pwd entry for UID 100

lsof: no pwd entry for UID 100

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

lsof: no pwd entry for UID 100

redis-ser 7957 100 6u IPv4 963279 0t0 TCP localhost:6379 (LISTEN)

lsof: no pwd entry for UID 100

redis-ser 7957 100 8u IPv4 964360 0t0 TCP localhost:6379->localhost:49088 (ESTABLISHED)

python 8001 root 3u IPv4 964217 0t0 TCP *:http (LISTEN)

python 8001 root 4u IPv4 980043 0t0 TCP [my_host]:http->[IP]:34944 (ESTABLISHED)

python 8001 root 5u IPv4 964359 0t0 TCP localhost:49088->localhost:6379 (ESTABLISHED)

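This time we can see that fd 8 of redis-server corresponds to the TCP connection localhost:6379 -> localhost:49088.

localhost:6379 is redis-server's own listening port, so localhost:49088 is the Redis client.

The last line shows that localhost:49088 belongs to the running Python application.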
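Matching the two ends of a connection by eye works for a handful of sockets; with more connections it can be scripted. The sketch below parses the ESTABLISHED lines from the lsof -i output in this article and looks up the process on the other end. The function name `find_peer` and the regular expression are illustrative assumptions and only cover the column layout shown here.

```python
import re

# ESTABLISHED lines copied from the lsof -i output shown in this article.
LSOF_LINES = """\
redis-ser 7957 100 8u IPv4 964360 0t0 TCP localhost:6379->localhost:49088 (ESTABLISHED)
python 8001 root 4u IPv4 980043 0t0 TCP [my_host]:http->[IP]:34944 (ESTABLISHED)
python 8001 root 5u IPv4 964359 0t0 TCP localhost:49088->localhost:6379 (ESTABLISHED)
"""

# command, pid, user, fd+mode, ..., "TCP local->remote (ESTABLISHED)"
CONN_RE = re.compile(
    r"^(\S+)\s+(\d+)\s+\S+\s+(\d+)\w\s+.*TCP (\S+)->(\S+) \(ESTABLISHED\)$"
)


def find_peer(lines, pid, fd):
    """Return (command, pid, fd) of the process on the other end, or None."""
    conns = []
    for line in lines.splitlines():
        m = CONN_RE.match(line)
        if m:
            conns.append(
                (m.group(1), int(m.group(2)), int(m.group(3)), m.group(4), m.group(5))
            )
    for command, c_pid, c_fd, local, remote in conns:
        if c_pid == pid and c_fd == fd:
            # The peer sees the same connection with the endpoints swapped.
            for other in conns:
                if other[3] == remote and other[4] == local:
                    return other[0], other[1], other[2]
    return None


print(find_peer(LSOF_LINES, 7957, 8))
```

The lookup only succeeds when both ends are visible in the same network namespace, which is why the lsof command was run through nsenter.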

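We have now found the underlying causes of the slow Redis responses. There are two problems:

1. Redis is configured with appendfsync set to always, so every Redis write triggers an fdatasync system call. Changing it to everysec (sync once per second) is enough.

2. The Python application calls the Redis SADD command inside a query endpoint, which is probably a misuse of the cache.

For the first problem, the configuration issue, run the following command to change the setting: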

docker exec -it redis redis-cli config set appendfsync everysec

OK

Check the system again: I/O wait has dropped considerably.

top - 13:37:24 up 16 days, 3:20, 6 users, load average: 1.04, 1.37, 1.44

Tasks: 85 total, 3 running, 48 sleeping, 0 stopped, 0 zombie

%Cpu(s): 61.1 us, 27.8 sy, 0.0 ni, 5.6 id, 0.0 wa, 0.0 hi, 5.6 si, 0.0 st

KiB Mem : 1008936 total, 109480 free, 123364 used, 776092 buff/cache

KiB Swap: 0 total, 0 free, 0 used. 720904 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

8001 root 20 0 193264 27852 8884 S 65.5 2.8 10:06.27 python

iostat -d -x 1

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 14.14 0.00 3.03 0.00 185.86 122.67 0.21 1.33 0.00 1.33 67.67 20.51

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 7.84 0.00 2.94 0.00 137.25 93.33 0.20 1.00 0.00 1.00 68.00 20.00

Next, look at the Python code in app.py.

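@app.route('/get_cache', defaults={'type_name': 'good'})
@app.route('/get_cache/<type_name>')
@timing
def get_cache(type_name):
    '''handler for /get_cache'''
    # Walk every cached key and fetch its value with one GET per key
    for key in redis_client.scan_iter("uuid:*"):
        value = redis_client.get(key)
        if value == type_name:
            # SADD is a write, so every match is also appended to the AOF
            redis_client.sadd(type_name, key[5:])
    # Read the temporary set back from Redis, then delete it
    data = list(redis_client.smembers(type_name))
    redis_client.delete(type_name)
    return jsonify({"type": type_name, 'count': len(data), 'data': data})

As the code shows, the Python application treats Redis as scratch space for the data it finds during the query.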

That data can simply be kept in memory; there is no need to store it in Redis through extra network calls.
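An in-memory version of the same lookup might look like the sketch below. It is an assumption about what such a fix could look like, not the code behind the case's new endpoint: `collect_keys` is a hypothetical helper, and `FakeRedis` is a dict-backed stand-in that exposes only `scan_iter` and `get`, so the example runs without a Redis server.

```python
class FakeRedis:
    """Dict-backed stand-in exposing only the two calls the sketch needs."""

    def __init__(self, data):
        self.data = dict(data)

    def scan_iter(self, match="*"):
        # Only supports the "prefix*" patterns used in this example.
        prefix = match.rstrip("*")
        return (key for key in self.data if key.startswith(prefix))

    def get(self, key):
        return self.data.get(key)


def collect_keys(client, type_name):
    """Return the ids whose cached value equals type_name, using local memory only."""
    matched = []
    for key in client.scan_iter("uuid:*"):
        if client.get(key) == type_name:
            matched.append(key[5:])  # strip the "uuid:" prefix, as the original does
    return matched


client = FakeRedis({"uuid:a1": "good", "uuid:b2": "bad", "uuid:c3": "good"})
print(collect_keys(client, "good"))
```

The loop issues only reads, so nothing is appended to the AOF. With a real redis-py client, values are returned as bytes unless `decode_responses` is enabled, so the comparison would need adjusting accordingly.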

Query again through the new endpoint:

curl http://[IP]:10000/get_cache_data

"688563de-205b-11e9-b526-0242ac120002","6810117e-205b-11e9-b526-0242ac120002",

"6e8c056c-205b-11e9-b526-0242ac120002","6814491a-205b-11e9-b526-0242ac120002"],

"elapsed_seconds":0.17398428916931152,"type":"good"}

With the second problem fixed, performance improves further: the request now completes in 0.17 seconds.

Finally, clean up the case application:

make clean

docker rm -f app redis

app

redis

This article analyzed a Redis cache case.

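We first used top and iostat to examine the system's CPU, memory, and disk usage, then continued the analysis with pidstat.

By design, querying a Redis cache should not cause a large number of disk writes.

Following that lead, we used pidstat, strace, lsof, nsenter, and other tools to find two underlying problems:

one is an unreasonable Redis configuration,

the other is the Python application's misuse of Redis.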

References

Redis Persistence