dpvs source code analysis: communication between master and slave

While reading the dpvs source code, I noticed that many modules call msg_type_mc_register or msg_type_register to register a dpvs_msg_type structure. The structure is defined as follows:

/* unicast only needs UNICAST_MSG_CB,

* while multicast need both UNICAST_MSG_CB and MULTICAST_MSG_CB.

* As for mulitcast msg, UNICAST_MSG_CB return a dpvs_msg to Master with the SAME

* seq number as the msg recieved. */

struct dpvs_msg_type {

msgid_t type;

lcoreid_t cid; /* on which lcore the callback func registers */

DPVS_MSG_MODE mode; /* distinguish unicast from multicast for the same msg type */

UNICAST_MSG_CB unicast_msg_cb; /* call this func if msg is unicast, i.e. 1:1 msg */

MULTICAST_MSG_CB multicast_msg_cb; /* call this func if msg is multicast, i.e. 1:N msg */

rte_atomic32_t refcnt;

struct list_head list;

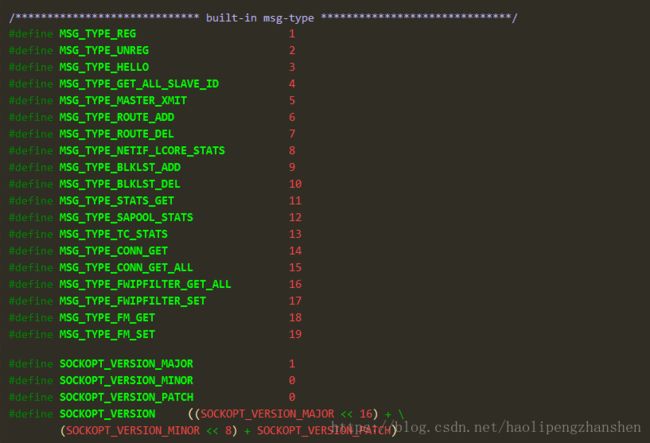

};type:消息类型

在ctrl.h文件中定义

cid:回调函数注册在哪个cpu逻辑核心上

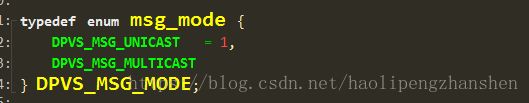

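mode: the message mode, either unicast or multicast.

unicast_msg_cb: the callback for unicast messages.

multicast_msg_cb: the callback for multicast messages.

The prototypes of these callbacks are shown below: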

/* All msg callbacks are called on the lcore which it registers */

typedef int (*UNICAST_MSG_CB)(struct dpvs_msg *);

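typedef int (*MULTICAST_MSG_CB)(struct dpvs_multicast_queue *);

refcnt: the reference count.

list: the list node that links this entry into its hash bucket.

This post addresses the following questions:

1. How are unicast and multicast messages registered (initialized) and used?

2. What is the difference between registering a unicast message and a multicast message?

3. When should a unicast message be registered, and when a multicast message?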

I. Registering and using unicast and multicast messages

Take the following function from netif.c as an example:

static inline int lcore_stats_msg_init(void)

{

int ii, err;

struct dpvs_msg_type lcore_stats_msg_type = {

.type = MSG_TYPE_NETIF_LCORE_STATS,

.mode = DPVS_MSG_UNICAST,

.unicast_msg_cb = lcore_stats_msg_cb,

.multicast_msg_cb = NULL,

};

for (ii = 0; ii < DPVS_MAX_LCORE; ii++) {

if ((ii == g_master_lcore_id) || (g_slave_lcore_mask & (1L << ii))) {

lcore_stats_msg_type.cid = ii;

err = msg_type_register(&lcore_stats_msg_type);

if (EDPVS_OK != err) {

RTE_LOG(WARNING, NETIF, "[%s] fail to register NETIF_LCORE_STATS msg-type "

"on lcore%d: %s\n", __func__, ii, dpvs_strerror(err));

return err;

}

}

}

return EDPVS_OK;

}

Usage: define a dpvs_msg_type structure, fill in its fields, and call msg_type_register once for each lcore that should handle the message. Simple, isn't it?

II. Source code analysis of the unicast and multicast registration functions

Four functions are involved:

/* register|unregister msg-type on lcore 'msg_type->cid' */

int msg_type_register(const struct dpvs_msg_type *msg_type);

int msg_type_unregister(const struct dpvs_msg_type *msg_type);

/* register|unregister multicast msg-type on each configured lcore */

int msg_type_mc_register(const struct dpvs_msg_type *msg_type);

int msg_type_mc_unregister(const struct dpvs_msg_type *msg_type);

2.1 Unicast registration function

int msg_type_register(const struct dpvs_msg_type *msg_type)

{

int hashkey;

struct dpvs_msg_type *mt;

// validate arguments

if (unlikely(NULL == msg_type || msg_type->cid >= DPVS_MAX_LCORE)) {

RTE_LOG(WARNING, MSGMGR, "%s: invalid args !\n", __func__);

return EDPVS_INVAL;

}

// compute the hash key from the type value

hashkey = mt_hashkey(msg_type->type);

// look up an existing dpvs_msg_type entry by msg type and lcore id

mt = msg_type_get(msg_type->type, /*msg_type->mode, */msg_type->cid);

if (NULL != mt) {

RTE_LOG(WARNING, MSGMGR, "%s: msg type %d mode %s already registered\n",

__func__, mt->type, mt->mode == DPVS_MSG_UNICAST ? "UNICAST" : "MULTICAST");

msg_type_put(mt);

rte_exit(EXIT_FAILURE, "inter-lcore msg type %d already exist!\n", mt->type);

return EDPVS_EXIST;

}

// allocate memory for the new entry

mt = rte_zmalloc("msg_type", sizeof(struct dpvs_msg_type), RTE_CACHE_LINE_SIZE);

if (unlikely(NULL == mt)) {

RTE_LOG(ERR, MSGMGR, "%s: no memory !\n", __func__);

return EDPVS_NOMEM;

}

// copy the caller's values and reset the refcnt reference count to 0

memcpy(mt, msg_type, sizeof(struct dpvs_msg_type));

rte_atomic32_set(&mt->refcnt, 0);

// take the write lock and append mt to the tail of its bucket in the two-dimensional mt_array (the hash table)

rte_rwlock_write_lock(&mt_lock[msg_type->cid][hashkey]);

list_add_tail(&mt->list, &mt_array[msg_type->cid][hashkey]);

rte_rwlock_write_unlock(&mt_lock[msg_type->cid][hashkey]);

return EDPVS_OK;

}

mt_lock and mt_array deserve a closer look:

/* per-lcore msg-type array */

typedef struct list_head msg_type_array_t[DPVS_MSG_LEN];

typedef rte_rwlock_t msg_type_lock_t[DPVS_MSG_LEN];

msg_type_array_t mt_array[DPVS_MAX_LCORE];

msg_type_lock_t mt_lock[DPVS_MAX_LCORE];

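msg_type_array_t is an array type holding DPVS_MSG_LEN struct list_head elements; it is not a pointer type.

msg_type_lock_t is likewise an array type holding DPVS_MSG_LEN rte_rwlock_t elements.

So mt_array and mt_lock are two-dimensional arrays:

The first dimension is the lcore id.

The second dimension is the hashkey computed from the message type.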
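To make the "typedef of an array type, then an array of that type" layout concrete, here is a minimal self-contained sketch. The names prefixed with demo_ and the sizes are assumptions for illustration only; in particular, demo_mt_hashkey uses a simple modulo, which is an assumed stand-in because the body of mt_hashkey is not shown in this post.

```c
#include <stddef.h>

/* Hypothetical sizes for illustration; the real values come from the DPVS headers. */
#define DEMO_MAX_LCORE 4
#define DEMO_MSG_LEN   8

/* Minimal stand-in for the kernel-style list node. */
struct demo_list_head {
    struct demo_list_head *next, *prev;
};

/* An array type: one hash table (DEMO_MSG_LEN buckets) per lcore. */
typedef struct demo_list_head demo_msg_type_array_t[DEMO_MSG_LEN];

/* An array of arrays, indexed as demo_mt_array[lcore][bucket]. */
static demo_msg_type_array_t demo_mt_array[DEMO_MAX_LCORE];

/* Assumed hash: message type modulo the bucket count. */
static int demo_mt_hashkey(unsigned int type)
{
    return (int)(type % DEMO_MSG_LEN);
}

/* Initialize every bucket as an empty circular list. */
static void demo_mt_array_init(void)
{
    for (int cid = 0; cid < DEMO_MAX_LCORE; cid++) {
        for (int key = 0; key < DEMO_MSG_LEN; key++) {
            demo_mt_array[cid][key].next = &demo_mt_array[cid][key];
            demo_mt_array[cid][key].prev = &demo_mt_array[cid][key];
        }
    }
}

/* Return the bucket that holds entries of a given type on a given lcore. */
static struct demo_list_head *demo_bucket(int cid, unsigned int type)
{
    return &demo_mt_array[cid][demo_mt_hashkey(type)];
}
```

Because each lcore owns its own row of buckets, a lookup only ever touches the lists (and, in dpvs, the locks) of the lcore it is addressed to.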

2.2 Multicast registration function

int msg_type_mc_register(const struct dpvs_msg_type *msg_type)

{

lcoreid_t cid;

struct dpvs_msg_type mt;

int ret = EDPVS_OK;

if (unlikely(NULL == msg_type))

return EDPVS_INVAL;

memset(&mt, 0, sizeof(mt));

mt.type = msg_type->type;

mt.mode = DPVS_MSG_MULTICAST;

// iterate over all possible lcore ids

for (cid = 0; cid < DPVS_MAX_LCORE; cid++) {

// on the master lcore, register the multicast_msg_cb multicast callback

if (cid == master_lcore) {

mt.cid = cid;

mt.unicast_msg_cb = NULL;

if (msg_type->multicast_msg_cb)

mt.multicast_msg_cb = msg_type->multicast_msg_cb;

else /* if no multicast callback given, then a default one is used, which do nothing now */

mt.multicast_msg_cb = default_mc_msg_cb; // fall back to the default multicast callback

}

else if (slave_lcore_mask & (1L << cid)) {

// on slave lcores, register the unicast_msg_cb unicast callback

mt.cid = cid;

mt.unicast_msg_cb = msg_type->unicast_msg_cb;

mt.multicast_msg_cb = NULL;

} else

continue;

// note: this path also goes through msg_type_register

ret = msg_type_register(&mt);

if (unlikely(ret < 0)) {

RTE_LOG(ERR, MSGMGR, "%s: fail to register multicast msg on lcore %d\n",

__func__, cid);

return ret;

}

}

return EDPVS_OK;

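}

In essence, the multicast registration function iterates over all logical CPU cores (lcores): the master lcore registers the multicast callback,

while each slave lcore registers the unicast callback. Both paths end up calling msg_type_register.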
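The fan-out described above can be modeled in a few lines. This is a simplified sketch, not the dpvs implementation: it keeps one registration slot per lcore for a single message type, and every demo_ name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define DEMO_MAX_LCORE 8

typedef int (*demo_unicast_cb)(void *msg);
typedef int (*demo_multicast_cb)(void *queue);

/* One registration slot per lcore (a single message type, for brevity). */
struct demo_slot {
    int               registered;
    demo_unicast_cb   unicast_cb;
    demo_multicast_cb multicast_cb;
};

static struct demo_slot demo_slots[DEMO_MAX_LCORE];

/* Used on the master when the caller supplies no multicast callback. */
static int demo_default_mc_cb(void *queue) { (void)queue; return 0; }

/*
 * Register on every configured lcore: the master gets the multicast
 * callback, each slave in slave_mask gets the unicast callback.
 * Returns the number of lcores registered.
 */
static int demo_mc_register(unsigned master, uint64_t slave_mask,
                            demo_unicast_cb ucb, demo_multicast_cb mcb)
{
    int n = 0;

    for (unsigned cid = 0; cid < DEMO_MAX_LCORE; cid++) {
        struct demo_slot *s = &demo_slots[cid];

        if (cid == master) {
            s->unicast_cb = NULL;
            s->multicast_cb = mcb ? mcb : demo_default_mc_cb;
        } else if (slave_mask & (UINT64_C(1) << cid)) {
            s->unicast_cb = ucb;
            s->multicast_cb = NULL;
        } else {
            continue; /* lcore not in use */
        }
        s->registered = 1;
        n++;
    }
    return n;
}

/* Example slave-side handler. */
static int demo_slave_handler(void *msg) { (void)msg; return 0; }
```

With master 0 and slave mask 0x6, a call such as demo_mc_register(0, 0x6, demo_slave_handler, NULL) registers three lcores: the default multicast callback on lcore 0 and demo_slave_handler on lcores 1 and 2.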

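III. When unicast_msg_cb and multicast_msg_cb are called

3.1 Call relationships

I use the Source Insight editor. A global search for unicast_msg_cb shows that

unicast_msg_cb is called in both msg_master_process and msg_slave_process,

while multicast_msg_cb is called in msg_master_process.

So where are msg_master_process and msg_slave_process called from?

1) Callers of msg_master_process

msg_send and multicast_msg_send call msg_master_process repeatedly while a synchronous send waits for completion or timeout.

Apart from that, it is mainly called from the control-plane thread in main.

2) Callers of msg_slave_process

slave_lcore_loop_func -> msg_slave_process

slave_lcore_loop_func is the callback assigned to the func member of the registered ctrl_lcore_job: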

/* register netif-lcore-loop-job for Slaves */

snprintf(ctrl_lcore_job.name, sizeof(ctrl_lcore_job.name) - 1, "%s", "slave_ctrl_plane");

ctrl_lcore_job.func = slave_lcore_loop_func;

ctrl_lcore_job.data = NULL;

ctrl_lcore_job.type = NETIF_LCORE_JOB_LOOP;

if ((ret = netif_lcore_loop_job_register(&ctrl_lcore_job)) < 0) {

RTE_LOG(ERR, MSGMGR, "%s: fail to register ctrl func on slave lcores\n", __func__);

return ret;

}

How the func callback gets invoked was covered in an earlier post; see the earlier posts if you are interested.

3.2 Unicast and multicast handling in msg_master_process

The following excerpt from msg_master_process shows how the master lcore handles DPVS_MSG_MULTICAST messages:

{

/* multicast msg */

mcq = mc_queue_get(msg->type, msg->seq);

if (!mcq) {

RTE_LOG(WARNING, MSGMGR, "%s: miss multicast msg <type:%d, seq:%d> from"

" lcore %d\n", __func__, msg->type, msg->seq, msg->cid);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

msg_destroy(&msg);

msg_type_put(msg_type);

continue;

}

rte_atomic16_dec(&mcq->org_msg->refcnt); /* for each reply, decrease refcnt of org_msg */

if (!msg_type->multicast_msg_cb) {

RTE_LOG(DEBUG, MSGMGR, "%s: no callback registered for multicast msg %d\n",

__func__, msg->type);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

msg_destroy(&msg);

msg_type_put(msg_type);

continue;

}

if (mcq->mask & (1L << msg->cid)) { /* you are the msg i'm waiting */

list_add_tail(&msg->mq_node, &mcq->mq);

add_msg_flags(msg, DPVS_MSG_F_STATE_QUEUE);/* set QUEUE flag for slave's reply msg */

mcq->mask &= ~(1L << msg->cid);

if (test_msg_flags(msg, DPVS_MSG_F_CALLBACK_FAIL)) /* callback on slave failed */

add_msg_flags(mcq->org_msg, DPVS_MSG_F_CALLBACK_FAIL);

if (unlikely(0 == mcq->mask)) { /* okay, all slave reply msg arrived */

if (msg_type->multicast_msg_cb(mcq) < 0) {

add_msg_flags(mcq->org_msg, DPVS_MSG_F_CALLBACK_FAIL);/* callback on master failed */

RTE_LOG(WARNING, MSGMGR, "%s: mc msg_type %d callback failed on master\n",

__func__, msg->type);

}

add_msg_flags(mcq->org_msg, DPVS_MSG_F_STATE_FIN);

msg_destroy(&mcq->org_msg);

msg_type_put(msg_type);

continue;

}

msg_type_put(msg_type);

continue;

}

/* free repeated msg, but not free msg queue, so change msg mode to DPVS_MSG_UNICAST */

msg->mode = DPVS_MSG_UNICAST;

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

msg_destroy(&msg); /* sorry, you are late */

rte_atomic16_inc(&mcq->org_msg->refcnt); /* do not count refcnt for repeated msg */

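}

First, mc_queue_get looks up the entry in the mc_wait_list list that matches the message's type and seq. If the sending slave's bit is still set in mcq->mask, the reply is appended to mcq->mq and the bit is cleared. Once the mask reaches 0, all slave replies have arrived and multicast_msg_cb is called with the whole queue. A reply whose bit is already cleared is treated as a late duplicate and dropped.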
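The mask bookkeeping in this excerpt is compact enough to isolate. The sketch below models only that part, assuming lcore ids below 64; the enum and function names are hypothetical and do not exist in dpvs.

```c
#include <stdint.h>

enum demo_reply_result {
    DEMO_REPLY_LATE = -1,    /* duplicate or unexpected reply, dropped */
    DEMO_REPLY_WAITING = 0,  /* queued, still waiting for other slaves */
    DEMO_REPLY_COMPLETE = 1  /* last outstanding reply, run the callback */
};

/*
 * Account for one reply from lcore `cid`.
 * `mask` holds one bit per slave that has not answered yet.
 */
static enum demo_reply_result demo_on_reply(uint64_t *mask, unsigned cid)
{
    uint64_t bit = UINT64_C(1) << cid;

    if (!(*mask & bit))
        return DEMO_REPLY_LATE;

    *mask &= ~bit;
    return *mask == 0 ? DEMO_REPLY_COMPLETE : DEMO_REPLY_WAITING;
}
```

Starting from a mask of 0x6 (slaves 1 and 2), a reply from lcore 1 leaves the queue waiting, a second reply from lcore 1 is rejected as late, and the reply from lcore 2 completes the set.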

3.3 Unicast handling in msg_slave_process

/* only unicast msg can be recieved on slave lcore */

int msg_slave_process(void)

{

struct dpvs_msg *msg, *xmsg;

struct dpvs_msg_type *msg_type;

lcoreid_t cid;

int ret = EDPVS_OK;

// get the id of the current lcore

cid = rte_lcore_id();

if (unlikely(cid == master_lcore)) {

RTE_LOG(ERR, MSGMGR, "%s is called on master lcore!\n", __func__);

return EDPVS_NONEALCORE;

}

/* dequeue msg from ring on the lcore until drain */

while (0 == rte_ring_dequeue(msg_ring[cid], (void **)&msg)) {

add_msg_flags(msg, DPVS_MSG_F_STATE_RECV);

if (unlikely(DPVS_MSG_MULTICAST == msg->mode)) {

RTE_LOG(ERR, MSGMGR, "%s: multicast msg recieved on slave lcore!\n", __func__);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

msg_destroy(&msg);

continue;

}

msg_type = msg_type_get(msg->type, cid);

if (!msg_type) {

RTE_LOG(DEBUG, MSGMGR, "%s: unregistered msg type %d on lcore %d\n",

__func__, msg->type, cid);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

msg_destroy(&msg);

continue;

}

// call unicast_msg_cb; the callback may write into msg (for example its reply)

if (msg_type->unicast_msg_cb) {

if (msg_type->unicast_msg_cb(msg) < 0) {

add_msg_flags(msg, DPVS_MSG_F_CALLBACK_FAIL);

RTE_LOG(WARNING, MSGMGR, "%s: msg_type %d callback failed on lcore %d\n",

__func__, msg->type, cid);

}

}

/* send response msg to Master for multicast msg */

if (DPVS_MSG_MULTICAST == msg_type->mode) {

// send the response to the master lcore

xmsg = msg_make(msg->type, msg->seq, DPVS_MSG_UNICAST, cid, msg->reply.len,

msg->reply.data);

if (unlikely(!xmsg)) {

ret = EDPVS_NOMEM;

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

goto cont;

}

add_msg_flags(xmsg, DPVS_MSG_F_CALLBACK_FAIL & get_msg_flags(msg));

// send the message to the master lcore asynchronously

if (msg_send(xmsg, master_lcore, DPVS_MSG_F_ASYNC, NULL)) {

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

msg_destroy(&xmsg);

goto cont;

}

msg_destroy(&xmsg);

}

// set the finished flag

add_msg_flags(msg, DPVS_MSG_F_STATE_FIN);

cont:

msg_destroy(&msg);

msg_type_put(msg_type);

}

return ret;

}

3.4 msg_send, the unicast send function

/* "msg" must be produced by "msg_make" */

int msg_send(struct dpvs_msg *msg, lcoreid_t cid, uint32_t flags, struct dpvs_msg_reply **reply)

{

struct dpvs_msg_type *mt;

int res;

uint32_t tflags;

uint64_t start, delay;

add_msg_flags(msg, flags);

if (unlikely(!msg || !((cid == master_lcore) || (slave_lcore_mask & (1L << cid))))) {

RTE_LOG(WARNING, MSGMGR, "%s: invalid args\n", __func__);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

return EDPVS_INVAL;

}

// look up the dpvs_msg_type entry in the two-dimensional mt_array

mt = msg_type_get(msg->type, cid);

if (unlikely(!mt)) {

RTE_LOG(WARNING, MSGMGR, "%s: msg type %d not registered\n", __func__, msg->type);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

return EDPVS_NOTEXIST;

}

// decrement the reference count by 1

msg_type_put(mt);

/* two lcores will be using the msg now, increase its refcnt */

rte_atomic16_inc(&msg->refcnt);

// enqueue onto the target lcore's ring, msg_ring[cid]

res = rte_ring_enqueue(msg_ring[cid], msg);

if (unlikely(-EDQUOT == res)) {

RTE_LOG(WARNING, MSGMGR, "%s: msg ring of lcore %d quota exceeded\n",

__func__, cid);

} else if (unlikely(-ENOBUFS == res)) {

RTE_LOG(ERR, MSGMGR, "%s: msg ring of lcore %d is full\n", __func__, res);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

rte_atomic16_dec(&msg->refcnt); /* not enqueued, free manually */

return EDPVS_DPDKAPIFAIL;

} else if (res) {

RTE_LOG(ERR, MSGMGR, "%s: unkown error %d for rte_ring_enqueue\n", __func__, res);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

rte_atomic16_dec(&msg->refcnt); /* not enqueued, free manually */

return EDPVS_DPDKAPIFAIL;

}

// an asynchronous message returns as soon as it is enqueued

if (flags & DPVS_MSG_F_ASYNC)

return EDPVS_OK;

/* blockable msg, wait here until done or timeout */

add_msg_flags(msg, DPVS_MSG_F_STATE_SEND); // mark the message as sent

start = rte_get_timer_cycles(); // current timer cycle count

// loop until msg has DPVS_MSG_F_STATE_FIN or DPVS_MSG_F_STATE_DROP set, or the wait times out

while(!(test_msg_flags(msg, (DPVS_MSG_F_STATE_FIN | DPVS_MSG_F_STATE_DROP)))) {

/* to avoid dead lock when one send a blockable msg to itself */

if (rte_lcore_id() == master_lcore)

msg_master_process();

else

msg_slave_process();

delay = (uint64_t)msg_timeout * rte_get_timer_hz() / 1E6;

// past the delay: timed out, so set DPVS_MSG_F_TIMEOUT on msg

if (start + delay < rte_get_timer_cycles()) {

RTE_LOG(WARNING, MSGMGR, "%s: uc_msg(type:%d, cid:%d->%d, flags=%d) timeout"

"(%d us), drop...\n", __func__,

msg->type, msg->cid, cid, get_msg_flags(msg), msg_timeout);

add_msg_flags(msg, DPVS_MSG_F_TIMEOUT);

return EDPVS_MSG_DROP;

}

}

if (reply)

*reply = &msg->reply;

tflags = get_msg_flags(msg);

if (tflags & DPVS_MSG_F_CALLBACK_FAIL)

return EDPVS_MSG_FAIL;

else if (tflags & DPVS_MSG_F_STATE_FIN)

return EDPVS_OK;

else

return EDPVS_MSG_DROP;

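}

1) Look up the dpvs_msg_type entry in the two-dimensional mt_array.

2) Enqueue the message onto the target lcore's ring, msg_ring[cid].

3) If flags contains DPVS_MSG_F_ASYNC, the message is asynchronous and the function returns as soon as it is enqueued.

4) Otherwise the send is synchronous: the loop waits until the message is finished or dropped, or until the wait times out.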

while(!(test_msg_flags(msg, (DPVS_MSG_F_STATE_FIN | DPVS_MSG_F_STATE_DROP)))) {

/* to avoid dead lock when one send a blockable msg to itself */

if (rte_lcore_id() == master_lcore) // on the master lcore, process with msg_master_process()

msg_master_process();

else

msg_slave_process(); // on a slave lcore, process with msg_slave_process()

delay = (uint64_t)msg_timeout * rte_get_timer_hz() / 1E6;

// past the delay: timed out, so set DPVS_MSG_F_TIMEOUT on msg

if (start + delay < rte_get_timer_cycles()) {

RTE_LOG(WARNING, MSGMGR, "%s: uc_msg(type:%d, cid:%d->%d, flags=%d) timeout"

"(%d us), drop...\n", __func__,

msg->type, msg->cid, cid, get_msg_flags(msg), msg_timeout);

add_msg_flags(msg, DPVS_MSG_F_TIMEOUT);

return EDPVS_MSG_DROP;

}

}
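The wait loop combines two ideas: converting a microsecond timeout into timer cycles, and polling the local ring while waiting so that a message sent to oneself cannot deadlock. The sketch below reproduces that shape with an injected clock and poll hook so it can run without DPDK. All demo_ names are hypothetical, and the conversion uses integer arithmetic where the dpvs code divides by the floating-point constant 1E6.

```c
#include <stdint.h>

/* Convert a timeout in microseconds to timer cycles at `hz` cycles per second. */
static uint64_t demo_us_to_cycles(uint64_t timeout_us, uint64_t hz)
{
    return timeout_us * hz / 1000000u;
}

/* True once more than `delay` cycles have elapsed since `start`. */
static int demo_timed_out(uint64_t start, uint64_t delay, uint64_t now)
{
    return start + delay < now;
}

/*
 * Poll until any bit of done_mask is set in *flags, or the deadline passes.
 * Returns 0 on completion and -1 on timeout.
 */
static int demo_wait_done(const volatile uint32_t *flags, uint32_t done_mask,
                          void (*poll)(void), uint64_t (*now)(void),
                          uint64_t delay)
{
    uint64_t start = now();

    while (!(*flags & done_mask)) {
        poll(); /* keep draining our own ring while we wait */
        if (demo_timed_out(start, delay, now()))
            return -1;
    }
    return 0;
}

/* Fake clock and poll hook, only for demonstration. */
static uint64_t demo_ticks;
static uint32_t demo_flags;

static uint64_t demo_fake_now(void) { return demo_ticks++; }

/* Pretend the peer finishes the message once the clock reaches tick 5. */
static void demo_fake_poll(void)
{
    if (demo_ticks >= 5)
        demo_flags |= 0x1;
}
```

With the fake hooks, a delay of 100 ticks lets the wait complete, while a delay of 2 ticks expires before the flag is ever set.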

3.5 multicast_msg_send, the multicast send function

/* "msg" must be produced by "msg_make" */

int multicast_msg_send(struct dpvs_msg *msg, uint32_t flags, struct dpvs_multicast_queue **reply)

{

struct dpvs_msg *new_msg;

struct dpvs_multicast_queue *mc_msg;

uint32_t tflags;

uint64_t start, delay;

int ii, ret;

// set the message flags

add_msg_flags(msg, flags);

// validate arguments

if (unlikely(!msg || DPVS_MSG_MULTICAST != msg->mode)

|| master_lcore != msg->cid) {

RTE_LOG(WARNING, MSGMGR, "%s: invalid multicast msg\n", __func__);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

return EDPVS_INVAL;

}

/* multicast msg of identical type and seq cannot coexist */

/* check whether msg already exists in the mc_wait_list wait list */

if (unlikely(mc_queue_get(msg->type, msg->seq) != NULL)) {

RTE_LOG(WARNING, MSGMGR, "%s: repeated sequence number for multicast msg: "

"type %d, seq %d\n", __func__, msg->type, msg->seq);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

msg->mode = DPVS_MSG_UNICAST; /* do not free msg queue */

return EDPVS_INVAL;

}

/* send unicast msgs from master to all alive slaves */

rte_atomic16_inc(&msg->refcnt); /* refcnt increase by 1 for itself */

for (ii = 0; ii < DPVS_MAX_LCORE; ii++) {

// send a unicast message from the master to every slave lcore

if (slave_lcore_mask & (1L << ii)) {

// allocate a new msg

new_msg = msg_make(msg->type, msg->seq, DPVS_MSG_UNICAST, msg->cid, msg->len, msg->data);

if (unlikely(!new_msg)) {

RTE_LOG(ERR, MSGMGR, "%s: msg make fail\n", __func__);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

rte_atomic16_dec(&msg->refcnt); /* decrease refcnt by 1 manually */

return EDPVS_NOMEM;

}

/* must send F_ASYNC msg as mc_msg has not allocated */

ret = msg_send(new_msg, ii, DPVS_MSG_F_ASYNC, NULL);

if (ret < 0) { /* nonblock msg not equeued */

RTE_LOG(ERR, MSGMGR, "%s: msg send fail\n", __func__);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

rte_atomic16_dec(&msg->refcnt); /* decrease refcnt by 1 manually */

msg_destroy(&new_msg);

return ret;

}

msg_destroy(&new_msg);

rte_atomic16_inc(&msg->refcnt); /* refcnt increase by 1 for each slave */

}

}

mc_msg = rte_zmalloc("mc_msg", sizeof(struct dpvs_multicast_queue), RTE_CACHE_LINE_SIZE);

if (unlikely(!mc_msg)) {

RTE_LOG(ERR, MSGMGR, "%s: no memory\n", __func__);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

return EDPVS_NOMEM;

}

// type and seq are taken from the original msg (chosen by whoever built it with msg_make)

mc_msg->type = msg->type;

mc_msg->seq = msg->seq;

mc_msg->mask = slave_lcore_mask;

mc_msg->org_msg = msg; /* save original msg */

INIT_LIST_HEAD(&mc_msg->mq);

rte_rwlock_write_lock(&mc_wait_lock);

// the wait queue has no free slots

if (mc_wait_list.free_cnt <= 0) {

rte_rwlock_write_unlock(&mc_wait_lock);

RTE_LOG(WARNING, MSGMGR, "%s: multicast msg wait queue full, "

"msg dropped and try later...\n", __func__);

add_msg_flags(msg, DPVS_MSG_F_STATE_DROP);

return EDPVS_MSG_DROP;

}

list_add_tail(&mc_msg->list, &mc_wait_list.list);

--mc_wait_list.free_cnt; // one fewer free slot in mc_wait_list

rte_rwlock_write_unlock(&mc_wait_lock);

if (flags & DPVS_MSG_F_ASYNC)

return EDPVS_OK;

/* blockable msg wait here until done or timeout */

add_msg_flags(msg, DPVS_MSG_F_STATE_SEND);

start = rte_get_timer_cycles();

while(!(test_msg_flags(msg, (DPVS_MSG_F_STATE_FIN | DPVS_MSG_F_STATE_DROP)))) {

msg_master_process(); /* to avoid dead lock if send msg to myself */

delay = (uint64_t)msg_timeout * rte_get_timer_hz() / 1E6;

if (start + delay < rte_get_timer_cycles()) {

RTE_LOG(WARNING, MSGMGR, "%s: mc_msg(type:%d, cid:%d->slaves) timeout"

"(%d us), drop...\n", __func__,

msg->type, msg->cid, msg_timeout);

add_msg_flags(msg, DPVS_MSG_F_TIMEOUT);

return EDPVS_MSG_DROP;

}

}

if (reply)

*reply = mc_msg; /* here, mc_msg store all slave's response msg */

tflags = get_msg_flags(msg);

if (tflags & DPVS_MSG_F_CALLBACK_FAIL)

return EDPVS_MSG_FAIL;

else if (tflags & DPVS_MSG_F_STATE_FIN)

return EDPVS_OK;

else

return EDPVS_MSG_DROP;

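}

1) Check whether msg already exists in the mc_wait_list wait list (referred to below as the wait queue).

2) From the master, send a unicast message to every slave lcore asynchronously by calling msg_send(new_msg, ii, DPVS_MSG_F_ASYNC, NULL).

3) Allocate a dpvs_multicast_queue structure and fill in its fields.

What is dpvs_multicast_queue, and what is it for?

It is the queue in which the master collects the slaves' replies to a multicast message.

mc_msg->type = msg->type; // message type

mc_msg->seq = msg->seq; // message sequence number

mc_msg->mask = slave_lcore_mask; // mask of slave lcores whose replies are still pending

mc_msg->org_msg = msg; // save the original message

INIT_LIST_HEAD(&mc_msg->mq); // initialize the queue that receives the reply msgs

4) Take the write lock and read mc_wait_list.free_cnt to check whether the wait queue still has room; if not, drop msg.

5) Append the allocated mc_msg to the tail of the mc_wait_list multicast wait queue.

list_add_tail(&mc_msg->list, &mc_wait_list.list);

--mc_wait_list.free_cnt; // one fewer free slot in mc_wait_list

6) An asynchronous message returns as soon as it has been enqueued.

A synchronous send loops until the message is finished or dropped, or until the wait times out (analyzed above).

7) Once the send has completed, get_msg_flags reads msg's flags and the return value is chosen from them.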
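The mapping from final flags to a return value in step 7 has a fixed precedence: a callback failure wins over a finished state, and anything else counts as dropped. A minimal sketch of that precedence follows; the flag bits and return codes are hypothetical placeholders, not the values from the dpvs headers.

```c
#include <stdint.h>

/* Hypothetical flag bits; the real definitions live in the dpvs headers. */
#define DEMO_F_STATE_FIN     (UINT32_C(1) << 0)
#define DEMO_F_STATE_DROP    (UINT32_C(1) << 1)
#define DEMO_F_CALLBACK_FAIL (UINT32_C(1) << 2)

/* Hypothetical return codes standing in for EDPVS_OK, EDPVS_MSG_FAIL, EDPVS_MSG_DROP. */
enum demo_rc { DEMO_OK = 0, DEMO_MSG_FAIL = -1, DEMO_MSG_DROP = -2 };

/* Choose the send result from the message's final flags. */
static enum demo_rc demo_result_from_flags(uint32_t flags)
{
    if (flags & DEMO_F_CALLBACK_FAIL)
        return DEMO_MSG_FAIL; /* a callback failed, even if the msg finished */
    if (flags & DEMO_F_STATE_FIN)
        return DEMO_OK;
    return DEMO_MSG_DROP;
}
```

Note that a message carrying both the finished flag and the callback-failure flag is reported as a failure, which matches the order of the checks at the end of msg_send and multicast_msg_send.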

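Answers to the questions raised at the beginning:

1. How are unicast and multicast messages registered (initialized) and used? Fill in a dpvs_msg_type structure and call msg_type_register for a unicast message, or msg_type_mc_register for a multicast message. Messages are then sent with msg_send or multicast_msg_send.

2. What is the difference between registering a unicast message and a multicast message? msg_type_register registers one entry on the single lcore named by cid. msg_type_mc_register registers multicast_msg_cb on the master and unicast_msg_cb on every slave, calling msg_type_register for each lcore.

3. When should a unicast message be registered, and when a multicast message? Register a unicast message for 1:1 communication with a specific lcore. Register a multicast message when the master needs to send the same message to all slaves and collect their replies (1:N).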

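Summary:

1. The master lcore handles both DPVS_MSG_UNICAST and DPVS_MSG_MULTICAST messages.

2. A slave lcore only receives DPVS_MSG_UNICAST messages. If the registered message type's mode is DPVS_MSG_MULTICAST, the slave calls

msg_send(xmsg, master_lcore, DPVS_MSG_F_ASYNC, NULL) to send its reply to the master lcore asynchronously.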