Analysis of User Purchasing Behavior on a CD Website


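The data comes from the detailed purchase records of the CDNow website. By analyzing monthly purchasing trends, individual user spending, purchasing behavior, the repeat-purchase rate, and the next-month repurchase rate, we can understand users' habits more clearly and lay the groundwork for marketing strategy.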

I. Understanding the Data

The dataset contains 69,659 records of CDNow user purchases from January 1997 to June 1998, with 4 fields:

- user_id: user ID
- order_dt: purchase date
- order_products: number of products purchased
- order_amount: purchase amount

II. Loading the Data

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from datetime import datetime

%matplotlib inline

plt.style.use("ggplot")

columns = ["user_id","order_dt","order_products","order_amount"]

df = pd.read_table("CDNOW_master.txt", names=columns, sep=r"\s+")  # raw string avoids an invalid-escape warning

df.head()

| | user_id | order_dt | order_products | order_amount |
|---|---|---|---|---|
| 0 | 1 | 19970101 | 1 | 11.77 |
| 1 | 2 | 19970112 | 1 | 12.00 |
| 2 | 2 | 19970112 | 5 | 77.00 |
| 3 | 3 | 19970102 | 2 | 20.76 |
| 4 | 3 | 19970330 | 2 | 20.76 |

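Looking at the data: order_dt represents a date, but for now it is just an integer built from year, month, and day, with no date semantics. Purchase amounts are decimals. A user may buy more than once a day; for example, user 2 placed two orders on January 12.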

df.describe()

| | user_id | order_dt | order_products | order_amount |
|---|---|---|---|---|
| count | 69659.000000 | 6.965900e+04 | 69659.000000 | 69659.000000 |
| mean | 11470.854592 | 1.997228e+07 | 2.410040 | 35.893648 |
| std | 6819.904848 | 3.837735e+03 | 2.333924 | 36.281942 |
| min | 1.000000 | 1.997010e+07 | 1.000000 | 0.000000 |
| 25% | 5506.000000 | 1.997022e+07 | 1.000000 | 14.490000 |
| 50% | 11410.000000 | 1.997042e+07 | 2.000000 | 25.980000 |
| 75% | 17273.000000 | 1.997111e+07 | 3.000000 | 43.700000 |
| max | 23570.000000 | 1.998063e+07 | 99.000000 | 1286.010000 |

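On average each order contains 2.4 products, with a standard deviation of 2.3, so there is some variability. The median is 2 products and the 75th percentile is 3, so most orders are small. The maximum of 99 products is quite high. Order amounts look similar: most orders are for small amounts.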

df.info()

RangeIndex: 69659 entries, 0 to 69658

Data columns (total 4 columns):

user_id 69659 non-null int64

order_dt 69659 non-null int64

order_products 69659 non-null int64

order_amount 69659 non-null float64

dtypes: float64(1), int64(3)

memory usage: 2.1 MB

There are no missing values, so the data is fairly clean. Next, convert the date column to a proper date type.

Convert the purchase date to a datetime:

df["order_date"] = pd.to_datetime(df.order_dt,format = "%Y%m%d")

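Since the analysis is monthly, truncate every date to the first day of its month:

df["month"] = df.order_date.dt.to_period("M").dt.to_timestamp()  # first day of each month; works in pandas 1.x and 2.x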

df.head()

| | user_id | order_dt | order_products | order_amount | order_date | month |
|---|---|---|---|---|---|---|
| 0 | 1 | 19970101 | 1 | 11.77 | 1997-01-01 | 1997-01-01 |
| 1 | 2 | 19970112 | 1 | 12.00 | 1997-01-12 | 1997-01-01 |
| 2 | 2 | 19970112 | 5 | 77.00 | 1997-01-12 | 1997-01-01 |
| 3 | 3 | 19970102 | 2 | 20.76 | 1997-01-02 | 1997-01-01 |
| 4 | 3 | 19970330 | 2 | 20.76 | 1997-03-30 | 1997-03-01 |

df.info()

RangeIndex: 69659 entries, 0 to 69658

Data columns (total 6 columns):

user_id 69659 non-null int64

order_dt 69659 non-null int64

order_products 69659 non-null int64

order_amount 69659 non-null float64

order_date 69659 non-null datetime64[ns]

month 69659 non-null datetime64[ns]

dtypes: datetime64[ns](2), float64(1), int64(3)

memory usage: 3.2 MB
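As a quick check of the two date conversions, here is a minimal sketch on a hypothetical three-row sample shaped like CDNOW_master.txt. It assumes month starts are obtained with `dt.to_period("M").dt.to_timestamp()`, which yields first-of-month timestamps on both pandas 1.x and 2.x:

```python
import pandas as pd

# Hypothetical sample in the same layout as CDNOW_master.txt: order_dt is an integer yyyymmdd
sample = pd.DataFrame({"user_id": [1, 2, 3], "order_dt": [19970101, 19970112, 19970330]})

# Parse the integer into a real datetime
sample["order_date"] = pd.to_datetime(sample["order_dt"].astype(str), format="%Y%m%d")

# Truncate each date to the first day of its month
sample["month"] = sample["order_date"].dt.to_period("M").dt.to_timestamp()

print(sample)
```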

III. Monthly Purchasing Trends

1. Products purchased per month

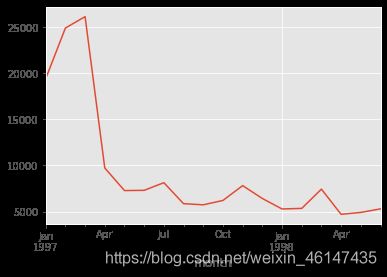

df.groupby("month").order_products.sum().plot()

Counting CD sales by month shows very high sales in the first few months, after which sales level off with a slight downward trend.

2. Spending per month

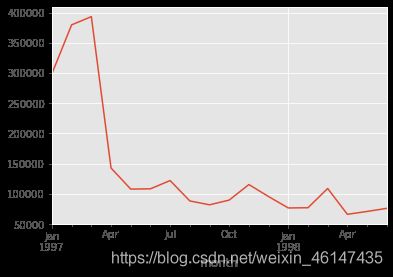

df.groupby("month").order_amount.sum().plot()

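Spending follows the same pattern: high early sales followed by a flat, slowly declining trend, with the first three months standing out as anomalous. Why? One hypothesis is a promotion during the first three months; another is that something about the early users is unusual, for example outliers. With only transaction data available, we cannot decide yet.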

3. Orders per month

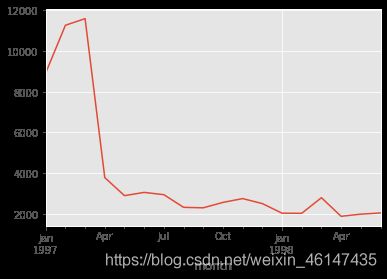

df.groupby("month").user_id.count().plot()

The first three months have about 10,000 orders each; later months average about 2,500 orders.

4. Buyers per month

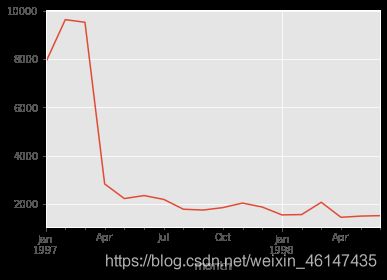

df.groupby("month").user_id.nunique().plot()

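The number of buyers per month is lower than the number of orders per month, but not by much.

In the first three months there are roughly 8,000 to 10,000 buyers per month; in later months the average is below 2,000.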
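The gap between orders and buyers comes from users who order more than once in the same month. A minimal sketch on hypothetical toy orders, computing both figures with one named aggregation (`count` for orders, `nunique` for distinct buyers):

```python
import pandas as pd

# Hypothetical toy orders: user 2 places two orders in January
orders = pd.DataFrame({
    "user_id": [1, 2, 2, 3, 3],
    "month": pd.to_datetime(["1997-01-01", "1997-01-01", "1997-01-01",
                             "1997-01-01", "1997-02-01"]),
})

monthly = orders.groupby("month").agg(
    orders=("user_id", "count"),    # number of orders
    buyers=("user_id", "nunique"),  # number of distinct users
)
print(monthly)
```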

IV. Individual User Spending

1. Descriptive statistics of per-user spending and purchase quantity

user_grouped = df.groupby("user_id").sum(numeric_only=True)  # pandas 2.x cannot sum the datetime columns

user_grouped.describe()

| | order_dt | order_products | order_amount |
|---|---|---|---|
| count | 2.357000e+04 | 23570.000000 | 23570.000000 |
| mean | 5.902627e+07 | 7.122656 | 106.080426 |
| std | 9.460684e+07 | 16.983531 | 240.925195 |
| min | 1.997010e+07 | 1.000000 | 0.000000 |
| 25% | 1.997021e+07 | 1.000000 | 19.970000 |
| 50% | 1.997032e+07 | 3.000000 | 43.395000 |
| 75% | 5.992125e+07 | 7.000000 | 106.475000 |
| max | 4.334408e+09 | 1033.000000 | 13990.930000 |

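- By quantity: there are 23,570 users. Each bought 7 CDs on average, but the median is only 3, and one enthusiast bought 1,033. The mean is above the median, so the distribution is right-skewed: a small share of users bought a large number of CDs.

- By spending: users spent 106 on average, with a median of only 43, and one big spender reached 13,990. Judging by the quantiles and the maximum, the mean sits close to the 75th percentile, which confirms a small group of heavy buyers.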

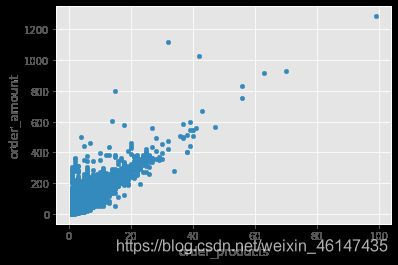

df.plot.scatter(x = "order_products",y = "order_amount")

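This is a scatter plot of individual orders. Order amount grows regularly with the number of products, at roughly 10 per item. Extreme orders are rare, with only a few above 1,000, so they do not explain the anomalous early months.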
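The rough per-item price can be checked by dividing amount by quantity. A small sketch using only the five orders listed by `df.head()` above, so it illustrates the calculation rather than the full-data result:

```python
import pandas as pd

# The five orders shown by df.head() earlier
orders = pd.DataFrame({"order_products": [1, 1, 5, 2, 2],
                       "order_amount": [11.77, 12.00, 77.00, 20.76, 20.76]})

# Price per item for each order (order_products is at least 1, so no division by zero)
unit_price = orders["order_amount"] / orders["order_products"]
print(unit_price.describe())
```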

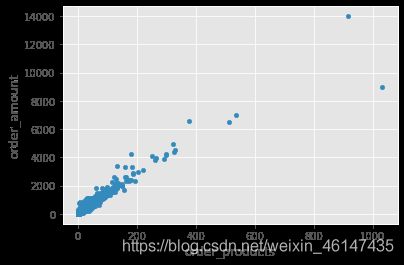

df.groupby("user_id").sum(numeric_only=True).plot.scatter(x="order_products", y="order_amount")

At the user level the scatter plot also looks healthy, and the pattern is even more regular than for individual orders.

2. Distribution of user spending

Users with very high purchasing power exist, but they are few. Histograms make the distribution easier to see.

plt.figure(figsize = (12,4))

plt.subplot(1,2,1)

df.order_amount.hist(bins = 20)

plt.subplot(1,2,2)

df.groupby("user_id").order_products.sum().hist(bins = 30)

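The histograms (left: amount per order; right: products per user) show that most users spend little, while high spenders are barely visible. This matches the usual pattern in consumer behavior.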

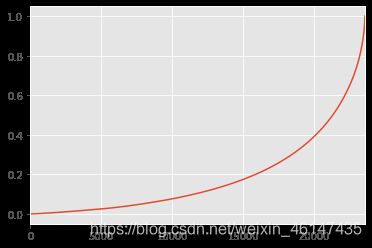

3. Cumulative share of spending by user

user_cumsum = df.groupby("user_id").order_amount.sum().sort_values().reset_index()

user_cumsum["amount_cumsum"] = user_cumsum.order_amount.cumsum()

user_cumsum.tail()

| | user_id | order_amount | amount_cumsum |
|---|---|---|---|
| 23565 | 7931 | 6497.18 | 2463822.60 |
| 23566 | 19339 | 6552.70 | 2470375.30 |
| 23567 | 7983 | 6973.07 | 2477348.37 |
| 23568 | 14048 | 8976.33 | 2486324.70 |
| 23569 | 7592 | 13990.93 | 2500315.63 |

amount_total = user_cumsum.amount_cumsum.max()

user_cumsum["prop"] = user_cumsum.amount_cumsum / amount_total  # vectorized; same result as a row-wise apply

user_cumsum.tail()

| | user_id | order_amount | amount_cumsum | prop |
|---|---|---|---|---|
| 23565 | 7931 | 6497.18 | 2463822.60 | 0.985405 |
| 23566 | 19339 | 6552.70 | 2470375.30 | 0.988025 |
| 23567 | 7983 | 6973.07 | 2477348.37 | 0.990814 |
| 23568 | 14048 | 8976.33 | 2486324.70 | 0.994404 |
| 23569 | 7592 | 13990.93 | 2500315.63 | 1.000000 |

user_cumsum.prop.plot()

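In this plot the x-axis is the users sorted by total spending in ascending order, and the y-axis is their cumulative share of total spending. The first 20,000 users contribute about 40% of spending, while the remaining ~3,500 users (about 15% of users) contribute about 60%, a clear 80/20 pattern.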
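The 80/20 reading can also be expressed as one number: the share of revenue from the top 20% of users. A minimal sketch on hypothetical per-user totals, computing both the cumulative-share curve (as in `user_cumsum`) and the top-20% share:

```python
import numpy as np
import pandas as pd

# Hypothetical per-user total spending
spend = pd.Series([5, 5, 10, 10, 10, 20, 20, 30, 90, 200], name="order_amount")

# Sort ascending, as in user_cumsum, and compute the cumulative share of revenue
cum_share = spend.sort_values().cumsum() / spend.sum()

# Share of revenue contributed by the top 20% of users
n_top = int(np.ceil(len(spend) * 0.2))
top_share = spend.sort_values(ascending=False).head(n_top).sum() / spend.sum()

print(cum_share.round(3).tolist())
print(f"top {n_top} users contribute {top_share:.1%} of revenue")
```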

V. User Purchasing Behavior

1. First purchase date

df.groupby("user_id").month.min().value_counts()

1997-02-01 8476

1997-01-01 7846

1997-03-01 7248

Name: month, dtype: int64

df.groupby("user_id").order_date.min().value_counts().sort_index().plot()  # sort by date so the line runs chronologically

2. Last purchase date

df.groupby("user_id").month.max().value_counts()

1997-02-01 4912

1997-03-01 4478

1997-01-01 4192

1998-06-01 1506

1998-05-01 1042

1998-03-01 993

1998-04-01 769

1997-04-01 677

1997-12-01 620

1997-11-01 609

1998-02-01 550

1998-01-01 514

1997-06-01 499

1997-07-01 493

1997-05-01 480

1997-10-01 455

1997-09-01 397

1997-08-01 384

Name: month, dtype: int64

df.groupby("user_id").order_date.max().value_counts().sort_index().plot()  # sort by date so the line runs chronologically

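From these two views:

- Users' first purchases are concentrated in the first three months, with a sharp fluctuation around February 15.

- Last purchases are spread more widely than first purchases, but most of them also fall in the first three months, so loyal users are few. Over time the number of users making their last purchase rises, suggesting growing churn. This suggests that the dataset consists of users who made their first purchase in the first three months, tracked over the full 18-month period, which explains the anomalous spending and sales figures of the first three months.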
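The cohort reading rests on how many users stop buying early. A minimal sketch on hypothetical orders, taking the first and last purchase per user and an assumed cut-off of 1 April 1997 to measure the share of users whose last purchase falls in the first three months:

```python
import pandas as pd

# Hypothetical orders: every user first buys in Jan-Mar 1997, like the CDNow cohort
orders = pd.DataFrame({
    "user_id": [1, 2, 2, 3, 3, 4],
    "order_date": pd.to_datetime(["1997-01-05", "1997-02-10", "1997-03-01",
                                  "1997-03-20", "1998-05-02", "1997-01-15"]),
})

span = orders.groupby("user_id")["order_date"].agg(["min", "max"])
cutoff = pd.Timestamp("1997-04-01")  # assumed end of the first three months

# Fraction of users whose LAST purchase is still inside the first three months
early_last = (span["max"] < cutoff).mean()
print(span)
print(f"{early_last:.0%} of users never bought after March 1997")
```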

3. User segmentation

3.1 Building an RFM model

rfm = df.pivot_table(index = "user_id",

values = ["order_products","order_amount","order_date"],

aggfunc = {"order_date":"max",

"order_products":"sum",

"order_amount":"sum"})

rfm.head()

| user_id | order_amount | order_date | order_products |
|---|---|---|---|
| 1 | 11.77 | 1997-01-01 | 1 |
| 2 | 89.00 | 1997-01-12 | 6 |
| 3 | 156.46 | 1998-05-28 | 16 |
| 4 | 100.50 | 1997-12-12 | 7 |
| 5 | 385.61 | 1998-01-03 | 29 |

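# R = days between the user's last purchase and the latest purchase date in the data
rfm["R"] = (rfm.order_date.max() - rfm.order_date) / np.timedelta64(1, "D")

# F = total products bought, M = total amount spent
rfm.rename(columns={"order_products": "F", "order_amount": "M"}, inplace=True)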

rfm.head()

| user_id | M | order_date | F | R |
|---|---|---|---|---|
| 1 | 11.77 | 1997-01-01 | 1 | 545.0 |
| 2 | 89.00 | 1997-01-12 | 6 | 534.0 |
| 3 | 156.46 | 1998-05-28 | 16 | 33.0 |
| 4 | 100.50 | 1997-12-12 | 7 | 200.0 |
| 5 | 385.61 | 1998-01-03 | 29 | 178.0 |

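def rfm_func(x):
    # x holds one user's de-meaned R, F, M values; "1" if at least 1 above the mean, else "0"
    level = x.apply(lambda v: "1" if v >= 1 else "0")
    label = level["R"] + level["F"] + level["M"]
    d = {
        '111': '重要价值客户',  # important value customers
        '011': '重要保持客户',  # important customers to keep
        '101': '重要挽留客户',  # important customers to win back
        '001': '重要发展客户',  # important customers to develop
        '110': '一般价值客户',  # regular value customers
        '010': '一般保持客户',  # regular customers to keep
        '100': '一般挽留客户',  # regular customers to win back
        '000': '一般发展客户',  # regular customers to develop
    }
    return d[label]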

rfm["label"] = rfm[["R","F","M"]].apply(lambda x:x-x.mean()).apply(rfm_func,axis = 1)

rfm.head()

| user_id | M | order_date | F | R | label |
|---|---|---|---|---|---|
| 1 | 11.77 | 1997-01-01 | 1 | 545.0 | 一般挽留客户 |
| 2 | 89.00 | 1997-01-12 | 6 | 534.0 | 一般挽留客户 |
| 3 | 156.46 | 1998-05-28 | 16 | 33.0 | 重要保持客户 |
| 4 | 100.50 | 1997-12-12 | 7 | 200.0 | 一般发展客户 |
| 5 | 385.61 | 1998-01-03 | 29 | 178.0 | 重要保持客户 |

rfm.groupby("label").sum(numeric_only=True)  # skip the datetime column order_date

| label | M | F | R |
|---|---|---|---|
| 一般价值客户 | 1767.11 | 182 | 8512.0 |
| 一般保持客户 | 5100.77 | 492 | 7782.0 |
| 一般发展客户 | 215075.77 | 15428 | 621894.0 |
| 一般挽留客户 | 445233.28 | 29915 | 6983699.0 |
| 重要价值客户 | 147180.09 | 9849 | 286676.0 |
| 重要保持客户 | 1555586.51 | 105509 | 476502.0 |
| 重要发展客户 | 80466.30 | 4184 | 96009.0 |
| 重要挽留客户 | 49905.80 | 2322 | 174340.0 |

rfm.groupby("label").count()

| label | M | order_date | F | R |
|---|---|---|---|---|
| 一般价值客户 | 18 | 18 | 18 | 18 |
| 一般保持客户 | 53 | 53 | 53 | 53 |
| 一般发展客户 | 3493 | 3493 | 3493 | 3493 |
| 一般挽留客户 | 14138 | 14138 | 14138 | 14138 |
| 重要价值客户 | 631 | 631 | 631 | 631 |
| 重要保持客户 | 4267 | 4267 | 4267 | 4267 |
| 重要发展客户 | 599 | 599 | 599 | 599 |
| 重要挽留客户 | 371 | 371 | 371 | 371 |

plt.rcParams['font.sans-serif'] = ['SimHei']  # a font that can render the Chinese segment labels
plt.rcParams['axes.unicode_minus'] = False    # keep minus signs readable with this font

for label, grouped in rfm.groupby('label'):
    # one scatter series per RFM segment
    plt.scatter(grouped['F'], grouped['R'], label=label)

plt.legend(loc='best')  # legend position
plt.xlabel('Frequency')
plt.ylabel('Recency')
plt.show()

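By headcount, the largest RFM segment is 一般挽留客户 (regular customers to win back, 14,138 users), while the 4,267 重要保持客户 (important customers to keep) account for most of the spending (about 1.56 million of the roughly 2.5 million total, or about 62%). The thresholds are means, which extreme buyers pull upward, so in practice the cut-offs should be set according to business needs.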
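Mean-based thresholds are pulled upward by a few extreme buyers. As one alternative, assumed here rather than taken from the analysis above, the cut-off can be the median. The sketch uses the first five users from the `rfm` table plus a hypothetical sixth heavy buyer and compares the codes produced by the two thresholds; the helper `rfm_code` is illustrative only:

```python
import pandas as pd

# R/F/M for users 1-5 from the rfm table, plus a hypothetical heavy buyer (user 6)
rfm_demo = pd.DataFrame(
    {"R": [545.0, 534.0, 33.0, 200.0, 178.0, 10.0],
     "F": [1, 6, 16, 7, 29, 1033],
     "M": [11.77, 89.00, 156.46, 100.50, 385.61, 13990.93]},
    index=pd.Index([1, 2, 3, 4, 5, 6], name="user_id"),
)

def rfm_code(frame, center="mean"):
    """Return an 'RFM' string per user: '1' if the value is above the threshold, else '0'.

    center="mean" approximates the de-meaned comparison above (which requires value - mean >= 1);
    center="median" is an alternative that is less sensitive to extreme buyers.
    """
    thresholds = frame.mean() if center == "mean" else frame.median()
    flags = (frame > thresholds).astype(int).astype(str)
    return flags["R"] + flags["F"] + flags["M"]

print(pd.DataFrame({"mean": rfm_code(rfm_demo), "median": rfm_code(rfm_demo, "median")}))
```

With the mean, the single heavy buyer lifts the F and M thresholds so far that users 3 and 5 drop to "000"; the median keeps them in "011".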

3.2 Segmenting users as new, active, returning, or inactive

pivoted_counts = df.pivot_table(index = 'user_id',

columns = 'month',

values = 'order_dt',

aggfunc = 'count').fillna(0)

pivoted_counts.columns = df.month.sort_values().astype("str").unique()

pivoted_counts.head()

| user_id | 1997-01-01 | 1997-02-01 | 1997-03-01 | 1997-04-01 | 1997-05-01 | 1997-06-01 | 1997-07-01 | 1997-08-01 | 1997-09-01 | 1997-10-01 | 1997-11-01 | 1997-12-01 | 1998-01-01 | 1998-02-01 | 1998-03-01 | 1998-04-01 | 1998-05-01 | 1998-06-01 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 2 | 2.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 3 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 2.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 |
| 4 | 2.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 5 | 2.0 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 2.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

df_purchase = (pivoted_counts > 0).astype(int)  # 1 if the user bought in that month, else 0

df_purchase.head()

| user_id | 1997-01-01 | 1997-02-01 | 1997-03-01 | 1997-04-01 | 1997-05-01 | 1997-06-01 | 1997-07-01 | 1997-08-01 | 1997-09-01 | 1997-10-01 | 1997-11-01 | 1997-12-01 | 1998-01-01 | 1998-02-01 | 1998-03-01 | 1998-04-01 | 1998-05-01 | 1998-06-01 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 3 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |
| 4 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

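def active_status(data):
    # data: one user's 0/1 purchase flags, one entry per month
    status = []
    for i in range(len(data)):
        if data.iloc[i] == 0:
            if len(status) > 0:
                if status[i-1] == 'unreg':
                    status.append('unreg')     # no purchase yet
                else:
                    status.append('unactive')  # bought before, but not this month
            else:
                status.append('unreg')
        else:
            if len(status) == 0:
                status.append('new')           # first purchase in the first month
            else:
                if status[i-1] == 'unactive':
                    status.append('return')    # bought again after an inactive month
                elif status[i-1] == 'unreg':
                    status.append('new')       # first purchase
                else:
                    status.append('active')    # bought in consecutive months
    return pd.Series(status, index=data.index)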

purchase_states = df_purchase.apply(active_status,axis = 1)

purchase_states.head()

| user_id | 1997-01-01 | 1997-02-01 | 1997-03-01 | 1997-04-01 | 1997-05-01 | 1997-06-01 | 1997-07-01 | 1997-08-01 | 1997-09-01 | 1997-10-01 | 1997-11-01 | 1997-12-01 | 1998-01-01 | 1998-02-01 | 1998-03-01 | 1998-04-01 | 1998-05-01 | 1998-06-01 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | new | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive |
| 2 | new | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive | unactive |
| 3 | new | unactive | return | active | unactive | unactive | unactive | unactive | unactive | unactive | return | unactive | unactive | unactive | unactive | unactive | return | unactive |
| 4 | new | unactive | unactive | unactive | unactive | unactive | unactive | return | unactive | unactive | unactive | return | unactive | unactive | unactive | unactive | unactive | unactive |
| 5 | new | active | unactive | return | active | active | active | unactive | return | unactive | unactive | return | active | unactive | unactive | unactive | unactive | unactive |

purchase_states_ct = purchase_states.replace('unreg', np.nan).apply(lambda x: x.value_counts())  # count statuses per month, ignoring 'unreg'

purchase_states_ct

| | 1997-01-01 | 1997-02-01 | 1997-03-01 | 1997-04-01 | 1997-05-01 | 1997-06-01 | 1997-07-01 | 1997-08-01 | 1997-09-01 | 1997-10-01 | 1997-11-01 | 1997-12-01 | 1998-01-01 | 1998-02-01 | 1998-03-01 | 1998-04-01 | 1998-05-01 | 1998-06-01 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| active | NaN | 1157.0 | 1681 | 1773.0 | 852.0 | 747.0 | 746.0 | 604.0 | 528.0 | 532.0 | 624.0 | 632.0 | 512.0 | 472.0 | 571.0 | 518.0 | 459.0 | 446.0 |
| new | 7846.0 | 8476.0 | 7248 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| return | NaN | NaN | 595 | 1049.0 | 1362.0 | 1592.0 | 1434.0 | 1168.0 | 1211.0 | 1307.0 | 1404.0 | 1232.0 | 1025.0 | 1079.0 | 1489.0 | 919.0 | 1029.0 | 1060.0 |
| unactive | NaN | 6689.0 | 14046 | 20748.0 | 21356.0 | 21231.0 | 21390.0 | 21798.0 | 21831.0 | 21731.0 | 21542.0 | 21706.0 | 22033.0 | 22019.0 | 21510.0 | 22133.0 | 22082.0 | 22064.0 |

purchase_states_ct.fillna(0).T

| | active | new | return | unactive |
|---|---|---|---|---|
| 1997-01-01 | 0.0 | 7846.0 | 0.0 | 0.0 |
| 1997-02-01 | 1157.0 | 8476.0 | 0.0 | 6689.0 |
| 1997-03-01 | 1681.0 | 7248.0 | 595.0 | 14046.0 |
| 1997-04-01 | 1773.0 | 0.0 | 1049.0 | 20748.0 |
| 1997-05-01 | 852.0 | 0.0 | 1362.0 | 21356.0 |
| 1997-06-01 | 747.0 | 0.0 | 1592.0 | 21231.0 |
| 1997-07-01 | 746.0 | 0.0 | 1434.0 | 21390.0 |
| 1997-08-01 | 604.0 | 0.0 | 1168.0 | 21798.0 |
| 1997-09-01 | 528.0 | 0.0 | 1211.0 | 21831.0 |
| 1997-10-01 | 532.0 | 0.0 | 1307.0 | 21731.0 |
| 1997-11-01 | 624.0 | 0.0 | 1404.0 | 21542.0 |
| 1997-12-01 | 632.0 | 0.0 | 1232.0 | 21706.0 |
| 1998-01-01 | 512.0 | 0.0 | 1025.0 | 22033.0 |
| 1998-02-01 | 472.0 | 0.0 | 1079.0 | 22019.0 |
| 1998-03-01 | 571.0 | 0.0 | 1489.0 | 21510.0 |
| 1998-04-01 | 518.0 | 0.0 | 919.0 | 22133.0 |
| 1998-05-01 | 459.0 | 0.0 | 1029.0 | 22082.0 |
| 1998-06-01 | 446.0 | 0.0 | 1060.0 | 22064.0 |

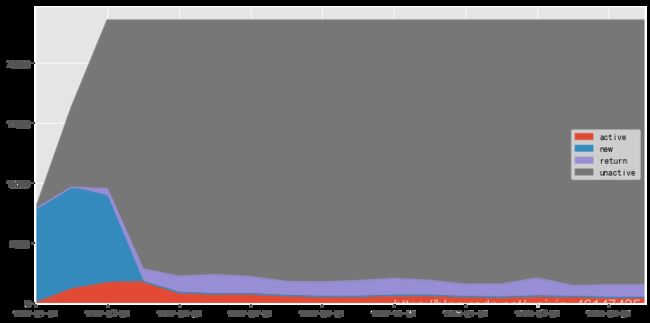

purchase_states_ct.fillna(0).T.plot.area(figsize = (12,6))

plt.show()

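In the area chart, the blue (new) and gray (inactive) areas take up most of the space. They can largely be set aside, since they only describe what happens after users' purchases at some earlier point. The red area (active users) is very stable and represents the core users; together with the purple area (returning users), it gives the share of users who buy in a given month.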
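The status rules implemented by `active_status` are easiest to see on a single sequence. The sketch below is a compact, pure-Python restatement of the same rules; the function name `classify` and the sample sequences are hypothetical, and 1 means a purchase in that month:

```python
def classify(months):
    """Restate active_status for a plain list of 0/1 monthly purchase flags."""
    status = []
    for bought in months:
        prev = status[-1] if status else "unreg"
        if bought:
            # first purchase ever -> new; after an inactive month -> return; otherwise active
            status.append("new" if prev == "unreg" else "return" if prev == "unactive" else "active")
        else:
            # before the first purchase -> unreg; afterwards -> unactive
            status.append("unreg" if prev == "unreg" else "unactive")
    return status

print(classify([0, 1, 1, 0, 1, 0]))
```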

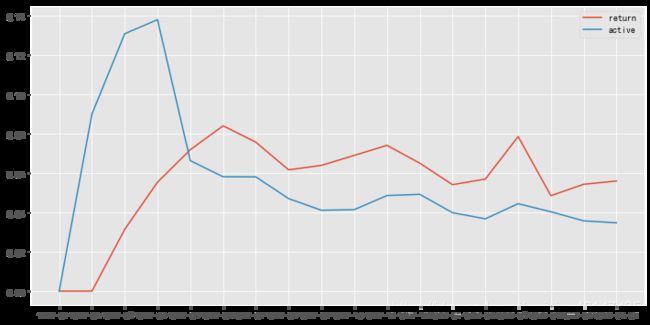

3.3 Share of returning users

plt.figure(figsize = (12,6))

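rate = purchase_states_ct.fillna(0).T.apply(lambda x: x / x.sum(), axis=1)  # share of each status within each month (row-wise)

plt.plot(rate["return"], label="return")

plt.plot(rate["active"], label="active")

plt.legend()

plt.show()

Normalized within each month, returning users make up roughly 4% to 7% of all users from April 1997 onward, fluctuating and drifting slightly lower in 1998, which suggests customers are churning.

Active users, who buy in consecutive months and can be considered more loyal than returning users, decline more clearly: from about 7.5% of users in April 1997 to roughly 2% by mid-1998.

Among the users who buy in the later months, about two-thirds are returning users and one-third are active users (June 1998: 1,060 returning vs. 446 active), so overall user quality is still reasonable. This is consistent with the 80/20 pattern found earlier: in retail, focusing on high-quality users remains the key.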
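A status's share of users within a month comes from dividing each row of the month-by-status table by that row's total. A minimal sketch using two rows copied from the `purchase_states_ct.fillna(0).T` table above:

```python
import pandas as pd

# Two rows copied from the purchase_states_ct.fillna(0).T table
ct = pd.DataFrame(
    {"active": [1773.0, 446.0], "new": [0.0, 0.0],
     "return": [1049.0, 1060.0], "unactive": [20748.0, 22064.0]},
    index=["1997-04-01", "1998-06-01"],
)

# Share of each status within a month: divide every row by its own total
share = ct.div(ct.sum(axis=1), axis=0)

# Among users who actually bought that month: returning vs. active
buyers = ct[["active", "return"]]
buyer_mix = buyers.div(buyers.sum(axis=1), axis=0)

print(share.round(4))
print(buyer_mix.round(3))
```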

4. Purchase intervals

order_diff = df.groupby("user_id").apply(lambda x:x.order_date - x.order_date.shift())

order_diff.head(10)

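| user_id | index | order_date |
|---|---|---|
| 1 | 0 | NaT |
| 2 | 1 | NaT |
| 2 | 2 | 0 days |
| 3 | 3 | NaT |
| 3 | 4 | 87 days |
| 3 | 5 | 3 days |
| 3 | 6 | 227 days |
| 3 | 7 | 10 days |
| 3 | 8 | 184 days |
| 4 | 9 | NaT |

Name: order_date, dtype: timedelta64[ns]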

order_diff.describe()

count 46089

mean 68 days 23:22:13.567662

std 91 days 00:47:33.924168

min 0 days 00:00:00

25% 10 days 00:00:00

50% 31 days 00:00:00

75% 89 days 00:00:00

max 533 days 00:00:00

Name: order_date, dtype: object

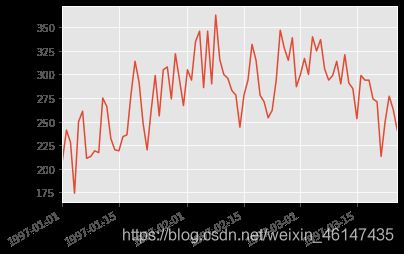

(order_diff/np.timedelta64(1,"D")).hist(bins = 20)

plt.show()

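- Order intervals look roughly exponentially distributed.
- The average interval between a user's purchases is 68 days.
- Most intervals (over 75%) are shorter than 100 days.

The purchase-interval histogram has a typical long tail: most users buy again after a short gap. Re-engagement touchpoints could be scheduled accordingly: give a coupon right after a purchase, ask how the user likes the CD 10 days later, remind them that the coupon expires at 30 days, and send a text message at 60 days.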
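The interval statistics behind these touchpoints can also be derived with `groupby(...).diff()` after sorting by date, an alternative to the `shift`-based `apply` above. A minimal sketch on hypothetical orders:

```python
import pandas as pd

# Hypothetical orders for two users
orders = pd.DataFrame({
    "user_id": [3, 3, 3, 5, 5],
    "order_date": pd.to_datetime(["1997-01-02", "1997-03-30", "1997-04-02",
                                  "1997-01-01", "1997-01-11"]),
})

# Days between consecutive orders of the same user (each user's first order -> NaN)
orders = orders.sort_values(["user_id", "order_date"])
gaps = orders.groupby("user_id")["order_date"].diff().dt.days

# Quantiles of the gaps are one way to place re-engagement touchpoints
print(gaps.describe())
print(gaps.quantile([0.25, 0.5, 0.75]))
```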

5. User lifetime

user_life = df.groupby("user_id").order_date.agg(["min","max"])

user_life.head()

| user_id | min | max |
|---|---|---|
| 1 | 1997-01-01 | 1997-01-01 |
| 2 | 1997-01-12 | 1997-01-12 |
| 3 | 1997-01-02 | 1998-05-28 |
| 4 | 1997-01-01 | 1997-12-12 |
| 5 | 1997-01-01 | 1998-01-03 |

(user_life['min'] == user_life['max']).value_counts().plot.pie()

(user_life["max"] - user_life["min"]).describe()

count 23570

mean 134 days 20:55:36.987696

std 180 days 13:46:43.039788

min 0 days 00:00:00

25% 0 days 00:00:00

50% 0 days 00:00:00

75% 294 days 00:00:00

max 544 days 00:00:00

dtype: object

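- The average user lifetime is 134 days, higher than expected, but the median is 0 days: most users' first purchase is also their last, and these are low-quality users. The maximum is 544 days, nearly the whole span of the dataset; such users are core users.

- Because every user in the data made their first purchase in the first three months, these figures describe the lifetime of the January to March cohort. Since users keep buying after the observation window ends, their true average lifetime will be longer.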
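The lifetime figures reduce to three steps: first and last purchase per user, their difference in days, and the share of users whose difference is zero. A minimal sketch on hypothetical orders:

```python
import pandas as pd

# Hypothetical orders (user 2 buys twice on the same day)
orders = pd.DataFrame({
    "user_id": [1, 2, 2, 3, 3],
    "order_date": pd.to_datetime(["1997-01-01", "1997-01-12", "1997-01-12",
                                  "1997-01-02", "1998-05-28"]),
})

life = orders.groupby("user_id")["order_date"].agg(["min", "max"])
life_days = (life["max"] - life["min"]).dt.days  # lifetime in days

one_time_share = (life_days == 0).mean()         # users who bought on a single day only
repeat_mean = life_days[life_days > 0].mean()    # mean lifetime of users with lifetime > 0
print(life_days.tolist(), one_time_share, repeat_mean)
```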

plt.figure(figsize = (20,6))

plt.subplot(121)

((user_life["max"] - user_life["min"])/np.timedelta64(1,"D")).hist(bins = 15)

plt.title('User lifetime histogram')

plt.xlabel('Days')

plt.ylabel('Users')

plt.subplot(122)

u_1 = (user_life["max"] - user_life["min"]) / np.timedelta64(1, "D")  # lifetime in days per user

u_1[u_1 > 0].hist(bins = 40)

plt.title('Lifetime histogram of users who bought on 2+ different days')

plt.xlabel('Days')

plt.ylabel('Users')

plt.show()

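Comparing the two histograms: after filtering out users with a lifetime of 0, the distribution is bimodal. Many users still have a lifetime close to 0 days, but the picture is far more informative than the first histogram. Some low-quality users buy twice but do not keep buying, so to improve conversion, users should be actively guided within 30 days of their first purchase. A smaller group falls between 50 and 300 days; these are ordinary users with moderate loyalty. Users beyond 400 days are high-quality users, and their number is still growing toward the end of the period. They are already core users with very high loyalty, and their interests should be protected.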

u_1[u_1 > 0].mean()

276.0448072247308

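Users with a nonzero lifetime (purchases on at least two different days) have an average lifetime of 276 days, far above the overall average. Guiding users to buy again after their first purchase is therefore an effective way to extend their lifetime.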

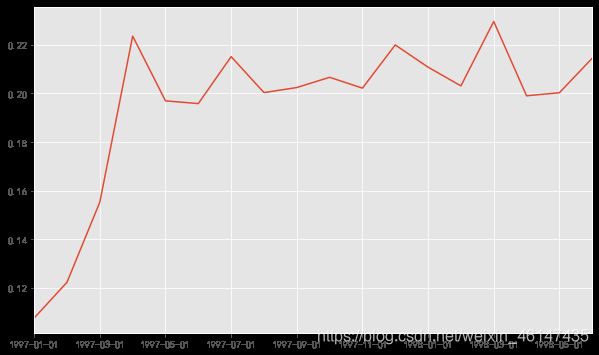

VI. Repeat-Purchase Rate and Next-Month Repurchase Rate

6.1 Repeat-purchase rate

purchase_r = (pivoted_counts > 1).astype(float).where(pivoted_counts > 0)  # 1 = more than one order that month, 0 = exactly one, NaN = none

purchase_r.head()

| user_id | 1997-01-01 | 1997-02-01 | 1997-03-01 | 1997-04-01 | 1997-05-01 | 1997-06-01 | 1997-07-01 | 1997-08-01 | 1997-09-01 | 1997-10-01 | 1997-11-01 | 1997-12-01 | 1998-01-01 | 1998-02-01 | 1998-03-01 | 1998-04-01 | 1998-05-01 | 1998-06-01 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 2 | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 3 | 0.0 | NaN | 0.0 | 0.0 | NaN | NaN | NaN | NaN | NaN | NaN | 1.0 | NaN | NaN | NaN | NaN | NaN | 0.0 | NaN |
| 4 | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | 0.0 | NaN | NaN | NaN | 0.0 | NaN | NaN | NaN | NaN | NaN | NaN |
| 5 | 1.0 | 0.0 | NaN | 0.0 | 0.0 | 0.0 | 0.0 | NaN | 0.0 | NaN | NaN | 1.0 | 0.0 | NaN | NaN | NaN | NaN | NaN |

(purchase_r.sum()/purchase_r.count()).plot(figsize = (10,6))

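The repeat-purchase rate (the share of a month's buyers who ordered more than once in that month) is stable at around 20%. It is lower in the first three months because of the influx of new users, most of whom bought only once.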
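The repeat-purchase rate is the mean of the 1/0 flags over the users who bought in that month. A minimal sketch on a hypothetical count matrix shaped like `pivoted_counts`, building the same 1/0/NaN encoding with vectorized operations:

```python
import pandas as pd

# Hypothetical order counts per user (rows) and month (columns), like pivoted_counts
counts = pd.DataFrame(
    {"1997-01-01": [1, 2, 1, 2], "1997-02-01": [0, 0, 0, 1], "1997-03-01": [0, 0, 1, 0]},
    index=pd.Index([1, 2, 3, 4], name="user_id"),
)

# 1.0 = more than one order that month, 0.0 = exactly one, NaN = no purchase
repeat = (counts > 1).astype(float).where(counts > 0)

# Repeat-purchase rate = repeat buyers / all buyers of the month (mean skips NaN)
repeat_rate = repeat.mean()
print(repeat_rate)
```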

6.2 Next-month repurchase rate

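def purchase_back(data):
    # data: one user's 0/1 purchase flags, one entry per month
    status = []
    for i in range(len(data) - 1):
        if data.iloc[i] == 1:
            if data.iloc[i+1] == 1:
                status.append(1)   # bought this month and again next month
            elif data.iloc[i+1] == 0:
                status.append(0)   # bought this month but not next month
        else:
            status.append(np.nan)  # no purchase this month
    status.append(np.nan)          # the last month has no following month to observe
    return pd.Series(status, index=data.index)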

purchase_b = df_purchase.apply(purchase_back,axis = 1)

purchase_b.head()

| user_id | 1997-01-01 | 1997-02-01 | 1997-03-01 | 1997-04-01 | 1997-05-01 | 1997-06-01 | 1997-07-01 | 1997-08-01 | 1997-09-01 | 1997-10-01 | 1997-11-01 | 1997-12-01 | 1998-01-01 | 1998-02-01 | 1998-03-01 | 1998-04-01 | 1998-05-01 | 1998-06-01 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 2 | 0.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 3 | 0.0 | NaN | 1.0 | 0.0 | NaN | NaN | NaN | NaN | NaN | NaN | 0.0 | NaN | NaN | NaN | NaN | NaN | 0.0 | NaN |
| 4 | 0.0 | NaN | NaN | NaN | NaN | NaN | NaN | 0.0 | NaN | NaN | NaN | 0.0 | NaN | NaN | NaN | NaN | NaN | NaN |
| 5 | 1.0 | 0.0 | NaN | 1.0 | 1.0 | 1.0 | 0.0 | NaN | 0.0 | NaN | NaN | 1.0 | 0.0 | NaN | NaN | NaN | NaN | NaN |

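Here 1 means the user bought in that month and again in the following month (a next-month repurchase), 0 means the user bought in that month but not in the next one, and NaN means no purchase in that month.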
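The same 1/0/NaN encoding can be built without a per-user loop by comparing each month with the next one. A minimal sketch on a hypothetical flag matrix shaped like `df_purchase`, assuming the columns are in chronological order:

```python
import pandas as pd

# Hypothetical 0/1 purchase flags per user and month, like df_purchase
flags = pd.DataFrame(
    [[1, 0, 1, 1], [1, 1, 0, 0], [0, 1, 0, 0]],
    index=pd.Index([1, 2, 3], name="user_id"),
    columns=["1997-01", "1997-02", "1997-03", "1997-04"],
)

# Shift columns left by one: next_month[t] holds the flag for month t+1
next_month = flags.shift(-1, axis=1)

# 1.0 = bought in t and again in t+1, 0.0 = bought in t only, NaN = no purchase in t
back = next_month.where(flags == 1)

# Next-month repurchase rate per month (the last month is NaN: no following month)
back_rate = back.sum() / back.count()
print(back_rate)
```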

plt.figure(figsize=(20,4))

plt.subplot(211)

(purchase_b.sum() / purchase_b.count()).plot()

plt.title('Next-month repurchase rate')

plt.ylabel('Rate')

plt.subplot(212)

plt.plot(purchase_b.sum(), label='Users who bought again next month')

plt.plot(purchase_b.count(), label='Users who bought this month')

plt.xlabel('month')

plt.ylabel('Users')

plt.legend()

plt.show()

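- The rate plot shows that the next-month repurchase rate is higher than the repeat-purchase rate, at around 30%, and fairly volatile. For new users it is around 15%, well below that of existing users.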

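- The headcount plot shows that the number of repurchasing users stabilizes after the first three months, so the fluctuation may come from seasonal marketing peaks and troughs. The repeat-purchase users seen earlier behave much like the repurchasing users; the two groups probably overlap in part and represent high-quality users.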

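- Taking the two rates together, new customers are less loyal overall than existing ones, and existing customers have a good repurchase rate but buy somewhat infrequently. This is the purchasing profile of CDNow's users.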