
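The previous installment covered several hands-on Azkaban cases. This post walks through installing Sqoop, the data migration tool, and a simple import to help you get familiar with it.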

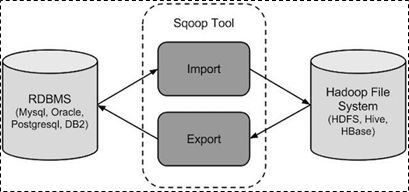

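1. Overview

Sqoop is an Apache tool for transferring data between Hadoop and relational database servers. Import: data from MySQL, Oracle, and similar databases is loaded into Hadoop storage systems such as HDFS, Hive, and HBase. Export: data in the Hadoop file system is written out to a relational database.

2. How it works

Sqoop translates each import or export command into a MapReduce program. The generated MapReduce job mainly customizes the InputFormat and OutputFormat.

3. Sqoop in practice and how it works

Installing Sqoop:

Installing Sqoop requires a working Java and Hadoop environment.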

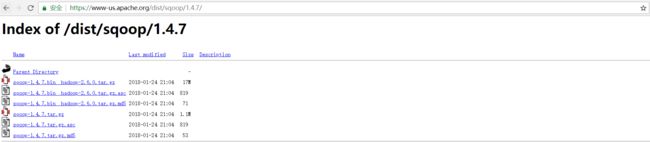

(1) Download, upload, and extract

Latest stable release: https://www-us.apache.org/dist/sqoop/1.4.7/

Alt+p

put sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz -C /home/hadoop

mv sqoop-1.4.7.bin__hadoop-2.6.0 sqoop

(2) Edit the configuration file

cd /home/hadoop/sqoop/conf

mv sqoop-env-template.sh sqoop-env.sh

Open sqoop-env.sh and edit the following lines:

export HADOOP_COMMON_HOME=/home/hadoop/apps/hadoop-2.9.1/

export HADOOP_MAPRED_HOME=/home/hadoop/apps/hadoop-2.9.1/

export HIVE_HOME=/home/hadoop/apps/apache-hive-1.2.2-bin
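A wrong path in sqoop-env.sh tends to surface only later as a confusing runtime error, so it can help to check the directories before going further. The following is a minimal sketch and not part of Sqoop: check_dirs is a hypothetical helper name, and the paths in the usage comment are simply the ones assumed in this post.

```shell
#!/bin/sh
# check_dirs DIR...: hypothetical helper, not shipped with Sqoop.
# Prints "missing: DIR" for every argument that is not an existing directory
# and returns the number of missing directories as its exit status.
check_dirs() {
  cd_missing=0
  for cd_dir in "$@"; do
    if [ ! -d "$cd_dir" ]; then
      echo "missing: $cd_dir"
      cd_missing=$((cd_missing + 1))
    fi
  done
  return "$cd_missing"
}

# Example usage with the paths from this post (adjust to your own layout):
# check_dirs /home/hadoop/apps/hadoop-2.9.1 /home/hadoop/apps/apache-hive-1.2.2-bin \
#   && echo "all configured directories exist"
```

An exit status of 0 means every directory was found, so the helper can gate later steps with `&&`.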

(3) Add the MySQL JDBC driver jar

cp ~/mysql-connector-java-5.1.28.jar /home/hadoop/sqoop/lib

(4) Verify the installation

cd /home/hadoop/sqoop/bin

./sqoop version

19/03/18 15:47:14 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec 21 15:59:58 STD 2017

At this point, the Sqoop installation is complete.

4. Basic Sqoop commands

Basic usage: first, run sqoop help to see which commands Sqoop supports.

./sqoop help

Warning: /home/hadoop/sqoop/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/sqoop/bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

19/03/18 16:12:52 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

usage: sqoop COMMAND [ARGS]

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

Once you know which commands are supported, you can run sqoop help COMMAND to see how a specific command is used, for example:

[hadoop@centos-aaron-h1 bin]$ ./sqoop help import

Warning: /home/hadoop/sqoop/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/sqoop/bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

19/03/18 16:16:28 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

usage: sqoop import [GENERIC-ARGS] [TOOL-ARGS]

Common arguments:

--connect Specify JDBC connect string

--connection-manager Specify connection manager class name

--connection-param-file Specify connection parameters file

--driver Manually specify JDBC driver class to use

--hadoop-home Override $HADOOP_MAPRED_HOME_ARG

--hadoop-mapred-home Override $HADOOP_MAPRED_HOME_ARG

--help Print usage instructions

--metadata-transaction-isolation-level Defines the transaction isolation level for metadata queries. For more details check java.sql.Connection javadoc or the JDBC specificaiton

--oracle-escaping-disabled Disable the escaping mechanism of the Oracle/OraOop connection managers

-P Read password from console

--password Set authentication password

--password-alias Credential provider password alias

--password-file Set authentication password file path

--relaxed-isolation Use read-uncommitted isolation for imports

--skip-dist-cache Skip copying jars to distributed cache

--temporary-rootdir Defines the temporary root directory for the import

--throw-on-error Rethrow a RuntimeException on error occurred during the job

--username Set authentication username

--verbose Print more information while working

Import control arguments:

--append Imports data in append mode

--as-avrodatafile Imports data to Avro data files

--as-parquetfile Imports data to Parquet files

--as-sequencefile Imports data to SequenceFiles

--as-textfile Imports data as plain text (default)

--autoreset-to-one-mapper Reset the number of mappers to one mapper if no split key available

--boundary-query Set boundary query for retrieving max and min value of the primary key

--columns Columns to import from table

--compression-codec Compression codec to use for import

--delete-target-dir Imports data in delete mode

--direct Use direct import fast path

--direct-split-size Split the input stream every 'n' bytes when importing in direct mode

-e,--query Import results of SQL 'statement'

--fetch-size Set number 'n' of rows to fetch from the database when more rows are needed

--inline-lob-limit Set the maximum size for an inline LOB

-m,--num-mappers Use 'n' map tasks to import in parallel

--mapreduce-job-name Set name for generated mapreduce job

--merge-key Key column to use to join results

--split-by Column of the table used to split work units

--split-limit Upper Limit of rows per split for split columns of Date/Time/Timestamp and integer types. For date or timestamp fields it is calculated in seconds. split-limit should be greater than 0

--table Table to read

--target-dir HDFS plain table destination

--validate Validate the copy using the configured validator

--validation-failurehandler Fully qualified class name for ValidationFailureHandler

--validation-threshold Fully qualified class name for ValidationThreshold

--validator Fully qualified class name for the Validator

--warehouse-dir HDFS parent for table destination

--where WHERE clause to use during import

-z,--compress Enable compression

Incremental import arguments:

--check-column Source column to check for incremental change

--incremental Define an incremental import of type 'append' or 'lastmodified'

--last-value Last imported value in the incremental check column

Output line formatting arguments:

--enclosed-by Sets a required field enclosing character

--escaped-by Sets the escape character

--fields-terminated-by Sets the field separator character

--lines-terminated-by Sets the end-of-line character

--mysql-delimiters Uses MySQL's default delimiter set: fields: , lines: \n escaped-by: \ optionally-enclosed-by: '

--optionally-enclosed-by Sets a field enclosing character

Input parsing arguments:

--input-enclosed-by Sets a required field encloser

--input-escaped-by Sets the input escape character

--input-fields-terminated-by Sets the input field separator

--input-lines-terminated-by Sets the input end-of-line char

--input-optionally-enclosed-by Sets a field enclosing character

Hive arguments:

--create-hive-table Fail if the target hive table exists

--external-table-dir Sets where the external table is in HDFS

--hive-database Sets the database name to use when importing to hive

--hive-delims-replacement Replace Hive record \0x01 and row delimiters (\n\r) from imported string fields with user-defined string

--hive-drop-import-delims Drop Hive record \0x01 and row delimiters (\n\r) from imported string fields

--hive-home Override $HIVE_HOME

--hive-import Import tables into Hive (Uses Hive's default delimiters if none are set.)

--hive-overwrite Overwrite existing data in the Hive table

--hive-partition-key Sets the partition key to use when importing to hive

--hive-partition-value Sets the partition value to use when importing to hive

--hive-table Sets the table name to use when importing to hive

--map-column-hive Override mapping for specific column to hive types.

HBase arguments:

--column-family Sets the target column family for the import

--hbase-bulkload Enables HBase bulk loading

--hbase-create-table If specified, create missing HBase tables

--hbase-row-key Import to in HBase

HCatalog arguments:

--hcatalog-database HCatalog database name

--hcatalog-home Override $HCAT_HOME

--hcatalog-partition-keys Sets the partition keys to use when importing to hive

--hcatalog-partition-values Sets the partition values to use when importing to hive

--hcatalog-table HCatalog table name

--hive-home Override $HIVE_HOME

--hive-partition-key Sets the partition key to use when importing to hive

--hive-partition-value Sets the partition value to use when importing to hive

--map-column-hive Override mapping for specific column to hive types.

HCatalog import specific options:

--create-hcatalog-table Create HCatalog before import

--drop-and-create-hcatalog-table Drop and Create HCatalog before import

--hcatalog-storage-stanza HCatalog storage stanza for table creation

Accumulo arguments:

--accumulo-batch-size Batch size in bytes

--accumulo-column-family Sets the target column family for the import

--accumulo-create-table If specified, create missing Accumulo tables

--accumulo-instance Accumulo instance name.

--accumulo-max-latency Max write latency in milliseconds

--accumulo-password Accumulo password.

--accumulo-row-key Import to in Accumulo

--accumulo-user Accumulo user name.

--accumulo-visibility Visibility token to be applied to all rows imported

--accumulo-zookeepers Comma-separated list of zookeepers (host:port)

Code generation arguments:

--bindir Output directory for compiled objects

--class-name Sets the generated class name. This overrides --package-name. When combined with --jar-file, sets the input class.

--escape-mapping-column-names Disable special characters escaping in column names

--input-null-non-string Input null non-string representation

--input-null-string Input null string representation

--jar-file Disable code generation; use specified jar

--map-column-java Override mapping for specific columns to java types

--null-non-string Null non-string representation

--null-string Null string representation

--outdir Output directory for generated code

--package-name Put auto-generated classes in this package

Generic Hadoop command-line arguments:

(must preceed any tool-specific arguments)

Generic options supported are:

-conf specify an application configuration file

-D define a value for a given property

-fs specify default filesystem URL to use, overrides 'fs.defaultFS' property from configurations.

-jt specify a ResourceManager

-files specify a comma-separated list of files to be copied to the map reduce cluster

-libjars specify a comma-separated list of jar files to be included in the classpath

-archives specify a comma-separated list of archives to be unarchived on the compute machines

The general command line syntax is:

command [genericOptions] [commandOptions]

At minimum, you must specify --connect and --table

Arguments to mysqldump and other subprograms may be supplied

after a '--' on the command line.

[hadoop@centos-aaron-h1 bin]$

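5. Importing data with Sqoop

The import tool imports a single table from an RDBMS into HDFS. Each row of the table is treated as one record in HDFS. Records are stored either as text data in text files or as binary data in formats such as Avro or SequenceFile.

(1) Syntax:

The following syntax imports data into HDFS.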

./sqoop import (generic-args) (import-args)

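(2) Example:

Table data

The MySQL database userdb contains three tables: emp, emp_add, and emp_contact (listed below as emp_conn).

Table emp:

| id | name | deg | salary | dept |
|---|---|---|---|---|
| 1 | gopal | manager | 50,000 | TP |
| 2 | manisha | Proof reader | 50,000 | TP |
| 3 | khalil | php dev | 30,000 | AC |
| 4 | prasanth | php dev | 30,000 | AC |
| 5 | kranthi | admin | 20,000 | TP |

Table emp_add:

| id | hno | street | city |
|---|---|---|---|
| 1201 | 288A | vgiri | jublee |
| 1202 | 108I | aoc | sec-bad |
| 1203 | 144Z | pgutta | hyd |
| 1204 | 78B | old city | sec-bad |
| 1205 | 720X | hitec | sec-bad |

Table emp_conn:

| id | phno | email |
|---|---|---|
| 1201 | 2356742 | |
| 1202 | 1661663 | |
| 1203 | 8887776 | |
| 1204 | 9988774 | |
| 1205 | 1231231 | |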

(3) Import table data into HDFS

The following command imports the emp table from the MySQL database server into HDFS.

./sqoop import \

--connect jdbc:mysql://centos-aaron-03:3306/test \

--username root \

--password 123456 \

--table emp \

--m 1

The first run failed with a "host not found" error. The cause turned out to be that the MySQL host name was not configured in /etc/hosts.

[hadoop@centos-aaron-h1 bin]$ ./sqoop import \

> --connect jdbc:mysql://centos-aaron-03:3306/test \

> --username root \

> --password 123456 \

> --table emp \

> --m 1

Warning: /home/hadoop/sqoop/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/sqoop/bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

19/03/18 16:34:17 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

19/03/18 16:34:17 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

19/03/18 16:34:17 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

19/03/18 16:34:17 INFO tool.CodeGenTool: Beginning code generation

19/03/18 16:34:18 ERROR manager.SqlManager: Error executing statement: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:411)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:1129)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:358)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2489)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2526)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2311)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:834)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:411)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:416)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:347)

at java.sql.DriverManager.getConnection(DriverManager.java:571)

at java.sql.DriverManager.getConnection(DriverManager.java:215)

at org.apache.sqoop.manager.SqlManager.makeConnection(SqlManager.java:904)

at org.apache.sqoop.manager.GenericJdbcManager.getConnection(GenericJdbcManager.java:59)

at org.apache.sqoop.manager.SqlManager.execute(SqlManager.java:763)

at org.apache.sqoop.manager.SqlManager.execute(SqlManager.java:786)

at org.apache.sqoop.manager.SqlManager.getColumnInfoForRawQuery(SqlManager.java:289)

at org.apache.sqoop.manager.SqlManager.getColumnTypesForRawQuery(SqlManager.java:260)

at org.apache.sqoop.manager.SqlManager.getColumnTypes(SqlManager.java:246)

at org.apache.sqoop.manager.ConnManager.getColumnTypes(ConnManager.java:327)

at org.apache.sqoop.orm.ClassWriter.getColumnTypes(ClassWriter.java:1872)

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1671)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:106)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:501)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Caused by: java.net.UnknownHostException: centos-aaron-03

at java.net.Inet4AddressImpl.lookupAllHostAddr(Native Method)

at java.net.InetAddress$1.lookupAllHostAddr(InetAddress.java:901)

at java.net.InetAddress.getAddressesFromNameService(InetAddress.java:1293)

at java.net.InetAddress.getAllByName0(InetAddress.java:1246)

at java.net.InetAddress.getAllByName(InetAddress.java:1162)

at java.net.InetAddress.getAllByName(InetAddress.java:1098)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:248)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:308)

... 33 more

19/03/18 16:34:18 ERROR tool.ImportTool: Import failed: java.io.IOException: No columns to generate for ClassWriter

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1677)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:106)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:501)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Configure the host name

vi /etc/hosts

# Append the following line at the end of the file, then save

192.168.29.131 centos-aaron-03

Esc

shift+z+z

# Distribute the file to the other hosts in the Hadoop cluster

sudo scp /etc/hosts root@centos-aaron-h2:/etc/hosts

sudo scp /etc/hosts root@centos-aaron-h3:/etc/hosts

sudo scp /etc/hosts root@centos-aaron-h4:/etc/hosts
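Before rerunning the import, it can be worth confirming that the entry really landed in the hosts file on each node. The sketch below is one way to do that with awk. hosts_has_entry is a hypothetical helper name, and it only inspects a hosts-format file; it does not test DNS or whether the MySQL port is reachable.

```shell
#!/bin/sh
# hosts_has_entry FILE NAME: hypothetical helper.
# Succeeds if the hosts-format FILE maps some address to host name NAME.
# Comment lines and trailing "# ..." comments are ignored.
hosts_has_entry() {
  awk -v name="$2" '
    /^[[:space:]]*#/ { next }          # skip whole-line comments
    {
      for (i = 2; i <= NF; i++) {      # field 1 is the address, the rest are names
        if ($i ~ /^#/) break           # stop at a trailing comment
        if ($i == name) found = 1
      }
    }
    END { exit (found ? 0 : 1) }
  ' "$1"
}

# Example usage with the host name from this post:
# hosts_has_entry /etc/hosts centos-aaron-03 || echo "centos-aaron-03 is not in /etc/hosts"
```

Since the map tasks run on the worker nodes, the same check would need to pass on every host in the cluster, not only on the machine where sqoop is launched.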

Run the import command again

[hadoop@centos-aaron-h1 bin]$ ./sqoop import --connect jdbc:mysql://centos-aaron-03:3306/test --username root --password 123456 --table emp --m 1

Warning: /home/hadoop/sqoop/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/sqoop/bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

19/03/18 16:48:05 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

19/03/18 16:48:05 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

19/03/18 16:48:05 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

19/03/18 16:48:05 INFO tool.CodeGenTool: Beginning code generation

19/03/18 16:48:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp` AS t LIMIT 1

19/03/18 16:48:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp` AS t LIMIT 1

19/03/18 16:48:06 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/apps/hadoop-2.9.1

Note: /tmp/sqoop-hadoop/compile/d57b49bfec6ba315e6a268ebf3aac36f/emp.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

19/03/18 16:48:07 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/d57b49bfec6ba315e6a268ebf3aac36f/emp.jar

19/03/18 16:48:07 WARN manager.MySQLManager: It looks like you are importing from mysql.

19/03/18 16:48:07 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

19/03/18 16:48:07 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

19/03/18 16:48:07 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

19/03/18 16:48:07 INFO mapreduce.ImportJobBase: Beginning import of emp

19/03/18 16:48:08 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

19/03/18 16:48:08 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

19/03/18 16:48:09 INFO client.RMProxy: Connecting to ResourceManager at centos-aaron-h1/192.168.29.144:8032

19/03/18 16:48:10 INFO mapreduce.JobSubmitter: number of splits:1

19/03/18 16:48:11 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

19/03/18 16:48:11 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1552898029697_0002

19/03/18 16:48:11 INFO impl.YarnClientImpl: Submitted application application_1552898029697_0002

19/03/18 16:48:11 INFO mapreduce.Job: The url to track the job: http://centos-aaron-h1:8088/proxy/application_1552898029697_0002/

19/03/18 16:48:11 INFO mapreduce.Job: Running job: job_1552898029697_0002

19/03/18 16:48:22 INFO mapreduce.Job: Job job_1552898029697_0002 running in uber mode : false

19/03/18 16:48:22 INFO mapreduce.Job: map 0% reduce 0%

19/03/18 16:48:28 INFO mapreduce.Job: map 100% reduce 0%

19/03/18 16:48:28 INFO mapreduce.Job: Job job_1552898029697_0002 completed successfully

19/03/18 16:48:28 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=206933

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=151

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=3595

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=3595

Total vcore-milliseconds taken by all map tasks=3595

Total megabyte-milliseconds taken by all map tasks=3681280

Map-Reduce Framework

Map input records=5

Map output records=5

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=60

CPU time spent (ms)=540

Physical memory (bytes) snapshot=131735552

Virtual memory (bytes) snapshot=1715556352

Total committed heap usage (bytes)=42860544

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=151

19/03/18 16:48:28 INFO mapreduce.ImportJobBase: Transferred 151 bytes in 20.0215 seconds (7.5419 bytes/sec)

19/03/18 16:48:28 INFO mapreduce.ImportJobBase: Retrieved 5 records.

[hadoop@centos-aaron-h1 bin]$
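The log above warns that passing --password on the command line is insecure. Besides -P, the help output earlier lists a --password-file option. Sqoop reads the whole file as the password, including any trailing newline, so the file has to be written without one. Below is a minimal sketch of preparing such a file. write_password_file is a hypothetical helper name, the path is my own choice, and the file:// prefix for pointing Sqoop at the local file system rather than HDFS is an assumption to verify on your cluster.

```shell
#!/bin/sh
# write_password_file PATH PASSWORD: hypothetical helper, not part of Sqoop.
# Writes PASSWORD to PATH with no trailing newline and owner-only read access.
write_password_file() {
  pw_path=$1
  pw_value=$2
  rm -f "$pw_path"                                    # allow re-creating a read-only file
  ( umask 077 && printf '%s' "$pw_value" > "$pw_path" )
  chmod 400 "$pw_path"
}

# Example usage (path and file:// prefix are assumptions, see above):
# write_password_file "$HOME/.sqoop-mysql.pwd" '123456'
# ./sqoop import \
#   --connect jdbc:mysql://centos-aaron-03:3306/test \
#   --username root \
#   --password-file "file://$HOME/.sqoop-mysql.pwd" \
#   --table emp \
#   -m 1
```

The password still passes through the shell once when the file is created, so creating it in an interactive session, or with an editor that does not add a final newline, may be preferable.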

Check the result

[hadoop@centos-aaron-h1 bin]$ hdfs dfs -ls /user/hadoop/emp

Found 2 items

-rw-r--r-- 2 hadoop supergroup 0 2019-03-18 16:48 /user/hadoop/emp/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 151 2019-03-18 16:48 /user/hadoop/emp/part-m-00000

[hadoop@centos-aaron-h1 bin]$ hdfs dfs -cat /user/hadoop/emp/part-m-00000

1,gopal,manager,50000.00,TP

2,manisha,Proof reader,50000.00,TP

3,khalil,php dev,30000.00,AC

4,prasanth,php dev,30000.00,AC

5,kranthi,admin,20000.00,TP

[hadoop@centos-aaron-h1 bin]$
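For larger tables, eyeballing the output of hdfs dfs -cat does not scale, so a small check on the record count and field count can help. The following is a rough sketch: verify_rows is a hypothetical helper name, and it assumes plain comma-separated text output with no commas inside field values.

```shell
#!/bin/sh
# verify_rows N: hypothetical helper.
# Reads comma-separated records on standard input, prints the record count,
# and exits non-zero if any record does not have exactly N fields.
verify_rows() {
  awk -F',' -v want="$1" '
    NF != want { bad++ }               # count records with an unexpected field count
    END { print NR; exit (bad > 0) }
  '
}

# Example usage against the import above (the emp table has 5 columns):
# hdfs dfs -cat /user/hadoop/emp/part-m-* | verify_rows 5
```

The printed count can then be compared with the "Retrieved 5 records." line in the import log.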

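A final note: that is everything for this post. If you found it useful, please give it a like. If you are interested in my other server and big data posts, or in me, please follow the blog, and feel free to get in touch at any time.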

Reposted from: https://my.oschina.net/u/2371923/blog/3024086
