HBase Common Problems and Solutions (Part 2)


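The previous part covered some fairly basic problems I ran into at work. This part covers problems that are less simple than they look.

1. Class not found (one of my own classes could not be found!)

When it happens: when HBase is used together with Hadoop HDFS and MapReduce: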

```
18/04/16 18:25:06 INFO mapreduce.JobSubmitter: Cleaning up the staging area /user/mingtong/.staging/job_1522546194099_223330
Exception in thread "main" java.lang.RuntimeException: java.lang.ClassNotFoundException: Class mastercom.cn.bigdata.util.hadoop.mapred.CombineSmallFileInputFormat not found
    at org.apache.hadoop.mapreduce.lib.input.MultipleInputs.getInputFormatMap(MultipleInputs.java:112)
    at org.apache.hadoop.mapreduce.lib.input.DelegatingInputFormat.getSplits(DelegatingInputFormat.java:58)
    at org.apache.hadoop.mapreduce.JobSubmitter.writeNewSplits(JobSubmitter.java:301)
    at org.apache.hadoop.mapreduce.JobSubmitter.writeSplits(JobSubmitter.java:318)
    at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:196)
    at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1290)
    at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1287)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)
    at org.apache.hadoop.mapreduce.Job.submit(Job.java:1287)
    at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1308)
    at mastercom.cn.hbase.helper.AddPaths.addUnCombineConfigJob(AddPaths.java:261)
    at mastercom.cn.hbase.config.HbaseBulkloadConfigMain.CreateJob(HbaseBulkloadConfigMain.java:98)
    at mastercom.cn.hbase.config.HbaseBulkloadConfigMain.main(HbaseBulkloadConfigMain.java:109)
```

After a lot of testing, I finally traced the problem to this one line of code:

```java
Configuration conf = HBaseConfiguration.create();
```

Whenever the Configuration comes from `HBaseConfiguration.create()` and the MapReduce job later uses that conf, this error appears!

Solution: simply create the conf with `Configuration conf = new Configuration();` instead.

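However, a conf created this way does not load hbase-site.xml, so the important parameters from hbase-site.xml must be set by hand. Otherwise the client cannot connect to HBase correctly!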
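Since a plain `new Configuration()` skips hbase-site.xml, one way to avoid copying values by hand is to read the file's `<property>` entries and pass each pair to `conf.set(name, value)`. The sketch below is a hypothetical helper (`HBaseSiteReader` is not part of Hadoop or HBase) that uses only the JDK's DOM parser; which keys you actually copy is your choice.

```java
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical helper: reads the <property><name>/<value> pairs of an hbase-site.xml. */
final class HBaseSiteReader {
    private HBaseSiteReader() {}

    /** Returns every name/value pair in document order; later duplicates overwrite earlier ones. */
    static Map<String, String> readProperties(InputStream xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(xml);
            NodeList props = doc.getElementsByTagName("property");
            Map<String, String> result = new LinkedHashMap<>();
            for (int i = 0; i < props.getLength(); i++) {
                Element prop = (Element) props.item(i);
                String name = childText(prop, "name");
                String value = childText(prop, "value");
                // Skip malformed entries that lack a name or a value
                if (name != null && value != null) {
                    result.put(name, value);
                }
            }
            return result;
        } catch (Exception e) {
            throw new IllegalArgumentException("cannot parse hbase-site.xml", e);
        }
    }

    /** Convenience overload for XML held in a String. */
    static Map<String, String> readProperties(String xml) {
        return readProperties(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
    }

    /** Trimmed text of the first child element with the given tag, or null if absent. */
    private static String childText(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }
}
```

Usage, assuming `conf` is the `org.apache.hadoop.conf.Configuration` created with `new Configuration()` and `in` is an `InputStream` over the cluster's hbase-site.xml: `HBaseSiteReader.readProperties(in).forEach(conf::set);`, or copy only the keys you need.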

The problem above can also trigger the following error:

```
org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.LeaseExpiredException): No lease on /wangyou/mingtong/mt_wlyh/Data/hbase_bulkload/output/4503/inin (inode 1964063475): File does not exist. Holder DFSClient_NONMAPREDUCE_-769553346_1 does not have any open files.
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:3521)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.completeFileInternal(FSNamesystem.java:3611)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.completeFile(FSNamesystem.java:3578)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.complete(NameNodeRpcServer.java:905)
```

After applying the fix described above, this error went away as well!

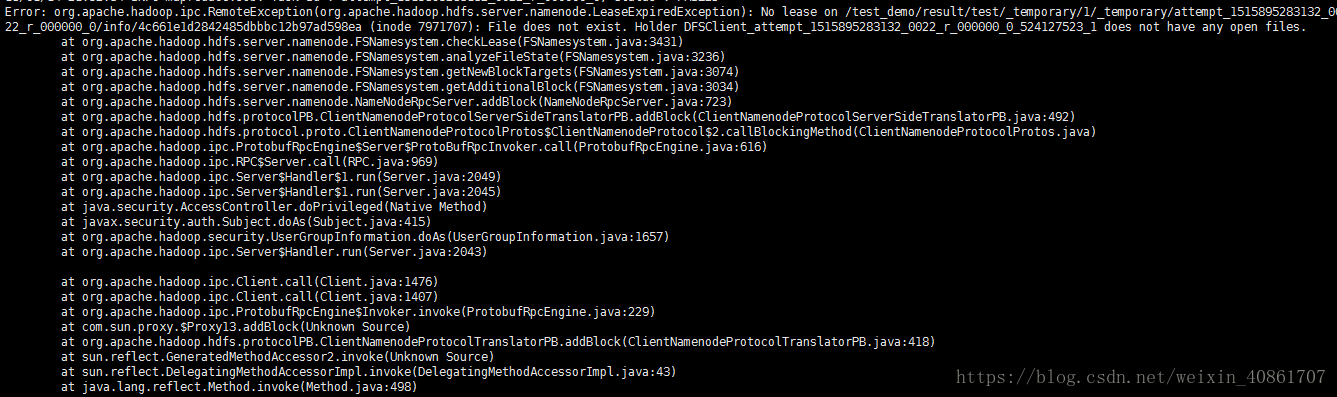

2. Problem when running MapReduce: lease expired exception

```
org.apache.hadoop.hdfs.server.namenode.LeaseExpiredException
```

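This error means the file was deleted while a data stream was still operating on it. I had run into it before: it usually happens when several MapReduce tasks operate on the same file and one task deletes the file when it finishes. Changing the code that allows this to happen fixes it.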
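A common way to make sure one task never deletes a file another task is still writing is to give every task attempt its own scratch path. A minimal sketch, assuming the task attempt ID string is available (inside a MapReduce task it can be obtained with `context.getTaskAttemptID().toString()`); `TaskScratchPaths` is a hypothetical helper name:

```java
/**
 * Hypothetical helper: builds a scratch-file path that is unique per task attempt,
 * so a task that cleans up its own files cannot delete a file another task is writing.
 */
final class TaskScratchPaths {
    private TaskScratchPaths() {}

    static String scratchFile(String baseDir, String taskAttemptId, String fileName) {
        if (baseDir == null || taskAttemptId == null || fileName == null) {
            throw new IllegalArgumentException("baseDir, taskAttemptId and fileName are required");
        }
        // Drop a trailing slash so the result never contains "//"
        String dir = baseDir.endsWith("/") ? baseDir.substring(0, baseDir.length() - 1) : baseDir;
        // The attempt ID becomes its own directory level: one directory per attempt
        return dir + "/" + taskAttemptId + "/" + fileName;
    }
}
```

Each task then creates, writes and deletes only files under its own attempt directory.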

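Another situation where I hit this: earlier errors in the MapReduce job kept making it fail, and this error eventually appeared as a consequence. In that case, ignore this error and fix the earlier problem; this one then goes away by itself.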

3. When connecting to HBase, `hbase.zookeeper.quorum` and `hbase.zookeeper.property.clientPort` are both set correctly, yet the client keeps reporting `INFO client.ZooKeeperRegistry: ClusterId read in ZooKeeper is null`

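First, this happens when the Configuration is created with `new Configuration()`.

One key setting is involved here:

`zookeeper.znode.parent`: its default value is `/hbase`.

If the cluster is configured with a different value, as ours is, this error appears.

A Configuration created with `new Configuration()` does not read HBase's hbase-site.xml, so add this setting in code, using the value from hbase-site.xml: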

```java
conf.set("zookeeper.znode.parent", "/hbase-unsecure");
```

With that, the problem was solved!
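The underlying trap is that a key missing from a plain `new Configuration()` silently falls back to its default, so the client looks for HBase's znodes under `/hbase` while the cluster keeps them under a different parent. A hypothetical pre-flight check (`ClientConfigCheck`, using plain `Map`s in place of Hadoop's `Configuration`) that compares the client's effective values with the cluster's hbase-site.xml values could look like this; the defaults map is supplied by the caller:

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Objects;

/** Hypothetical check: which cluster settings would the client effectively get wrong? */
final class ClientConfigCheck {
    private ClientConfigCheck() {}

    /** Returns key -> "client=..., cluster=..." for every setting whose effective value differs. */
    static Map<String, String> mismatches(Map<String, String> client,
                                          Map<String, String> cluster,
                                          Map<String, String> defaults) {
        Map<String, String> out = new LinkedHashMap<>();
        for (Map.Entry<String, String> e : cluster.entrySet()) {
            String key = e.getKey();
            // A key absent from the client conf falls back to its default value
            String effective = client.containsKey(key) ? client.get(key) : defaults.get(key);
            if (!Objects.equals(e.getValue(), effective)) {
                out.put(key, "client=" + effective + ", cluster=" + e.getValue());
            }
        }
        return out;
    }
}
```

Feeding it the cluster's hbase-site.xml values and an unset client conf reports `zookeeper.znode.parent` as `client=/hbase, cluster=/hbase-unsecure` in the situation described above.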

4. Another problem when loading data with bulkload!

The error is as follows:

```
Exception in thread "main" java.lang.IllegalArgumentException: Can not create a Path from a null string
    at org.apache.hadoop.fs.Path.checkPathArg(Path.java:122)
    at org.apache.hadoop.fs.Path.<init>(Path.java:134)
    at org.apache.hadoop.fs.Path.<init>(Path.java:88)
    at org.apache.hadoop.hbase.mapreduce.HFileOutputFormat2.configurePartitioner(HFileOutputFormat2.java:596)
    at org.apache.hadoop.hbase.mapreduce.HFileOutputFormat2.configureIncrementalLoad(HFileOutputFormat2.java:445)
    at org.apache.hadoop.hbase.mapreduce.HFileOutputFormat2.configureIncrementalLoad(HFileOutputFormat2.java:410)
    at org.apache.hadoop.hbase.mapreduce.HFileOutputFormat2.configureIncrementalLoad(HFileOutputFormat2.java:372)
    at mastercom.cn.hbase.helper.AddPaths.addUnCombineConfigJob(AddPaths.java:272)
    at mastercom.cn.hbase.config.HbaseBulkloadConfigMain.CreateJob(HbaseBulkloadConfigMain.java:129)
    at mastercom.cn.hbase.config.HbaseBulkloadConfigMain.main(HbaseBulkloadConfigMain.java:141)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:233)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:148)
```

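The stack trace shows that the error comes from the HFileOutputFormat2 class, which is the key class for loading data with bulkload.

Let's locate the error step by step.

The exception is thrown by this method of the Path class: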

```java
private void checkPathArg( String path ) throws IllegalArgumentException {
    // disallow construction of a Path from an empty string
    if ( path == null ) {
        throw new IllegalArgumentException(
            "Can not create a Path from a null string");
    }
    if( path.length() == 0 ) {
        throw new IllegalArgumentException(
            "Can not create a Path from an empty string");
    }
}
```

Combined with the error message on the console, this tells us that `path` is null.

So where does the null come from? Let's keep reading the source:

```java
static void configurePartitioner(Job job, List<ImmutableBytesWritable> splitPoints)
        throws IOException {
    Configuration conf = job.getConfiguration();
    // create the partitions file
    FileSystem fs = FileSystem.get(conf);
    Path partitionsPath = new Path(conf.get("hbase.fs.tmp.dir"), "partitions_" + UUID.randomUUID());
    fs.makeQualified(partitionsPath);
    writePartitions(conf, partitionsPath, splitPoints);
    fs.deleteOnExit(partitionsPath);
    // configure job to use it
    job.setPartitionerClass(TotalOrderPartitioner.class);
    TotalOrderPartitioner.setPartitionFile(conf, partitionsPath);
}
```

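In the source above, the only line that both involves Path and can produce a null is clearly this one:

```java
Path partitionsPath = new Path(conf.get("hbase.fs.tmp.dir"), "partitions_" + UUID.randomUUID());
```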

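To test this, print the value of `hbase.fs.tmp.dir` right after obtaining the conf object. Sure enough, it is null!

The cause is confirmed, so just add this line to the code:

```java
conf.set("hbase.fs.tmp.dir", "/wangyou/mingtong/mt_wlyh/tmp/hbase-staging");
```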

With that, the problem was solved and the load ran normally!
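To get a clearer error than `Can not create a Path from a null string`, the job driver can check `hbase.fs.tmp.dir` before calling `configureIncrementalLoad`. The sketch below mirrors how `configurePartitioner` builds the partitions path; `BulkloadPreflight` is a hypothetical name, and a `Map` stands in for Hadoop's `Configuration` (in real code the lookup is `conf.get("hbase.fs.tmp.dir")`):

```java
import java.util.Map;
import java.util.UUID;

/**
 * Hypothetical pre-flight check mirroring
 * new Path(conf.get("hbase.fs.tmp.dir"), "partitions_" + UUID.randomUUID())
 * from HFileOutputFormat2.configurePartitioner.
 */
final class BulkloadPreflight {
    static final String TMP_DIR_KEY = "hbase.fs.tmp.dir";

    private BulkloadPreflight() {}

    /** Returns the partitions path, or fails fast with a clear message if the key is unset. */
    static String partitionsPath(Map<String, String> conf) {
        String tmpDir = conf.get(TMP_DIR_KEY);
        if (tmpDir == null || tmpDir.isEmpty()) {
            // Without this check the failure surfaces later inside Path.checkPathArg
            // as "Can not create a Path from a null string".
            throw new IllegalStateException(TMP_DIR_KEY
                + " is not set; copy it from hbase-site.xml with conf.set(...) before configureIncrementalLoad");
        }
        // Join parent and child with exactly one slash
        String parent = tmpDir.endsWith("/") ? tmpDir.substring(0, tmpDir.length() - 1) : tmpDir;
        return parent + "/partitions_" + UUID.randomUUID();
    }
}
```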

5. Load failing because of corrupt .gz files

```
ERROR hdfs.DFSClient: Failed to close inode 16886732
org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.LeaseExpiredException): No lease on /hbase_bulkload/output/inin (inode 16886732): File does not exist. Holder DFSClient_NONMAPREDUCE_1351255084_1 does not have any open files.
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:3431)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.completeFileInternal(FSNamesystem.java:3521)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.completeFile(FSNamesystem.java:3488)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.complete(NameNodeRpcServer.java:785)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.complete(ClientNamenodeProtocolServerSideTranslatorPB.java:536)
    at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:969)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2049)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2045)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:415)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2043)
    at org.apache.hadoop.ipc.Client.call(Client.java:1476)
    at org.apache.hadoop.ipc.Client.call(Client.java:1407)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)
    at com.sun.proxy.$Proxy9.complete(Unknown Source)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.complete(ClientNamenodeProtocolTranslatorPB.java:462)
    at sun.reflect.GeneratedMethodAccessor6.invoke(Unknown Source)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)
    at com.sun.proxy.$Proxy10.complete(Unknown Source)
    at org.apache.hadoop.hdfs.DFSOutputStream.completeFile(DFSOutputStream.java:2257)
    at org.apache.hadoop.hdfs.DFSOutputStream.closeImpl(DFSOutputStream.java:2238)
    at org.apache.hadoop.hdfs.DFSOutputStream.close(DFSOutputStream.java:2204)
    at org.apache.hadoop.hdfs.DFSClient.closeAllFilesBeingWritten(DFSClient.java:951)
    at org.apache.hadoop.hdfs.DFSClient.closeOutputStreams(DFSClient.java:983)
    at org.apache.hadoop.hdfs.DistributedFileSystem.close(DistributedFileSystem.java:1076)
    at org.apache.hadoop.fs.FileSystem$Cache.closeAll(FileSystem.java:2744)
    at org.apache.hadoop.fs.FileSystem$Cache$ClientFinalizer.run(FileSystem.java:2761)
    at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:54)
```

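This happened while loading a large number of small .gz files: a few of the compressed files were corrupt. The fix is to find the broken .gz files and delete them.

The methods I used:

1. Check the logs of the corresponding job in the web UI. They may contain the id of the broken .gz file; find that file and delete it.
2. Sort the files in the input directory by size. Files of 0 KB are usually the broken ones. Download them with `get` and check whether they can be decompressed; delete any that cannot.
3. Use the command `hdfs fsck path -openforwrite` to check whether the files under a directory are healthy.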
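Step 2 above (download the files and try to decompress them) can be automated. A minimal sketch using only `java.util.zip`: a file counts as good only if `GZIPInputStream` can read it through to the end, and `findBadGzipFiles` scans a local directory (assumed to be a copy fetched with `hdfs dfs -get`), smallest files first. `GzipCheck` and its methods are hypothetical names:

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

/** Hypothetical checker for corrupt .gz files in a local directory. */
final class GzipCheck {
    private GzipCheck() {}

    /** True if the bytes form a complete, readable gzip stream. */
    static boolean isReadableGzip(byte[] data) {
        try (InputStream in = new GZIPInputStream(new ByteArrayInputStream(data))) {
            byte[] buf = new byte[8192];
            while (in.read(buf) != -1) {
                // Discard output; we only care whether decompression reaches the end
            }
            return true;
        } catch (IOException e) { // EOFException (empty/truncated) and ZipException (bad data/CRC)
            return false;
        }
    }

    /** Lists unreadable *.gz files in dir, smallest first, so 0-byte files come first. */
    static List<Path> findBadGzipFiles(Path dir) {
        try (Stream<Path> files = Files.list(dir)) {
            List<Path> gz = files
                .filter(p -> p.getFileName().toString().endsWith(".gz"))
                .sorted(Comparator.comparingLong(GzipCheck::sizeOf))
                .collect(Collectors.toList());
            List<Path> bad = new ArrayList<>();
            for (Path p : gz) {
                if (!isReadableGzip(Files.readAllBytes(p))) {
                    bad.add(p);
                }
            }
            return bad;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    private static long sizeOf(Path p) {
        try {
            return Files.size(p);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Helper for trying the checker out: gzip-compresses bytes in memory. */
    static byte[] gzip(byte[] raw) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            gz.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }
}
```

Reading each file fully into memory keeps the sketch short; this is fine for many small files like the ones described here, but very large files would call for streaming directly from `Files.newInputStream`.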

6. Error when querying HBase:


```
org.apache.hadoop.hbase.DoNotRetryIOException: java.lang.NoClassDefFoundError: Could not initialize class org.apache.hadoop.hbase.util.ByteStringer
    at org.apache.hadoop.hbase.client.RpcRetryingCaller.translateException(RpcRetryingCaller.java:229)
    at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithRetries(RpcRetryingCaller.java:140)
    at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas$RetryingRPC.call(ScannerCallableWithReplicas.java:310)
    at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas$RetryingRPC.call(ScannerCallableWithReplicas.java:291)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.NoClassDefFoundError: Could not initialize class org.apache.hadoop.hbase.util.ByteStringer
    at org.apache.hadoop.hbase.protobuf.RequestConverter.buildRegionSpecifier(RequestConverter.java:989)
    at org.apache.hadoop.hbase.protobuf.RequestConverter.buildScanRequest(RequestConverter.java:485)
    at org.apache.hadoop.hbase.client.ClientSmallScanner$SmallScannerCallable.call(ClientSmallScanner.java:195)
    at org.apache.hadoop.hbase.client.ClientSmallScanner$SmallScannerCallable.call(ClientSmallScanner.java:181)
    at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithRetries(RpcRetryingCaller.java:126)
    ... 6 more
java.lang.NullPointerException
    at mastercom.cn.bigdata.util.hbase.HbaseDBHelper.qureyAsList(HbaseDBHelper.java:86)
    at conf.config.CellBuildInfo.loadCellBuildHbase(CellBuildInfo.java:150)
    at mro.loc.MroXdrDeal.init(MroXdrDeal.java:200)
    at mapr.mro.loc.MroLableFileReducers$MroDataFileReducers.reduce(MroLableFileReducers.java:80)
    at mapr.mro.loc.MroLableFileReducers$MroDataFileReducers.reduce(MroLableFileReducers.java:1)
    at org.apache.hadoop.mapreduce.Reducer.run(Reducer.java:171)
    at org.apache.hadoop.mapred.ReduceTask.runNewReducer(ReduceTask.java:627)
    at org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:389)
    at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)
    at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)
```

Here is the code where the problem occurred:

```java
/**
 * Query by row key; the result is returned as a List.
 *
 * @param tableName
 * @param rowKey
 * @param conn
 * @return
 */
public List<String> qureyAsList(String tableName, String rowKey, Connection conn) {
    getList = new ArrayList<Get>();
    valueList = new ArrayList<String>();
    try {
        table = conn.getTable(TableName.valueOf(tableName));
    } catch (IOException e) {
        LOGHelper.GetLogger().writeLog(LogType.error,"get table error" + e.getMessage());
    }
    // Put the row key into a Get, then put the Get into the list
    Get get = new Get(Bytes.toBytes(rowKey));
    getList.add(get);
    try {
        results = table.get(getList);
    } catch (IOException e) {
        LOGHelper.GetLogger().writeLog(LogType.error,"can't get results" + e.getMessage());
    }
    // If table.get(...) threw above, results was never assigned and may still be null here
    for (Result result : results) {
        for (Cell kv : result.rawCells()) {
            String value = Bytes.toString(CellUtil.cloneValue(kv));
            valueList.add(value);
        }
    }
    return valueList;
}
```

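This NullPointerException was pretty nasty.

I was connected to HBase normally, and the table name was correct...

It turned out the code was not robust enough: in some cases the query by row key can leave the result set null, but my code had no handling for that possible null and went straight into a for loop over it: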

```java
for (Result result : results)
```

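Iterating over a null result set throws a NullPointerException, of course!

Solution: add a null check before the loop, and the problem is solved!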
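In `qureyAsList`, `results` is only assigned inside the `try` block, so when `table.get` throws (for example with the `DoNotRetryIOException` in the log) and the exception is only logged, `results` can still be null when the loop runs. The sketch below shows the guard in isolation; to keep it self-contained, a `String[][]` stands in for HBase's `Result[]` (one inner array per row), and `SafeResults` is a hypothetical name. In the original method the equivalent is `if (results == null) return valueList;` right before `for (Result result : results)`.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch of the null guard; String[][] stands in for Result[] (one inner array per row). */
final class SafeResults {
    private SafeResults() {}

    static List<String> collectValues(String[][] results) {
        List<String> values = new ArrayList<>();
        // results stays null when the query threw and the exception was only logged
        if (results == null) {
            return values;
        }
        for (String[] row : results) {
            // Skip missing rows instead of failing on them
            if (row == null) {
                continue;
            }
            for (String value : row) {
                values.add(value);
            }
        }
        return values;
    }
}
```

Returning an empty list keeps callers such as `loadCellBuildHbase` from crashing, but the failed query is then only visible in the log, so the logged error still needs to be investigated.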

7. HMaster dies right after starting

The log shows the following error:

```
FATAL [kiwi02:60000.activeMasterManager] master.HMaster: Unhandled exception. Starting shutdown.
org.apache.hadoop.hbase.util.FileSystemVersionException: HBase file layout needs to be upgraded.
You have version null and I want version 8.
Consult http://hbase.apache.org/book.html for further information about upgrading HBase.
Is your hbase.rootdir valid? If so, you may need to run 'hbase hbck -fixVersionFile'.
```

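Solution:

I tried many approaches; what finally worked was deleting HBase's directory in HDFS (which also removes all data stored in HBase) and then restarting the HBase master.

So which directory is HBase's directory?

It is set by `hbase.rootdir` in `$HBASE_HOME/conf/hbase-site.xml` and is usually `/hbase`: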

```xml
<property>
    <name>hbase.rootdir</name>
    <value>/hbase</value>
</property>
```