KUBERNETES-1-19: Resource Requests, Limits, and Heapster

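1. Run mkdir metrics to create a test directory and copy the earlier pod-demo.yaml into it. Edit the file with vim pod-demo.yaml and check it with cat pod-demo.yaml (note the resources: field). kubectl apply -f pod-demo.yaml creates the Pod. kubectl get pods | grep pod-demo shows that the Pod is stuck in Pending, because the taints set on the nodes earlier were never removed. kubectl taint node node1.example.com node-type- and kubectl taint node node2.example.com node-type- remove the taint from each node. Run kubectl apply -f pod-demo.yaml again, then kubectl exec pod-demo -- top to view resource usage from inside the Pod.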

[root@master manifests]# mkdir metrics

[root@master manifests]# cd metrics/

[root@master metrics]# cp ../schedule/pod-demo.yaml .

[root@master metrics]# vim pod-demo.yaml

[root@master metrics]# cat pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  labels:
    app: myapp
    tier: frontend
spec:
  containers:
  - name: myapp
    image: ikubernetes/stress-ng
    command: ["/usr/bin/stress-ng", "-m 1", "-c 1", "--metrics-brief"]
    resources:
      requests:
        cpu: "200m"
        memory: "128Mi"
      limits:
        cpu: "500m"
        memory: "512Mi"

[root@master metrics]# kubectl apply -f pod-demo.yaml

pod/pod-demo created

[root@master metrics]# kubectl get pods | grep pod-demo

pod-demo 0/1 Pending 0 14s

[root@master metrics]# kubectl taint node node1.example.com node-type-

node/node1.example.com untainted

[root@master metrics]# kubectl taint node node2.example.com node-type-

node/node2.example.com untainted

[root@master metrics]# kubectl apply -f pod-demo.yaml

pod/pod-demo configured

[root@master metrics]# kubectl get pods -o wide | grep pod-demo

pod-demo 1/1 Running 0 1m 10.244.2.21 node2.example.com

[root@master metrics]# kubectl exec pod-demo -- top

Mem: 1484576K used, 382472K free, 11412K shrd, 2104K buff, 723640K cached

CPU: 26% usr 0% sys 0% nic 73% idle 0% io 0% irq 0% sirq

Load average: 0.06 0.04 0.09 4/396 13

PID PPID USER STAT VSZ %VSZ CPU %CPU COMMAND

8 7 root R 262m 14% 1 11% {stress-ng-vm} /usr/bin/stress-ng

6 1 root R 6892 0% 0 11% {stress-ng-cpu} /usr/bin/stress-ng

1 0 root S 6244 0% 1 0% /usr/bin/stress-ng -m 1 -c 1 --met

7 1 root S 6244 0% 1 0% {stress-ng-vm} /usr/bin/stress-ng
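The quantities under resources: use Kubernetes suffixes: "200m" is 200 millicores (0.2 of a CPU core) and "128Mi" is 128 mebibytes. The following is a minimal Python sketch of that conversion, included only to make the units concrete. The function names are hypothetical, and it handles just a few suffixes rather than the full Kubernetes quantity grammar.

```python
# Sketch: convert the quantity strings used under resources: into plain numbers.
# Assumption: only "m" for CPU and the Ki/Mi/Gi binary suffixes for memory are
# handled; the real Kubernetes quantity format accepts more forms than this.

BINARY_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def cpu_to_millicores(quantity: str) -> int:
    """Return CPU in millicores, e.g. "200m" gives 200 and "1" gives 1000."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


def memory_to_bytes(quantity: str) -> int:
    """Return memory in bytes, e.g. "128Mi" gives 128 * 1024 * 1024."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # a bare number is already a byte count


if __name__ == "__main__":
    # Values taken from pod-demo.yaml above.
    for name, value in [("requests.cpu", "200m"), ("limits.cpu", "500m")]:
        print(name, cpu_to_millicores(value), "millicores")
    for name, value in [("requests.memory", "128Mi"), ("limits.memory", "512Mi")]:
        print(name, memory_to_bytes(value), "bytes")
```

With these values the container is guaranteed 0.2 of a core when it is scheduled and is throttled above 0.5 of a core.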

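2. kubectl describe pods pod-demo | grep -i qos shows the Pod's QoS class (QoS Class: Burstable, the middle priority level). Delete the Pod, edit the manifest with vim pod-demo.yaml, and check it with cat pod-demo.yaml: under resources:, the requests and limits for both cpu and memory are now set to equal values. kubectl apply -f pod-demo.yaml creates the Pod again. kubectl describe pods pod-demo | grep -i qos now reports QoS Class: Guaranteed, the highest priority level. kubectl describe pods myapp-deploy-5d9c6985f5-g4cwf | grep -i qos checks another Pod, which reports QoS Class: BestEffort, the lowest priority level, because it sets no requests or limits for cpu or memory.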

[root@master metrics]# kubectl describe pods pod-demo | grep -i qos

QoS Class: Burstable

[root@master metrics]# kubectl delete -f pod-demo.yaml

pod "pod-demo" deleted

[root@master metrics]# vim pod-demo.yaml

[root@master metrics]# cat pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  labels:
    app: myapp
    tier: frontend
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    resources:
      requests:
        cpu: "200m"
        memory: "512Mi"
      limits:
        cpu: "200m"
        memory: "512Mi"

[root@master metrics]# kubectl apply -f pod-demo.yaml

pod/pod-demo created

[root@master metrics]# kubectl describe pods pod-demo | grep -i qos

QoS Class: Guaranteed

[root@master metrics]# kubectl describe pods myapp-deploy-5d9c6985f5-g4cwf | grep -i qos

QoS Class: BestEffort
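The three QoS classes seen above follow from how requests and limits are set on a Pod's containers. The Python sketch below is a simplified illustration of that rule, not the code Kubernetes itself runs. The function name and the dictionary layout are hypothetical, only cpu and memory are considered, and quantities are compared as strings, so "0.2" and "200m" would wrongly count as different.

```python
# Sketch of the QoS classification rule, under simplifying assumptions:
# only cpu and memory are considered and quantities are compared as strings.

RESOURCES = ("cpu", "memory")


def qos_class(containers: list) -> str:
    """Classify a Pod from a list of {"requests": {...}, "limits": {...}} dicts."""
    any_set = False
    guaranteed = True
    for container in containers:
        requests = container.get("requests", {})
        limits = container.get("limits", {})
        if requests or limits:
            any_set = True
        for resource in RESOURCES:
            limit = limits.get(resource)
            # A missing request defaults to the limit when a limit is set.
            request = requests.get(resource, limit)
            if limit is None or request != limit:
                guaranteed = False
    if not any_set:
        return "BestEffort"  # nothing requested or limited anywhere
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    first = [{"requests": {"cpu": "200m", "memory": "128Mi"},
              "limits": {"cpu": "500m", "memory": "512Mi"}}]
    second = [{"requests": {"cpu": "200m", "memory": "512Mi"},
               "limits": {"cpu": "200m", "memory": "512Mi"}}]
    unset = [{}]
    for pod in (first, second, unset):
        print(qos_class(pod))
```

The three sample inputs mirror the first pod-demo.yaml, the edited pod-demo.yaml, and a Pod with no resources: section.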

3. wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/influxdb.yaml downloads the InfluxDB manifest. docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/heapster-influxdb-amd64:v1.5.2 pulls the image from a mirror registry in China, and docker tag then gives the pulled image a new tag. kubectl apply -f influxdb.yaml creates the resources. kubectl get svc -o wide -n kube-system | grep influxdb shows the Service, and kubectl get pods -o wide -n kube-system | grep influxdb shows the Pod. kubectl logs monitoring-influxdb-848b9b66f6-dw2cm -n kube-system prints the Pod's log.

[root@master metrics]# wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/influxdb.yaml

--2018-12-21 00:26:27-- https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/influxdb.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.108.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 960 [text/plain]

Saving to: ‘influxdb.yaml’

100%[=============================================================================================>] 960 --.-K/s in 0s

2018-12-21 00:26:28 (265 MB/s) - ‘influxdb.yaml’ saved [960/960]

[root@master metrics]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/heapster-influxdb-amd64:v1.5.2

v1.5.2: Pulling from google_containers/heapster-influxdb-amd64

9175292d842f: Already exists

ae9916a9ae3f: Pull complete

89c48d3742e2: Pull complete

Digest: sha256:ba884de48c3cc4ba7c6f4908e7ac8c8909380855bb5f47655a1b23eba507b0fd

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/heapster-influxdb-amd64:v1.5.2

[root@master metrics]# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/heapster-influxdb-amd64:v1.5.2 k8s.gcr.io/heapster-influxdb-amd64:v1.5.2

[root@master metrics]# kubectl apply -f influxdb.yaml

deployment.apps/monitoring-influxdb created

service/monitoring-influxdb created

[root@master metrics]# kubectl get svc -o wide -n kube-system | grep influxdb

monitoring-influxdb ClusterIP 10.98.176.22

[root@master metrics]# kubectl get pods -o wide -n kube-system | grep influxdb

monitoring-influxdb-848b9b66f6-dw2cm 1/1 Running 0 3m 10.244.1.22 node1.example.com

[root@master metrics]# kubectl logs monitoring-influxdb-848b9b66f6-dw2cm -n kube-system

ts=2018-12-21T07:28:13.290747Z lvl=info msg="InfluxDB starting" log_id=0CVvU7vW000 version=unknown branch=unknown commit=unknown

ts=2018-12-21T07:28:13.290820Z lvl=info msg="Go runtime" log_id=0CVvU7vW000 version=go1.10.3 maxprocs=2

ts=2018-12-21T07:28:15.820933Z lvl=info msg="Using data dir" log_id=0CVvU7vW000 service=store path=/data/data

ts=2018-12-21T07:28:15.822982Z lvl=info msg="Open store (start)" log_id=0CVvU7vW000 service=store trace_id=0CVvUHoW000 op_name=tsdb_open op_event=start

ts=2018-12-21T07:28:15.823048Z lvl=info msg="Open store (end)" log_id=0CVvU7vW000 service=store trace_id=0CVvUHoW000 op_name=tsdb_open op_event=end op_elapsed=0.070ms

ts=2018-12-21T07:28:15.823073Z lvl=info msg="Opened service" log_id=0CVvU7vW000 service=subscriber

ts=2018-12-21T07:28:15.823078Z lvl=info msg="Starting monitor service" log_id=0CVvU7vW000 service=monitor

ts=2018-12-21T07:28:15.823083Z lvl=info msg="Registered diagnostics client" log_id=0CVvU7vW000 service=monitor name=build

ts=2018-12-21T07:28:15.823086Z lvl=info msg="Registered diagnostics client" log_id=0CVvU7vW000 service=monitor name=runtime

ts=2018-12-21T07:28:15.823089Z lvl=info msg="Registered diagnostics client" log_id=0CVvU7vW000 service=monitor name=network

ts=2018-12-21T07:28:15.823095Z lvl=info msg="Registered diagnostics client" log_id=0CVvU7vW000 service=monitor name=system

ts=2018-12-21T07:28:15.823333Z lvl=info msg="Starting precreation service" log_id=0CVvU7vW000 service=shard-precreation check_interval=10m advance_period=30m

ts=2018-12-21T07:28:15.823358Z lvl=info msg="Starting snapshot service" log_id=0CVvU7vW000 service=snapshot

ts=2018-12-21T07:28:15.823366Z lvl=info msg="Starting continuous query service" log_id=0CVvU7vW000 service=continuous_querier

ts=2018-12-21T07:28:15.823372Z lvl=info msg="Starting HTTP service" log_id=0CVvU7vW000 service=httpd authentication=false

ts=2018-12-21T07:28:15.823377Z lvl=info msg="opened HTTP access log" log_id=0CVvU7vW000 service=httpd path=stderr

ts=2018-12-21T07:28:15.823557Z lvl=info msg="Listening on HTTP" log_id=0CVvU7vW000 service=httpd addr=[::]:8086 https=false

ts=2018-12-21T07:28:15.823586Z lvl=info msg="Starting retention policy enforcement service" log_id=0CVvU7vW000 service=retention check_interval=30m

ts=2018-12-21T07:28:15.824150Z lvl=info msg="Storing statistics" log_id=0CVvU7vW000 service=monitor db_instance=_internal db_rp=monitor interval=10s

ts=2018-12-21T07:28:15.824228Z lvl=info msg="Listening for signals" log_id=0CVvU7vW000
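The pull-and-retag step works because the kubelet looks up an image by the name written in the manifest, so a copy pulled from a mirror has to carry that name on whichever node runs the Pod. The Python sketch below only builds the two docker commands as strings. The helper names are hypothetical, and the mirror prefix is the one used in this walkthrough; it assumes the mirror keeps the same image name and tag as k8s.gcr.io.

```python
# Sketch: derive the docker pull / docker tag commands for a mirrored image.
# Assumption: the mirror stores images under the same name and tag as k8s.gcr.io.

MIRROR_PREFIX = "registry.cn-hangzhou.aliyuncs.com/google_containers/"
UPSTREAM_PREFIX = "k8s.gcr.io/"


def upstream_name(mirror_image: str) -> str:
    """Map a mirror image reference back to its k8s.gcr.io name."""
    if not mirror_image.startswith(MIRROR_PREFIX):
        raise ValueError(f"not a mirror image reference: {mirror_image}")
    return UPSTREAM_PREFIX + mirror_image[len(MIRROR_PREFIX):]


def retag_commands(mirror_image: str) -> list:
    """Return the commands to run on a node, in order."""
    return [
        f"docker pull {mirror_image}",
        f"docker tag {mirror_image} {upstream_name(mirror_image)}",
    ]


if __name__ == "__main__":
    image = MIRROR_PREFIX + "heapster-influxdb-amd64:v1.5.2"
    for command in retag_commands(image):
        print(command)
```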

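4. wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml downloads the RBAC manifest. wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/heapster.yaml downloads the Heapster manifest (note that it references an image; you can pull it from a mirror registry in China and retag it). Edit the file with vim heapster.yaml and check it with cat heapster.yaml; the changes are mainly to apiVersion:, selector:, and type: NodePort. kubectl apply -f heapster.yaml creates the resources. kubectl get svc -n kube-system -o wide | grep heapster shows the Service, and kubectl get pods -n kube-system -o wide | grep heapster shows the Pod. Opening the Service's port from the physical host shows that the service is reachable, but the requested page does not exist. kubectl logs heapster-84c9bc48c4-djqmb -n kube-system | grep created checks the Pod's log.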

[root@master metrics]# wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml

--2018-12-21 02:33:02-- https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.108.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 264 [text/plain]

Saving to: ‘heapster-rbac.yaml’

100%[=============================================================================================>] 264 --.-K/s in 0s

2018-12-21 02:33:03 (77.0 MB/s) - ‘heapster-rbac.yaml’ saved [264/264]

[root@master metrics]# wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/heapster.yaml

--2018-12-21 02:37:40-- https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/heapster.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.72.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.72.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1100 (1.1K) [text/plain]

Saving to: ‘heapster.yaml’

100%[=============================================================================================>] 1,100 --.-K/s in 0s

2018-12-21 02:37:41 (229 MB/s) - ‘heapster.yaml’ saved [1100/1100]

[root@master metrics]# cat heapster.yaml | grep image

image: k8s.gcr.io/heapster-amd64:v1.5.4

imagePullPolicy: IfNotPresent

[root@master metrics]# vim heapster.yaml

[root@master metrics]# cat heapster.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: heapster
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: k8s.gcr.io/heapster-amd64:v1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /heapster
        - --source=kubernetes:https://kubernetes.default
        - --sink=influxdb:http://monitoring-influxdb.kube-system.svc:8086
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  type: NodePort
  selector:
    k8s-app: heapster

[root@master metrics]# kubectl apply -f heapster.yaml

serviceaccount/heapster created

deployment.apps/heapster created

service/heapster created

[root@master metrics]# kubectl get svc -n kube-system -o wide | grep heapster

heapster NodePort 10.101.219.38

[root@master metrics]# kubectl get pods -n kube-system -o wide | grep heapster

heapster-84c9bc48c4-djqmb 1/1 Running 0 16s 10.244.2.25 node2.example.com

[root@master metrics]# kubectl logs heapster-84c9bc48c4-djqmb -n kube-system | grep created

I1221 07:57:02.902103 1 influxdb.go:312] created influxdb sink with options: host:monitoring-influxdb.kube-system.svc:8086 user:root db:k8s

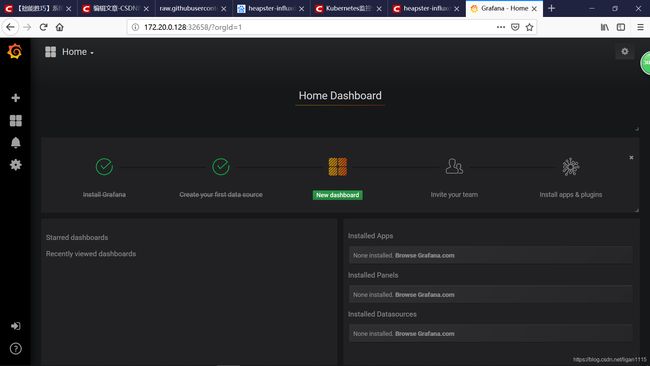

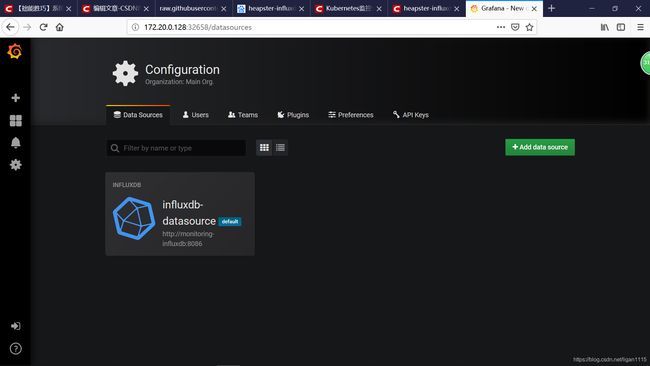

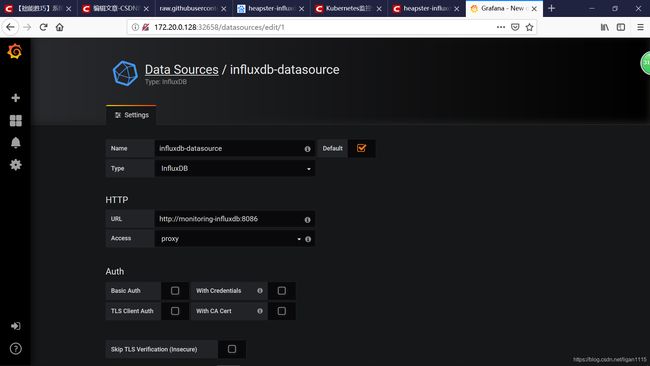

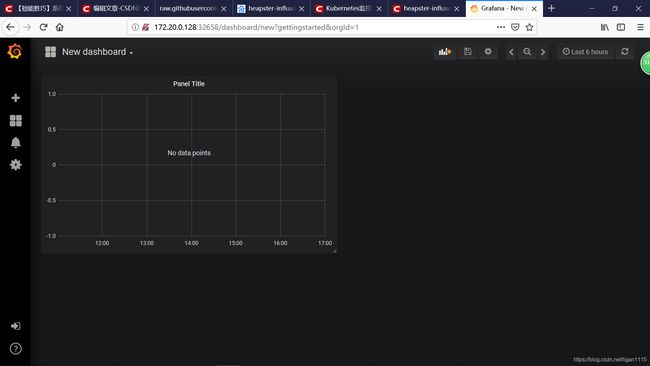
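The selector: edit in heapster.yaml goes together with the apiVersion: change: an apps/v1 Deployment must declare spec.selector, and its matchLabels have to match the labels on the Pod template. The Service finds the same Pods through its own selector. The Python sketch below illustrates that matching rule with the labels from the manifest; the function name is hypothetical and only equality-based matchLabels are modelled.

```python
# Sketch: equality-based label matching, as used by matchLabels and by a
# Service selector. Set-based matchExpressions are not modelled here.


def selector_matches(selector: dict, labels: dict) -> bool:
    """True when every key/value pair in the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    # Labels and selectors copied from heapster.yaml.
    template_labels = {"task": "monitoring", "k8s-app": "heapster"}
    deployment_selector = {"task": "monitoring", "k8s-app": "heapster"}
    service_selector = {"k8s-app": "heapster"}

    print(selector_matches(deployment_selector, template_labels))
    print(selector_matches(service_selector, template_labels))
    # A selector naming a different app does not match these Pods.
    print(selector_matches({"k8s-app": "grafana"}, template_labels))
```

A selector may be a subset of the Pod's labels, which is why the Service can select on k8s-app alone.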

5. wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/grafana.yaml downloads the Grafana manifest. Edit it with vim grafana.yaml and check it with cat grafana.yaml. kubectl apply -f grafana.yaml creates the resources. kubectl get svc -n kube-system -o wide | grep grafana shows the Service, and kubectl get pods -n kube-system -o wide | grep grafana shows the Pod. Then open the Service's port from the physical host.

[root@master metrics]# wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/grafana.yaml

--2018-12-21 03:07:32-- https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/grafana.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.108.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2276 (2.2K) [text/plain]

Saving to: ‘grafana.yaml’

100%[=============================================================================================>] 2,276 --.-K/s in 0s

2018-12-21 03:07:32 (68.7 MB/s) - ‘grafana.yaml’ saved [2276/2276]

[root@master metrics]# vim grafana.yaml

[root@master metrics]# cat grafana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: grafana
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: k8s.gcr.io/heapster-grafana-amd64:v5.0.4
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
          # The following env variables are required to make Grafana accessible via
          # the kubernetes api-server proxy. On production clusters, we recommend
          # removing these env variables, setup auth for grafana, and expose the grafana
          # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  # You could also use NodePort to expose the service at a randomly-generated port
  # type: NodePort
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
  type: NodePort

[root@master metrics]# kubectl apply -f grafana.yaml

deployment.apps/monitoring-grafana created

service/monitoring-grafana created

[root@master metrics]# kubectl get svc -n kube-system -o wide | grep grafana

monitoring-grafana NodePort 10.104.248.107

[root@master metrics]# kubectl get pods -n kube-system -o wide | grep grafana

monitoring-grafana-555545f477-bmqgw 1/1 Running 0 7s 10.244.2.27 node2.example.com
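Because the Grafana Service is of type NodePort, it is reached from outside the cluster at a node's address plus the port Kubernetes assigned, not at port 80 or 3000. The Python sketch below builds such a URL. The function name and the example port are hypothetical (the assigned port is not shown in the output above), and the range check assumes the cluster uses the default node port range of 30000-32767.

```python
# Sketch: build the URL for a NodePort Service.
# Assumption: the API server uses the default node port range 30000-32767.

DEFAULT_NODEPORT_RANGE = range(30000, 32768)


def nodeport_url(node_address: str, node_port: int, path: str = "/") -> str:
    """Return http://<node>:<nodePort><path>, rejecting ports outside the range."""
    if node_port not in DEFAULT_NODEPORT_RANGE:
        raise ValueError(f"{node_port} is outside the default NodePort range")
    return f"http://{node_address}:{node_port}{path}"


if __name__ == "__main__":
    # 31000 is a placeholder; read the real value from the PORT(S) column
    # of kubectl get svc -n kube-system.
    print(nodeport_url("node2.example.com", 31000))
```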

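6. kubectl top pods queries Pod usage but reports that metrics are not available, and kubectl top nodes reports the same for nodes. (Note: starting with Kubernetes 1.11, support for Heapster is gradually being dropped. Heapster combined with metrics can still be used for now, but may stop working in later releases.)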

[root@master metrics]# kubectl top pods

W1221 04:18:16.784582 35818 top_pod.go:263] Metrics not available for pod default/myapp-deploy-5d9c6985f5-g4cwf, age: 19h40m21.784563934s

error: Metrics not available for pod default/myapp-deploy-5d9c6985f5-g4cwf, age: 19h40m21.784563934s

[root@master metrics]# kubectl top nodes

error: metrics not available yet