Netty Memory Pool Analysis

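Table of contents

- Purpose of the memory pool
- Reference counting
  - AbstractReferenceCountedByteBuf
    - Version 1
    - Version 2
    - Version 3
- Memory management
  - PoolChunk
    - Allocating memory
    - Freeing memory
  - PoolSubpage
    - Allocating memory
    - Freeing memory
  - PoolChunkList
  - PoolArena
    - Allocating memory
    - Freeing memory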

Over the past few days I read through Netty's memory pool. The main classes involved are PoolArena, PoolChunk, PoolChunkList, and PoolSubpage.

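Purpose of the memory pool

When I/O is performed on a buffer that lives in the JVM heap, the data is first copied into direct memory, which costs an extra copy. Direct memory, on the other hand, is much slower to allocate and free than heap memory. Netty therefore introduces a memory pool: for direct memory it removes the cost of frequent allocation and release, and for heap memory it reduces GC pressure.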

Reference counting

The memory pool manages the lifetime of memory by reference counting. The implementing class is AbstractReferenceCountedByteBuf.

AbstractReferenceCountedByteBuf

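While reading this class I had two questions:

- Why is the volatile field refCnt accessed through Unsafe with a memory offset instead of being read directly?

- Why is the reference count stored as 2x, with an odd value meaning a count of 0, instead of simply using 0, 1, 2, 3?

The git history answers the first question. The relevant commit message reads: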

Use a non-volatile read for ensureAccessible() whenever possible to reduce overhead and allow better inlining. (#8266)

Motiviation:

At the moment whenever ensureAccessible() is called in our ByteBuf implementations (which is basically on each operation) we will do a volatile read. That per-se is not such a bad thing but the problem here is that it will also reduce the the optimizations that the compiler / jit can do. For example as these are volatile it can not eliminate multiple loads of it when inline the methods of ByteBuf which happens quite frequently because most of them a quite small and very hot. That is especially true for all the methods that act on primitives.

It gets even worse as people often call a lot of these after each other in the same method or even use method chaining here.

The idea of the change is basically just ue a non-volatile read for the ensureAccessible() check as its a best-effort implementation to detect acting on already released buffers anyway as even with a volatile read it could happen that the user will release it in another thread before we actual access the buffer after the reference check.

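The change is mainly about performance: a non-volatile read keeps the JIT's optimizations from being defeated.

The second question spans several commits, so let's go through each version in turn:

Version 1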

    private ByteBuf retain0(int increment) {
        for (;;) {
            int refCnt = this.refCnt;
            final int nextCnt = refCnt + increment;

            // Ensure we not resurrect (which means the refCnt was 0) and also that we encountered an overflow.
            if (nextCnt <= increment) {
                throw new IllegalReferenceCountException(refCnt, increment);
            }
            if (refCntUpdater.compareAndSet(this, refCnt, nextCnt)) {
                break;
            }
        }
        return this;
    }

    private boolean release0(int decrement) {
        for (;;) {
            int refCnt = this.refCnt;
            if (refCnt < decrement) {
                throw new IllegalReferenceCountException(refCnt, -decrement);
            }

            if (refCntUpdater.compareAndSet(this, refCnt, refCnt - decrement)) {
                if (refCnt == decrement) {
                    deallocate();
                    return true;
                }
                return false;
            }
        }
    }

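Version 1 represents the reference count as 0, 1, 2, 3 and uses compareAndSet to increment and decrement it. Under heavy contention the CAS fails and is retried repeatedly, which hurts performance.

Version 2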

    private ByteBuf retain0(final int increment) {
        int oldRef = refCntUpdater.getAndAdd(this, increment);
        if (oldRef <= 0 || oldRef + increment < oldRef) {
            // Ensure we don't resurrect (which means the refCnt was 0) and also that we encountered an overflow.
            refCntUpdater.getAndAdd(this, -increment);
            throw new IllegalReferenceCountException(oldRef, increment);
        }
        return this;
    }

    private boolean release0(int decrement) {
        int oldRef = refCntUpdater.getAndAdd(this, -decrement);
        if (oldRef == decrement) {
            deallocate();
            return true;
        } else if (oldRef < decrement || oldRef - decrement > oldRef) {
            // Ensure we don't over-release, and avoid underflow.
            refCntUpdater.getAndAdd(this, decrement);
            throw new IllegalReferenceCountException(oldRef, decrement);
        }
        return false;
    }

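Version 2 switches to getAndAdd, which removes the retry loop and fixes the performance problem, but it introduces a new one. Consider the following sequence:

- A ByteBuf currently has a refCnt of 1.

- Thread 1 calls release0(1); after getAndAdd, refCnt is 0.

- Before thread 1 runs deallocate, thread 2 calls retain0(1); after getAndAdd, refCnt is 1.

- Thread 3 calls retain0(1), sees an old value of 1, and successfully increments the count.

- Thread 1 runs deallocate and the memory is reclaimed, even though thread 3 believes it holds a valid reference.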
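The interleaving above can be replayed deterministically on a single thread. The following is only a sketch: the class GetAndAddRaceDemo, its fields, and the replay method are hypothetical names, and an AtomicInteger stands in for the refCnt field that Netty updates through refCntUpdater.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Replays the version 2 interleaving step by step on one thread. */
final class GetAndAddRaceDemo {
    // Hypothetical stand-in for the buffer's refCnt field.
    static final AtomicInteger refCnt = new AtomicInteger(1);
    static boolean deallocated = false;

    /** Returns true if a retain succeeded on a buffer that ends up deallocated. */
    static boolean replay() {
        refCnt.set(1);
        deallocated = false;

        // Thread 1: first half of release0(1). The count drops to 0,
        // but deallocate() has not run yet.
        int t1Old = refCnt.getAndAdd(-1);
        boolean t1WillDeallocate = (t1Old == 1);

        // Thread 2: retain0(1). It observes oldRef == 0 and will roll back and throw,
        // but until the rollback happens the count reads 1 again.
        int t2Old = refCnt.getAndAdd(1);

        // Thread 3: retain0(1) runs inside that window. oldRef == 1 looks valid.
        int t3Old = refCnt.getAndAdd(1);
        boolean t3Succeeded = !(t3Old <= 0 || t3Old + 1 < t3Old);

        // Thread 2: rollback before throwing IllegalReferenceCountException.
        if (t2Old <= 0) {
            refCnt.getAndAdd(-1);
        }

        // Thread 1: second half of release0(1).
        if (t1WillDeallocate) {
            deallocated = true;
        }
        return t3Succeeded && deallocated;
    }

    public static void main(String[] args) {
        System.out.println("retain succeeded on a freed buffer: " + replay());
    }
}
```

The point of the replay is that thread 3 cannot tell the transient value written by thread 2 apart from a genuine live reference.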

Version 3

    private ByteBuf retain0(final int increment) {
        // all changes to the raw count are 2x the "real" change
        int adjustedIncrement = increment << 1; // overflow OK here
        int oldRef = refCntUpdater.getAndAdd(this, adjustedIncrement);
        if ((oldRef & 1) != 0) {
            throw new IllegalReferenceCountException(0, increment);
        }
        // don't pass 0!
        if ((oldRef <= 0 && oldRef + adjustedIncrement >= 0)
                || (oldRef >= 0 && oldRef + adjustedIncrement < oldRef)) {
            // overflow case
            refCntUpdater.getAndAdd(this, -adjustedIncrement);
            throw new IllegalReferenceCountException(realRefCnt(oldRef), increment);
        }
        return this;
    }

    private boolean release0(int decrement) {
        int rawCnt = nonVolatileRawCnt(), realCnt = toLiveRealCnt(rawCnt, decrement);
        if (decrement == realCnt) {
            if (refCntUpdater.compareAndSet(this, rawCnt, 1)) {
                deallocate();
                return true;
            }
            return retryRelease0(decrement);
        }
        return releaseNonFinal0(decrement, rawCnt, realCnt);
    }

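Version 3 uses an even raw value to mean a non-zero reference count and an odd value to mean zero, and every increment and decrement is applied as 2x. Once the raw value becomes odd it stays odd, so a released buffer can never look live again, which fixes the problem in version 2.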
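To make the even/odd encoding concrete, here is a minimal sketch of such a counter. EvenOddRefCnt is a hypothetical class, not Netty's implementation: it uses an AtomicInteger instead of a field updater, returns false from retain instead of throwing, only supports steps of 1, and ignores overflow.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Simplified even/odd reference counter (illustration only). */
final class EvenOddRefCnt {
    // Raw value: even means live with real count raw / 2, odd means released.
    private final AtomicInteger raw = new AtomicInteger(2); // real count 1

    int realCnt() {
        int r = raw.get();
        return (r & 1) != 0 ? 0 : r >>> 1;
    }

    /** Adds one reference; returns false if the object was already released. */
    boolean retain() {
        int old = raw.getAndAdd(2);
        // An odd value plus 2 is still odd, so a late retain can never
        // make a released object look live again.
        return (old & 1) == 0;
    }

    /** Drops one reference; returns true if this call released the object. */
    boolean release() {
        for (;;) {
            int r = raw.get();
            if ((r & 1) != 0) {
                throw new IllegalStateException("already released");
            }
            if (r == 2) {
                // Last reference: switch to the odd "released" state.
                if (raw.compareAndSet(2, 1)) {
                    return true;
                }
            } else if (raw.compareAndSet(r, r - 2)) {
                return false;
            }
        }
    }

    public static void main(String[] args) {
        EvenOddRefCnt c = new EvenOddRefCnt();
        System.out.println(c.release()); // last reference dropped
        System.out.println(c.retain());  // retain after release is rejected
    }
}
```

Compared with version 2, a failed retain needs no rollback for the released state to stay visible, because parity survives any number of additions of 2.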

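Memory management

Netty allocates memory with the buddy algorithm, which limits memory fragmentation. The implementation is in the PoolChunk class.

PoolChunk

As the figure shows, a chunk is one whole block of allocated memory. It is divided into pages, each pageSize bytes. The buddy algorithm is represented by a binary tree: the root covers the whole chunk (chunkSize), each node on level 1 covers chunkSize/2, and so on down to the leaves, which cover pageSize each. With the height of the tree being maxOrder (counting from 0), we have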

chunkSize = 2^maxOrder * pageSize

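In PoolChunk, the memoryMap array represents this binary tree. Its length is the total number of nodes plus 1, and memoryMap[1] is the root, so for any node memoryMap[i] the left child is memoryMap[2i] and the right child is memoryMap[2i+1]. memoryMap[i] stores the smallest depth at which a free node exists in the subtree rooted at i; the initial values are shown in the figure. When memoryMap[i] = maxOrder + 1, the subtree rooted at i is fully allocated. A second array, depthMap, stores the depth of each node in the tree; its initial values are the same as those of memoryMap.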
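The following sketch shows how such an array can drive buddy allocation on a tiny tree. It is a simplified illustration under assumptions, not Netty's code: BuddyTreeSketch and its method bodies are hypothetical, the descent loop is written in a more explicit form than the bit tricks Netty uses, and the maxOrder value of 11 in the size calculation is an assumed default.

```java
/** Buddy allocation over a memoryMap-style array (illustration only). */
final class BuddyTreeSketch {
    final int maxOrder;
    final byte unusable;
    final byte[] memoryMap; // smallest free depth in each subtree
    final byte[] depthMap;  // fixed depth of each node

    BuddyTreeSketch(int maxOrder) {
        this.maxOrder = maxOrder;
        this.unusable = (byte) (maxOrder + 1);
        int leaves = 1 << maxOrder;
        memoryMap = new byte[leaves << 1];
        depthMap = new byte[leaves << 1];
        int idx = 1;
        for (int d = 0; d <= maxOrder; d++) {
            for (int p = 0; p < (1 << d); p++) {
                memoryMap[idx] = (byte) d;
                depthMap[idx] = (byte) d;
                idx++;
            }
        }
    }

    static int chunkSize(int pageSize, int maxOrder) {
        return pageSize << maxOrder; // chunkSize = 2^maxOrder * pageSize
    }

    /** Returns the index of a free node at depth d, or -1 if there is none. */
    int allocateNode(int d) {
        int id = 1;
        if (memoryMap[id] > d) {
            return -1;
        }
        for (int depth = 0; depth < d; depth++) {
            id <<= 1;                 // try the left child first
            if (memoryMap[id] > d) {
                id ^= 1;              // otherwise take its buddy on the right
            }
        }
        memoryMap[id] = unusable;
        // Propagate upward: a parent holds the minimum of its two children.
        for (int p = id >>> 1; p >= 1; p >>>= 1) {
            memoryMap[p] = (byte) Math.min(memoryMap[p << 1], memoryMap[(p << 1) + 1]);
        }
        return id;
    }

    void free(int id) {
        memoryMap[id] = depthMap[id];
        while (id > 1) {
            int parent = id >>> 1;
            byte left = memoryMap[parent << 1];
            byte right = memoryMap[(parent << 1) + 1];
            byte childDepth = depthMap[id];
            if (left == childDepth && right == childDepth) {
                // Both buddies are completely free, so the parent is free again.
                memoryMap[parent] = (byte) (childDepth - 1);
            } else {
                memoryMap[parent] = (byte) Math.min(left, right);
            }
            id = parent;
        }
    }

    public static void main(String[] args) {
        BuddyTreeSketch t = new BuddyTreeSketch(2); // 4 leaves at indices 4..7
        System.out.println(t.allocateNode(2));      // first free leaf
        System.out.println(chunkSize(8192, 11));    // assumed default sizes
    }
}
```

With a page size of 8192 and an assumed maxOrder of 11, the formula gives a chunk of 16777216 bytes (16 MiB).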

The PoolChunk constructor is shown below:

    PoolChunk(PoolArena<T> arena, T memory, int pageSize, int maxOrder, int pageShifts, int chunkSize, int offset) {
        unpooled = false;
        // the owning PoolArena
        this.arena = arena;
        // byte[] or ByteBuffer
        this.memory = memory;
        // page size, 8K by default
        this.pageSize = pageSize;
        // log2 of the page size
        this.pageShifts = pageShifts;
        // height of the tree
        this.maxOrder = maxOrder;
        // total size of the chunk
        this.chunkSize = chunkSize;
        // offset used for cache-line alignment; only relevant when memory is a direct buffer
        this.offset = offset;
        unusable = (byte) (maxOrder + 1);
        log2ChunkSize = log2(chunkSize);
        subpageOverflowMask = ~(pageSize - 1);
        freeBytes = chunkSize;

        assert maxOrder < 30 : "maxOrder should be < 30, but is: " + maxOrder;
        maxSubpageAllocs = 1 << maxOrder;

        // Generate the memory map.
        memoryMap = new byte[maxSubpageAllocs << 1];
        depthMap = new byte[memoryMap.length];
        int memoryMapIndex = 1;
        for (int d = 0; d <= maxOrder; ++ d) {
            // move down the tree one level at a time
            int depth = 1 << d;
            for (int p = 0; p < depth; ++ p) {
                // in each level traverse left to right and set value to the depth of subtree
                memoryMap[memoryMapIndex] = (byte) d;
                depthMap[memoryMapIndex] = (byte) d;
                memoryMapIndex ++;
            }
        }

        // subpages is the array of leaf nodes
        subpages = newSubpageArray(maxSubpageAllocs);
        cachedNioBuffers = new ArrayDeque<ByteBuffer>(8);
    }

Allocating memory

    boolean allocate(PooledByteBuf<T> buf, int reqCapacity, int normCapacity) {
        final long handle;
        if ((normCapacity & subpageOverflowMask) != 0) {
            // >= pageSize
            handle = allocateRun(normCapacity);
        } else {
            handle = allocateSubpage(normCapacity);
        }

        if (handle < 0) {
            return false;
        }
        ByteBuffer nioBuffer = cachedNioBuffers != null ? cachedNioBuffers.pollLast() : null;
        initBuf(buf, nioBuffer, handle, reqCapacity);
        return true;
    }

reqCapacity is the size actually requested; normCapacity is that size rounded up to a multiple of 16 or to a power of two.
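As a rough illustration of that rounding and of the subpageOverflowMask test used in allocate, consider the sketch below. NormalizeSketch and its methods are hypothetical; the rule (below 512 round up to a multiple of 16, otherwise round up to a power of two) follows the description in this article, and overflow and huge sizes are deliberately ignored.

```java
/** Size normalization and the page-size mask test (illustration only). */
final class NormalizeSketch {
    static int normalize(int reqCapacity) {
        if (reqCapacity < 512) {
            // Round up to the next multiple of 16.
            return (reqCapacity + 15) & ~15;
        }
        // Round up to the next power of two.
        int highest = Integer.highestOneBit(reqCapacity);
        return highest == reqCapacity ? reqCapacity : highest << 1;
    }

    /** Mirrors (normCapacity & subpageOverflowMask) != 0, i.e. normCapacity >= pageSize. */
    static boolean atLeastOnePage(int normCapacity, int pageSize) {
        int subpageOverflowMask = ~(pageSize - 1);
        return (normCapacity & subpageOverflowMask) != 0;
    }

    public static void main(String[] args) {
        int pageSize = 8192;
        int[] requests = {1, 100, 500, 513, 9000};
        for (int req : requests) {
            int norm = normalize(req);
            System.out.println(req + " -> " + norm + ", run=" + atLeastOnePage(norm, pageSize));
        }
    }
}
```

Because pageSize is a power of two, the mask keeps only the bits at or above the page-size bit, so a single AND replaces a comparison.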

The method first checks whether normCapacity is at least pageSize, which gives two cases. They are analyzed in turn:

- Allocations smaller than a page

    private long allocateSubpage(int normCapacity) {
        // Obtain the head of the PoolSubPage pool that is owned by the PoolArena and synchronize on it.
        // This is need as we may add it back and so alter the linked-list structure.
        PoolSubpage<T> head = arena.findSubpagePoolHead(normCapacity);
        int d = maxOrder; // subpages are only be allocated from pages i.e., leaves
        synchronized (head) {
            int id = allocateNode(d);
            if (id < 0) {
                return id;
            }

            final PoolSubpage<T>[] subpages = this.subpages;
            final int pageSize = this.pageSize;

            freeBytes -= pageSize;

            int subpageIdx = subpageIdx(id);
            PoolSubpage<T> subpage = subpages[subpageIdx];
            if (subpage == null) {
                subpage = new PoolSubpage<T>(head, this, id, runOffset(id), pageSize, normCapacity);
                subpages[subpageIdx] = subpage;
            } else {
                subpage.init(head, normCapacity);
            }
            return subpage.allocate();
        }
    }

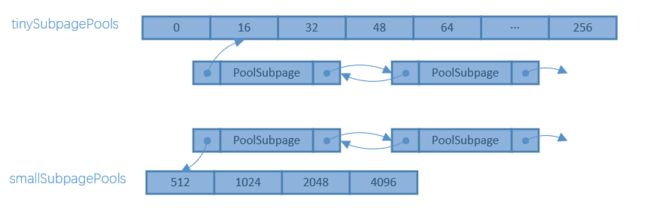

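PoolArena holds two arrays, tinySubpagePools and smallSubpagePools. They store the list heads for subpages that serve sizes below 512 bytes and sizes from 512 bytes up to the page size, respectively, as shown in the figure.

After the head has been located in one of the two arrays according to normCapacity, a free node is allocated at the bottom level of the tree, that is, among the leaves. subpages is an array of PoolSubpage covering all leaves. The subpage that corresponds to the free node is initialized with an element size of normCapacity and linked into the list at head. Later requests for normCapacity bytes can then be served directly from a PoolSubpage found in that list. Allocation inside a PoolSubpage is covered in the PoolSubpage section.

- Allocations of at least one page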

    private long allocateRun(int normCapacity) {
        int d = maxOrder - (log2(normCapacity) - pageShifts);
        int id = allocateNode(d);
        if (id < 0) {
            return id;
        }
        freeBytes -= runLength(id);
        return id;
    }

First compute the depth d of the tree level whose nodes are normCapacity bytes in size, then allocate a free node on level d.
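A small worked example of the depth formula may help. The sketch below assumes a page size of 8192 (pageShifts 13) and a maxOrder of 11, which gives a 16 MiB chunk; RunDepthSketch and its helpers are hypothetical names, and log2 is implemented here with Integer.numberOfLeadingZeros.

```java
/** Worked example for d = maxOrder - (log2(normCapacity) - pageShifts). */
final class RunDepthSketch {
    static int log2(int v) {
        return 31 - Integer.numberOfLeadingZeros(v);
    }

    static int depthFor(int normCapacity, int maxOrder, int pageShifts) {
        return maxOrder - (log2(normCapacity) - pageShifts);
    }

    /** Size in bytes of one node at the given depth. */
    static int nodeSize(int depth, int log2ChunkSize) {
        return 1 << (log2ChunkSize - depth);
    }

    public static void main(String[] args) {
        int maxOrder = 11;      // assumed value
        int pageShifts = 13;    // pageSize = 8192
        int log2ChunkSize = 24; // 8192 << 11 = 16 MiB
        int[] sizes = {8192, 16384, 1 << 20, 1 << 24};
        for (int size : sizes) {
            int d = depthFor(size, maxOrder, pageShifts);
            System.out.println(size + " bytes -> depth " + d
                    + ", node size " + nodeSize(d, log2ChunkSize));
        }
    }
}
```

Each time the request doubles, the target level moves one step closer to the root, and a request of exactly one chunk lands on depth 0.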

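Back in allocate: once memory has been allocated we get a handle. The low 32 bits of the handle hold memoryMapIdx and the high 32 bits hold bitmapIdx; bitmapIdx is only used for allocations smaller than a page. initBuf writes the start offset and size of the allocated memory into buf, which completes the initialization of buf.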
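The handle layout can be sketched as plain bit packing. The packing expression and the 0x3FFFFFFF mask below are taken from the toHandle and free code quoted in this article; the class HandleSketch and its method names are hypothetical.

```java
/** Packing and unpacking of an allocation handle (illustration only). */
final class HandleSketch {
    /** Handle for a subpage element: marker bit, bitmapIdx in the high half, memoryMapIdx in the low half. */
    static long subpageHandle(int bitmapIdx, int memoryMapIdx) {
        return 0x4000000000000000L | (long) bitmapIdx << 32 | memoryMapIdx;
    }

    static int memoryMapIdx(long handle) {
        return (int) handle;
    }

    /** High 32 bits including the marker bit. */
    static int rawBitmapIdx(long handle) {
        return (int) (handle >>> 32);
    }

    /** Element index inside the subpage, with the marker bit stripped. */
    static int bitmapIdx(long handle) {
        return rawBitmapIdx(handle) & 0x3FFFFFFF;
    }

    static boolean isSubpage(long handle) {
        return rawBitmapIdx(handle) != 0;
    }

    public static void main(String[] args) {
        long run = 1024L;                      // a run handle is just the node index
        long sub = subpageHandle(0, 2048);     // element 0 of the subpage at leaf 2048
        System.out.println(isSubpage(run) + " " + isSubpage(sub));
        System.out.println(memoryMapIdx(sub) + " " + bitmapIdx(sub));
    }
}
```

The marker bit is what lets element 0 of a subpage be told apart from a whole-page run, since both would otherwise have zero in the high 32 bits.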

Freeing memory

    void free(long handle, ByteBuffer nioBuffer) {
        int memoryMapIdx = memoryMapIdx(handle);
        int bitmapIdx = bitmapIdx(handle);

        if (bitmapIdx != 0) {
            // free a subpage
            PoolSubpage<T> subpage = subpages[subpageIdx(memoryMapIdx)];
            assert subpage != null && subpage.doNotDestroy;

            // Obtain the head of the PoolSubPage pool that is owned by the PoolArena and synchronize on it.
            // This is need as we may add it back and so alter the linked-list structure.
            PoolSubpage<T> head = arena.findSubpagePoolHead(subpage.elemSize);
            synchronized (head) {
                if (subpage.free(head, bitmapIdx & 0x3FFFFFFF)) {
                    return;
                }
            }
        }
        freeBytes += runLength(memoryMapIdx);
        setValue(memoryMapIdx, depth(memoryMapIdx));
        updateParentsFree(memoryMapIdx);

        if (nioBuffer != null && cachedNioBuffers != null &&
                cachedNioBuffers.size() < PooledByteBufAllocator.DEFAULT_MAX_CACHED_BYTEBUFFERS_PER_CHUNK) {
            cachedNioBuffers.offer(nioBuffer);
        }
    }

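free takes the same handle. Netty divides memory by size into tiny, small, normal, and huge: below 512 bytes, from 512 bytes up to pageSize, from pageSize up to chunkSize, and above chunkSize. For tiny and small memory only one element of the subpage is released, as described later. For normal memory, the entry of the node in memoryMap is reset to the depth of that node, which marks it as free, and the parent nodes are updated in turn.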
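The four size classes can be written down as a small classification function. This is a sketch with hypothetical names (SizeClassSketch, classify); the boundaries follow the description above and the comparisons visible in the PoolArena.allocate code quoted later, where a size equal to chunkSize still counts as normal.

```java
/** Classifies a normalized capacity into the four size classes (illustration only). */
final class SizeClassSketch {
    enum SizeClass { TINY, SMALL, NORMAL, HUGE }

    static SizeClass classify(int normCapacity, int pageSize, int chunkSize) {
        if (normCapacity < 512) {
            return SizeClass.TINY;   // served from a subpage
        }
        if (normCapacity < pageSize) {
            return SizeClass.SMALL;  // served from a subpage
        }
        if (normCapacity <= chunkSize) {
            return SizeClass.NORMAL; // served as a run of pages
        }
        return SizeClass.HUGE;       // not pooled
    }

    public static void main(String[] args) {
        int pageSize = 8192;
        int chunkSize = 16 * 1024 * 1024; // assumed chunk size
        int[] sizes = {16, 512, 8192, chunkSize, chunkSize + 1};
        for (int size : sizes) {
            System.out.println(size + " -> " + classify(size, pageSize, chunkSize));
        }
    }
}
```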

PoolSubpage

PoolSubpage splits one page into equally sized elements and allocates them one at a time. Its constructor is as follows:

    PoolSubpage(PoolSubpage<T> head, PoolChunk<T> chunk, int memoryMapIdx, int runOffset, int pageSize, int elemSize) {
        // the chunk this subpage belongs to
        this.chunk = chunk;
        // index in memoryMap
        this.memoryMapIdx = memoryMapIdx;
        // offset relative to the start of the chunk
        this.runOffset = runOffset;
        // page size
        this.pageSize = pageSize;
        // bitmap recording which elements of the subpage are in use
        bitmap = new long[pageSize >>> 10]; // pageSize / 16 / 64
        init(head, elemSize);
    }

    void init(PoolSubpage<T> head, int elemSize) {
        doNotDestroy = true;
        // element size
        this.elemSize = elemSize;
        if (elemSize != 0) {
            maxNumElems = numAvail = pageSize / elemSize;
            nextAvail = 0;
            // initialize the bitmap, marking every element as free
            bitmapLength = maxNumElems >>> 6;
            if ((maxNumElems & 63) != 0) {
                bitmapLength ++;
            }

            for (int i = 0; i < bitmapLength; i ++) {
                bitmap[i] = 0;
            }
        }
        // link into the list at head in the tiny(small)SubpagePools array of PoolArena
        addToPool(head);
    }

Allocating memory

    long allocate() {
        if (elemSize == 0) {
            return toHandle(0);
        }

        if (numAvail == 0 || !doNotDestroy) {
            return -1;
        }

        final int bitmapIdx = getNextAvail();
        int q = bitmapIdx >>> 6;
        int r = bitmapIdx & 63;
        assert (bitmap[q] >>> r & 1) == 0;
        bitmap[q] |= 1L << r;

        if (-- numAvail == 0) {
            removeFromPool();
        }

        return toHandle(bitmapIdx);
    }

    private int getNextAvail() {
        int nextAvail = this.nextAvail;
        if (nextAvail >= 0) {
            this.nextAvail = -1;
            return nextAvail;
        }
        return findNextAvail();
    }

    private long toHandle(int bitmapIdx) {
        return 0x4000000000000000L | (long) bitmapIdx << 32 | memoryMapIdx;
    }

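The nextAvail field speeds up the search for a free element. On free, nextAvail is set to the element that was just freed, so the next allocate can use it directly. When nextAvail is -1, the bitmap array has to be scanned for a free element. After an element has been handed out, if every element in the subpage is now allocated, the subpage is removed from the tiny(small)SubpagePools list in PoolArena.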
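The bitmap and the nextAvail hint can be sketched in isolation as follows. BitmapSketch is a hypothetical, stripped-down class: it leaves out the pool list handling and the handle encoding, and its scan uses Long.numberOfTrailingZeros, which is an implementation choice of this sketch rather than something taken from the article.

```java
/** Bitmap-based element allocation with a nextAvail hint (illustration only). */
final class BitmapSketch {
    private final long[] bitmap; // one bit per element, 1 = in use
    private final int maxNumElems;
    private int numAvail;
    private int nextAvail = 0;   // -1 means "no hint, scan the bitmap"

    BitmapSketch(int maxNumElems) {
        this.maxNumElems = maxNumElems;
        this.numAvail = maxNumElems;
        this.bitmap = new long[(maxNumElems + 63) >>> 6];
    }

    /** Returns the index of the allocated element, or -1 if the subpage is full. */
    int allocate() {
        if (numAvail == 0) {
            return -1;
        }
        int idx = nextAvail >= 0 ? nextAvail : findNextAvail();
        nextAvail = -1;
        bitmap[idx >>> 6] |= 1L << (idx & 63);
        numAvail--;
        return idx;
    }

    private int findNextAvail() {
        for (int i = 0; i < bitmap.length; i++) {
            long free = ~bitmap[i];
            if (free != 0) {
                int idx = (i << 6) | Long.numberOfTrailingZeros(free);
                if (idx < maxNumElems) {
                    return idx;
                }
            }
        }
        return -1;
    }

    void free(int idx) {
        bitmap[idx >>> 6] &= ~(1L << (idx & 63));
        nextAvail = idx; // the next allocate can reuse this element without scanning
        numAvail++;
    }

    public static void main(String[] args) {
        BitmapSketch s = new BitmapSketch(4);
        s.allocate();
        s.allocate();
        s.free(0);
        System.out.println(s.allocate()); // reuses the element that was just freed
    }
}
```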

Freeing memory

    boolean free(PoolSubpage<T> head, int bitmapIdx) {
        if (elemSize == 0) {
            return true;
        }
        int q = bitmapIdx >>> 6;
        int r = bitmapIdx & 63;
        assert (bitmap[q] >>> r & 1) != 0;
        bitmap[q] ^= 1L << r;

        setNextAvail(bitmapIdx);

        if (numAvail ++ == 0) {
            addToPool(head);
            return true;
        }

        if (numAvail != maxNumElems) {
            return true;
        } else {
            // Subpage not in use (numAvail == maxNumElems)
            if (prev == next) {
                // Do not remove if this subpage is the only one left in the pool.
                return true;
            }

            // Remove this subpage from the pool if there are other subpages left in the pool.
            doNotDestroy = false;
            removeFromPool();
            return false;
        }
    }

PoolChunkList

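PoolChunkList is a linked list of PoolChunks. Its two fields, minUsage and maxUsage, bound the memory usage of the chunks it holds. As memory is allocated from and returned to a PoolChunk, its usage may leave that range, in which case the chunk is moved to another PoolChunkList. PoolArena has six such lists, each with a different usage range.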

PoolArena

PoolArena is the entry point for allocating and freeing memory. Its constructor is as follows:

    protected PoolArena(PooledByteBufAllocator parent, int pageSize,
          int maxOrder, int pageShifts, int chunkSize, int cacheAlignment) {
        this.parent = parent;
        this.pageSize = pageSize;
        this.maxOrder = maxOrder;
        this.pageShifts = pageShifts;
        this.chunkSize = chunkSize;
        directMemoryCacheAlignment = cacheAlignment;
        directMemoryCacheAlignmentMask = cacheAlignment - 1;
        subpageOverflowMask = ~(pageSize - 1);
        tinySubpagePools = newSubpagePoolArray(numTinySubpagePools);
        for (int i = 0; i < tinySubpagePools.length; i ++) {
            tinySubpagePools[i] = newSubpagePoolHead(pageSize);
        }

        numSmallSubpagePools = pageShifts - 9;
        smallSubpagePools = newSubpagePoolArray(numSmallSubpagePools);
        for (int i = 0; i < smallSubpagePools.length; i ++) {
            smallSubpagePools[i] = newSubpagePoolHead(pageSize);
        }

        q100 = new PoolChunkList<T>(this, null, 100, Integer.MAX_VALUE, chunkSize);
        q075 = new PoolChunkList<T>(this, q100, 75, 100, chunkSize);
        q050 = new PoolChunkList<T>(this, q075, 50, 100, chunkSize);
        q025 = new PoolChunkList<T>(this, q050, 25, 75, chunkSize);
        q000 = new PoolChunkList<T>(this, q025, 1, 50, chunkSize);
        qInit = new PoolChunkList<T>(this, q000, Integer.MIN_VALUE, 25, chunkSize);

        q100.prevList(q075);
        q075.prevList(q050);
        q050.prevList(q025);
        q025.prevList(q000);
        q000.prevList(null);
        qInit.prevList(qInit);

        List<PoolChunkListMetric> metrics = new ArrayList<PoolChunkListMetric>(6);
        metrics.add(qInit);
        metrics.add(q000);
        metrics.add(q025);
        metrics.add(q050);
        metrics.add(q075);
        metrics.add(q100);
        chunkListMetrics = Collections.unmodifiableList(metrics);
    }

qInit, q000, q025, q050, q075, and q100 are the PoolChunkLists mentioned earlier. Their usage ranges overlap so that a PoolChunk does not bounce back and forth between lists.
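The effect of the overlapping ranges can be seen in a small model. In the sketch below the minUsage and maxUsage values are copied from the constructor above, but everything else is an assumption made for illustration: ChunkListSketch and its methods are hypothetical, usage is an integer percentage, a chunk moves forward when its usage reaches maxUsage and backward when it falls below minUsage, and a chunk that falls out of q000 is treated as leaving the pool (returned as -1) because q000 has no previous list.

```java
/** Models how a chunk moves between usage lists (illustration only). */
final class ChunkListSketch {
    static final String[] NAMES = {"qInit", "q000", "q025", "q050", "q075", "q100"};
    static final int[] MIN = {Integer.MIN_VALUE, 1, 25, 50, 75, 100};
    static final int[] MAX = {25, 50, 75, 100, 100, Integer.MAX_VALUE};

    /** Index of the list holding the chunk after an allocation raised its usage. */
    static int afterAllocate(int list, int usage) {
        while (list < NAMES.length - 1 && usage >= MAX[list]) {
            list++;
        }
        return list;
    }

    /** Index of the list holding the chunk after a free lowered its usage, or -1 if it leaves the pool. */
    static int afterFree(int list, int usage) {
        while (list > 0 && usage < MIN[list]) {
            if (list == 1) {
                return -1; // assumption: q000 has no previous list
            }
            list--;
        }
        return list;
    }

    public static void main(String[] args) {
        int list = 2; // a chunk sitting in q025 at about 50% usage
        list = afterAllocate(list, 55);
        list = afterFree(list, 45);
        System.out.println(NAMES[list]); // usage oscillating around 50% does not move the chunk
    }
}
```

If the ranges did not overlap, for example 25 to 50 and 50 to 75, the same oscillation around 50% would move the chunk on every allocate and free.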

Allocating memory

    private void allocate(PoolThreadCache cache, PooledByteBuf<T> buf, final int reqCapacity) {
        // below 512, round reqCapacity up to a multiple of 16; otherwise round up to a power of two
        final int normCapacity = normalizeCapacity(reqCapacity);
        if (isTinyOrSmall(normCapacity)) {
            // capacity < pageSize
            int tableIdx;
            PoolSubpage<T>[] table;
            boolean tiny = isTiny(normCapacity);
            if (tiny) {
                // < 512
                if (cache.allocateTiny(this, buf, reqCapacity, normCapacity)) {
                    // was able to allocate out of the cache so move on
                    return;
                }
                tableIdx = tinyIdx(normCapacity);
                table = tinySubpagePools;
            } else {
                if (cache.allocateSmall(this, buf, reqCapacity, normCapacity)) {
                    // was able to allocate out of the cache so move on
                    return;
                }
                tableIdx = smallIdx(normCapacity);
                table = smallSubpagePools;
            }

            final PoolSubpage<T> head = table[tableIdx];

            /**
             * Synchronize on the head. This is needed as {@link PoolChunk#allocateSubpage(int)} and
             * {@link PoolChunk#free(long)} may modify the doubly linked list as well.
             */
            synchronized (head) {
                final PoolSubpage<T> s = head.next;
                if (s != head) {
                    assert s.doNotDestroy && s.elemSize == normCapacity;
                    long handle = s.allocate();
                    assert handle >= 0;
                    s.chunk.initBufWithSubpage(buf, null, handle, reqCapacity);
                    incTinySmallAllocation(tiny);
                    return;
                }
            }
            synchronized (this) {
                allocateNormal(buf, reqCapacity, normCapacity);
            }

            incTinySmallAllocation(tiny);
            return;
        }
        if (normCapacity <= chunkSize) {
            if (cache.allocateNormal(this, buf, reqCapacity, normCapacity)) {
                // was able to allocate out of the cache so move on
                return;
            }
            synchronized (this) {
                allocateNormal(buf, reqCapacity, normCapacity);
                ++allocationsNormal;
            }
        } else {
            // Huge allocations are never served via the cache so just call allocateHuge
            allocateHuge(buf, reqCapacity);
        }
    }

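Allocation is first attempted from the cache and only falls back to the pool when that fails. Allocating from the pool requires a lock, whereas the cache belongs to a single thread and needs none. On free, memory is placed into the cache of the owning thread.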
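The general pattern of checking a per-thread cache before a locked shared pool can be sketched as follows. This is not how PoolThreadCache is implemented; ThreadCacheSketch is a hypothetical class that pools fixed-size byte arrays, uses a ThreadLocal ArrayDeque as the cache, and counts how often the locked path is taken.

```java
import java.util.ArrayDeque;

/** Thread-local cache in front of a lock-protected pool (illustration only). */
final class ThreadCacheSketch {
    private final ArrayDeque<byte[]> shared = new ArrayDeque<>(); // guarded by "this"
    private final ThreadLocal<ArrayDeque<byte[]>> cache =
            ThreadLocal.withInitial(ArrayDeque::new);
    private final int bufferSize;
    private final int maxCachedPerThread;
    int lockedAllocations; // how many allocations had to take the lock

    ThreadCacheSketch(int bufferSize, int maxCachedPerThread) {
        this.bufferSize = bufferSize;
        this.maxCachedPerThread = maxCachedPerThread;
    }

    byte[] allocate() {
        // Fast path: the cache belongs to the current thread, so no lock is needed.
        byte[] b = cache.get().pollLast();
        if (b != null) {
            return b;
        }
        // Slow path: fall back to the shared pool under a lock.
        synchronized (this) {
            lockedAllocations++;
            b = shared.pollLast();
            return b != null ? b : new byte[bufferSize];
        }
    }

    void free(byte[] b) {
        ArrayDeque<byte[]> local = cache.get();
        if (local.size() < maxCachedPerThread) {
            local.addLast(b); // keep it for the next allocation on this thread
            return;
        }
        synchronized (this) {
            shared.addLast(b);
        }
    }

    public static void main(String[] args) {
        ThreadCacheSketch pool = new ThreadCacheSketch(64, 1);
        byte[] a = pool.allocate();
        pool.free(a);
        byte[] b = pool.allocate();
        System.out.println(a == b);                 // the cached buffer is reused
        System.out.println(pool.lockedAllocations); // only the first allocation took the lock
    }
}
```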

Tiny and small requests are first served from the corresponding tiny(small)SubpagePools list. If that fails, allocateNormal is called to allocate a page.

    private void allocateNormal(PooledByteBuf<T> buf, int reqCapacity, int normCapacity) {
        if (q050.allocate(buf, reqCapacity, normCapacity) || q025.allocate(buf, reqCapacity, normCapacity) ||
            q000.allocate(buf, reqCapacity, normCapacity) || qInit.allocate(buf, reqCapacity, normCapacity) ||
            q075.allocate(buf, reqCapacity, normCapacity)) {
            return;
        }

        // Add a new chunk.
        PoolChunk<T> c = newChunk(pageSize, maxOrder, pageShifts, chunkSize);
        boolean success = c.allocate(buf, reqCapacity, normCapacity);
        assert success;
        qInit.add(c);
    }

For requests larger than chunkSize, PoolArena does not allocate from the pool.

    private void allocateHuge(PooledByteBuf<T> buf, int reqCapacity) {
        PoolChunk<T> chunk = newUnpooledChunk(reqCapacity);
        activeBytesHuge.add(chunk.chunkSize());
        buf.initUnpooled(chunk, reqCapacity);
        allocationsHuge.increment();
    }

Freeing memory

    void free(PoolChunk<T> chunk, ByteBuffer nioBuffer, long handle, int normCapacity, PoolThreadCache cache) {
        if (chunk.unpooled) {
            int size = chunk.chunkSize();
            destroyChunk(chunk);
            activeBytesHuge.add(-size);
            deallocationsHuge.increment();
        } else {
            SizeClass sizeClass = sizeClass(normCapacity);
            if (cache != null && cache.add(this, chunk, nioBuffer, handle, normCapacity, sizeClass)) {
                // cached so not free it.
                return;
            }

            freeChunk(chunk, handle, sizeClass, nioBuffer);
        }
    }

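Unpooled memory is released directly: depending on the memory type, heap memory is left to the GC, while direct memory has to be freed explicitly. Pooled memory is either added to the thread-local cache or returned to the pool.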