大数据入门:Spark+Kudu的广告业务项目实战笔记(六)

本章目标:将代码打包并运行在服务器上。

1.将数据放在HDFS上

先把Hadoop启动起来:

[hadoop@hadoop000 ~]$ cd app/

[hadoop@hadoop000 app]$ ls

apache-maven-3.6.3 hive-1.1.0-cdh5.15.1 spark-2.4.5-bin-hadoop2.6

hadoop-2.6.0-cdh5.15.1 jdk1.8.0_91 tmp

[hadoop@hadoop000 app]$ cd hadoop-2.6.0-cdh5.15.1/

[hadoop@hadoop000 hadoop-2.6.0-cdh5.15.1]$ ls

bin etc include LICENSE.txt README.txt src

bin-mapreduce1 examples lib logs sbin

cloudera examples-mapreduce1 libexec NOTICE.txt share

[hadoop@hadoop000 hadoop-2.6.0-cdh5.15.1]$ cd sbin/

[hadoop@hadoop000 sbin]$ ls

distribute-exclude.sh slaves.sh stop-all.sh

hadoop-daemon.sh start-all.cmd stop-balancer.sh

hadoop-daemons.sh start-all.sh stop-dfs.cmd

hdfs-config.cmd start-balancer.sh stop-dfs.sh

hdfs-config.sh start-dfs.cmd stop-secure-dns.sh

httpfs.sh start-dfs.sh stop-yarn.cmd

kms.sh start-secure-dns.sh stop-yarn.sh

Linux start-yarn.cmd yarn-daemon.sh

mr-jobhistory-daemon.sh start-yarn.sh yarn-daemons.sh

refresh-namenodes.sh stop-all.cmd

[hadoop@hadoop000 sbin]$ ./start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [hadoop000]

hadoop000: starting namenode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs/hadoop-hadoop-namenode-hadoop000.out

hadoop000: starting datanode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs/hadoop-hadoop-datanode-hadoop000.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs/hadoop-hadoop-secondarynamenode-hadoop000.out

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs/yarn-hadoop-resourcemanager-hadoop000.out

hadoop000: starting nodemanager, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs/yarn-hadoop-nodemanager-hadoop000.out

在HDFS上新建个目录,数据在HDFS上的规划是每天一个目录,YYYYMMDD格式:

[hadoop@hadoop000 sbin]$ hadoop fs -mkdir -p /tai/access/20181007

把data-test.json放到HDFS上:

[hadoop@hadoop000 sbin]$ hadoop fs -put ~/data/data-test.json /tai/access/20181007/

去50070端口瞅瞅:

成功

把ip.txt像之前一样上传到HDFS上:

hadoop fs -put ~/data/ip.txt /tai/access/

2.定时操作重构

每天跑一次,每天凌晨3点跑,需要传递一个需要处理的时间,在SparkApp.scala中:

package com.imooc.bigdata.cp08

import com.imooc.bigdata.cp08.business.{AppStatProcessor, AreaStatProcessor, LogETLProcessor, ProvinceCityStatProcessor}

import org.apache.commons.lang3.StringUtils

import org.apache.spark.internal.Logging

import org.apache.spark.sql.SparkSession

//整个项目的入口

object SparkApp extends Logging{

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder()

.master("local[2]")

.appName("SparkApp")

.getOrCreate()

//spark-submit ... --conf time=20181007

//spark框架只认spark开头的代码

val time = spark.sparkContext.getConf.get("spark.time")

if(StringUtils.isBlank(time)){

//若为空则不执行

logError("处理批次不能为空")

System.exit(0)

}

LogETLProcessor.process(spark)

ProvinceCityStatProcessor.process(spark)

AreaStatProcessor.process(spark)

AppStatProcessor.process(spark)

spark.stop()

}

}

由于要将名字改为表名+时间命名,需要增加一个DateUtils:

package com.imooc.bigdata.cp08.utils

import org.apache.spark.sql.SparkSession

object DateUtils {

def getTableName(tableName:String,spark:SparkSession)={

val time = spark.sparkContext.getConf.get("spark.time")

tableName + "_" + time

}

}

之后将所有的表名改为工具类的调用,举例来说:

val sourceTableName = DateUtils.getTableName("ods",spark)

val sinkTableName = DateUtils.getTableName("province_city_stat",spark)

之后需要制定两个源文件的路径:

val rawPath = spark.sparkContext.getConf.get("spark.raw.path")

var jsonDF = spark.read.json(rawPath)

val ipRulePath = spark.sparkContext.getConf.get("spark.ip.path")

val ipRowRDD = spark.sparkContext.textFile(ipRulePath)入参有:spark.time/spark.raw.path/spark.ip.path

另外在生产时spark应这样调用,不要硬编码,统一使用spark-submit提交时指定:

val spark = SparkSession.builder().getOrCreate()3.代码打包

用Maven打包代码:

将包传到服务器的~/lib目录下。

找到本地的kudu-client-1.7.0.jar和kudu-spark2_2.11-1.7.0.jar,也上传到服务器的~/lib下。

打开spark:

cd ~/app/spark-2.4.5-bin-hadoop2.6/sbin/

sh start-all.sh开始写spark提交脚本,先在本地测试:

vi job.shtime=20181007

${SPARK_HOME}/bin/spark-submit \

--class com.imooc.bigdata.cp08.SparkApp \

--master local \

--jars /home/hadoop/lib/kudu-client-1.7.0.jar,/home/hadoop/lib/kudu-spark2_2.11-1.7.0.jar \

--conf spark.time=$time \

--conf spark.raw.path="hdfs://hadoop000:8020/tai/access/$time" \

--conf spark.ip.path="hdfs://hadoop000:8020/tai/access/ip.txt" \

/home/hadoop/lib/sparksql-train-1.0.jar启动脚本:

sh job.sh查看结果:

数据已经成功上传。

4.Spark on Yarn

vi jobyarn.sh只要把--master 改成yarn即可:

time=20181007

${SPARK_HOME}/bin/spark-submit \

--class com.imooc.bigdata.cp08.SparkApp \

--master yarn \

--jars /home/hadoop/lib/kudu-client-1.7.0.jar,/home/hadoop/lib/kudu-spark2_2.11-1.7.0.jar \

--conf spark.time=$time \

--conf spark.raw.path="hdfs://hadoop000:8020/tai/access/$time" \

--conf spark.ip.path="hdfs://hadoop000:8020/tai/access/ip.txt" \

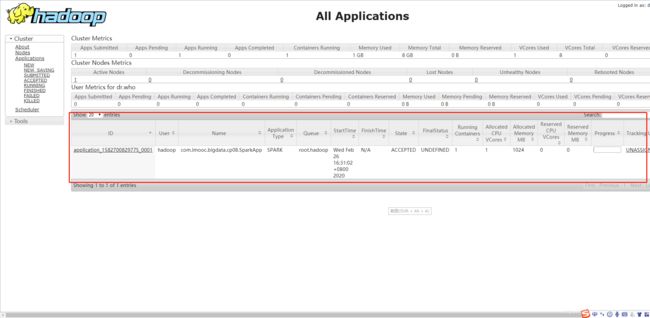

/home/hadoop/lib/sparksql-train-1.0.jarsh jobyarn.sh去8088看一下yarn:

已经在跑啦~

5.定时调度

调度框架:crontab/Azkaban/Ooize...

本项目使用crontab,crontab表达式的工具在这里。设定一小时运行一次的表达式:

0 */1 * * *crontab -e 编辑

crontab -l 查看

crontab -r 删除若需要凌晨三点开始执行作业,执行的是昨天的数据,其中昨天的表示命令为:

[hadoop@hadoop000 lib]$ date --date='1 day ago' +%Y%m%d

20200225

修改脚本:

time=`date --date='1 day ago' +%Y%m%d`

${SPARK_HOME}/bin/spark-submit \

--class com.imooc.bigdata.cp08.SparkApp \

--master local \

--jars /home/hadoop/lib/kudu-client-1.7.0.jar,/home/hadoop/lib/kudu-spark2_2.11-1.7.0.jar \

--conf spark.time=$time \

--conf spark.raw.path="hdfs://hadoop000:8020/tai/access/$time" \

--conf spark.ip.path="hdfs://hadoop000:8020/tai/access/ip.txt" \

/home/hadoop/lib/sparksql-train-1.0.jar

设定crontab:

crontab -e

0 3 * * * /home/hadoop/lib/job.sh

搞定啦~