[Spark] Spark Transformation Operators Explained

Table of Contents

- 1. Introduction

- 2. Transformation Operators in Detail

- map, flatMap, distinct

- coalesce and repartition

- randomSplit

- glom

- union

- subtract

- intersection

- mapPartitions

- mapPartitionsWithIndex

- zip

- zipPartitions

- zipWithIndex

- zipWithUniqueId

- join

- leftOuterJoin

- cogroup

- Transformation Operators for Key-Value Pairs

- reduceByKey[Pair]

- groupByKey()[Pair]

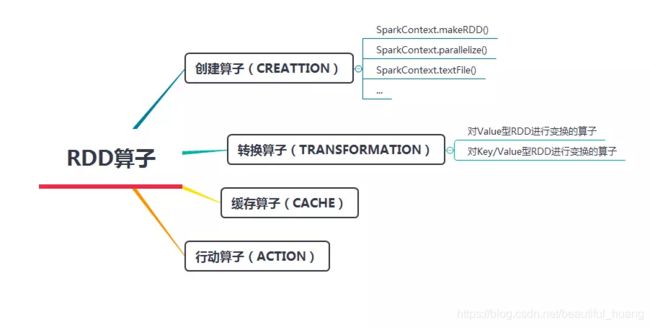

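1. Introduction

A transformation operator is an interface function that operates on RDDs: it turns one or more RDDs into a new RDD.

When computing with Spark, once a creation operator has produced the initial RDD, the core of the algorithm design and of the program itself is a chain of transformations applied step by step to that RDD until the desired result is reached.

Transformation operators fall into two groups:

- operators that transform value-type RDDs;

- operators that transform key/value-type RDDs.

Within each group, some operators transform a single RDD and others combine two RDDs.

2. Transformation Operators in Detail

map, flatMap, distinct

map: applies the given function to every element of an RDD, mapping each one to a new element.

Input and output partitions correspond one to one: there are exactly as many output partitions as input partitions [the partition count does not change].

flatMap: like map, except that the function returns a collection for each element, and all of those collections are flattened into a single collection. [The partition count does not change.]

Note: when the elements being flattened are String values (for example in an Array[String]), each String is treated as a sequence of characters.

distinct: removes duplicate elements from the RDD.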

map

scala> val a = sc.parallelize(List("dog","salmon","salmon","rat","elephant"),3)

a: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[0] at parallelize at :24

scala> a.partitions.size

res0: Int = 3

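// glom gathers the elements of each partition into a single array

// glom is a transformation: it does not submit a job on its own and only describes an intermediate step; the collect action is what triggers execution.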

scala> a.glom.collect

res2: Array[Array[String]] = Array(Array(dog), Array(salmon, salmon), Array(rat, elephant))

scala> a.map(_.length).glom.collect

res4: Array[Array[Int]] = Array(Array(3), Array(6, 6), Array(3, 8))

flatMap

scala> val b = Array(Array("hello","world"),Array("how","are","you?"),Array("ni","hao"),Array("hello","tom"))

scala> val add = b.flatMap(x=>x)

add: Array[String] = Array(hello, world, how, are, you?, ni, hao, hello, tom)
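The flatMap transcript above runs on a local Scala Array rather than on an RDD. As a minimal sketch of the same idea on an RDD, the following contrasts map with flatMap and shows the String-as-characters note in action. The sample sentences and the application name are made up for illustration, and the local[2] master is an assumption for running outside spark-shell (inside spark-shell, getOrCreate simply returns the existing context).

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Assumption: a local master for standalone runs; spark-shell reuses its own context.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("flatMap-sketch"))

val lines = sc.parallelize(Seq("hello world", "how are you"), 2)

// map keeps one output element per input element: one Array[String] per line.
val nested = lines.map(_.split(" "))
// flatMap flattens those per-element collections into a single RDD[String].
val words = lines.flatMap(_.split(" "))

println(nested.count())                  // 2
println(words.collect().mkString(", "))  // hello, world, how, are, you
println(words.getNumPartitions)          // 2, same as the input

// A String is itself a sequence of Char, so flattening strings yields characters.
val chars = sc.parallelize(Seq("ab", "cd"), 2).flatMap(_.toList)
println(chars.collect().mkString(", "))  // a, b, c, d
```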

distinct

scala> a.distinct.collect

res22: Array[String] = Array(rat, salmon, elephant, dog)

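coalesce and repartition

Both change the number of partitions of an RDD, that is, they repartition it.

def coalesce(numPartitions: Int, shuffle: Boolean = false): RDD[T]

def repartition(numPartitions: Int): RDD[T]

coalesce changes the number of partitions and returns a new RDD. It takes two parameters: the first is the target number of partitions, the second is shuffle, a Boolean that defaults to false. repartition(numPartitions) is equivalent to coalesce(numPartitions, shuffle = true).

If the new partition count is smaller than that of the original RDD, shuffle can stay false.

Important: if the new partition count is larger than that of the original RDD, shuffle must be true; with shuffle = false the partition count is left unchanged.

In practice: after a filter or a similar reducing operation, the partitions of the new RDD may hold far less data than before; that is a good point to consider repartitioning.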
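The filter-then-repartition pattern can be sketched as follows. This is only an illustration under assumed numbers (1000 elements, 8 partitions, a filter that keeps 1 in 100); the application name and the local[2] master are likewise assumptions for running outside spark-shell.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Assumption: a local master for standalone runs; spark-shell reuses its own context.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("coalesce-sketch"))

val numbers = sc.parallelize(1 to 1000, 8)

// The filter keeps 10 of 1000 elements but still leaves 8 (now sparse) partitions.
val rare = numbers.filter(_ % 100 == 0)

// Shrinking the partition count needs no shuffle.
val compact = rare.coalesce(2)

// Growing it again requires a shuffle, which repartition always performs.
val widened = compact.repartition(4)

println(rare.getNumPartitions)     // 8
println(compact.getNumPartitions)  // 2
println(widened.getNumPartitions)  // 4
```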

coalesce

scala> val add = sc.parallelize(1 to 10)

add: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[4] at parallelize at :24

scala> add.partitions.size

res23: Int = 4

scala> val add1 = add.coalesce(5,false)

add1: org.apache.spark.rdd.RDD[Int] = CoalescedRDD[10] at coalesce at :25

scala> add1.partitions.size

res24: Int = 4

scala> val add1 = add.coalesce(5,true)

add1: org.apache.spark.rdd.RDD[Int] = MapPartitionsRDD[14] at coalesce at :25

scala> add1.partitions.size

res25: Int = 5

repartition

scala> add1.glom.collect

res26: Array[Array[Int]] = Array(Array(5), Array(1), Array(2, 6, 8), Array(3, 7, 9), Array(4, 10))

scala> add1.repartition(3).glom.collect

res28: Array[Array[Int]] = Array(Array(8, 7, 10), Array(5, 2, 9), Array(1, 6, 3, 4))

randomSplit

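Randomly splits an RDD into several RDDs.

def randomSplit(weights: Array[Double], seed: Long = Utils.random.nextLong): Array[RDD[T]]

Splits an RDD at random into several smaller RDDs according to an array of weights; each weight specifies the fraction of the total elements assigned to the corresponding smaller RDD.

Note: the actual size of each smaller RDD is only approximately equal to the fraction given in the weight array.

Use case: drawing data samples for a total-order sort in Hadoop.

// rdd must stay an RDD here: appending .collect would turn it into a local Array[Int], which has no randomSplit method

scala> val rdd = sc.makeRDD(1 to 10)

scala> val rdd0 = rdd.randomSplit(Array(0.1,0.2,0.7))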

rdd0: Array[org.apache.spark.rdd.RDD[Int]] = Array(

MapPartitionsRDD[17] at randomSplit at <console>:26,

MapPartitionsRDD[18] at randomSplit at <console>:26,

MapPartitionsRDD[19] at randomSplit at <console>:26)

scala> rdd0(0).collect

res16: Array[Int] = Array(9)

scala> rdd0(1).collect

res17: Array[Int] = Array(8)

scala> rdd0(2).collect

res18: Array[Int] = Array(1, 2, 3, 4, 5, 6, 7, 10)
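The transcript above relies on the default random seed, so its output differs from run to run. As a rough sketch, passing an explicit seed makes the split reproducible, and the pieces always add up to the original data. The 80/20 weights, the seed value 42 and the names train/test are illustrative assumptions, as are the application name and the local[2] master.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Assumption: a local master for standalone runs; spark-shell reuses its own context.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("randomSplit-sketch"))

val data = sc.parallelize(1 to 1000, 4)

// Fixing the seed makes the split reproducible for the same RDD.
val Array(train, test) = data.randomSplit(Array(0.8, 0.2), seed = 42L)
val Array(train2, test2) = data.randomSplit(Array(0.8, 0.2), seed = 42L)

val trainCount = train.count()
val testCount = test.count()

// The sizes are only approximately 800 and 200.
println(s"train=$trainCount test=$testCount")
// Every element lands in exactly one of the splits.
println(trainCount + testCount == data.count())
// Same seed on the same RDD gives the same split.
println(train.collect().sameElements(train2.collect()))
```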

glom

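Returns the elements of each partition as an array. It is typically used to check how the data is distributed across partitions when working with parallelism settings.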

scala> val z = sc.makeRDD(1 to 15,3).glom.collect

z: Array[Array[Int]] = Array(Array(1, 2, 3, 4, 5), Array(6, 7, 8, 9, 10), Array(11, 12, 13, 14, 15))

union

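Union: merges two RDDs without removing duplicates.

Note: after union, the number of partitions is the sum of the partition counts of the two RDDs.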

scala> val x = sc.makeRDD(1 to 15,3)

x: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[29] at makeRDD at <console>:24

scala> val y = sc.makeRDD(10 to 18,3)

y: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[30] at makeRDD at <console>:24

scala> x.union(y).collect

res30: Array[Int] = Array(1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 10, 11, 12, 13, 14, 15, 16, 17, 18)

scala> x.union(y).glom.collect

res29: Array[Array[Int]] = Array(Array(1, 2, 3, 4, 5), Array(6, 7, 8, 9, 10), Array(11, 12, 13, 14, 15), Array(10, 11, 12), Array(13, 14, 15), Array(16, 17, 18))

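subtract

Set difference: returns the elements of the first RDD that do not appear in the second.

Note: after subtract, the result has the same number of partitions as the first RDD.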

scala> val z = sc.makeRDD(1 to 12,4)

z: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[34] at makeRDD at :24

scala> val m = sc.makeRDD(5 to 8,2)

m: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[35] at makeRDD at :24

scala> z.subtract(m).glom.collect

res31: Array[Array[Int]] = Array(Array(4, 12), Array(1, 9), Array(2, 10), Array(3, 11))

intersection

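Intersection.

Returns the elements common to both RDDs, with duplicates removed.

Note: after intersection, the resulting RDD has as many partitions as the larger of the two inputs.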

scala> val z = sc.makeRDD(1 to 12,4)

z: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at makeRDD at :24

scala> val m = sc.makeRDD(5 to 8,2)

m: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[1] at makeRDD at :24

scala> z.intersection(m).glom.collect

res0: Array[Array[Int]] = Array(Array(8), Array(5), Array(6), Array(7))

mapPartitions

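Operates on each partition as a whole.

def mapPartitions[U: ClassTag](f: Iterator[T] => Iterator[U], preservesPartitioning: Boolean = false): RDD[U]

This is a specialized map whose function is called only once per partition.

Through the input parameter (Iterator[T]), the entire content of a partition is available as a sequential stream of values.

The custom function must return another iterator, Iterator[U]. The resulting iterators are combined and automatically converted into the new RDD.

In practice: when processing an RDD involves database access, use mapPartitions so that the database connection object (conn) is instantiated once per partition rather than once per element.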

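val a = sc.parallelize(1 to 9,3)

a.mapPartitions(

x=>{

// pair each element with its successor inside the same partition

var m = List[(Int,Int)]()

if(x.hasNext){ // an empty partition has no first element

var pre = x.next

while(x.hasNext){

val cur = x.next

m = (pre,cur) :: m // prepend and reassign; a bare m.::((pre,cur)) discards the new list

pre = cur

}

}

m.iterator}).collect

// result: Array((2,3), (1,2), (5,6), (4,5), (8,9), (7,8))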
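The one-connection-per-partition advice can be illustrated without a real database. The sketch below is a local simulation in plain Scala: FakeConnection, connectionsOpened and enrichPartition are hypothetical names invented here, and three ordinary iterators stand in for the partitions of sc.parallelize(1 to 9, 3). The function has the Iterator[T] => Iterator[U] shape that mapPartitions expects, so in Spark it would be passed as rdd.mapPartitions(enrichPartition).

```scala
// Counter used only by this local demonstration; on a cluster every executor JVM
// would have its own copy, so it is not a way to count connections in production.
var connectionsOpened = 0

// Hypothetical stand-in for a database client (not a Spark or JDBC class).
final class FakeConnection {
  connectionsOpened += 1
  def lookup(id: Int): String = s"row-$id"
  def close(): Unit = ()
}

// Same shape as the function argument of mapPartitions: Iterator[T] => Iterator[U].
def enrichPartition(ids: Iterator[Int]): Iterator[String] = {
  val conn = new FakeConnection   // one connection for the whole partition
  try {
    // Iterators are lazy: materialize the results before the connection is closed.
    ids.map(conn.lookup).toList.iterator
  } finally {
    conn.close()
  }
}

// Three plain iterators simulate the three partitions.
val partitions = List(Iterator(1, 2, 3), Iterator(4, 5, 6), Iterator(7, 8, 9))
val rows = partitions.flatMap(enrichPartition)

println(rows.mkString(", "))   // row-1 through row-9, in order
println(connectionsOpened)     // 3: one per partition, not one per element
```

Calling toList before close is the important design choice: returning the lazy iterator directly would let Spark consume it only after the connection had already been closed.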

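mapPartitionsWithIndex

def mapPartitionsWithIndex[U: ClassTag](f: (Int, Iterator[T]) => Iterator[U], preservesPartitioning: Boolean = false): RDD[U]

Similar to mapPartitions, but the function takes two arguments.

The first argument is the index of the partition; the second is an iterator over all the items in that partition.

The output is an iterator over the items produced by whatever transformation the function applies.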

scala> val x = sc.makeRDD(1 to 10, 3)

x: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[4] at makeRDD at :24

scala> x.mapPartitionsWithIndex{

| (index,partitionIterator)=>{

| val partitionMap = scala.collection.mutable.Map[Int,List[Int]]()

| var partitionList = List[Int]()

| while(partitionIterator.hasNext){

| partitionList = partitionIterator.next :: partitionList

| }

| partitionMap(index)=partitionList

| partitionMap.iterator}}.collect

res8: Array[(Int, List[Int])] = Array((0,List(3, 2, 1)), (1,List(6, 5, 4)), (2,List(10, 9, 8, 7)))

zip

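Joins two RDDs by pairing the i-th element of each partition of one RDD with the i-th element of the corresponding partition of the other. The resulting RDD consists of two-component tuples.

Note:

- the two RDDs may hold different data types;

- both RDDs must have the same number of partitions;

- corresponding partitions must contain the same number of elements.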

scala> val x = sc.parallelize(1 to 15,3)

x: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[6] at parallelize at :24

scala> val y = sc.parallelize(11 to 25,3)

y: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[7] at parallelize at :24

scala> x.zip(y).glom.collect

res9: Array[Array[(Int, Int)]] = Array(Array((1,11), (2,12), (3,13), (4,14), (5,15)), Array((6,16), (7,17), (8,18), (9,19), (10,20)), Array((11,21), (12,22), (13,23), (14,24), (15,25)))

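zipPartitions

Similar to zip. Requirement: all of the RDDs must have the same number of partitions. Unlike zip, the partitions do not need to contain the same number of elements, because the supplied function receives one iterator per RDD and decides how to combine them.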

scala> val a = sc.parallelize(0 to 9,3)

scala> val b = sc.parallelize(10 to 19,3)

scala> val c = sc.parallelize(100 to 109,3)

scala> a.zipPartitions(b,c){

| (a1,b1,c1)=>{

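| var res = List[String]()

| while(a1.hasNext && b1.hasNext && c1.hasNext){

| val x = s"${a1.next} ${b1.next} ${c1.next}"

| res = res :+ x} // reassign: a bare res :+ x discards the new list and leaves res empty

| res.iterator

| }}.collect

res12: Array[String] = Array(0 10 100, 1 11 101, 2 12 102, 3 13 103, 4 14 104, 5 15 105, 6 16 106, 7 17 107, 8 18 108, 9 19 109)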

zipWithIndex

def zipWithIndex(): RDD[(T, Long)]

Pairs each element of the existing RDD with its index, producing a new RDD[(T, Long)].

scala> val a = sc.parallelize(1 to 15,3)

scala> a.zipWithIndex.collect

res27: Array[(Int, Long)] = Array((1,0), (2,1), (3,2), (4,3), (5,4), (6,5), (7,6), (8,7), (9,8), (10,9), (11,10), (12,11), (13,12), (14,13), (15,14))

zipWithUniqueId

scala> val am = sc.parallelize(100 to 120,5)

scala> am.zipWithUniqueId.collect

res29: Array[(Int, Long)] = Array((100,0), (101,5), (102,10), (103,15), (104,1), (105,6), (106,11), (107,16), (108,2), (109,7), (110,12), (111,17), (112,3), (113,8), (114,13), (115,18), (116,4), (117,9), (118,14), (119,19), (120,24))

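// Numbering rule (this RDD has 5 partitions):

Step 1: the first element of each partition receives the partition's index as its ID:

first element of partition 1: 0

first element of partition 2: 1

first element of partition 3: 2

first element of partition 4: 3

first element of partition 5: 4

Step 2: within a partition, every following element receives the previous ID plus the number of partitions (5):

partition 1: second element 0+5, third element 5+5, fourth element 10+5

0,5,10,15

partition 2: second element 1+5, third element 6+5, fourth element 11+5

1,6,11,16

partition 3: second element 2+5, third element 7+5, fourth element 12+5

2,7,12,17

partition 4: second element 3+5, third element 8+5, fourth element 13+5

3,8,13,18

partition 5: second element 4+5, third element 9+5, fourth element 14+5, fifth element 19+5

4,9,14,19,24

In general, the element at position k (counting from 0) of partition i (counting from 0) in an RDD with n partitions receives the ID k*n + i.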
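The numbering rule above boils down to one formula: the element at position k (from 0) of partition i (from 0), out of n partitions, gets the ID k * n + i. The following plain-Scala sketch models only that arithmetic; it does not call Spark, and uniqueIds is a hypothetical helper named here for illustration. The partition sizes 4, 4, 4, 4, 5 mirror how the 21 elements of 100 to 120 were distributed in the transcript.

```scala
// Model of the zipWithUniqueId numbering: id = k * n + i,
// where i is the partition index, k the position inside it, n the partition count.
def uniqueIds(partitionSizes: Seq[Int]): Seq[Seq[Long]] = {
  val n = partitionSizes.length
  partitionSizes.zipWithIndex.map { case (size, i) =>
    (0 until size).map(k => k.toLong * n + i)
  }
}

val ids = uniqueIds(Seq(4, 4, 4, 4, 5))
ids.foreach(partition => println(partition.mkString(",")))
// 0,5,10,15
// 1,6,11,16
// 2,7,12,17
// 3,8,13,18
// 4,9,14,19,24
```

The IDs are unique but not consecutive: partitions of unequal size leave gaps (20 to 23 are unused here), which is the price for not having to count the elements of earlier partitions first.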

join

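Performs an inner join of two RDDs, pairing the values that share the same key. Note that the transcript below runs rightOuterJoin, which keeps every key of the second RDD and reports None where the first RDD has no match.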

def join[W](other: RDD[(K, W)]): RDD[(K, (V, W))]

def join[W](other: RDD[(K, W)], numPartitions: Int): RDD[(K, (V, W))]

def join[W](other: RDD[(K, W)], partitioner: Partitioner): RDD[(K, (V, W))]

scala> val a = sc.parallelize(List("dog", "salmon", "salmon", "rat", "elephant"), 3)

scala> a.keyBy(_.length).collect

res30: Array[(Int, String)] = Array((3,dog), (6,salmon), (6,salmon), (3,rat), (8,elephant))

scala> val c = sc.parallelize(List("dog","cat","gnu","salmon","rabbit","turkey","wolf","bear","bee"), 3)

scala> a.keyBy(_.length).rightOuterJoin(c.keyBy(_.length)).glom.collect

res32: Array[Array[(Int, (Option[String], String))]] = Array(Array((6,(Some(salmon),salmon)), (6,(Some(salmon),rabbit)), (6,(Some(salmon),turkey)), (6,(Some(salmon),salmon)), (6,(Some(salmon),rabbit)), (6,(Some(salmon),turkey)), (3,(Some(dog),dog)), (3,(Some(dog),cat)), (3,(Some(dog),gnu)), (3,(Some(dog),bee)), (3,(Some(rat),dog)), (3,(Some(rat),cat)), (3,(Some(rat),gnu)), (3,(Some(rat),bee))), Array((4,(None,wolf)), (4,(None,bear))), Array())
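The transcript above uses rightOuterJoin, so for comparison here is a minimal sketch of a plain inner join, where only keys present in both RDDs survive. The word lists are shortened, made-up inputs; the application name and the local[2] master are assumptions for running outside spark-shell.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Assumption: a local master for standalone runs; spark-shell reuses its own context.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("join-sketch"))

// Key every word by its length.
val left = sc.parallelize(Seq("dog", "salmon", "rat", "elephant")).keyBy(_.length)
val right = sc.parallelize(Seq("cat", "rabbit", "wolf")).keyBy(_.length)

// Inner join: key 8 (left only) and key 4 (right only) are dropped.
// The output order of a join is not guaranteed, so sort before printing.
val joined = left.join(right).collect().sorted

joined.foreach(println)
// (3,(dog,cat))
// (3,(rat,cat))
// (6,(salmon,rabbit))
```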

leftOuterJoin

Joins two RDDs while keeping every key of the first (left) RDD; where the second RDD has no matching key, the paired value is None (left outer join).

scala> a.keyBy(_.length).leftOuterJoin(c.keyBy(_.length)).glom.collect

res33: Array[Array[(Int, (String, Option[String]))]] = Array(Array((6,(salmon,Some(salmon))), (6,(salmon,Some(rabbit))), (6,(salmon,Some(turkey))), (6,(salmon,Some(salmon))), (6,(salmon,Some(rabbit))), (6,(salmon,Some(turkey))), (3,(dog,Some(dog))), (3,(dog,Some(cat))), (3,(dog,Some(gnu))), (3,(dog,Some(bee))), (3,(rat,Some(dog))), (3,(rat,Some(cat))), (3,(rat,Some(gnu))), (3,(rat,Some(bee)))), Array(), Array((8,(elephant,None))))

cogroup

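Groups the values of several key-value RDDs by key, much like a full outer join: every key found in any input appears once, together with one collection of values per input RDD (empty where that RDD lacks the key). The example below combines three key-value RDDs.

scala> val x = sc.parallelize(List((1, "apple"), (2, "banana"), (3, "orange"), (4, "kiwi")), 2)

scala> val y = sc.parallelize(List((5, "computer"), (1, "laptop"), (1, "desktop"), (4, "iPad")), 2)

scala> val z = sc.parallelize(List((1,"say"),(3,"hello"),(5,"6")))

scala> x.cogroup(y,z).collect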

res1: Array[(Int, (Iterable[String], Iterable[String], Iterable[String]))] =

Array((4,(CompactBuffer(kiwi),CompactBuffer(iPad),CompactBuffer())),

(1,(CompactBuffer(apple),CompactBuffer(laptop, desktop),CompactBuffer(say))),

(5,(CompactBuffer(),CompactBuffer(computer),CompactBuffer(6))),

(2,(CompactBuffer(banana),CompactBuffer(),CompactBuffer())),

(3,(CompactBuffer(orange),CompactBuffer(),CompactBuffer(hello))))

Transformation Operators for Key-Value Pairs

reduceByKey[Pair]

def reduceByKey(func: (V, V) => V): RDD[(K, V)]

Merges the values that share the same key by applying func to them.

scala> val a = sc.parallelize(List("dog", "tiger", "lion", "cat", "panther", "eagle"), 2)

a: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[53] at parallelize at :24

scala> val b = a.map(x=>(x.length,x))

b: org.apache.spark.rdd.RDD[(Int, String)] = MapPartitionsRDD[54] at map at :28

scala> b.collect

res33: Array[(Int, String)] = Array((3,dog), (5,tiger), (4,lion), (3,cat), (7,panther), (5,eagle))

scala> b.reduceByKey(_+_).collect

res34: Array[(Int, String)] = Array((4,lion), (3,dogcat), (7,panther), (5,tigereagle))

groupByKey()[Pair]

Groups the values that share the same key; the return type is RDD[(K, Iterable[V])].
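As a minimal sketch, groupByKey can be applied to the same word list used in the reduceByKey example. The application name and the local[2] master are assumptions for running outside spark-shell; the sorting is added only to make the printed output stable, since neither the order of keys nor the order of values within a group is guaranteed.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Assumption: a local master for standalone runs; spark-shell reuses its own context.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("groupByKey-sketch"))

val words = sc.parallelize(List("dog", "tiger", "lion", "cat", "panther", "eagle"), 2)
val byLength = words.map(w => (w.length, w))

// groupByKey gathers all values of a key into one Iterable[V].
val grouped = byLength.groupByKey()
  .mapValues(_.toList.sorted)   // sort values for a stable display
  .collect()
  .sortBy(_._1)                 // sort keys for a stable display

grouped.foreach(println)
// (3,List(cat, dog))
// (4,List(lion))
// (5,List(eagle, tiger))
// (7,List(panther))
```

For pure aggregations such as the string concatenation shown with reduceByKey, reduceByKey is usually the better fit: it combines values inside each partition before the shuffle, whereas groupByKey sends every value across the network.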