Installing YARN on pseudo-distributed Hadoop (CDH 5.16.2)

YARN installation

- Configure etc/hadoop/mapred-site.xml so that MapReduce jobs run on YARN:

```xml
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
```

- Configure etc/hadoop/yarn-site.xml:

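```xml
<configuration>
    <!-- Shuffle service that NodeManagers run for MapReduce jobs -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <!-- ResourceManager web UI address: the default port is 8088, changed to 38088 to make it less of a target for attacks -->
    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>ruozedata001:38088</value>
    </property>
</configuration>
```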

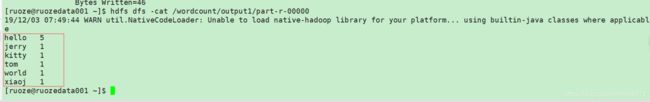
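To double-check which value a *-site.xml file actually contains, a property can be read back out of it. The sketch below uses a hypothetical helper, `get_prop` (not a Hadoop command); it assumes the simple layout used above, with each `<name>` on its own line followed by its `<value>`, and it is a text scan rather than a real XML parser.

```shell
# get_prop: hypothetical helper, not part of Hadoop.
# Prints the <value> of the first property whose <name> matches exactly.
# Assumes one element per line (or name and value on the same line).
get_prop() {
  local file name
  file=$1
  name=$2
  awk -v n="<name>$name</name>" '
    index($0, n) { found = 1 }       # exact match on the full name element
    found && /<value>/ {
      sub(/.*<value>/, "")           # drop everything up to <value>
      sub(/<\/value>.*/, "")         # drop </value> and what follows
      print
      exit
    }
  ' "$file"
}

# Example: show the web UI address configured above.
# get_prop etc/hadoop/yarn-site.xml yarn.resourcemanager.webapp.address
```

Matching the whole `<name>NAME</name>` string, instead of just the property name, avoids confusing `yarn.resourcemanager.webapp.address` with longer names such as `yarn.resourcemanager.webapp.https.address`.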

Running the bundled wordcount example

- First, find the jar that contains the wordcount example:

```
[ruoze@ruozedata001 hadoop]$ find ./ -name '*example*.jar'
./share/hadoop/mapreduce1/hadoop-examples-2.6.0-mr1-cdh5.16.2.jar
./share/hadoop/mapreduce2/sources/hadoop-mapreduce-examples-2.6.0-cdh5.16.2-test-sources.jar
./share/hadoop/mapreduce2/sources/hadoop-mapreduce-examples-2.6.0-cdh5.16.2-sources.jar
./share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.16.2.jar
```

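- Run the last one, the MapReduce v2 (YARN) examples jar. The mapreduce1 jar is built for the old MRv1 framework, and the two sources jars contain only source code. The input file must already be in HDFS, and the output directory must not exist yet, otherwise the job fails:

```
hadoop jar app/hadoop/share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.16.2.jar wordcount /wordcount/input1/b.txt /wordcount/output1
```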

- Job output:

```
19/12/03 07:43:54 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032
19/12/03 07:43:54 INFO input.FileInputFormat: Total input paths to process : 1
19/12/03 07:43:54 INFO mapreduce.JobSubmitter: number of splits:1
19/12/03 07:43:55 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1575329467615_0001
19/12/03 07:43:55 INFO impl.YarnClientImpl: Submitted application application_1575329467615_0001
19/12/03 07:43:55 INFO mapreduce.Job: The url to track the job: http://ruozedata001:38088/proxy/application_1575329467615_0001/
19/12/03 07:43:55 INFO mapreduce.Job: Running job: job_1575329467615_0001
19/12/03 07:44:00 INFO mapreduce.Job: Job job_1575329467615_0001 running in uber mode : false
19/12/03 07:44:00 INFO mapreduce.Job: map 0% reduce 0%
19/12/03 07:44:03 INFO mapreduce.Job: map 100% reduce 0%
19/12/03 07:44:07 INFO mapreduce.Job: map 100% reduce 100%
19/12/03 07:44:07 INFO mapreduce.Job: Job job_1575329467615_0001 completed successfully
19/12/03 07:44:07 INFO mapreduce.Job: Counters: 49
    File System Counters
        FILE: Number of bytes read=76
        FILE: Number of bytes written=286197
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=170
        HDFS: Number of bytes written=46
        HDFS: Number of read operations=6
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=1406
        Total time spent by all reduces in occupied slots (ms)=1576
        Total time spent by all map tasks (ms)=1406
        Total time spent by all reduce tasks (ms)=1576
        Total vcore-milliseconds taken by all map tasks=1406
        Total vcore-milliseconds taken by all reduce tasks=1576
        Total megabyte-milliseconds taken by all map tasks=1439744
        Total megabyte-milliseconds taken by all reduce tasks=1613824
    Map-Reduce Framework
        Map input records=5
        Map output records=10
        Map output bytes=98
        Map output materialized bytes=76
        Input split bytes=112
        Combine input records=10
        Combine output records=6
        Reduce input groups=6
        Reduce shuffle bytes=76
        Reduce input records=6
        Reduce output records=6
        Spilled Records=12
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=74
        CPU time spent (ms)=670
        Physical memory (bytes) snapshot=489766912
        Virtual memory (bytes) snapshot=5547458560
        Total committed heap usage (bytes)=479199232
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=58
    File Output Format Counters
        Bytes Written=46
```
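The job's result and the record counters above can be cross-checked against a local copy of the input file. The sketch below is illustrative, not Hadoop code: `local_wordcount` and `wordcount_record_counts` are hypothetical helper names, and both assume words are separated by spaces or tabs (WordCount's StringTokenizer additionally splits on `\r` and `\f`). On the cluster, the real result lives in the reducer's output file, which can be printed with `hdfs dfs -cat /wordcount/output1/part-r-00000`.

```shell
# local_wordcount: hypothetical helper. Print "word<TAB>count" sorted by word
# in byte order, the same layout as WordCount's part-r-00000 output file.
local_wordcount() {
  tr -s ' \t' '\n\n' < "$1" |      # one word per line
    sed '/^$/d' |                  # drop empty lines
    LC_ALL=C sort |                # byte order, like Hadoop's Text keys
    uniq -c |
    awk '{ printf "%s\t%s\n", $2, $1 }'
}

# wordcount_record_counts: hypothetical helper. Record counts one WordCount
# map task would report for this file:
#   map_input      ~ "Map input records"      (one record per line)
#   map_output     ~ "Map output records"     (one (word, 1) pair per word)
#   combine_output ~ "Combine output records" (one pair per distinct word,
#                    for a small input that is spilled only once)
wordcount_record_counts() {
  awk '
    { words += NF; for (i = 1; i <= NF; i++) seen[$i] = 1 }
    END {
      for (w in seen) distinct++
      printf "map_input=%d map_output=%d combine_output=%d\n", NR, words, distinct
    }
  ' "$1"
}
```

Read against the counters above, Map input records=5, Map output records=10 and Combine output records=6 mean that b.txt has 5 lines, 10 words and 6 distinct words; the combiner has already collapsed the 10 pairs into 6, which is why the reducer receives and writes 6 records.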

Changing the machine's hostname

Run the following as root:

```
[root@JD ~]# hostnamectl
   Static hostname: JD
         Icon name: computer-vm
           Chassis: vm
        Machine ID: 983e7d6ed0624a2499003862230af382
           Boot ID: c78cf2bffbea43d8a88110b3b8fa0c5f
    Virtualization: kvm
  Operating System: CentOS Linux 7 (Core)
       CPE OS Name: cpe:/o:centos:centos:7
            Kernel: Linux 3.10.0-327.el7.x86_64
      Architecture: x86-64
[root@JD ~]# hostnamectl --help
hostnamectl [OPTIONS...] COMMAND ...

Query or change system hostname.

  -h --help              Show this help
     --version           Show package version
     --no-ask-password   Do not prompt for password
  -H --host=[USER@]HOST  Operate on remote host
  -M --machine=CONTAINER Operate on local container
     --transient         Only set transient hostname
     --static            Only set static hostname
     --pretty            Only set pretty hostname

Commands:
  status                 Show current hostname settings
  set-hostname NAME      Set system hostname
  set-icon-name NAME     Set icon name for host
  set-chassis NAME       Set chassis type for host
  set-deployment NAME    Set deployment environment for host
  set-location NAME      Set location for host
[root@JD ~]# hostnamectl set-hostname ruozedata001
[root@ruozedata001 ~]# cat /etc/hostname
ruozedata001
```

Then map the new hostname to the machine's IP address in /etc/hosts:

```
[root@ruozedata001 ~]# vi /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.0.3 ruozedata001
```
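After editing /etc/hosts it is worth checking that the new name maps to the intended address, because the YARN web UI address above is configured as ruozedata001:38088. `getent hosts ruozedata001` answers this through the system resolver; the sketch below is a hypothetical helper, `hosts_lookup`, that reads a hosts-format file directly, skipping comments and returning the address of the first entry that lists the name.

```shell
# hosts_lookup: hypothetical helper. Print the address of the first entry in a
# hosts-format file (default /etc/hosts) that lists NAME as hostname or alias.
hosts_lookup() {
  awk -v h="$1" '
    /^[ \t]*#/ { next }                # skip comment lines
    {
      for (i = 2; i <= NF; i++) {
        if ($i ~ /^#/) break           # stop at an inline comment
        if ($i == h) { print $1; exit }
      }
    }
  ' "${2:-/etc/hosts}"
}

# Example: hosts_lookup ruozedata001
```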

Using jps

- Run `jps`, `jps -l` (full main class name or jar path) or `jps -m` (also shows the arguments passed to main):

```
[ruoze@ruozedata001 hsperfdata_ruoze]$ jps
23233 NameNode
23553 SecondaryNameNode
1729 Jps
29880 ResourceManager
23388 DataNode
30156 NodeManager
```

- jps gets its information from the marker files in /tmp/hsperfdata_<username>: /tmp/hsperfdata_root for root, /tmp/hsperfdata_ruoze for ruoze, and so on. Each file there is named after the PID of a running JVM.

- Purpose: show the PID and name of each Java process.

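- jps only shows the processes owned by the user who runs it. root can list every Java process, but processes belonging to other users are shown as "process information unavailable".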

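- jps cannot reliably tell whether a process is still alive; check with ps instead. For example, once the hsperfdata file of a process (PID 1659 here) has been deleted, jps no longer lists it, but `ps -ef | grep 1659` still shows it, so that process had to be killed before ResourceManager could be started. The following command prints the number of matching processes; anything greater than 0 means the process still exists:

```
ps -ef | grep 1659 | grep -v grep | wc -l
```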

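- Deleting the contents of /tmp/hsperfdata_ruoze does not affect starting or stopping the processes, because the start/stop scripts rely on PID files rather than on hsperfdata.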
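The jps and ps checks above can be combined: list the PIDs that have a file in the hsperfdata directory, then check whether each one is still running. This is a sketch with hypothetical function names. It avoids grep because `grep 1659` also matches any line that merely contains those digits (PID 16590, for instance); for a manual check, `ps -p 1659` selects exactly that PID. The script uses the shell builtin `kill -0`, which sends no signal and only tests whether the PID exists and may be signalled by the current user, which is sufficient here because each hsperfdata directory belongs to a single user.

```shell
# pid_alive: succeed if a process with exactly this PID exists and the
# current user may signal it (kill -0 performs the check, sends nothing).
pid_alive() {
  kill -0 "$1" 2> /dev/null
}

# check_hsperfdata: hypothetical helper. For each file in DIR (default
# /tmp/hsperfdata_<current user>) whose name is a PID, print "PID alive" or
# "PID stale" (a file left behind by a JVM that no longer runs).
check_hsperfdata() {
  local dir path pid
  dir=${1:-/tmp/hsperfdata_$(id -un)}
  for path in "$dir"/*; do
    pid=$(basename "$path")
    case $pid in
      '' | *[!0-9]*) continue ;;   # not a plain number (or empty directory)
    esac
    if pid_alive "$pid"; then
      echo "$pid alive"
    else
      echo "$pid stale"
    fi
  done
}
```

A JVM removes its own hsperfdata file on a normal exit, so "stale" entries typically come from processes that were killed with `kill -9` or by the OOM killer.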

Linux's OOM killer and the periodic cleanup of /tmp

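- OOM killer: when memory runs out, Linux protects itself from hanging by killing the process that uses too much memory (the one with the highest oom_score). Good habit: when a process dies unexpectedly, find its log first, and check the system log for OOM kills with `cat /var/log/messages | grep oom`.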

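- Because old files under /tmp are deleted periodically (on CentOS 7, systemd-tmpfiles by default removes entries in /tmp that have not been used for 10 days, unless a rule excludes them), the directories where Hadoop and YARN keep their PID files and working data should be moved out of /tmp. With the daemons stopped, run `chmod -R 777 /home/ruoze/tmp` and then `mv /tmp/hadoop-ruoze/dfs /home/ruoze/tmp/`. The move is mandatory: otherwise HDFS cannot start once it is configured to use the new location.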
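When a daemon disappears, the OOM killer's entries in /var/log/messages name the victim. The sketch below is a hypothetical helper that extracts the PID and process name from those entries; it assumes the kernel's usual `Killed process PID (NAME)` wording, which may vary between kernel versions, so treat an empty result as "not found by this pattern" rather than proof that nothing was killed.

```shell
# oom_victims: hypothetical helper. Print "PID NAME" for every OOM-killer
# "Killed process PID (NAME)" line in a syslog file (default /var/log/messages).
oom_victims() {
  sed -n 's/.*Killed process \([0-9][0-9]*\) (\([^)]*\)).*/\1 \2/p' \
    "${1:-/var/log/messages}"
}

# Example (as root): oom_victims | grep java
```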

Then edit these files under etc/hadoop accordingly:

```
vi hadoop-env.sh
vi yarn-env.sh
vi core-site.xml
```
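A minimal sketch of the environment settings involved, assuming the same /home/ruoze/tmp directory as above. HADOOP_PID_DIR (read from hadoop-env.sh) and YARN_PID_DIR (read from yarn-env.sh) control where the daemon PID files are written; both fall back to /tmp when unset. The matching core-site.xml change, assumed here because the dfs directory was moved to /home/ruoze/tmp/dfs, is to set hadoop.tmp.dir (default /tmp/hadoop-${user.name}) to /home/ruoze/tmp.

```shell
# Line to add to etc/hadoop/hadoop-env.sh:
# PID files of the HDFS daemons (NameNode, DataNode, SecondaryNameNode).
export HADOOP_PID_DIR=/home/ruoze/tmp

# Line to add to etc/hadoop/yarn-env.sh:
# PID files of the YARN daemons (ResourceManager, NodeManager).
export YARN_PID_DIR=/home/ruoze/tmp
```

Stop the daemons before changing these values: the stop scripts look for the PID files in the configured directory, so daemons started with PID files in /tmp would no longer be found by them.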

In hdfs-site.xml, the following properties all default (in hdfs-default.xml) to paths under hadoop.tmp.dir, so they are all determined by it:

```
dfs.namenode.name.dir=file://${hadoop.tmp.dir}/dfs/name
dfs.datanode.data.dir=file://${hadoop.tmp.dir}/dfs/data
dfs.namenode.checkpoint.dir=file://${hadoop.tmp.dir}/dfs/namesecondary
```
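How hadoop.tmp.dir feeds into these defaults can be illustrated with a single substitution. Hadoop expands `${...}` references itself when it loads the configuration; the hypothetical `expand_tmp_dir` helper below only mimics that one substitution to show the resulting paths, assuming hadoop.tmp.dir=/home/ruoze/tmp.

```shell
# expand_tmp_dir: hypothetical helper that mimics Hadoop's ${hadoop.tmp.dir}
# substitution for one property value: expand_tmp_dir VALUE TMP_DIR
expand_tmp_dir() {
  local value tmp_dir escaped
  value=$1
  tmp_dir=$2
  # Escape characters that are special in a sed replacement here (\ & |).
  escaped=$(printf '%s' "$tmp_dir" | sed 's/[\\&|]/\\&/g')
  printf '%s\n' "$value" | sed "s|[\$]{hadoop[.]tmp[.]dir}|$escaped|g"
}

# Example:
# expand_tmp_dir 'file://${hadoop.tmp.dir}/dfs/name' /home/ruoze/tmp
```

With hadoop.tmp.dir=/home/ruoze/tmp, the three defaults resolve to file:///home/ruoze/tmp/dfs/name, file:///home/ruoze/tmp/dfs/data and file:///home/ruoze/tmp/dfs/namesecondary, all inside the dfs directory that was moved out of /tmp.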