【系列二】数学基础—概率—高斯分布3

1. 边缘概率与条件概率

x i ∽ N ( μ , Σ ) = 1 ( 2 π ) p 2 Σ 1 2 exp ( − 1 2 ( x − μ ) T Σ − 1 ( x − μ ) ) x_i\backsim N(\mu,\varSigma)=\frac{1}{(2\pi)^{\frac{p}{2}}\varSigma^{\frac{1}{2}}}\exp{(-\frac{1}{2}(x-\mu)^T\varSigma^{-1}(x-\mu))} xi∽N(μ,Σ)=(2π)2pΣ211exp(−21(x−μ)TΣ−1(x−μ)) x = ( x 1 x 2 ⋮ x p ) , μ = ( μ 1 μ 2 ⋮ μ p ) , Σ = ( σ 11 , σ 12 … , σ 1 p σ 21 , σ 22 … , σ 2 p ⋮ σ p 1 , σ p 2 … , σ p p ) x=\begin{pmatrix}x_1\\x_2\\\vdots\\x_p\end{pmatrix},\mu=\begin{pmatrix}\mu_1\\\mu_2\\\vdots\\\mu_p\end{pmatrix},\varSigma=\begin{pmatrix}\sigma_{11},\sigma_{12}\dotsc,\sigma_{1p}\\\sigma_{21},\sigma_{22}\dotsc,\sigma_{2p}\\ \vdots\\\sigma_{p1},\sigma_{p2}\dotsc,\sigma_{pp}\end{pmatrix} x=⎝⎜⎜⎜⎛x1x2⋮xp⎠⎟⎟⎟⎞,μ=⎝⎜⎜⎜⎛μ1μ2⋮μp⎠⎟⎟⎟⎞,Σ=⎝⎜⎜⎜⎛σ11,σ12…,σ1pσ21,σ22…,σ2p⋮σp1,σp2…,σpp⎠⎟⎟⎟⎞

将 x , μ , Σ x,\mu,\varSigma x,μ,Σ分组, x = ( x a x b ) , μ = ( μ a μ b ) , Σ = ( Σ a a Σ a b Σ b a Σ b b ) , { a = 1 , 2 , … , m b = 1 , 2 , … , n m + n = p x=\begin{pmatrix}x_a\\x_b\end{pmatrix},\mu=\begin{pmatrix}\mu_a\\\mu_b\end{pmatrix},\varSigma=\begin{pmatrix}\varSigma_{aa}&\varSigma_{ab}\\\varSigma_{ba} &\varSigma_{bb}\end{pmatrix},\begin{cases}a=1,2,\dotsc,m\\b=1,2,\dots,n\\m+n=p\end{cases} x=(xaxb),μ=(μaμb),Σ=(ΣaaΣbaΣabΣbb),⎩⎪⎨⎪⎧a=1,2,…,mb=1,2,…,nm+n=p

已知一个高维高斯分布,求它的边缘概率分布 P ( x a ) , P ( x b ) P(x_a),P(x_b) P(xa),P(xb)和条件概率分布 P ( x a ∣ x b ) , P ( x b ∣ x a ) P(x_a|x_b),P(x_b|x_a) P(xa∣xb),P(xb∣xa)?

PRML这本书中介绍了“配方法”来求解。下面介绍一种稍微简单一点的方法。

首先引入一个定理(不证): x ∽ N ( μ , Σ ) y = A x + B y ∽ N ( A μ + B , A Σ A T ) x\backsim N(\mu,\varSigma)\\y=Ax+B\\y\backsim N(A\mu+B,A\varSigma A^T) x∽N(μ,Σ)y=Ax+By∽N(Aμ+B,AΣAT)

求边缘概率分布:

x a = ( I m 0 ) ( x a x b ) = A x x_a=\begin{pmatrix}I_m&0\end{pmatrix}\begin{pmatrix}x_a\\x_b\end{pmatrix}=Ax xa=(Im0)(xaxb)=Ax,有上面的定理 x a ∽ N ( A μ , A Σ A T ) = N ( μ a , Σ a a ) x_a\backsim N(A\mu,A\varSigma A^T)=N(\mu_a,\varSigma_{aa}) xa∽N(Aμ,AΣAT)=N(μa,Σaa)

求条件概率分布:

构造一个新的随机变量:

x b ⋅ a = x b − Σ b a Σ a a − 1 x a = ( − Σ b a Σ a a − 1 I m ) ( x a x b ) = A x E [ x b ⋅ a ] = μ b ⋅ a = μ b − Σ b a Σ a a − 1 μ a v a r [ x b ⋅ a ] = x b b ⋅ a = Σ b b − Σ b a Σ a a − 1 Σ a b x_{b\cdot a}=x_b-\varSigma_{ba}\varSigma_{aa}^{-1}x_a=\begin{pmatrix}-\varSigma_{ba}\varSigma_{aa}^{-1}&I_m\end{pmatrix}\begin{pmatrix}x_a\\x_b\end{pmatrix}=Ax\\ E[x_{b\cdot a}]=\mu_{b\cdot a}=\mu_b-\varSigma_{ba}\varSigma_{aa}^{-1}\mu_a\\var[x_{b\cdot a}]=x_{bb\cdot a}=\varSigma_{bb}-\varSigma_{ba}\varSigma_{aa}^{-1}\varSigma_{ab} xb⋅a=xb−ΣbaΣaa−1xa=(−ΣbaΣaa−1Im)(xaxb)=AxE[xb⋅a]=μb⋅a=μb−ΣbaΣaa−1μavar[xb⋅a]=xbb⋅a=Σbb−ΣbaΣaa−1Σab

x b ⋅ a ∽ N ( μ b ⋅ a , Σ b b ⋅ a ) x_{b\cdot a}\backsim N(\mu_{b\cdot a},\varSigma_{bb\cdot a}) xb⋅a∽N(μb⋅a,Σbb⋅a)

x b = μ b ⋅ a + Σ b a Σ a a − 1 x a x_b=\mu_{b\cdot a}+\varSigma_{ba}\varSigma_{aa}^{-1}x_a xb=μb⋅a+ΣbaΣaa−1xa x b ∣ x a ∽ N ( μ b ⋅ a + Σ b a Σ a a − 1 x a , Σ b b ⋅ a ) x_b|x_a\backsim N(\mu_{b\cdot a}+\varSigma_{ba}\varSigma_{aa}^{-1}x_a,\varSigma_{bb\cdot a}) xb∣xa∽N(μb⋅a+ΣbaΣaa−1xa,Σbb⋅a)

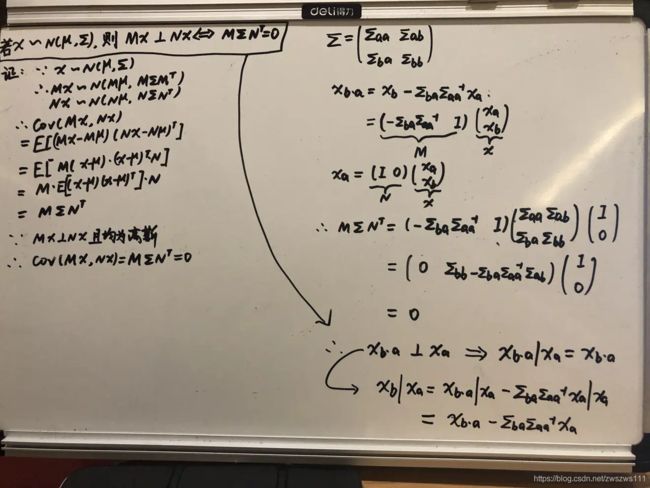

x b . a 与 x a x_{b.a} 与x_a xb.a与xa的独立性证明具体步骤如下:(独立说明 P ( x b ∣ x a ) = P ( x b ) P(x_b|x_a)=P(x_b) P(xb∣xa)=P(xb))

Note:

- 一般情况下两个随机变量之间独立一定不相关,不相关不一定独立(也就是独立的概念更“苛刻”一点,不相关稍微“弱”一点)

- 如果两个随机变量均服从高斯分布,那么“不相关”等价于“独立”

2. 联合概率分布

已知: p ( x ) = N ( x ∣ μ , Λ − 1 ) , p ( y ∣ x ) = N ( y ∣ A x + b , L − 1 ) p(x)=N(x|\mu,\varLambda^{-1}),p(y|x)=N(y|Ax+b,L^{-1}) p(x)=N(x∣μ,Λ−1),p(y∣x)=N(y∣Ax+b,L−1)

求: p ( y ) , p ( x ∣ y ) p(y),p(x|y) p(y),p(x∣y)

①: y = A x + b + ϵ , ϵ ∽ N ( 0 , L − 1 ) , ϵ ⊥ x E [ y ] = E ( A x + b + ϵ ) = A μ + b v a r [ y ] = v a r [ A x + b ] + v a r [ ϵ ] = A Λ − 1 A T + L − 1 y ∽ N ( A μ + b , A Λ − 1 A T + L − 1 ) y=Ax+b+\epsilon,\epsilon\backsim N(0,L^{-1}),\epsilon\bot x\\E[y]=E(Ax+b+\epsilon)=A\mu+b \\var[y]=var[Ax+b]+var[\epsilon]=A\varLambda^{-1}A^T+L^{-1}\\ y\backsim N(A\mu+b,A\varLambda^{-1}A^T+L^{-1}) y=Ax+b+ϵ,ϵ∽N(0,L−1),ϵ⊥xE[y]=E(Ax+b+ϵ)=Aμ+bvar[y]=var[Ax+b]+var[ϵ]=AΛ−1AT+L−1y∽N(Aμ+b,AΛ−1AT+L−1)

②: z = ( x y ) ∽ N ( [ μ A μ + b ] [ Λ − 1 Δ Δ A Λ − 1 A T + L − 1 ] ) Δ = C o v ( x , y ) = E [ ( x − E [ x ] ) ( y − E [ y ] ) T ] = E [ ( x − μ ) ( A x − A μ + ϵ ) T ] = E [ ( x − μ ) ( A x − A μ ) T + ( x − μ ) ϵ T ] = E [ ( x − μ ) ( A x − A μ ) T ] + E [ ( x − μ ) ϵ T ] = E [ ( x − μ ) ( x − μ ) T A T ] = v a r [ x ] A T = Λ − 1 A T z=\begin{pmatrix}x\\y\end{pmatrix}\backsim N\begin{pmatrix}\begin{bmatrix}\mu\\A\mu+b\end{bmatrix}&\begin{bmatrix}\varLambda^{-1}&\Delta\\\Delta&A\varLambda^{-1}A^T+L^{-1}\end{bmatrix}\end{pmatrix}\\ \Delta=Cov(x,y)=E[(x-E[x])(y-E[y])^T]=\\ E[(x-\mu)(Ax-A\mu+\epsilon)^T]=E[(x-\mu)(Ax-A\mu)^T+(x-\mu)\epsilon^T]=\\ E[(x-\mu)(Ax-A\mu)^T]+E[(x-\mu)\epsilon^T]=E[(x-\mu)(x-\mu)^TA^T]=\\ var[x]A^T=\varLambda^{-1}A^T z=(xy)∽N([μAμ+b][Λ−1ΔΔAΛ−1AT+L−1])Δ=Cov(x,y)=E[(x−E[x])(y−E[y])T]=E[(x−μ)(Ax−Aμ+ϵ)T]=E[(x−μ)(Ax−Aμ)T+(x−μ)ϵT]=E[(x−μ)(Ax−Aμ)T]+E[(x−μ)ϵT]=E[(x−μ)(x−μ)TAT]=var[x]AT=Λ−1AT

一些基本概念

原视频链接

【机器学习】【白板推导系列】【合集 1~23】