Elasticsearch IK analyzer: modifying the source to refresh the dictionary from MySQL on a schedule

To download the source and import it into Eclipse, see my previous article on installing the IK analyzer.

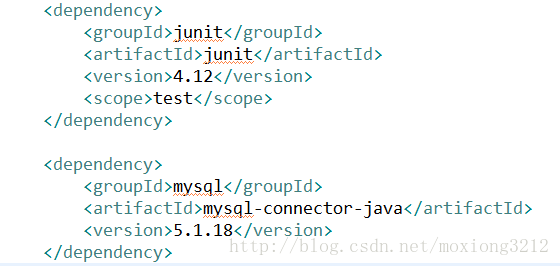

Step 1: Modify the pom file

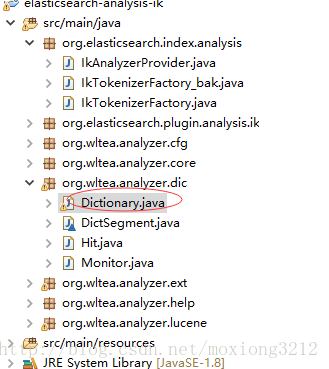

第二步 修改Java类

/**

* 批量加载新停用词条

*

* @param words

* Collection词条列表

*/

public void addStopWords(Collection words) {

if (words != null) {

for (String word : words) {

if (word != null) {

// 批量加载词条到主内存词典中

_StopWords.fillSegment(word.trim().toCharArray());

}

}

}

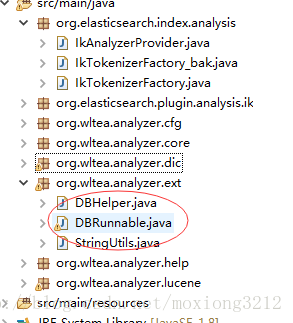

} 2.新建一个包 并添加几个Java文件

File 1: StringUtils.java

    package org.wltea.analyzer.ext;

    public class StringUtils {

        /**
         * Checks whether a string is blank (null, empty, or whitespace only).
         *
         * @param str the string to check
         * @return true if the string is blank, false otherwise
         */
        public static boolean isBlank(String str) {
            int strLen;
            if (str == null || (strLen = str.length()) == 0) {
                return true;
            }
            for (int i = 0; i < strLen; i++) {
                if (!Character.isWhitespace(str.charAt(i))) {
                    return false;
                }
            }
            return true;
        }

        /**
         * Checks whether a string is not blank.
         *
         * @param str the string to check
         * @return false if the string is blank, true otherwise
         */
        public static boolean isNotBlank(String str) {
            return !StringUtils.isBlank(str);
        }
    }

File 2: DBHelper.java

    package org.wltea.analyzer.ext;

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.PreparedStatement;
    import java.sql.ResultSet;
    import java.text.SimpleDateFormat;
    import java.util.Arrays;
    import java.util.Date;
    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;

    import org.apache.logging.log4j.Logger;
    import org.elasticsearch.common.logging.Loggers;

    public class DBHelper {

        Logger logger = Loggers.getLogger(DBHelper.class);

        // Connection settings; IkTokenizerFactory fills these in from elasticsearch.yml
        public static String url = null;
        public static String dbUser = null;
        public static String dbPwd = null;
        public static String dbTable = null;

        /* Hard-coded values for a standalone test:
        public static String url = "jdbc:mysql:///elasticsearch";
        public static String dbUser = "root";
        public static String dbPwd = "whdhz19";
        public static String dbTable = "t_es_ik_dic";*/

        private Connection conn;

        // Time of the last import, keyed by column name
        public static Map<String, Date> lastImportTimeMap = new HashMap<>();

        static {
            try {
                Class.forName("com.mysql.jdbc.Driver"); // load the MySQL JDBC driver
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        private Connection getConn() throws Exception {
            // Let a failed connection propagate instead of returning null
            conn = DriverManager.getConnection(url, dbUser, dbPwd);
            return conn;
        }

        /**
         * Reads the non-empty values of one column and joins them into a single string.
         *
         * @param key    column to read: extension words, stop words, synonyms, etc.
         * @param flag   if true, only read rows updated since the last import of this column
         * @param synony if any value is passed, join with newlines instead of commas
         * @return the joined values, or an empty string if nothing was read
         * @throws Exception if the database connection cannot be opened
         */
        public String getKey(String key, boolean flag, boolean... synony) throws Exception {
            conn = getConn();
            StringBuilder data = new StringBuilder();
            PreparedStatement ps = null;
            ResultSet rs = null;
            try {
                StringBuilder sql = new StringBuilder("select * from " + dbTable + " where delete_type=0");
                // lastImportTime: time of the previous import of this column
                Date lastImportTime = DBHelper.lastImportTimeMap.get(key);
                SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
                if (lastImportTime != null && flag) {
                    sql.append(" and update_time > '" + sdf.format(lastImportTime) + "'");
                }
                sql.append(" and " + key + " !=''");
                // Capture the time before querying, but record it only after the query succeeds
                Date importTime = new Date();
                // If the logged time differs from local time, check that the JVM time zone
                // matches the server's system time zone
                logger.warn("sql==={}", sql.toString());
                ps = conn.prepareStatement(sql.toString());
                rs = ps.executeQuery();
                while (rs.next()) {
                    String value = rs.getString(key);
                    if (StringUtils.isNotBlank(value)) {
                        if (synony != null && synony.length > 0) {
                            data.append(value + "\n");
                        } else {
                            data.append(value + ",");
                        }
                    }
                }
                lastImportTimeMap.put(key, importTime);
            } catch (Exception e) {
                logger.warn("Failed to read column " + key, e);
            } finally {
                try {
                    // Close in reverse order of creation, skipping anything never opened
                    if (rs != null) {
                        rs.close();
                    }
                    if (ps != null) {
                        ps.close();
                    }
                    if (conn != null) {
                        conn.close();
                    }
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
            return data.toString();
        }

        public static void main(String[] args) throws Exception {
            // For a standalone test, set url, dbUser, dbPwd and dbTable first
            // (see the commented-out values above)
            DBHelper dbHelper = new DBHelper();
            String extWords = dbHelper.getKey("ext_word", true);
            List<String> extList = Arrays.asList(extWords.split(","));
            System.out.println(extList);
            // System.out.println(dbHelper.getKey("stop_word", true));
            // System.out.println(dbHelper.getKey("synonym", true, true));
        }
    }
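DBHelper builds its query by concatenating the table name, column name, and timestamp into the SQL string. As a hedged alternative, the sketch below binds the timestamp as a parameter and validates the identifiers, which cannot be bound. The class `IncrementalQuerySketch` and its methods are hypothetical names introduced for illustration and are not part of the IK source; the table and column names are the ones used in this article.

```java
import java.util.regex.Pattern;

// Hypothetical helper, not part of the IK plugin: builds the incremental
// query with a "?" placeholder for the timestamp instead of a formatted string.
final class IncrementalQuerySketch {

    // Table and column names cannot be bound as parameters, so restrict them
    // to plain identifiers before concatenating them into the SQL text.
    private static final Pattern IDENTIFIER = Pattern.compile("[A-Za-z_][A-Za-z0-9_]*");

    static String buildSql(String table, String column, boolean incremental) {
        requireIdentifier(table);
        requireIdentifier(column);
        StringBuilder sql = new StringBuilder("select ").append(column)
                .append(" from ").append(table)
                .append(" where delete_type=0 and ").append(column).append(" != ''");
        if (incremental) {
            // The caller binds the last import time to this placeholder
            sql.append(" and update_time > ?");
        }
        return sql.toString();
    }

    private static void requireIdentifier(String name) {
        if (name == null || !IDENTIFIER.matcher(name).matches()) {
            throw new IllegalArgumentException("not a plain SQL identifier: " + name);
        }
    }

    public static void main(String[] args) {
        System.out.println(buildSql("t_es_ik_dic", "ext_word", true));
    }
}
```

With a statement prepared from this SQL, the last import time could be bound with `ps.setTimestamp(1, new java.sql.Timestamp(lastImportTime.getTime()))`, which leaves date formatting to the JDBC driver.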

File 3: DBRunnable.java

    package org.wltea.analyzer.ext;

    import java.util.Arrays;
    import java.util.List;

    import org.apache.logging.log4j.Logger;
    import org.elasticsearch.common.logging.Loggers;
    import org.wltea.analyzer.dic.Dictionary;

    public class DBRunnable implements Runnable {

        Logger logger = Loggers.getLogger(DBRunnable.class);

        private String extField;  // column holding extension words
        private String stopField; // column holding stop words

        public DBRunnable(String extField, String stopField) {
            super();
            this.extField = extField;
            this.stopField = stopField;
        }

        @Override
        public void run() {
            logger.warn("Loading dictionary from database ========");
            // Get the dictionary singleton
            Dictionary dic = Dictionary.getSingleton();
            DBHelper dbHelper = new DBHelper();
            try {
                String extWords = dbHelper.getKey(extField, true);
                String stopWords = dbHelper.getKey(stopField, true);
                if (StringUtils.isNotBlank(extWords)) {
                    List<String> extList = Arrays.asList(extWords.split(","));
                    // Load the extension words into the main dictionary
                    dic.addWords(extList);
                    logger.warn("Extension words loaded ========");
                    logger.warn("extWords==={}", extWords);
                }
                if (StringUtils.isNotBlank(stopWords)) {
                    List<String> stopList = Arrays.asList(stopWords.split(","));
                    // Load the stop words into the stop-word dictionary
                    dic.addStopWords(stopList);
                    logger.warn("Stop words loaded ========");
                    logger.warn("stopWords==={}", stopWords);
                }
            } catch (Exception e) {
                logger.warn("Failed to load words from database ========", e);
            }
        }
    }
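DBRunnable turns the comma-joined string returned by DBHelper back into a list with `split(",")`. The sketch below shows one way that step could also trim each entry and skip empty ones; `WordListSketch` and `toWordList` are hypothetical names used only for illustration. Note that a word containing a comma would still be split apart by either approach.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of the IK plugin.
final class WordListSketch {

    /** Splits a comma-joined string into trimmed, non-empty words. */
    static List<String> toWordList(String joined) {
        List<String> words = new ArrayList<>();
        if (joined == null) {
            return words;
        }
        for (String part : joined.split(",")) {
            String word = part.trim();
            if (!word.isEmpty()) {
                words.add(word);
            }
        }
        return words;
    }

    public static void main(String[] args) {
        // prints [foo, bar, baz]
        System.out.println(toWordList("foo, bar ,,baz,"));
    }
}
```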

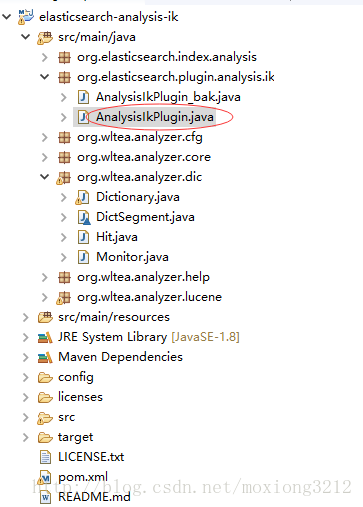
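A Runnable such as DBRunnable only refreshes the dictionary if something runs it on a timer; in this article the IkTokenizerFactory constructor (step 4) does that with `scheduleAtFixedRate`. The following is a minimal sketch of that scheduling pattern in plain Java, under two assumptions of mine that the article's code does not make: the worker thread is a daemon, and each run is wrapped so that an exception cannot stop later runs. `ReloadSchedulerSketch` and its methods are hypothetical names.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical helper, not part of the IK plugin.
final class ReloadSchedulerSketch {

    /** Runs the task immediately and then every periodMillis on one daemon thread. */
    static ScheduledExecutorService start(Runnable task, long periodMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "dict-reload");
            t.setDaemon(true); // do not keep the JVM alive at shutdown
            return t;
        });
        Runnable guarded = () -> {
            try {
                task.run();
            } catch (RuntimeException e) {
                // scheduleAtFixedRate suppresses all later runs once a run throws,
                // so report the failure here and keep the schedule alive.
                System.err.println("reload failed: " + e);
            }
        };
        scheduler.scheduleAtFixedRate(guarded, 0, periodMillis, TimeUnit.MILLISECONDS);
        return scheduler;
    }

    /** Demo: true if a task that always throws still runs the given number of times. */
    static boolean keepsRunningDespiteFailures(int runs, long periodMillis, long timeoutMillis) {
        CountDownLatch latch = new CountDownLatch(runs);
        ScheduledExecutorService scheduler = start(() -> {
            latch.countDown();
            throw new IllegalStateException("simulated database outage");
        }, periodMillis);
        try {
            return latch.await(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            scheduler.shutdownNow();
        }
    }

    public static void main(String[] args) {
        System.out.println(keepsRunningDespiteFailures(3, 10, 5000));
    }
}
```

DBRunnable already catches `Exception` inside `run()`, so the guard matters mainly for tasks that do not. Whether a daemon thread is appropriate inside an Elasticsearch node is a judgment call this sketch does not settle.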

第三步

复制AnalysisIkPlugin.java文件

增加一个方法 不然elasticsearch 不能识别配置文件中自己新增的属性

@Override

public List>> getSettings() {

Setting dbUrl=new Setting<>("dbUrl", "", Function.identity(), Property.NodeScope);

Setting dbUser = new Setting<>("dbUser", "", Function.identity(),Property.NodeScope);

Setting dbPwd = new Setting<>("dbPwd", "", Function.identity(),Property.NodeScope);

Setting dbTable = new Setting<>("dbTable", "", Function.identity(),Property.NodeScope);

Setting extField = new Setting<>("extField", "", Function.identity(),Property.NodeScope);

Setting stopField = new Setting<>("stopField", "", Function.identity(),Property.NodeScope);

Setting flushTime =Setting.intSetting("flushTime", 5, Property.NodeScope);

Setting autoReloadDic = Setting.boolSetting("autoReloadDic", false, Property.NodeScope);

return Arrays.asList(dbUrl,dbUser,dbPwd,dbTable,extField,stopField,flushTime,autoReloadDic);

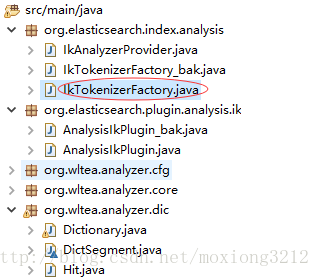

} 第四步

Back up IkTokenizerFactory.java, then change its constructor. The modified code is as follows:

    // Requires imports: java.util.concurrent.Executors,
    // java.util.concurrent.ScheduledExecutorService, java.util.concurrent.TimeUnit,
    // org.wltea.analyzer.ext.DBHelper, DBRunnable and StringUtils
    public IkTokenizerFactory(IndexSettings indexSettings, Environment env, String name, Settings settings) {
        super(indexSettings, name, settings);
        configuration = new Configuration(env, settings);
        // Read the MySQL connection details from the ES configuration file elasticsearch.yml
        Settings s = indexSettings.getSettings();
        String dbUrl = s.get("dbUrl");
        boolean autoReloadDic = s.getAsBoolean("autoReloadDic", false);
        // DBHelper.url is still blank only on the first call, so the scheduler is started once
        if (autoReloadDic && StringUtils.isBlank(DBHelper.url) && StringUtils.isNotBlank(dbUrl)) {
            String dbUser = s.get("dbUser");
            String dbPwd = s.get("dbPwd");
            // How often to poll the database, in seconds; 60 if flushTime is not set
            Integer flushTime = s.getAsInt("flushTime", 60);
            String dbTable = s.get("dbTable");
            DBHelper.dbTable = dbTable;
            DBHelper.dbUser = dbUser;
            DBHelper.dbPwd = dbPwd;
            DBHelper.url = dbUrl;
            logger.warn("dbUrl=========={}", dbUrl);
            String extField = s.get("extField");
            String stopField = s.get("stopField");
            logger.warn("extField=========={}", extField);
            logger.warn("stopField=========={}", stopField);
            ScheduledExecutorService scheduledExecutorService = Executors.newSingleThreadScheduledExecutor();
            scheduledExecutorService.scheduleAtFixedRate(new DBRunnable(extField, stopField), 0, flushTime, TimeUnit.SECONDS);
        }
    }

Step 5

/**

* 批量加载新停用词条

*

* @param words

* Collection词条列表

*/

public void addStopWords(Collection words) {

if (words != null) {

for (String word : words) {

if (word != null) {

// 批量加载词条到主内存词典中

_StopWords.fillSegment(word.trim().toCharArray());

}

}

}

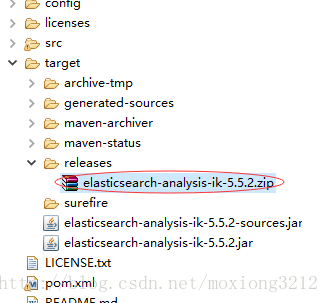

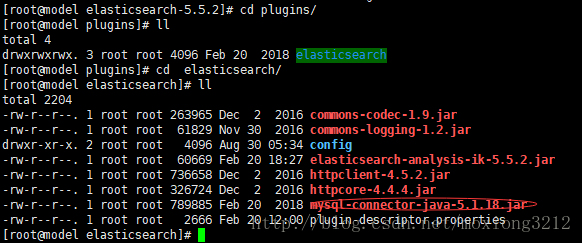

} 以上,ik分词器修改结束 打包 复制 elasticsearch-analysis-ik-5.5.2.jar 替换掉服务器上plugins文件夹下ik插件里面的同名jar包即可

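If the IK analyzer plugin is not yet installed on the server, upload the packaged archive shown in the figure below to the plugins folder and unzip it there.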

Note: put the MySQL driver jar, mysql-connector-java-5.1.8.jar, into the unzipped IK plugin folder, as shown in the figure.
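DBHelper loads the driver with `Class.forName("com.mysql.jdbc.Driver")`, which fails if the jar is missing from the plugin folder. As a rough illustration of that check, the sketch below tests whether a class can be loaded by name. `DriverCheckSketch` is a hypothetical name, and run standalone it only inspects its own classpath, not the classpath Elasticsearch gives the plugin.

```java
// Hypothetical helper, not part of the IK plugin.
final class DriverCheckSketch {

    /** Returns true if the named class can be loaded from the current classpath. */
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className);
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String driver = "com.mysql.jdbc.Driver"; // driver class used by DBHelper
        System.out.println(driver + " available: " + isOnClasspath(driver));
    }
}
```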

Now for testing.

Step 6: Edit the Elasticsearch configuration file

    [root@model elasticsearch-5.5.2]# vim config/elasticsearch.yml

Add the following at the bottom of the file:

    dbUrl: jdbc:mysql://192.168.254.1/elasticsearch
    dbUser: root
    dbPwd: whdhz19
    dbTable: t_es_ik_dic
    extField: ext_word
    stopField: stop_word
    flushTime: 5
    autoReloadDic: true

Save and exit.


Step 7: Create the MySQL table

    CREATE TABLE t_es_ik_dic (
        id int(11) PRIMARY KEY AUTO_INCREMENT COMMENT 'auto-increment id',
        ext_word varchar(100) DEFAULT '' COMMENT 'extension word',
        stop_word varchar(100) DEFAULT '' COMMENT 'stop word',
        synonym varchar(100) DEFAULT '' COMMENT 'synonym',
        dic_status tinyint(4) DEFAULT '0' COMMENT 'status: 0 = not yet added, 1 = added',
        delete_type tinyint(4) DEFAULT '0' COMMENT '0 = not deleted, 1 = deleted',
        create_time timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'creation time',
        update_time timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT 'update time'
    );

Step 8: Start Elasticsearch

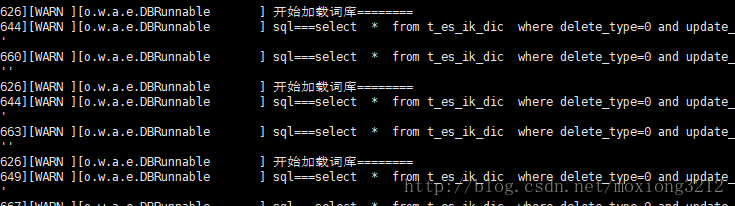

If the console prints output like that shown in the figure, startup succeeded.

You can now add some test data to the table.
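Test rows can be added with any MySQL client. To stay in Java, the sketch below inserts one word over JDBC, assuming an open `Connection` to the database from step 6. `TestDataSketch` and its methods are hypothetical names; the table and column names come from step 7. The other columns take their defaults, so `update_time` is set to the insert time, and the next poll should pick the row up provided the database and JVM clocks and time zones agree.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;

// Hypothetical helper, not part of the IK plugin.
final class TestDataSketch {

    /** Builds the insert statement; table and column must be trusted identifiers. */
    static String insertSql(String table, String column) {
        return "insert into " + table + " (" + column + ") values (?)";
    }

    /** Inserts one word into the given column, binding the word as a parameter. */
    static void addWord(Connection conn, String table, String column, String word) throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement(insertSql(table, column))) {
            ps.setString(1, word);
            ps.executeUpdate();
        }
    }

    public static void main(String[] args) {
        // Only prints the SQL; calling addWord needs a live database connection.
        System.out.println(insertSql("t_es_ik_dic", "ext_word"));
    }
}
```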

If the console prints output like that shown in the figure, the extension words were added successfully.


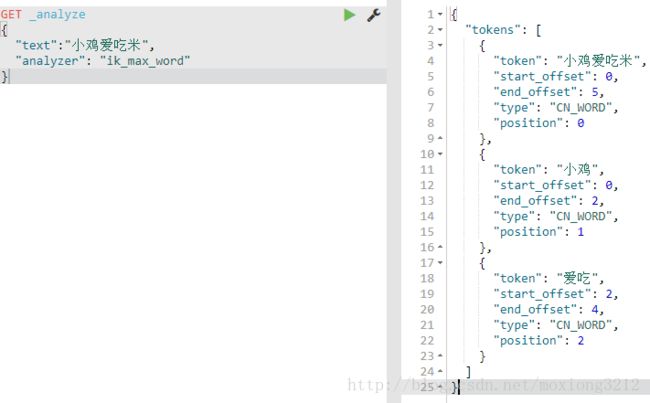

Step 9: Test with Kibana

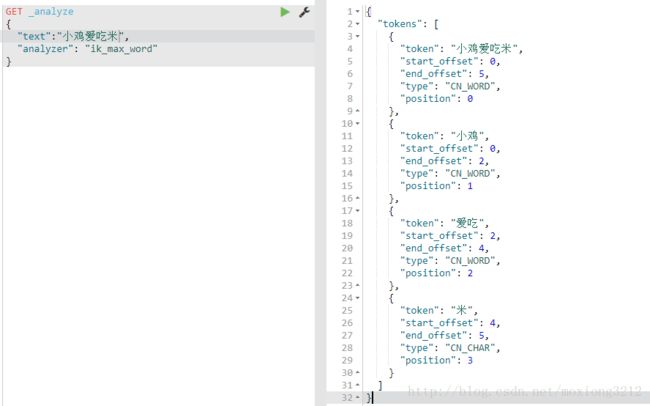

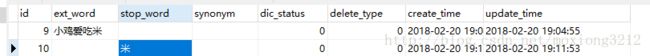

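Step 10: Test stop words

In the example above, suppose the word “米” carries no meaning and should not be produced as a token. Add it to the database as a stop word, as shown in the figure.

The console output is as shown in the figure.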


The tokenization result in Kibana is as shown in the figure.