TensorFlow models: workflow for training DeepLabv3 + MobileNetV2 on self-annotated data

I. Data annotation

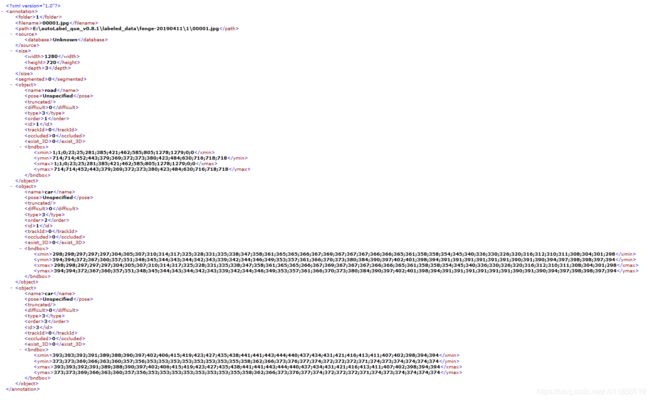

The image (tmp.jpg) and its annotation result file (tmp.xml) are as follows:
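As a rough illustration of the annotation layout, here is a minimal sketch of a tmp.xml-style file and how its polygons can be read. The element names (size, object, order, name, bndbox, xmin, ymin) are taken from the fillobject parsing code later in this post; the root element name, the helper name `parse_polygons`, and all values are made up for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation content. Only the element names are taken from the
# parsing code in this post; the root tag and the numbers are invented.
SAMPLE_XML = """
<annotation>
  <size><width>640</width><height>480</height></size>
  <object>
    <order>1</order>
    <name>road</name>
    <bndbox>
      <xmin>0;639;639;0</xmin>
      <ymin>300;300;479;479</ymin>
    </bndbox>
  </object>
</annotation>
"""


def parse_polygons(xml_text):
    """Return (width, height, [(name, order, [(x, y), ...]), ...])."""
    root = ET.fromstring(xml_text.strip())
    size = root.find('size')
    width = int(size.find('width').text)
    height = int(size.find('height').text)
    polygons = []
    for obj in root.iter('object'):
        bndbox = obj.find('bndbox')
        # xmin / ymin each hold a ';'-separated coordinate list
        xs = bndbox.find('xmin').text.strip().split(';')
        ys = bndbox.find('ymin').text.strip().split(';')
        points = [(int(x), int(y)) for x, y in zip(xs, ys)]
        polygons.append((obj.find('name').text,
                         int(obj.find('order').text),
                         points))
    return width, height, polygons


width, height, polygons = parse_polygons(SAMPLE_XML)
print(width, height, polygons)
```

Each object is a polygon whose x coordinates are stored in xmin and whose y coordinates are stored in ymin, so the two lists must have the same length.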

The label classes are as follows (10 classes, each followed by its color tuple):

road;(128, 64, 128, 80)

car;(0, 0, 142, 80)

bus;(0, 60, 100, 80)

truck;(0, 0, 70, 80)

bicycle;(255, 0, 0, 80)

motorcycle;(0, 0, 230, 80)

person;(220, 20, 60, 80)

traffic_sign;(220, 220, 0, 80)

obstacle;(153, 153, 153, 80)

background;(70, 130, 180, 80)

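The data is converted to the Cityscapes format. This requires the Cityscapes data-processing scripts, which can be downloaded from the following link: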

https://github.com/mcordts/cityscapesScripts

The labels list in cityscapesscripts/helpers/labels.py inside the scripts folder needs to be changed to:
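A minimal sketch of what such a list might look like for the ten classes above. The field layout follows the Label tuple used in labels.py; the ids and trainIds are an assumption (they simply follow the order of the class list, with 'unlabeled' mapped to 255), and the category names and hasInstances flags are illustrative guesses rather than the values actually used for this dataset.

```python
from collections import namedtuple

# Same field layout as the Label tuple in cityscapesscripts/helpers/labels.py.
Label = namedtuple('Label', [
    'name', 'id', 'trainId', 'category', 'categoryId',
    'hasInstances', 'ignoreInEval', 'color'])

# ASSUMPTION: ids / trainIds follow the order of the class list in this post.
# Categories and hasInstances flags are illustrative only.
labels = [
    #     name            id  trainId  category       catId  hasInst  ignore  color
    Label('unlabeled',     0, 255, 'void',          0, False, True,  (0, 0, 0)),
    Label('road',          1,   0, 'flat',          1, False, False, (128, 64, 128)),
    Label('car',           2,   1, 'vehicle',       2, True,  False, (0, 0, 142)),
    Label('bus',           3,   2, 'vehicle',       2, True,  False, (0, 60, 100)),
    Label('truck',         4,   3, 'vehicle',       2, True,  False, (0, 0, 70)),
    Label('bicycle',       5,   4, 'vehicle',       2, True,  False, (255, 0, 0)),
    Label('motorcycle',    6,   5, 'vehicle',       2, True,  False, (0, 0, 230)),
    Label('person',        7,   6, 'human',         3, True,  False, (220, 20, 60)),
    Label('traffic_sign',  8,   7, 'object',        4, False, False, (220, 220, 0)),
    Label('obstacle',      9,   8, 'object',        4, False, False, (153, 153, 153)),
    Label('background',   10,   9, 'background',    5, False, False, (70, 130, 180)),
]

# Lookup tables in the style of labels.py.
name2label = {label.name: label for label in labels}
id2label = {label.id: label for label in labels}

print(name2label['truck'].trainId)
```

The 'unlabeled' entry is kept because the label-image script below uses name2label['unlabeled'] as the background value.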

Then write the script that generates the training label images, fillobject.py, as follows:

import os
import sys
import xml.etree.ElementTree as ET
from collections import namedtuple

Point = namedtuple('Point', ['x', 'y'])

# Image processing: Pillow is required.
try:
    import PIL.Image as Image
    import PIL.ImageDraw as ImageDraw
except ImportError:
    print("Failed to import the image processing packages.")
    print("Please install the module 'Pillow' for image processing, e.g.")
    print("pip install pillow")
    sys.exit(-1)

# The modified cityscapesScripts package must be importable.
from cityscapesscripts.helpers.labels import name2label
from cityscapesscripts.helpers.labels import labels

def mkdir(path):
    """Create the folder if it does not exist yet."""
    if not os.path.exists(path):
        os.makedirs(path)
        print("--- new folder... ---")
        print("--- OK ---")
    else:
        print("--- There is this folder! ---")

def xml2labelImg(xmlfile, encoding, outline=None):
    """Rasterize the polygons of one annotation file into a label image.

    Returns -1 if the file contains no objects and None for an unknown encoding.
    """
    annotations = []
    width, height = fillobject(xmlfile, annotations)
    if width == 0:
        return -1
    # the size of the image
    size = (int(width), int(height))

    # the background
    if encoding == "ids":
        background = name2label['unlabeled'].id
    elif encoding == "trainIds":
        background = name2label['unlabeled'].trainId
    elif encoding == "color":
        background = name2label['unlabeled'].color
    else:
        print("Unknown encoding '{}'".format(encoding))
        return None

    # this is the image that we want to create
    if encoding == "color":
        labelImg = Image.new("RGBA", size, background)
    else:
        labelImg = Image.new("L", size, background)

    # a drawer to draw into the image
    drawer = ImageDraw.Draw(labelImg)

    # loop over all objects
    for obj in annotations:
        label = obj['name']
        polygon = obj['coordinates']
        # a single point cannot be drawn as a polygon
        if len(polygon) == 1:
            continue

        # If the label is not known, but ends with 'group' (e.g. cargroup),
        # try to remove the suffix and see if that works
        if (label not in name2label) and label.endswith('group'):
            label = label[:-len('group')]
        if label not in name2label:
            # skip unknown labels instead of failing on the lookup below
            print("Label '{}' not known.".format(label))
            continue

        # If the ID is negative that polygon should not be drawn
        if name2label[label].id < 0:
            continue

        if encoding == "ids":
            val = name2label[label].id
        elif encoding == "trainIds":
            val = name2label[label].trainId
        elif encoding == "color":
            val = name2label[label].color

        try:
            if outline:
                drawer.polygon(polygon, fill=val, outline=outline)
            else:
                drawer.polygon(polygon, fill=val)
        except Exception:
            print("Failed to draw polygon with label {}".format(label))
            raise

    return labelImg

def xml2instanceImg(xmlfile, encoding):
    """Rasterize the polygons of one annotation file into an instance image.

    Returns -1 if the file contains no objects and None for an unknown encoding.
    """
    annotations = []
    width, height = fillobject(xmlfile, annotations)
    if width == 0:
        return -1
    # the size of the image
    size = (int(width), int(height))

    # the background
    if encoding == "ids":
        background = name2label['unlabeled'].id
    elif encoding == "trainIds":
        background = name2label['unlabeled'].trainId
    else:
        print("Unknown encoding '{}'".format(encoding))
        return None

    # 32-bit integer image, because instance ids can exceed 255
    instanceImg = Image.new("I", size, background)

    # a drawer to draw into the image
    drawer = ImageDraw.Draw(instanceImg)

    # per-class instance counters
    nbInstances = {}
    for labelTuple in labels:
        if labelTuple.hasInstances:
            nbInstances[labelTuple.name] = 0

    # loop over all objects
    for obj in annotations:
        label = obj['name']
        polygon = obj['coordinates']
        # a single point cannot be drawn as a polygon
        if len(polygon) == 1:
            continue

        # If the label is not known, but ends with 'group' (e.g. cargroup),
        # try to remove the suffix and see if that works
        isGroup = False
        if (label not in name2label) and label.endswith('group'):
            label = label[:-len('group')]
            isGroup = True
        if label not in name2label:
            # skip unknown labels instead of failing on the lookup below
            print("Label '{}' not known.".format(label))
            continue

        # If the ID is negative that polygon should not be drawn
        if name2label[label].id < 0:
            continue

        labelTuple = name2label[label]
        if encoding == "ids":
            instance_id = labelTuple.id
        elif encoding == "trainIds":
            instance_id = labelTuple.trainId

        # instances are encoded as classId * 1000 + running index
        if labelTuple.hasInstances and not isGroup and instance_id != 255:
            instance_id = instance_id * 1000 + nbInstances[label]
            nbInstances[label] += 1

        # If the ID is negative that polygon should not be drawn
        if instance_id < 0:
            continue

        try:
            drawer.polygon(polygon, fill=instance_id)
        except Exception:
            print("Failed to draw polygon with label {}".format(label))
            raise

    return instanceImg

def fillobject(xml_file, res):
    """Parse one annotation XML file and append its polygons to res.

    Returns (width, height) as ints, or (0, 0) if the file has no objects.
    """
    xmldoc = ET.parse(xml_file)
    root = xmldoc.getroot()
    size = root.find('size')
    width = int(size.find('width').text)
    height = int(size.find('height').text)

    if len(root.findall('object')) < 1:
        return 0, 0

    for obj in root.iter('object'):
        order = obj.find('order')
        name = obj.find('name')
        bndbox = obj.find('bndbox')
        # xmin / ymin hold the ';'-separated x and y coordinates of the polygon
        box_xs = bndbox.find('xmin').text.strip().split(';')
        box_ys = bndbox.find('ymin').text.strip().split(';')
        if len(box_xs) != len(box_ys):
            raise ValueError("box_xs != box_ys in {}".format(xml_file))
        contour = [Point(int(x), int(y)) for x, y in zip(box_xs, box_ys)]
        # <order> is assumed to hold an integer drawing order
        res.append({'name': name.text,
                    'order': int(order.text),
                    'coordinates': contour})

    # draw polygons in ascending order, so later ones overwrite earlier ones
    res.sort(key=lambda k: k['order'])
    return width, height

if __name__ == "__main__":
    # Folder with the annotation XML files and output folder for the label PNGs.
    path = "G:\\tensorflow\\datas\\deeplab\\tsari\\fenge_night\\labels\\"
    outputpath = "G:\\tensorflow\\datas\\deeplab\\tsari\\fenge_night\\train\\"

    xmlfiles = os.listdir(path)
    mkdir(outputpath)

    for xmlfile in xmlfiles:
        print(xmlfile)
        # For instance images, call xml2instanceImg(path + xmlfile, 'ids')
        # and use the suffix "_instanceIds.png" instead.
        colormask = xml2labelImg(path + xmlfile, 'trainIds')
        # xml2labelImg returns -1 (no objects) or None (unknown encoding) on failure
        if not isinstance(colormask, Image.Image):
            continue
        # The suffix has to match _POSTFIX_MAP['label'] in build_cityscapes_data.py;
        # the match is case-sensitive on case-sensitive filesystems such as Linux.
        colormaskname = xmlfile.strip().split('.xml')[0] + "_gtFine_labeltrainIds.png"
        colormask.save(outputpath + colormaskname)
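A quick sanity check on the generated masks can catch labeling mistakes before the TFRecord conversion. The sketch below assumes ten classes with trainIds 0 to 9 and 255 as the value for 'unlabeled' (the default in the Cityscapes labels.py); the helper name `unexpected_label_values` is hypothetical and the pixel lists are toy data.

```python
def unexpected_label_values(pixel_values, num_classes=10, ignore_label=255):
    """Return the sorted pixel values that are neither a class id nor the ignore label."""
    allowed = set(range(num_classes)) | {ignore_label}
    return sorted(set(pixel_values) - allowed)


# Toy data standing in for the pixel values of generated masks.
clean_mask = [0, 0, 1, 9, 255, 255, 3]
broken_mask = [0, 10, 200, 255]

print(unexpected_label_values(clean_mask))
print(unexpected_label_values(broken_mask))
```

For a real mask, the distinct values of an "L" mode image can be collected with Pillow, for example `[value for _, value in Image.open(path).getcolors()]`, and passed to the function.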

Running the script produces the label images needed for training.

Then arrange the data in the following directory layout:

+ datasets
    + cityscapes
        + leftImg8bit
            + train
                + fengetu1
                    1_train_leftImg8bit.png
                    *_leftImg8bit.png
                    ......
                + fengetu2
                    2_train_leftImg8bit.png
                    *_leftImg8bit.png
                    ......
                .....
            + test
                + fengetu1
                    1_test_leftImg8bit.png
                    *_test_leftImg8bit.png
                    ......
                + fengetu2
                    2_test_leftImg8bit.png
                    *_test_leftImg8bit.png
                    ......
                .....
            + val
                + fengetu1
                    1_test_leftImg8bit.png
                    *_test_leftImg8bit.png
                    ......
                + fengetu2
                    2_test_leftImg8bit.png
                    *_test_leftImg8bit.png
                    ......
                .....
        + gtFine
            + train
                + fengetu1
                    1_train_gtFine_labeltrainIds.png
                    *_gtFine_labeltrainIds.png
                    ......
                + fengetu2
                    2_train_gtFine_labeltrainIds.png
                    *_gtFine_labeltrainIds.png
                    ......
                .....
            + test
                + fengetu1
                    1_test_gtFine_labeltrainIds.png
                    *_test_gtFine_labeltrainIds.png
                    ......
                + fengetu2
                    2_test_gtFine_labeltrainIds.png
                    *_test_gtFine_labeltrainIds.png
                    ......
                .....
            + val
                + fengetu1
                    1_test_gtFine_labeltrainIds.png
                    *_test_gtFine_labeltrainIds.png
                    ......
                + fengetu2
                    2_test_gtFine_labeltrainIds.png
                    *_test_gtFine_labeltrainIds.png
                    ......
                .....

Then run:

python datasets/build_cityscapes_data.py --cityscapes_root=datasets/cityscapes/ --output_dir=datasets/cityscapes/tfrecord/
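Before running this command, it can help to confirm that every image has a matching label file. The sketch below pairs files using the folder names and suffixes from _FOLDERS_MAP and _POSTFIX_MAP quoted in this post; the helper names `expected_label_path` and `missing_labels` are hypothetical. The comparison is case-sensitive, so a label saved as "_gtFine_labeltrainIds.png" is reported as missing when the script expects "_gtFine_labelTrainIds".

```python
import os

# Folder names and suffixes as in _FOLDERS_MAP / _POSTFIX_MAP.
IMAGE_FOLDER, LABEL_FOLDER = 'leftImg8bit', 'gtFine'
IMAGE_POSTFIX, LABEL_POSTFIX = '_leftImg8bit', '_gtFine_labelTrainIds'


def expected_label_path(image_path):
    """Map .../leftImg8bit/<split>/<city>/<name>_leftImg8bit.png to its label path."""
    directory, filename = os.path.split(image_path)
    stem, ext = os.path.splitext(filename)
    if not stem.endswith(IMAGE_POSTFIX):
        raise ValueError('not a %s file: %s' % (IMAGE_POSTFIX, image_path))
    label_name = stem[:-len(IMAGE_POSTFIX)] + LABEL_POSTFIX + ext
    parts = directory.split(os.sep)
    # Swap the last path component named 'leftImg8bit' for 'gtFine'.
    idx = len(parts) - 1 - parts[::-1].index(IMAGE_FOLDER)
    parts[idx] = LABEL_FOLDER
    return os.path.join(os.sep.join(parts), label_name)


def missing_labels(cityscapes_root, split):
    """List images under <root>/leftImg8bit/<split>/*/ that lack a label file."""
    missing = []
    split_dir = os.path.join(cityscapes_root, IMAGE_FOLDER, split)
    for city in sorted(os.listdir(split_dir)):
        city_dir = os.path.join(split_dir, city)
        for name in sorted(os.listdir(city_dir)):
            if name.endswith(IMAGE_POSTFIX + '.png'):
                image_path = os.path.join(city_dir, name)
                if not os.path.isfile(expected_label_path(image_path)):
                    missing.append(image_path)
    return missing


example = os.path.join('datasets', 'cityscapes', 'leftImg8bit',
                       'train', 'fengetu1', '1_train_leftImg8bit.png')
print(expected_label_path(example))
```

Calling `missing_labels('datasets/cityscapes', 'train')` on the layout above would return an empty list when all pairs are in place.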

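Note: the parameter _NUM_SHARDS in this script is set to 10 here (my dataset has 10 classes in total, as listed in the data section above). Strictly speaking, _NUM_SHARDS controls how many TFRecord shard files each split is written into rather than the number of classes, so it does not have to match the class count: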

_NUM_SHARDS = 10

# A map from data type to folder name that saves the data.
_FOLDERS_MAP = {
    'image': 'leftImg8bit',
    'label': 'gtFine',
}

# A map from data type to filename postfix.
_POSTFIX_MAP = {
    'image': '_leftImg8bit',
    'label': '_gtFine_labelTrainIds',
}

# A map from data type to data format.
_DATA_FORMAT_MAP = {
    'image': 'png',
    'label': 'png',
}

Running the command then writes the TFRecord files to the output directory.
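To make the role of _NUM_SHARDS concrete, the sketch below mirrors how a split can be divided into shard files. The ceil-based split and the file name pattern are my recollection of build_cityscapes_data.py and should be treated as assumptions; the helper name `shard_plan` is hypothetical and the image count of 1000 is only an illustrative number.

```python
import math

_NUM_SHARDS = 10


def shard_plan(dataset_split, num_images, num_shards=_NUM_SHARDS):
    """Return [(filename, start_idx, end_idx), ...] for one dataset split."""
    num_per_shard = int(math.ceil(num_images / float(num_shards)))
    plan = []
    for shard_id in range(num_shards):
        start_idx = shard_id * num_per_shard
        end_idx = min((shard_id + 1) * num_per_shard, num_images)
        # Assumed naming pattern: <split>-<shard>-of-<total>.tfrecord
        filename = '%s-%05d-of-%05d.tfrecord' % (dataset_split, shard_id, num_shards)
        plan.append((filename, start_idx, end_idx))
    return plan


plan = shard_plan('train', 1000)
for filename, start_idx, end_idx in plan[:2]:
    print(filename, start_idx, end_idx)
```

With these numbers every shard holds 100 images; changing the number of classes has no effect on the plan.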


II. Training

Once the training data is ready, start training by running:

CUDA_VISIBLE_DEVICES=0,2,3,4,5 ~/anaconda3/bin/python deeplab/train.py --logtostderr --training_number_of_steps=50000 --train_split="train" --model_variant="mobilenet_v2" --train_crop_size=361 --train_crop_size=361 --train_batch_size=10 --dataset="cityscapes" --train_logdir=./deeplab/output/mobilenet_v2_tsari_361_10_weights --dataset_dir=datasets/cityscapes/tfrecord/ --tf_initial_checkpoint=deeplabv3_mnv2_cityscapes_train/model.ckpt --num_clones=5 --fine_tune_batch_norm=False --output_stride=16
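The batch is split evenly across the clones, so the batch size has to be divisible by num_clones, and in this command one clone runs on each visible GPU. The sketch below checks both conditions for the values used above; the helper names are hypothetical and the one-clone-per-GPU mapping is an assumption about this particular setup.

```python
def per_clone_batch_size(train_batch_size, num_clones):
    """Return the number of images each clone processes per step."""
    if train_batch_size % num_clones != 0:
        raise ValueError('train_batch_size must be divisible by num_clones')
    return train_batch_size // num_clones


def visible_gpu_count(cuda_visible_devices):
    """Count the device ids in a CUDA_VISIBLE_DEVICES string."""
    return len([d for d in cuda_visible_devices.split(',') if d.strip()])


clone_batch = per_clone_batch_size(train_batch_size=10, num_clones=5)
gpu_count = visible_gpu_count('0,2,3,4,5')
print(clone_batch, gpu_count)
```

Here each of the five GPUs processes two images per step.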

When the class distribution is imbalanced, the classes can be rebalanced by adjusting the label weights in deeplab/utils/train_utils.py:

def add_softmax_cross_entropy_loss_for_each_scale(...):  # arguments unchanged
    ......
    ignore_weight = 0
    label0_weight = 1
    label1_weight = 10
    label2_weight = 10
    label3_weight = 50
    label4_weight = 10
    label5_weight = 10
    label6_weight = 10
    label7_weight = 50
    label8_weight = 10
    label9_weight = 1
    # per-pixel loss weight: the weight of the pixel's class, 0 for ignored pixels
    not_ignore_mask = tf.to_float(tf.equal(scaled_labels, 0)) * label0_weight + \
        tf.to_float(tf.equal(scaled_labels, 1)) * label1_weight + \
        tf.to_float(tf.equal(scaled_labels, 2)) * label2_weight + \
        tf.to_float(tf.equal(scaled_labels, 3)) * label3_weight + \
        tf.to_float(tf.equal(scaled_labels, 4)) * label4_weight + \
        tf.to_float(tf.equal(scaled_labels, 5)) * label5_weight + \
        tf.to_float(tf.equal(scaled_labels, 6)) * label6_weight + \
        tf.to_float(tf.equal(scaled_labels, 7)) * label7_weight + \
        tf.to_float(tf.equal(scaled_labels, 8)) * label8_weight + \
        tf.to_float(tf.equal(scaled_labels, 9)) * label9_weight + \
        tf.to_float(tf.equal(scaled_labels, ignore_label)) * ignore_weight
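The weighted mask above amounts to a per-pixel table lookup. The plain-Python sketch below reproduces that logic on a toy label map so the effect of the weights is easy to inspect; it is an illustration only, not a replacement for the TensorFlow code. The value 255 for the ignore label is an assumption, and the helper name `pixel_weights` is hypothetical.

```python
IGNORE_LABEL = 255  # assumed ignore label

# Same weights as label0_weight ... label9_weight above.
CLASS_WEIGHTS = {0: 1, 1: 10, 2: 10, 3: 50, 4: 10,
                 5: 10, 6: 10, 7: 50, 8: 10, 9: 1}


def pixel_weights(label_map, class_weights=CLASS_WEIGHTS):
    """Return the loss weight of every pixel in a 2-D list of labels.

    Labels without an entry in class_weights, including the ignore label,
    get weight 0, which matches the sum of masked terms in the TF code.
    """
    return [[float(class_weights.get(label, 0)) for label in row]
            for row in label_map]


toy_labels = [[0, 3, IGNORE_LABEL],
              [7, 9, 1]]
weights = pixel_weights(toy_labels)
print(weights)
```

A pixel of class 3 or 7 therefore contributes fifty times as much to the loss as a pixel of class 0 or 9, and ignored pixels contribute nothing.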

Notes on the training arguments:

1. # When fine_tune_batch_norm=True, use at least batch size larger than 12
   # (batch size more than 16 is better). Otherwise, one could use smaller batch
   # size and set fine_tune_batch_norm=False.

2. # For `xception_65`, use atrous_rates = [12, 24, 36] if output_stride = 8, or
   # rates = [6, 12, 18] if output_stride = 16. For `mobilenet_v2`, use None. Note
   # one could use different atrous_rates/output_stride during training/evaluation.

3. # When using 'mobilenet_v2', we set atrous_rates = decoder_output_stride = None.
   # When using 'xception_65' or 'resnet_v1' model variants, we set
   # atrous_rates = [6, 12, 18] (output stride 16) and decoder_output_stride = 4.
   # See core/feature_extractor.py for supported model variants.

4. Training mobilenet_v2 requires setting output_stride=16.
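The recommendations in notes 2 and 3 can be collected in a small lookup. The sketch below only encodes the combinations quoted above for `mobilenet_v2` and `xception_65`; the function name `suggested_atrous_rates` is hypothetical and not part of DeepLab.

```python
def suggested_atrous_rates(model_variant, output_stride):
    """Return the atrous_rates recommended in the notes, or None for mobilenet_v2."""
    if model_variant == 'mobilenet_v2':
        # no atrous_rates are set for mobilenet_v2
        return None
    if model_variant == 'xception_65':
        rates_by_stride = {8: [12, 24, 36], 16: [6, 12, 18]}
        if output_stride not in rates_by_stride:
            raise ValueError('no recommendation for output_stride=%r' % output_stride)
        return rates_by_stride[output_stride]
    raise ValueError('no recommendation recorded for %r' % model_variant)


print(suggested_atrous_rates('mobilenet_v2', 16))
print(suggested_atrous_rates('xception_65', 8))
print(suggested_atrous_rates('xception_65', 16))
```

For the training command in this post (mobilenet_v2, output_stride=16) the lookup returns None, which is why no atrous rates are passed on the command line.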

III. Model conversion

CUDA_VISIBLE_DEVICES=2 ~/anaconda3/bin/python deeplab/export_model.py --checkpoint_path=deeplab/output/mobilenet_v2_tsari_361_10_weights/model.ckpt-50000 --export_path=deeplab/output/mobilenet_v2_tsari_361_10_weights/frozen_inference_graph.pb --num_classes=10 --model_variant="mobilenet_v2"

IV. Model testing

On a GTX 1080 Ti, MobileNetV2 + DeepLabv3 processes 361×361 images at 29 ms per frame:

CUDA_VISIBLE_DEVICES=0 ~/anaconda3/bin/python deeplab_demo.py output/mobilenet_v2_tsari_361_10_weights/ /media/harddisk/cuicui_data/val_imgs/dayimgs/ result/day_1_6.17/1/ result/day_1_6.17/2/
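A per-frame figure like the one above is usually an average over many frames, taken after a few warm-up runs. The sketch below shows one way to measure it under that assumption; `mean_latency_ms` is a hypothetical helper, and `fake_inference` is a stand-in for the real model call, which is not shown in this post.

```python
import time


def mean_latency_ms(run_inference, frames, warmup=2):
    """Average wall-clock time per frame in milliseconds, excluding warm-up runs."""
    frames = list(frames)
    # The first calls are typically slower (graph setup, memory allocation).
    for frame in frames[:warmup]:
        run_inference(frame)
    timed = frames[warmup:]
    if not timed:
        raise ValueError('need more frames than warm-up runs')
    start = time.perf_counter()
    for frame in timed:
        run_inference(frame)
    return (time.perf_counter() - start) * 1000.0 / len(timed)


def fake_inference(frame):
    """Placeholder for the real network call; just sleeps briefly."""
    time.sleep(0.001)
    return frame


latency = mean_latency_ms(fake_inference, range(12))
print('%.2f ms per frame' % latency)
```

To time the real network, `fake_inference` would be replaced by a function that runs one image through the exported frozen graph.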