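Deploying a Kubernetes Cluster on CentOS 7.3

Date: November 21, 2017

1. Environment Overview and Preparation

1.1 Physical Machine Operating System

The physical machines run 64-bit CentOS 7.3; the details are shown below.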

[root@localhost ~]# uname -a

Linux localhost.localdomain 3.10.0-514.6.1.el7.x86_64 #1 SMP Wed Jan 18 13:06:36 UTC 2017 x86_64 x86_64 x86_64 GNU/Linux

[root@localhost ~]# cat /etc/redhat-release

CentOS Linux release 7.3.1611 (Core)

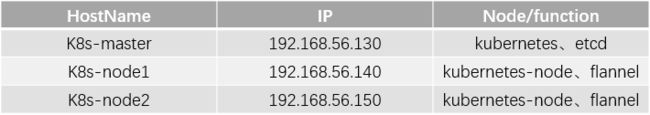

1.2 Host Information

Three machines are used to deploy the k8s environment described here:

k8s-master 192.168.56.130 (master)

k8s-node1 192.168.56.140 (node)

k8s-node2 192.168.56.150 (node)

1.3 Environment Preparation

1.3.1 Set the Hostnames

Master:

[root@localhost ~]# hostnamectl --static set-hostname k8s-master

Node1:

[root@localhost ~]# hostnamectl --static set-hostname k8s-node1

Node2:

[root@localhost ~]# hostnamectl --static set-hostname k8s-node2

1.3.2 Install docker and iptables

yum install docker iptables-services.x86_64 -y

1.3.3 Disable the default firewalld, start iptables, and flush the default rules

systemctl stop firewalld

systemctl disable firewalld

systemctl start iptables

systemctl enable iptables

iptables -F

service iptables save

1.3.4 Start docker and enable it at boot

systemctl start docker

systemctl enable docker

2. K8S Cluster Deployment

MASTER

2.1 Install kubernetes and etcd on the master

[root@k8s-master ~]# yum install kubernetes etcd -y

2.2 Configuration

etcd

# Edit

[root@k8s-master ~]# cd /etc/etcd/

[root@k8s-master etcd]# vim etcd.conf

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.56.130:2379"
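
The same kind of edit can be scripted instead of made by hand in vim. The following is a minimal sketch, assuming GNU sed as shipped with CentOS; `set_conf_value` is a hypothetical helper name used only for illustration, not something provided by etcd or Kubernetes.

```shell
# set_conf_value FILE KEY VALUE
# Hypothetical helper: rewrite the KEY="..." line of a sysconfig-style file
# (whether it is commented out or not), or append the line if the key is absent.
# Assumes VALUE contains no '|' or '&', which holds for the URLs used here.
set_conf_value() {
    conf_file="$1"; conf_key="$2"; conf_value="$3"
    if grep -qE "^#?[[:space:]]*${conf_key}=" "$conf_file"; then
        # Key exists: replace the whole line with the new assignment.
        sed -i -E "s|^#?[[:space:]]*${conf_key}=.*|${conf_key}=\"${conf_value}\"|" "$conf_file"
    else
        # Key missing: append it at the end of the file.
        printf '%s="%s"\n' "$conf_key" "$conf_value" >> "$conf_file"
    fi
}

# Example (run as root on the master):
#   set_conf_value /etc/etcd/etcd.conf ETCD_LISTEN_CLIENT_URLS "http://0.0.0.0:2379"
```

Because the function only touches the file passed to it, it can be tried on a copy of etcd.conf before being run against /etc/etcd/etcd.conf.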

# Start etcd and enable it at boot

[root@k8s-master etcd]# systemctl start etcd

[root@k8s-master etcd]# systemctl enable etcd

kubernetes

# Edit

[root@k8s-master ~]# cd /etc/kubernetes/

[root@k8s-master kubernetes]# ll

total 24

-rw-r--r-- 1 root root 767 Jul 3 23:33 apiserver # needs configuration

-rw-r--r-- 1 root root 655 Jul 3 23:33 config # needs configuration

-rw-r--r-- 1 root root 189 Jul 3 23:33 controller-manager # needs configuration

-rw-r--r-- 1 root root 615 Jul 3 23:33 kubelet

-rw-r--r-- 1 root root 103 Jul 3 23:33 proxy

-rw-r--r-- 1 root root 111 Jul 3 23:33 scheduler # needs configuration

# Configure config

[root@k8s-master kubernetes]# vim config

KUBE_MASTER="--master=http://192.168.56.130:8080"

# apiserver

[root@k8s-master kubernetes]# vim apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_API_PORT="--port=8080"

KUBELET_PORT="--kubelet-port=10250"

KUBE_ETCD_SERVERS="--etcd-servers=http://127.0.0.1:2379"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"

#controller-manager

[root@k8s-master kubernetes]# vim controller-manager

KUBELET_ADDRESSES="--machines=192.168.56.140,192.168.56.150" # add this setting

Start the services and enable them at boot

[root@k8s-master ~]# systemctl list-unit-files |grep kube

kube-apiserver.service disabled # must be started

kube-controller-manager.service disabled # must be started

kube-proxy.service disabled

kube-scheduler.service disabled # must be started

kubelet.service disabled

# Start

[root@k8s-master ~]# systemctl start kube-apiserver.service kube-controller-manager.service kube-scheduler.service

# Check that the services are running

[root@k8s-master ~]# systemctl is-active kube-apiserver.service kube-controller-manager.service kube-scheduler.service

active

active

active

# Enable at boot

[root@k8s-master ~]# systemctl enable kube-apiserver.service kube-controller-manager.service kube-scheduler.service

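Note: the start order matters. Start etcd first, then the Kubernetes services (etcd --> kube-*), because kube-apiserver stores its state in etcd.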
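
The start order on the master can be captured in a small script. The sketch below is one possible way to do it; `start_in_order` and the `SYSTEMCTL` override variable are hypothetical names introduced for illustration, and the unit names are the ones listed above.

```shell
# Command used to control units; override it (e.g. SYSTEMCTL=echo) for a dry run.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

# start_in_order UNIT...
# Start and enable each unit in the order given, stopping at the first failure.
start_in_order() {
    for unit in "$@"; do
        "$SYSTEMCTL" start "$unit" || { echo "failed to start $unit" >&2; return 1; }
        "$SYSTEMCTL" enable "$unit" || { echo "failed to enable $unit" >&2; return 1; }
    done
}

# Example (run as root on the master):
#   start_in_order etcd kube-apiserver kube-controller-manager kube-scheduler
```

Setting `SYSTEMCTL=echo` before calling the function prints the commands instead of running them, which is a cheap way to check the order first.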

SLAVE (both nodes are configured the same way)

2.3 Install kubernetes-node on the slaves

yum install kubernetes-node.x86_64 flannel -y

2.4 Slave configuration

[root@k8s-node1 ~]# cd /etc/kubernetes/

[root@k8s-node1 kubernetes]# ll

total 12

-rw-r--r-- 1 root root 655 Jul 3 23:33 config # needs configuration

-rw-r--r-- 1 root root 615 Jul 3 23:33 kubelet # needs configuration

-rw-r--r-- 1 root root 103 Jul 3 23:33 proxy

#config

[root@k8s-node1 kubernetes]# vim config

KUBE_MASTER="--master=http://192.168.56.130:8080"

# kubelet

[root@k8s-node1 kubernetes]# vim kubelet

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_PORT="--port=10250"

KUBELET_HOSTNAME="--hostname-override=192.168.56.140"

KUBELET_API_SERVER="--api-servers=http://192.168.56.130:8080"
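
Of these kubelet settings, only the `--hostname-override` value differs from node to node. As a rough sketch, the four lines can be generated per node; `render_kubelet_settings` is a hypothetical helper, and using 192.168.56.150 for k8s-node2 is an assumption based on the second address in the `--machines` list.

```shell
# render_kubelet_settings NODE_IP MASTER_IP
# Print the four kubelet settings for one node.
# NODE_IP is the node's own address; MASTER_IP is the apiserver host.
render_kubelet_settings() {
    node_ip="$1"; master_ip="$2"
    cat <<EOF
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=${node_ip}"
KUBELET_API_SERVER="--api-servers=http://${master_ip}:8080"
EOF
}

# Example for the second node (address assumed, see above):
#   render_kubelet_settings 192.168.56.150 192.168.56.130
```

The output is meant to be reviewed and then merged into /etc/kubernetes/kubelet by hand; the function itself writes nothing to disk.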

Start the services and enable them at boot

[root@k8s-node1 ~]# systemctl list-unit-files |grep kube

kube-proxy.service disabled

kubelet.service disabled

[root@k8s-node1 ~]# systemctl start kube-proxy.service kubelet.service

[root@k8s-node1 ~]# systemctl is-active kube-proxy.service kubelet.service

active

active

[root@k8s-node1 ~]# systemctl enable kube-proxy.service kubelet.service

# flannel configuration

[root@k8s-node1 kubernetes]# cd /etc/sysconfig/

[root@k8s-node1 sysconfig]# vim flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.56.130:2379"

Note: flanneld cannot start until etcd holds the network configuration it reads, so that configuration has to be created in etcd first.

On the master, create the network configuration flannel needs:

[root@k8s-master ~]# etcdctl set /atomic.io/network/config '{ "Network": "172.17.0.0/16" }'

{ "Network": "172.17.0.0/16" }

Then start flanneld on each node and enable it at boot:

systemctl start flanneld.service

systemctl enable flanneld.service

# Check flannel status

[root@k8s-node1 sysconfig]# systemctl is-active flanneld.service

active
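
Once flanneld is active on a node, it records the subnet it leased from the 172.17.0.0/16 range in an environment file. The sketch below reads that value back as a quick sanity check. It assumes the file is at flannel's usual default location, /run/flannel/subnet.env, and `flannel_subnet` is a hypothetical helper name.

```shell
# flannel_subnet [FILE]
# Print the FLANNEL_SUBNET value from flannel's environment file.
# The default path is an assumption (flannel's usual location).
flannel_subnet() {
    env_file="${1:-/run/flannel/subnet.env}"
    [ -r "$env_file" ] || { echo "cannot read $env_file" >&2; return 1; }
    # Print only the value after "FLANNEL_SUBNET=".
    sed -n 's/^FLANNEL_SUBNET=//p' "$env_file"
}

# Example (on a node, after flanneld is active):
#   flannel_subnet
```

If docker was started before flanneld, it usually has to be restarted (`systemctl restart docker`) before the docker0 bridge moves into the leased subnet.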

2.5 集群检查

[root@k8s-master ~]# kubectl get node

NAME STATUS AGE

192.168.56.140 Ready 56m

192.168.56.150 Ready 54m
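
The check above can also be automated, for example in a provisioning script that should only continue once both nodes have registered. The following is a minimal sketch; `count_ready_nodes` is a hypothetical helper, and it assumes the three-column NAME/STATUS/AGE output shown above.

```shell
# count_ready_nodes
# Read `kubectl get node` output on stdin and print how many nodes
# have a STATUS of exactly "Ready" (the header line is skipped).
count_ready_nodes() {
    awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Example (on the master):
#   kubectl get node | count_ready_nodes
```

The match is deliberately exact, so a node reported as NotReady or with a compound status is not counted.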

The Kubernetes cluster is now configured!