VGGNet

- Receptive field
- Network structure
- Code
  - model.py
  - train.py
  - predict.py
- Other version
  - model.py
  - train.py
  - predict.py

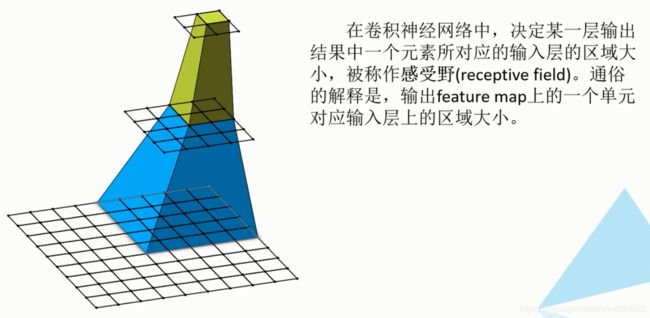

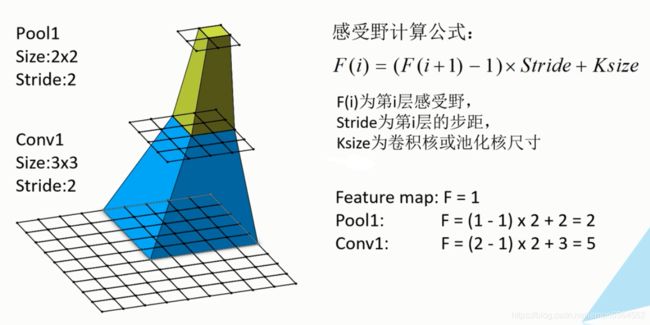

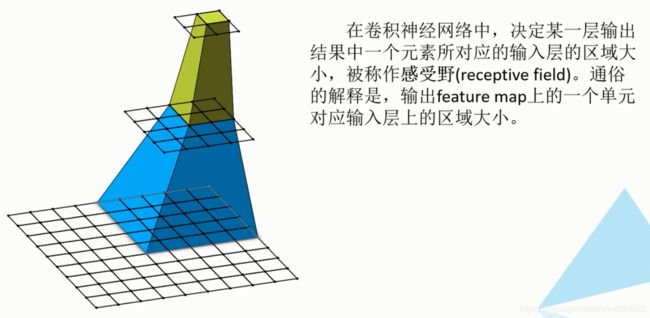

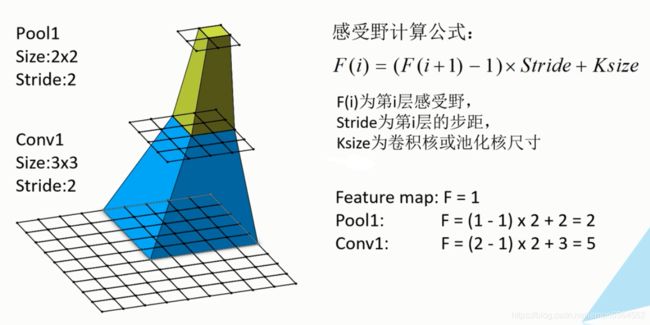

Receptive field

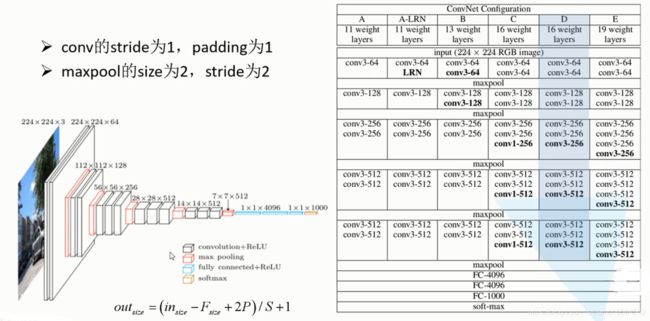

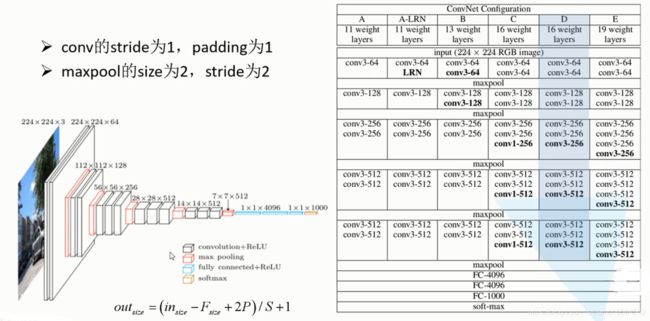
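The receptive-field idea behind VGG can be checked with a little arithmetic. Each stride-1 3x3 convolution widens the receptive field by 2 pixels, so two stacked 3x3 layers cover 5x5 and three cover 7x7, while using fewer weights than a single 5x5 or 7x7 kernel. The sketch below is a minimal plain-Python illustration, not code from this post. `receptive_field` and `conv_weights` are hypothetical helper names. The weight count ignores biases and assumes C input and C output channels per layer.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of a stack of (kernel, stride) layers."""
    rf, jump = 1, 1  # jump = input-pixel distance between adjacent outputs
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


def conv_weights(kernel, channels, n_layers=1):
    """Weights (no biases) of n_layers convs, each with `channels` in and out."""
    return n_layers * kernel * kernel * channels * channels


print(receptive_field([(3, 1)] * 2))  # 5, same as one 5x5 conv
print(receptive_field([(3, 1)] * 3))  # 7, same as one 7x7 conv

C = 512
print(conv_weights(3, C, n_layers=3))  # 27 * C^2 = 7077888
print(conv_weights(7, C))              # 49 * C^2 = 12845056
```

Three 3x3 layers therefore need 27C² weights, compared with 49C² for one 7x7 layer. They also apply three ReLUs instead of one.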

Network structure
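The feature-map sizes in this network can be traced by hand. Every 3x3 convolution here uses stride 1 and padding 1, so it keeps H and W unchanged. Every 2x2 max-pool with stride 2 halves them. The plain-Python sketch below follows a 224x224 input through the five pooling stages of VGG16. It uses a hypothetical `trace_shapes` helper, and the configuration list is copied from the `cfgs` dictionary in the "Other version" model.py. The trace ends at the 512 x 7 x 7 map that the classifier flattens into 512 * 7 * 7 = 25088 features.

```python
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def trace_shapes(cfg, size=224, in_channels=3):
    """Return the (C, H, W) shape after every layer in a VGG-style cfg list."""
    shapes = [(in_channels, size, size)]
    channels = in_channels
    for v in cfg:
        if v == "M":
            size //= 2    # MaxPool2d(2, 2): floor(H / 2)
        else:
            channels = v  # Conv2d(k=3, s=1, p=1): H and W unchanged
        shapes.append((channels, size, size))
    return shapes


shapes = trace_shapes(VGG16_CFG)
c, h, w = shapes[-1]
print(shapes[-1], c * h * w)  # (512, 7, 7) 25088
```

Neither model version below has an adaptive pooling layer, and the first `nn.Linear` expects exactly 512 * 7 * 7 inputs. Any input size that does not reduce to 7x7 after five poolings would raise a shape-mismatch error. This is why both scripts resize or crop to 224.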

Code

model.py

```python
import torch
import torch.nn as nn


class VGG16(nn.Module):
    """VGG16 with a reduced (2048-unit) classifier; expects 3 x 224 x 224 inputs."""

    def __init__(self, num__class):
        super(VGG16, self).__init__()
        # 13 conv layers (3x3, stride 1, padding 1) in 5 blocks, each block
        # ending in 2x2 max-pooling: 224 -> 112 -> 56 -> 28 -> 14 -> 7
        self.features = nn.Sequential(
            # block 1
            nn.Conv2d(3, 64, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2),
            # block 2
            nn.Conv2d(64, 128, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.Conv2d(128, 128, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2),
            # block 3
            nn.Conv2d(128, 256, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2),
            # block 4
            nn.Conv2d(256, 512, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2),
            # block 5
            nn.Conv2d(512, 512, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, 3, 1, 1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2),
        )
        # 3 fully connected layers; 512 * 7 * 7 is the flattened size of the
        # last feature map for a 224 x 224 input
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5),
            nn.Linear(512 * 7 * 7, 2048),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(2048, 2048),
            nn.ReLU(inplace=True),
            nn.Linear(2048, num__class),
        )

    def forward(self, x):
        x = self.features(x)               # N x 512 x 7 x 7
        x = torch.flatten(x, start_dim=1)  # N x 25088
        x = self.classifier(x)             # N x num__class (raw logits)
        return x
```
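The parameter count of the `VGG16` class above can be checked by hand. A 3x3 convolution has in * out * 9 weights plus out biases. A `Linear` layer has in * out weights plus out biases. Pooling, ReLU and Dropout add nothing. This plain-Python sketch assumes `num__class=5`, as in train.py. `conv_params` and `linear_params` are hypothetical helpers. With PyTorch installed, `sum(p.numel() for p in VGG16(num__class=5).parameters())` should give the same total.

```python
CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
       512, 512, 512, "M", 512, 512, 512, "M"]


def conv_params(cfg, in_channels=3, kernel=3):
    """Weights + biases of all 3x3 convs described by a VGG cfg list."""
    total = 0
    for v in cfg:
        if v == "M":
            continue  # max-pooling has no parameters
        total += in_channels * v * kernel * kernel + v
        in_channels = v
    return total


def linear_params(sizes):
    """Weights + biases of a chain of Linear layers, e.g. [25088, 2048, 2048, 5]."""
    return sum(i * o + o for i, o in zip(sizes, sizes[1:]))


conv = conv_params(CFG)
fc = linear_params([512 * 7 * 7, 2048, 2048, 5])
print(conv, fc, conv + fc)  # 14714688 55588869 70303557
```

About 55.6M of the roughly 70.3M parameters sit in the classifier. The first `Linear(25088, 2048)` alone accounts for about 51.4M of them.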

train.py

```python
import json

import torch
import torchvision as tv
import torchvision.transforms as transforms

from model import VGG16

data_transform = {
    # training: random crop + horizontal flip for augmentation
    "train": transforms.Compose([
        transforms.RandomResizedCrop(224),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
    ]),
    # validation: deterministic resize only
    "val": transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
    ]),
}

train_set = tv.datasets.ImageFolder(
    root="C:/Users/14251/Desktop/workspace/VGGNet/flower_data/train",
    transform=data_transform["train"])
# validate on the held-out val folder, not on the training images
val_set = tv.datasets.ImageFolder(
    root="C:/Users/14251/Desktop/workspace/VGGNet/flower_data/val",
    transform=data_transform["val"])

train_loader = torch.utils.data.DataLoader(
    train_set, batch_size=32, shuffle=True, num_workers=0)
val_loader = torch.utils.data.DataLoader(
    val_set, batch_size=32, shuffle=False, num_workers=0)

# save the {index: class_name} mapping for predict.py
flower_list = train_set.class_to_idx
flower_dict = dict((val, key) for key, val in flower_list.items())
with open("class_indices.json", "w") as json_file:
    json_file.write(json.dumps(flower_dict, indent=4))

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
net = VGG16(num__class=5).to(device)
loss_fun = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(net.parameters(), lr=0.0002)

best_accurate = 0.0
for epoch in range(10):
    # ---- train ----
    net.train()
    running_loss = 0.0
    for step, (train_images, train_labels) in enumerate(train_loader, start=0):
        optimizer.zero_grad()
        outputs = net(train_images.to(device))
        loss = loss_fun(outputs, train_labels.to(device))
        loss.backward()
        optimizer.step()
        running_loss += loss.item()

        # text progress bar for the current epoch
        rate = (step + 1) / len(train_loader)
        a = "*" * int(rate * 50)
        b = "." * int((1 - rate) * 50)
        print("\rtrain loss: {:^3.0f}%[{}->{}]{:.3f}".format(
            int(rate * 100), a, b, loss.item()), end="")
    print()

    # ---- validate ----
    net.eval()
    accurate = 0.0
    with torch.no_grad():
        for val_images, val_labels in val_loader:
            outputs = net(val_images.to(device))
            pred = torch.max(outputs, dim=1)[1]
            accurate += (pred == val_labels.to(device)).sum().item()
    val_accurate = accurate / len(val_set)

    # keep only the weights with the best validation accuracy
    if val_accurate > best_accurate:
        best_accurate = val_accurate
        torch.save(net.state_dict(),
                   "C:/Users/14251/Desktop/workspace/VGGNet/VGG_dict.pth")

    # step is 0-based, so the number of batches is step + 1
    print('[epoch %d] train_loss: %.3f val_accuracy: %.3f' %
          (epoch + 1, running_loss / (step + 1), val_accurate))

print("Finished Training")
```
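Both training and prediction use `Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))`. `ToTensor` first scales 8-bit pixel values from [0, 255] to [0.0, 1.0]. `Normalize` then computes (x - mean) / std per channel, so with mean = std = 0.5 the network sees values in [-1, 1]. The per-value arithmetic is sketched below in plain Python. The helper names are hypothetical, and each call handles one channel value.

```python
def to_tensor_value(pixel):
    """ToTensor step: 8-bit value in [0, 255] -> float in [0.0, 1.0]."""
    return pixel / 255.0


def normalize(x, mean=0.5, std=0.5):
    """Normalize step for one channel: (x - mean) / std."""
    return (x - mean) / std


for pixel in (0, 128, 255):
    print(pixel, round(normalize(to_tensor_value(pixel)), 4))
# 0 maps to -1.0, 255 maps to 1.0, mid-grey lands close to 0
```

predict.py has to apply the same mean and std. Otherwise, at inference time the model would receive inputs on a different scale from the ones it was trained on.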

predict.py

```python
import json
import sys

import torch
import torchvision.transforms as transforms
from PIL import Image

from model import VGG16

# same preprocessing as the "val" transform used during training
transform = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])

# convert("RGB") guards against grayscale or RGBA (e.g. PNG) images
img = Image.open("C:/Users/14251/Desktop/workspace/VGGNet/test.jpg").convert("RGB")
img = transform(img)
img = img.unsqueeze(dim=0)  # add batch dimension: 1 x 3 x 224 x 224

try:
    with open("C:/Users/14251/Desktop/workspace/VGGNet/class_indices.json", "r") as json_file:
        class_indices = json.load(json_file)
except Exception as e:
    print(e)
    sys.exit(-1)

net = VGG16(num__class=5)
# map_location lets GPU-trained weights load on a CPU-only machine
net.load_state_dict(torch.load(
    "C:/Users/14251/Desktop/workspace/VGGNet/VGG_dict.pth", map_location="cpu"))
net.eval()  # disable dropout for inference

with torch.no_grad():
    output = net(img).squeeze()
    predict = torch.softmax(output, dim=0)
    predict_cla = torch.argmax(predict).item()

print(class_indices[str(predict_cla)], predict[predict_cla].item())
```
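`predict.py` looks up `class_indices[str(predict_cla)]` instead of using the integer index directly. The reason is that JSON object keys are always strings, so the integer keys written by train.py come back as `"0"`, `"1"`, and so on after `json.load`. The round trip is shown below with a hypothetical two-class mapping.

```python
import json

class_to_idx = {"daisy": 0, "dandelion": 1}  # hypothetical subset of flower classes
idx_to_class = dict((val, key) for key, val in class_to_idx.items())  # as in train.py

restored = json.loads(json.dumps(idx_to_class, indent=4))
print(restored)          # {'0': 'daisy', '1': 'dandelion'}
print(restored[str(1)])  # dandelion; restored[1] would raise KeyError
```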

Other version

model.py

```python
import sys

import torch
import torch.nn as nn


class VGG(nn.Module):
    def __init__(self, features, num_classes=1000, init_weights=False):
        super(VGG, self).__init__()
        self.features = features  # conv/pool stack built by make_features()
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5),
            nn.Linear(512 * 7 * 7, 2048),
            nn.ReLU(True),
            nn.Dropout(p=0.5),
            nn.Linear(2048, 2048),
            nn.ReLU(True),
            nn.Linear(2048, num_classes),
        )
        if init_weights:
            self._initialize_weights()

    def forward(self, x):
        x = self.features(x)               # N x 512 x 7 x 7 for a 224 x 224 input
        x = torch.flatten(x, start_dim=1)  # N x 25088
        x = self.classifier(x)
        return x

    def _initialize_weights(self):
        # Xavier-uniform weights and zero biases for conv and linear layers
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.xavier_uniform_(m.weight)
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.xavier_uniform_(m.weight)
                nn.init.constant_(m.bias, 0)


def make_features(cfg: list):
    # an int is a 3x3 conv (+ ReLU) with that many output channels;
    # "M" is a 2x2 max-pool with stride 2
    layers = []
    in_channels = 3
    for v in cfg:
        if v == "M":
            layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
        else:
            conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)
            layers += [conv2d, nn.ReLU(True)]
            in_channels = v
    return nn.Sequential(*layers)


cfgs = {
    'vgg11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'vgg19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}


def vgg(model_name="vgg16", **kwargs):
    try:
        cfg = cfgs[model_name]
    except KeyError:
        print("Warning: model name {} not in cfgs dict!".format(model_name))
        sys.exit(-1)
    model = VGG(make_features(cfg), **kwargs)
    return model
```
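The numbers in the names vgg11/13/16/19 count weight layers: the convolutions in each configuration plus the three fully connected layers of the classifier. The plain-Python check below copies the `cfgs` dictionary from model.py, and `weight_layers` is a hypothetical helper. It also confirms that every configuration has five `'M'` pooling stages. This is what lets all four variants share the same 512 * 7 * 7 classifier input.

```python
cfgs = {
    'vgg11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'vgg19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}


def weight_layers(cfg, num_fc=3):
    """Conv layers (integer entries) plus the fully connected layers."""
    return sum(1 for v in cfg if v != 'M') + num_fc


for name, cfg in cfgs.items():
    print(name, weight_layers(cfg), "pools:", cfg.count('M'))
```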

train.py

```python
import json
import os

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import transforms, datasets

from model import vgg

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(device)

data_transform = {
    "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                 transforms.RandomHorizontalFlip(),
                                 transforms.ToTensor(),
                                 transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]),
    "val": transforms.Compose([transforms.Resize((224, 224)),
                               transforms.ToTensor(),
                               transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

# dataset lives two levels above the working directory:
# <data_root>/data_set/flower_data/{train,val}/<class_name>/...
data_root = os.path.abspath(os.path.join(os.getcwd(), "../.."))
image_path = os.path.join(data_root, "data_set", "flower_data")

train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),
                                     transform=data_transform["train"])
train_num = len(train_dataset)

# write {index: class_name} so predict.py can decode the output
flower_list = train_dataset.class_to_idx
cla_dict = dict((val, key) for key, val in flower_list.items())
with open('class_indices.json', 'w') as json_file:
    json_file.write(json.dumps(cla_dict, indent=4))

batch_size = 32
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size,
                                           shuffle=True, num_workers=0)

validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),
                                        transform=data_transform["val"])
val_num = len(validate_dataset)
validate_loader = torch.utils.data.DataLoader(validate_dataset, batch_size=batch_size,
                                              shuffle=False, num_workers=0)

model_name = "vgg16"
net = vgg(model_name=model_name, num_classes=5, init_weights=True)
net.to(device)
loss_function = nn.CrossEntropyLoss()
optimizer = optim.Adam(net.parameters(), lr=0.0001)

best_acc = 0.0
save_path = './{}Net.pth'.format(model_name)
for epoch in range(30):
    # ---- train ----
    net.train()
    running_loss = 0.0
    for step, (images, labels) in enumerate(train_loader, start=0):
        optimizer.zero_grad()
        outputs = net(images.to(device))
        loss = loss_function(outputs, labels.to(device))
        loss.backward()
        optimizer.step()
        running_loss += loss.item()

        rate = (step + 1) / len(train_loader)
        a = "*" * int(rate * 50)
        b = "." * int((1 - rate) * 50)
        print("\rtrain loss: {:^3.0f}%[{}->{}]{:.3f}".format(
            int(rate * 100), a, b, loss.item()), end="")
    print()

    # ---- validate (no optimizer calls are needed here) ----
    net.eval()
    acc = 0.0
    with torch.no_grad():
        for val_images, val_labels in validate_loader:
            outputs = net(val_images.to(device))
            predict_y = torch.max(outputs, dim=1)[1]
            acc += (predict_y == val_labels.to(device)).sum().item()
    val_accurate = acc / val_num

    if val_accurate > best_acc:
        best_acc = val_accurate
        torch.save(net.state_dict(), save_path)

    # step is 0-based, so the number of batches is step + 1
    print('[epoch %d] train_loss: %.3f val_accuracy: %.3f' %
          (epoch + 1, running_loss / (step + 1), val_accurate))

print('Finished Training')
```
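Both training scripts rely on `ImageFolder`. It treats each subdirectory of `train` (or `val`) as one class and, according to the torchvision documentation, orders the class names alphabetically before assigning indices. The sketch below reproduces that mapping using only the standard library. `list_classes` is a hypothetical stand-in, not a torchvision function, and the folder names are made up for illustration. Because the indices depend only on folder names, the `train` and `val` directories should contain identical class subfolders. Otherwise the labels will not line up.

```python
import os
import tempfile


def list_classes(root):
    """Hypothetical stand-in: one class per subdirectory, indexed in sorted order."""
    classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    return {name: i for i, name in enumerate(classes)}


with tempfile.TemporaryDirectory() as data_root:
    # hypothetical class folders, created in non-alphabetical order
    for name in ("tulips", "daisy", "roses"):
        os.makedirs(os.path.join(data_root, "train", name))
    mapping = list_classes(os.path.join(data_root, "train"))

print(mapping)  # {'daisy': 0, 'roses': 1, 'tulips': 2}
```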

predict.py

```python
import json
import sys

import matplotlib.pyplot as plt
import torch
from PIL import Image
from torchvision import transforms

from model import vgg

data_transform = transforms.Compose(
    [transforms.Resize((224, 224)),
     transforms.ToTensor(),
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# load and display the image; convert to RGB in case it is grayscale or RGBA
img = Image.open("../tulip.jpg").convert("RGB")
plt.imshow(img)
img = data_transform(img)
img = torch.unsqueeze(img, dim=0)  # add batch dimension: 1 x 3 x 224 x 224

try:
    with open('./class_indices.json', 'r') as json_file:
        class_indict = json.load(json_file)
except Exception as e:
    print(e)
    sys.exit(-1)

model = vgg(model_name="vgg16", num_classes=5)
model_weight_path = "./vgg16Net.pth"
# map_location lets GPU-trained weights load on a CPU-only machine
model.load_state_dict(torch.load(model_weight_path, map_location="cpu"))
model.eval()  # disable dropout for inference

with torch.no_grad():
    output = torch.squeeze(model(img))
    predict = torch.softmax(output, dim=0)
    predict_cla = torch.argmax(predict).item()

print(class_indict[str(predict_cla)])
plt.show()
```
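Both versions of predict.py call `torch.softmax` before `torch.argmax`. Softmax is monotonic, so it never changes which class wins. Its only effect is to turn the logits into probabilities that sum to 1, and the first version prints that probability as a confidence. Below is a plain-Python sketch with hypothetical logits for the five classes. `softmax` and `argmax` are local helpers, not the torch functions.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


logits = [1.0, 3.0, 0.5, -2.0, 2.0]  # hypothetical raw network outputs
probs = softmax(logits)
pred = argmax(probs)
print(pred, round(probs[pred], 3))  # same index as argmax(logits)
```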