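Small as a sparrow, yet with all the vital organs

This post contains only about 200 lines of code, yet it touches many areas of Python:

a graphical interface, logging output to a file, proxy IPs, a timer, a database connection, exception handling, and more.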

import datetime
import sys  # needed by the Logger class, which wraps sys.stdout
import time
import uuid
from tkinter import Tk, messagebox

import easygui
import pymysql
import requests
from bs4 import BeautifulSoup
from fake_useragent import UserAgent
from pymysql import OperationalError
from requests.exceptions import ProxyError

# Create a hidden Tk root window so that messagebox dialogs can be shown.
top = Tk()
top.withdraw()

cookk = {

'Cookie': 'deviceIdRenew=1; Hm_lvt_91cf34f62b9bedb16460ca36cf192f4c=1606462198,1606544088,1606547040,1606720814; deviceId=a3f148f-05f0-42d7-b8e5-e29d1f0f1; sessionId=S_0KI4880CAX77BO85; lmvid=d10147ae8706720112b5ed4fae4735a2; lmvid.sig=aaLOZZvjSRmxcxpc4je4nRzkSN_olI3w-q2rW9zTHs4; hnUserTicket=fa6e3cd1-65c0-485a-9557-26dc9b7682ac; hnUserId=267459990; Hm_lpvt_91cf34f62b9bedb16460ca36cf192f4c=1606722342'}

headers = {
    'Host': 'www.cnhnb.com',
    'Referer': 'https://www.cnhnb.com/hangqing/cdlist-0-0-0-0-0-1/',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36',
}

def getTime():
    """Return the number of seconds from now until 03:00 tomorrow."""
    now_time = datetime.datetime.now()
    next_time = now_time + datetime.timedelta(days=1)
    # Keep tomorrow's date but fix the time of day at 03:00:00.
    next_time = next_time.replace(hour=3, minute=0, second=0, microsecond=0)
    timer_start_time = (next_time - now_time).total_seconds()
    return timer_start_time

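class Logger(object):
    """Duplicate everything written to a stream into a log file."""

    def __init__(self, filename='default.log', stream=sys.stdout):
        self.terminal = stream
        self.log = open(filename, 'a')

    def write(self, message):
        self.terminal.write(message)
        self.log.write(message)

    def flush(self):
        # Flush both targets so buffered log lines reach the file.
        self.terminal.flush()
        self.log.flush()


# From here on, every print() goes to both the console and default.log.
sys.stdout = Logger(stream=sys.stdout)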

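# Ask for the database connection settings.
(host, db, user, passwd) = easygui.multpasswordbox(
    '惠农网 price crawler', 'Python Crawl Center',
    fields=['Database host (default: local address)', 'Database name (default: zhang)',
            'Database user (default: root)',
            'Database password (default: root)'],
    values=['127.0.0.1', 'zhang', 'root', 'root'])

# Ask for the proxy list and the page range to crawl.
(ipList, start, end) = easygui.multenterbox(
    '惠农网 price crawler', 'Python Crawl Center',
    fields=['Proxies as ip:port, separated by commas (number of IPs = number of pages)',
            'First page to crawl (start page)', 'Last page to crawl (end page)'],
    values=['', '', ''])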

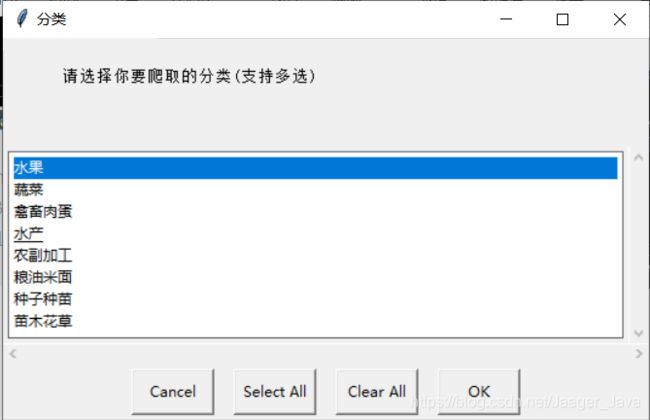

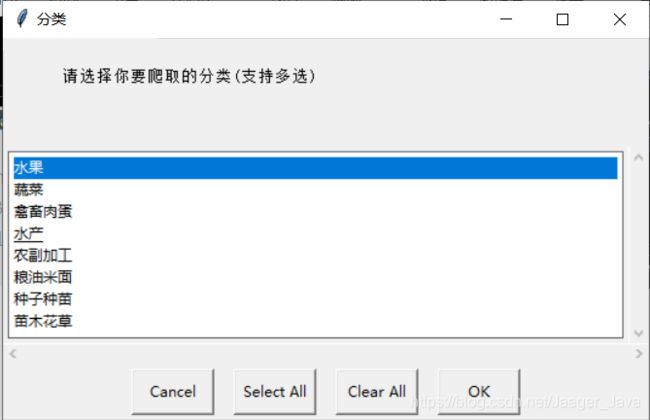

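# Categories to crawl. The names stay in Chinese because getPrice() matches on them:
# 水果 fruit, 蔬菜 vegetables, 禽畜肉蛋 poultry/livestock/meat/eggs, 水产 aquatic products,
# 农副加工 processed farm products, 粮油米面 grain/oil/rice/flour,
# 种子种苗 seeds/seedlings, 苗木花草 nursery stock/flowers.
fenlei = easygui.multchoicebox(msg="Select the categories to crawl (multiple selection supported)", title="Categories",
                               choices=("水果", "蔬菜", "禽畜肉蛋", "水产", "农副加工", "粮油米面", "种子种苗", "苗木花草"))
print(f'The proxy input is {ipList}')
bb = ipList.split(',')
# One requests-style proxy dict per address entered.
IPlILI = [{"https": "http://" + i} for i in bb]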

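def getPrice():
    # Connect to MySQL; stop early if the connection is rejected.
    try:
        conn = pymysql.connect(host=host, user=user, passwd=passwd, db=db, charset='utf8')
        cur = conn.cursor()
        messagebox.showinfo("Info", "Database connected")
        print("------Crawler started------")
    except OperationalError:
        messagebox.showwarning("Info", "The database account or password entered is incorrect")
        return
    qqqLen = int(len(bb))
    print(f'The proxy list has {qqqLen} entries')
    www = 0  # index of the proxy to use for the next page
    types = []  # category ids to crawl
    # Use a random User-Agent for this run.
    headers["User-Agent"] = UserAgent().random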

    # Translate each selected category name into the id used in the site's URLs.
    if '水果' in fenlei:
        types.append(2003191)
    if '蔬菜' in fenlei:
        types.append(2003192)
    if '禽畜肉蛋' in fenlei:
        types.append(2003193)
    if '水产' in fenlei:
        types.append(2003194)
    if '农副加工' in fenlei:
        types.append(2003195)
    if '粮油米面' in fenlei:
        types.append(2003196)
    if '种子种苗' in fenlei:
        types.append(2003197)
    if '苗木花草' in fenlei:
        types.append(2003198)
    if '中药材' in fenlei:
        types.append(2003200)

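    for inx, type in enumerate(types):
        for i in range(int(start), int(end) + 1):
            # Wrap around to the first proxy once the end of the list is reached.
            if www == qqqLen:
                print('Reached the end of the proxy list, wrapping around')
                print(www)
                print(qqqLen)
                www = 0
            uull = IPlILI[www]
            print(f'Using proxy {uull}, crawling category: {fenlei[inx]}, page: {i}')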

            try:
                resp = requests.get(f"https://www.cnhnb.com/hangqing/cdlist-{type}-0-0-0-0-{i}", proxies=uull,
                                    headers=headers, cookies=cookk, timeout=30)
                page_one = BeautifulSoup(resp.text, "html.parser")
                dd = page_one.find('div', class_='quotation-content-list').find_all('li')
            except AttributeError:
                # The list container is missing from the page, so skip this page.
                continue
            except ProxyError:
                print(f'The proxy used for page {i} could not connect')
                IPlILI.remove(uull)
                print('First proxy removal succeeded')
                # Ask the user for a replacement proxy and retry the same page.
                pp = easygui.multenterbox('Replace IP', 'Python Crawl Center',
                                          fields=['Replacement proxy (IP:port)'],
                                          values=[''])
                addUrl = {"https": "http://" + pp[0]}
                IPlILI.append(addUrl)
                print('First replacement proxy added')
                try:
                    resp = requests.get(f"https://www.cnhnb.com/hangqing/cdlist-{type}-0-0-0-0-{i}", proxies=addUrl,
                                        headers=headers, cookies=cookk, timeout=30)
                    page_one = BeautifulSoup(resp.text, "html.parser")
                    dd = page_one.find('div', class_='quotation-content-list').find_all('li')
                except ProxyError:
                    print('The newly added proxy still cannot connect')
                    IPlILI.remove(addUrl)
                    print('Second proxy removal succeeded')
                    pp = easygui.multenterbox('Replace IP (second attempt)', 'Python Crawl Center',
                                              fields=['Replacement proxy (IP:port)'],
                                              values=[''])
                    addTwoUrl = {"https": "http://" + pp[0]}
                    IPlILI.append(addTwoUrl)
                    print('Second replacement proxy added')
                    resp = requests.get(f"https://www.cnhnb.com/hangqing/cdlist-{type}-0-0-0-0-{i}", proxies=addTwoUrl,
                                        headers=headers, cookies=cookk, timeout=30)
                    page_one = BeautifulSoup(resp.text, "html.parser")
                    dd = page_one.find('div', class_='quotation-content-list').find_all('li')
            except Exception:
                # Any other failure (timeout, connection reset, ...): skip this page.
                continue
            if dd is None:
                print("No rows found, skipping this page")
                continue

            for ss in dd:
                shopDate = ss.findAll('span')[0].text.strip()
                # Keep only rows dated yesterday (only the day of the month is compared).
                if str(datetime.date.today() - datetime.timedelta(days=1))[8:10] == str(shopDate.split('-')[2]):
                    productId = str(uuid.uuid1())
                    name = ss.findAll('span')[1].text.strip()
                    pru = ss.findAll('span')[2].text.strip()
                    # The province is the first two characters of the location,
                    # or the first three when it contains 内蒙, 黑龙 or 台湾.
                    province = ss.findAll('span')[2].text.strip()[0:2]
                    if '内蒙' in str(pru) or '黑龙' in str(pru) or '台湾' in str(pru):
                        province = ss.findAll('span')[2].text.strip()[0:3]
                    print(pru)
                    # The area is whatever follows the prefecture-level suffix.
                    if '自治州' in str(pru):
                        area = str(ss.findAll('span')[2].text.split("自治州")[1])
                    elif '自治县' in str(pru):
                        area = str(ss.findAll('span')[2].text.split("自治县")[1])
                    elif '地区' in str(pru):
                        area = str(ss.findAll('span')[2].text.split("地区")[1])
                    else:
                        try:
                            area = str(ss.findAll('span')[2].text.split("市")[1])
                        except IndexError:
                            print('Unusual location with no recognised suffix')
                            # Avoid an undefined or stale value from a previous row.
                            area = ''
                    print(area)

                    # Map the category id back to the name stored in the type column.
                    if type == 2003191:
                        gry = "水果"
                    elif type == 2003192:
                        gry = "蔬菜"
                    elif type == 2003193:
                        gry = "禽畜肉蛋"
                    elif type == 2003194:
                        gry = "水产"
                    elif type == 2003195:
                        gry = "农副加工"
                    elif type == 2003196:
                        gry = "粮油米面"
                    elif type == 2003197:
                        gry = "种子种苗"
                    elif type == 2003198:
                        gry = "苗木花草"
                    else:
                        gry = "中药材"
                    # The last three characters of the price cell are treated as the unit.
                    price = ss.findAll('span')[3].text[0:-3]
                    updown = ss.findAll('span')[4].text.strip()
                    unit = ss.findAll('span')[3].text[-3:]
                    sql = "insert into p_price_data(id,name,area,price,unit,creatime,province,up_down,type) VALUES (%s,%s,%s,%s,%s,%s,%s,%s,%s)"
                    cur.execute(sql, (productId, name, area, price, unit, shopDate, province, updown, gry))

            print(f"Category {fenlei[inx]}: page {i} has been crawled")
            www += 1
            # Commit once per page, then pause before the next request.
            conn.commit()
            time.sleep(2)
        time.sleep(2)
    cur.close()
    conn.close()

if __name__ == '__main__':
    print("----------------Price crawl started----------------")
    getPrice()
    print("----------------Price crawl finished----------------")

The code is simple and the comments are detailed, with every step explained.
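The category branches in `getPrice()` could also be written as a lookup table. The following is only a sketch of that idea: the ids are copied from the script above, while the names `CATEGORY_IDS` and `selected_categories` are hypothetical and do not appear in the original code.

```python
# Category name -> category id, copied from the branches in getPrice().
CATEGORY_IDS = {
    "水果": 2003191,      # fruit
    "蔬菜": 2003192,      # vegetables
    "禽畜肉蛋": 2003193,  # poultry, livestock, meat and eggs
    "水产": 2003194,      # aquatic products
    "农副加工": 2003195,  # processed farm products
    "粮油米面": 2003196,  # grain, oil, rice and flour
    "种子种苗": 2003197,  # seeds and seedlings
    "苗木花草": 2003198,  # nursery stock and flowers
    "中药材": 2003200,    # Chinese medicinal herbs
}


def selected_categories(chosen):
    """Return (name, id) pairs for the chosen names, in table order."""
    return [(name, cid) for name, cid in CATEGORY_IDS.items() if name in chosen]


if __name__ == "__main__":
    for name, cid in selected_categories(["蔬菜", "水果"]):
        print(name, cid)
```

Keeping the name next to the id means the same table can serve both the URL and the value stored in the `type` column, so the second `if`/`elif` chain is no longer needed.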

Reading code alone is a bit dry, so run it and take a look at the results o( ̄︶ ̄)o

It is that user-friendly: when a proxy IP fails, the script prompts for a replacement and carries on.
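The proxy handling in the script (take the next proxy for each page, wrap around at the end, swap out one that fails) can be isolated into a small helper. This is a minimal sketch under assumptions: `ProxyPool` and its methods are hypothetical names, and the GUI prompt is left out so that the rotation logic can be tested on its own.

```python
class ProxyPool:
    """Round-robin pool of requests-style proxy dicts (hypothetical helper)."""

    def __init__(self, addresses):
        # Same dict shape as the script: {"https": "http://ip:port"}.
        self._proxies = [{"https": "http://" + a.strip()}
                         for a in addresses if a.strip()]
        self._next = 0

    def __len__(self):
        return len(self._proxies)

    def get(self):
        """Return the next proxy, wrapping around at the end of the list."""
        if not self._proxies:
            raise LookupError("the proxy pool is empty")
        proxy = self._proxies[self._next % len(self._proxies)]
        self._next += 1
        return proxy

    def replace(self, bad, new_address):
        """Swap a failed proxy for a new address and return the new proxy."""
        index = self._proxies.index(bad)
        self._proxies[index] = {"https": "http://" + new_address.strip()}
        return self._proxies[index]


if __name__ == "__main__":
    pool = ProxyPool("10.0.0.1:8080, 10.0.0.2:8080".split(","))
    first = pool.get()
    print(first)
    print(pool.replace(first, "10.0.0.3:8080"))
```

Replacing the failed entry in place keeps the pool size constant, which is the same effect the script gets from its `remove` followed by `append`.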

So there it is: a simple, brute-force way to crawl data from a site that has anti-crawling measures.
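The script defines `getTime()` for the timer mentioned at the top, but never calls it. One possible way to use such a delay is with `threading.Timer` from the standard library. The sketch below is an assumption about how the timer could be wired up, not the author's method; `seconds_until` and `schedule_daily` are hypothetical names.

```python
import datetime
import threading


def seconds_until(hour, now=None):
    """Seconds from `now` until `hour`:00 on the following day, like getTime()."""
    now = now or datetime.datetime.now()
    target = (now + datetime.timedelta(days=1)).replace(
        hour=hour, minute=0, second=0, microsecond=0)
    return (target - now).total_seconds()


def schedule_daily(job, hour=3):
    """Run `job` at `hour`:00 tomorrow, then re-arm the timer for the day after."""
    def run():
        try:
            job()
        finally:
            # Schedule the next run even if the job raised an exception.
            schedule_daily(job, hour)

    timer = threading.Timer(seconds_until(hour), run)
    timer.daemon = True  # do not keep the process alive just for the timer
    timer.start()
    return timer


if __name__ == "__main__":
    timer = schedule_daily(lambda: print("crawl would start here"))
    print(f"next run in {seconds_until(3):.0f} seconds")
    timer.cancel()  # cancelled here so that the demo exits immediately
```

In the real script the job would be `getPrice`, and the main thread would have to stay alive (for example inside the Tk main loop) for the timer to fire.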