Semantic Segmentation of Your Own Multi-Class Dataset with Unet in PyTorch

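Contents

- Unet Training

- Preface

- Development Environment

- I. Prepare Your Own Dataset

- II. Modify the Training Files

- III. Modify the Test File

- IV. Compute Per-Class IoU and mIoU on the Test Set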

Unet Training

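In 2015, Unet was proposed as an improvement on FCN. Unet has a simple encoder-decoder structure: the encoder extracts features, and the decoder upsamples them and fuses features from different scales through skip connections, which yields fine-grained segmentation.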
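To make the encoder-decoder idea concrete, here is a small pure-Python sketch that traces the feature-map sizes through a Unet. It assumes a common layout (padded 3x3 convolutions so only pooling changes the size, four 2x2 max-pooling steps, channels doubling from 64 to 1024, and 2x upsampling in the decoder); the linked code may differ in details, and `trace_unet_shapes` is a hypothetical helper. It shows why each decoder stage can be concatenated with the encoder map of the same size, and why, in this layout, the input side length should be divisible by 16.

```python
def trace_unet_shapes(size, depth=4, base_channels=64):
    """Trace (channels, side length) through an assumed Unet with padded convolutions.

    Returns (encoder, decoder), two lists of (channels, side_length) tuples.
    Raises ValueError when `size` cannot be halved `depth` times exactly,
    because the decoder maps would then not match the skip connections.
    """
    if size % (2 ** depth) != 0:
        raise ValueError(f"input size {size} must be divisible by {2 ** depth}")

    # Encoder: padded convs keep the size; 2x2 max pooling then halves it
    # while the number of channels doubles.
    encoder = []
    channels, side = base_channels, size
    for level in range(depth + 1):
        encoder.append((channels, side))
        if level < depth:
            side //= 2
            channels *= 2

    # Decoder: 2x upsampling doubles the size; the result is concatenated with
    # the encoder map of the same size, and convolutions reduce the channels
    # back to that level's count.
    decoder = []
    side = encoder[-1][1]
    for skip_channels, skip_side in reversed(encoder[:-1]):
        side *= 2
        if side != skip_side:  # cannot happen after the divisibility check
            raise ValueError("decoder and skip feature maps differ in size")
        decoder.append((skip_channels, side))
    return encoder, decoder
```

For example, `trace_unet_shapes(512)` (512 is the default crop size in demo.py) gives a 32x32 bottleneck with 1024 channels and ends back at 64 channels at 512x512, while `trace_unet_shapes(500)` raises `ValueError`.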

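Unet code (free download)

Preface

Unet is commonly used in medical image processing to segment lesions, and that segmentation usually involves only a single lesion class versus the background. Here, I use PyTorch to train and test Unet on the VOC2012 dataset, which has 21 classes. The process is as follows.

Development Environment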

- Ubuntu 18.04

- CUDA 10.0, cuDNN 7.5

- PyTorch 1.2

- Python 3.7

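I. Prepare Your Own Dataset

1. Label the dataset

Annotate the original images with labelme to obtain the corresponding json files.

2. Convert the json files to masks and PNG images

First, create a new folder. Inside it, put your data (the original images and their json files) together in a folder named data_annotated, write your own labels.txt, and copy labelme2voc.py in without any changes. The prepared folder looks like this: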

![]()
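labelme2voc.py assigns class ids as `i - 1` for line `i` of labels.txt and asserts that the first two lines are `__ignore__` (id -1) and `_background_` (id 0); your own classes follow, one per line. A minimal sketch that writes such a file and reproduces that mapping, useful for checking which id each class will receive; `write_labels` and `read_label_ids` are hypothetical helpers, and `cat`/`dog` below are placeholder class names.

```python
from pathlib import Path


def write_labels(path, class_names):
    """Write labels.txt in the layout that labelme2voc.py asserts on."""
    lines = ["__ignore__", "_background_", *class_names]
    Path(path).write_text("\n".join(lines) + "\n")


def read_label_ids(path):
    """Mirror labelme2voc.py: the class on line i (0-based) gets id i - 1."""
    ids = {}
    for i, line in enumerate(Path(path).read_text().splitlines()):
        ids[line.strip()] = i - 1
    return ids
```

For example, `write_labels('labels.txt', ['cat', 'dog'])` followed by `read_label_ids('labels.txt')` gives `{'__ignore__': -1, '_background_': 0, 'cat': 1, 'dog': 2}`. The class count used later in training counts `_background_` plus your own classes, just as VOC2012's 21 is 20 object classes plus background.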

labelme2voc.py is located in /labelme/examples/semantic_segmentation (download link). Since only this one file is needed, there is no need to download the repository from GitHub; here is labelme2voc.py:

```python
#!/usr/bin/env python

from __future__ import print_function

import argparse
import glob
import json
import os
import os.path as osp
import sys

import imgviz
import numpy as np
import PIL.Image

import labelme


def main():
    parser = argparse.ArgumentParser(
        formatter_class=argparse.ArgumentDefaultsHelpFormatter
    )
    parser.add_argument('input_dir', help='input annotated directory')
    parser.add_argument('output_dir', help='output dataset directory')
    parser.add_argument('--labels', help='labels file', required=True)
    parser.add_argument(
        '--noviz', help='no visualization', action='store_true'
    )
    args = parser.parse_args()

    if osp.exists(args.output_dir):
        print('Output directory already exists:', args.output_dir)
        sys.exit(1)
    os.makedirs(args.output_dir)
    os.makedirs(osp.join(args.output_dir, 'JPEGImages'))
    os.makedirs(osp.join(args.output_dir, 'SegmentationClass'))
    os.makedirs(osp.join(args.output_dir, 'SegmentationClassPNG'))
    if not args.noviz:
        os.makedirs(
            osp.join(args.output_dir, 'SegmentationClassVisualization')
        )
    print('Creating dataset:', args.output_dir)

    class_names = []
    class_name_to_id = {}
    for i, line in enumerate(open(args.labels).readlines()):
        class_id = i - 1  # starts with -1
        class_name = line.strip()
        class_name_to_id[class_name] = class_id
        if class_id == -1:
            assert class_name == '__ignore__'
            continue
        elif class_id == 0:
            assert class_name == '_background_'
        class_names.append(class_name)
    class_names = tuple(class_names)
    print('class_names:', class_names)
    out_class_names_file = osp.join(args.output_dir, 'class_names.txt')
    with open(out_class_names_file, 'w') as f:
        f.writelines('\n'.join(class_names))
    print('Saved class_names:', out_class_names_file)

    for label_file in glob.glob(osp.join(args.input_dir, '*.json')):
        print('Generating dataset from:', label_file)
        with open(label_file) as f:
            base = osp.splitext(osp.basename(label_file))[0]
            out_img_file = osp.join(
                args.output_dir, 'JPEGImages', base + '.jpg')
            out_lbl_file = osp.join(
                args.output_dir, 'SegmentationClass', base + '.npy')
            out_png_file = osp.join(
                args.output_dir, 'SegmentationClassPNG', base + '.png')
            if not args.noviz:
                out_viz_file = osp.join(
                    args.output_dir,
                    'SegmentationClassVisualization',
                    base + '.jpg',
                )

            data = json.load(f)

            img_file = osp.join(osp.dirname(label_file), data['imagePath'])
            img = np.asarray(PIL.Image.open(img_file))
            PIL.Image.fromarray(img).save(out_img_file)

            # Newer labelme releases may return a (class, instance) tuple here;
            # in that case write `lbl, _ = labelme.utils.shapes_to_label(...)`.
            lbl = labelme.utils.shapes_to_label(
                img_shape=img.shape,
                shapes=data['shapes'],
                label_name_to_value=class_name_to_id,
            )
            labelme.utils.lblsave(out_png_file, lbl)

            np.save(out_lbl_file, lbl)

            if not args.noviz:
                viz = imgviz.label2rgb(
                    label=lbl,
                    img=imgviz.rgb2gray(img),
                    font_size=15,
                    label_names=class_names,
                    loc='rb',
                )
                imgviz.io.imsave(out_viz_file, viz)


if __name__ == '__main__':
    main()
```

Finally, open a terminal in the new folder, activate the labelme virtual environment, and run:

```shell
python labelme2voc.py data_annotated data_dataset_voc --labels labels.txt
```

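This generates the data_dataset_voc folder, which contains:

JPEGImages holds the original images

SegmentationClass holds the ground truth (masks) as binary .npy files

SegmentationClassPNG holds the ground truth (masks) of the original images as PNG files

SegmentationClassVisualization holds the original images overlaid with their ground truth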

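For training, arrange the data in the VOC2012 layout, i.e. a folder with the following three subfolders (labelme2voc.py does not create ImageSets, so the split files have to be prepared separately):

ImageSets contains a subfolder Segmentation, which holds train.txt, trainval.txt and val.txt

JPEGImages holds the original images

SegmentationClass holds the ground truth (masks) of the original images, i.e. the contents of SegmentationClassPNG above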
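A minimal sketch for preparing those split files from the images in JPEGImages. The random 80/20 train/val split and the fixed seed are assumptions, and `write_splits` is a hypothetical helper, not part of the training code. As in VOC2012, each line of a split file is an image name without extension, and trainval.txt is the union of train.txt and val.txt.

```python
import random
from pathlib import Path


def write_splits(voc_root, val_ratio=0.2, seed=0):
    """Write ImageSets/Segmentation/{train,val,trainval}.txt from JPEGImages/*.jpg.

    Returns (train_names, val_names).
    """
    root = Path(voc_root)
    names = sorted(p.stem for p in (root / "JPEGImages").glob("*.jpg"))
    rng = random.Random(seed)  # fixed seed -> the same split on every run
    rng.shuffle(names)
    n_val = int(len(names) * val_ratio)
    val, train = sorted(names[:n_val]), sorted(names[n_val:])

    out_dir = root / "ImageSets" / "Segmentation"
    out_dir.mkdir(parents=True, exist_ok=True)
    splits = (("train.txt", train), ("val.txt", val), ("trainval.txt", sorted(train + val)))
    for fname, subset in splits:
        # one image name per line, without the .jpg extension
        (out_dir / fname).write_text("".join(n + "\n" for n in subset))
    return train, val
```

Call it once on the VOC-style root folder (the one that mypath.py points to) after copying JPEGImages and SegmentationClass into it.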

II. Modify the Training Files

1. Change the path in mypath.py

```python
# mypath.py
class Path(object):
    @staticmethod
    def db_root_dir(dataset):
        if dataset == 'pascal':
            # Change to your own path; this VOC2012 folder contains the three
            # folders ImageSets, JPEGImages and SegmentationClass
            return '/home/user/VOC2012/'
        elif dataset == 'cityscapes':
            return '/path/to/datasets/cityscapes/'
        elif dataset == 'coco':
            return '/path/to/datasets/coco/'
        else:
            print('Dataset {} not available.'.format(dataset))
            raise NotImplementedError
```

2. Modify dataloaders/datasets/pascal.py

```python
# dataloaders/datasets/pascal.py
from __future__ import print_function, division
import os
from PIL import Image
import numpy as np
from torch.utils.data import Dataset
from mypath import Path
from torchvision import transforms
from dataloaders import custom_transforms as tr


class VOCSegmentation(Dataset):
    """
    PascalVoc dataset
    """
    NUM_CLASSES = 21  # change to your own number of classes

    def __init__(self,
                 args,
                 base_dir=Path.db_root_dir('pascal'),
                 split='train',
                 ):
        ...  # the rest of __init__ and of the class stays unchanged
```

3. Modify dataloaders/utils.py

```python
# dataloaders/utils.py
if dataset == 'pascal' or dataset == 'coco':
    n_classes = 21  # change to your own number of classes
    label_colours = get_pascal_labels()
elif dataset == 'cityscapes':
    n_classes = 19
    label_colours = get_cityscapes_labels()
else:
    raise NotImplementedError
```

```python
# dataloaders/utils.py
import numpy as np


def get_pascal_labels():
    """Load the mapping that associates pascal classes with label colors
    Returns:
        np.ndarray with dimensions (21, 3)  (change 21 to your number of classes)
    """
    return np.asarray([[0, 0, 0], [128, 0, 0], [0, 128, 0], [128, 128, 0],
                       [0, 0, 128], [128, 0, 128], [0, 128, 128], [128, 128, 128],
                       [64, 0, 0], [192, 0, 0], [64, 128, 0], [192, 128, 0],
                       [64, 0, 128], [192, 0, 128], [64, 128, 128], [192, 128, 128],
                       [0, 64, 0], [128, 64, 0], [0, 192, 0], [128, 192, 0],
                       [0, 64, 128]])  # define one color per class for your own classes
```

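Note: for your own dataset, the class colors defined in get_pascal_labels() do not correspond to the mask colors in labelme's SegmentationClassPNG; the relevant files in the labelme virtual environment need further modification (link to the modifications).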
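If your dataset has more than 21 classes, the hard-coded list in get_pascal_labels() needs more rows. A minimal sketch, assuming you want to keep the standard PASCAL VOC palette: this bit-spreading scheme reproduces the 21 rows above exactly and extends to any class count up to 256. It does not by itself address the labelme color mismatch described in the note; `voc_colormap` is a hypothetical helper that you could call inside get_pascal_labels() as `np.asarray(voc_colormap(n_classes))`.

```python
def voc_colormap(n_classes):
    """Return the PASCAL VOC color palette as a list of [r, g, b] rows.

    The bits of each class index are spread over R, G and B, starting from
    the most significant bit, so consecutive indices get clearly different colors.
    """
    cmap = []
    for i in range(n_classes):
        r = g = b = 0
        c = i
        for j in range(8):
            r |= ((c >> 0) & 1) << (7 - j)
            g |= ((c >> 1) & 1) << (7 - j)
            b |= ((c >> 2) & 1) << (7 - j)
            c >>= 3  # move on to the next three bits of the index
        cmap.append([r, g, b])
    return cmap
```

Because the palette is generated, the number of rows always matches `n_classes`, which avoids an index error when a class id exceeds the length of a hand-written list.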

4. Modify train.py

```python
# train.py
# Define network
model = Unet(n_channels=3, n_classes=21)  # set n_classes to your own number of classes
train_params = [{'params': model.parameters(), 'lr': args.lr}]
```

Save the loss of each epoch so that the loss curve can be plotted later:

```python
# train.py (end of the per-epoch training method)
self.writer.add_scalar('train/total_loss_epoch', train_loss, epoch)
print('[Epoch: %d, numImages: %5d]' % (epoch, i * self.args.batch_size + image.data.shape[0]))
print('Loss: %.3f' % train_loss)

# Append this epoch's loss to a text file; change the path to your own
loss_save = '/home/user/U-Net/curve/loss.txt'
with open(loss_save, mode='a') as file_save:
    file_save.write('\nepoch:' + str(epoch) + ' cross entropy:' + str(train_loss))

if self.args.no_val:
    # save checkpoint every epoch
    is_best = False
    self.saver.save_checkpoint({
        'epoch': epoch + 1,
        'state_dict': self.model.module.state_dict(),
        'optimizer': self.optimizer.state_dict(),
        'best_pred': self.best_pred,
    }, is_best)
```

--gpu-ids, default='0' selects GPU 0; with multiple GPUs, set it to e.g. default='0,1,2,...'.

Set your own epochs, batch_size and lr:

```python
# train.py
# default settings for epochs, batch_size and lr
if args.epochs is None:
    epoches = {
        'coco': 30,
        'cityscapes': 200,
        'pascal': 400,  # epochs = 400; change to your own number of epochs
    }
    args.epochs = epoches[args.dataset.lower()]

if args.batch_size is None:
    args.batch_size = 2 * len(args.gpu_ids)  # training batch_size: 2x the number of GPUs

if args.test_batch_size is None:
    args.test_batch_size = args.batch_size  # test batch_size

if args.lr is None:
    lrs = {
        'coco': 0.1,
        'cityscapes': 0.01,
        'pascal': 0.05,
    }
    args.lr = lrs[args.dataset.lower()] / (2 * len(args.gpu_ids)) * args.batch_size  # learning rate
```
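The default learning rate scales linearly with the batch size: each base rate in `lrs` corresponds to 2 images per GPU. A small sketch that mirrors the defaults above outside the script, handy for checking the effective lr before a long run; `default_hparams` is a hypothetical helper, and `gpu_ids` is parsed from a comma-separated string the way the `--gpu-ids` argument is.

```python
def default_hparams(dataset, gpu_ids="0", epochs=None, batch_size=None, lr=None):
    """Mirror the default epochs / batch_size / lr logic of the train.py snippet."""
    gpus = [int(s) for s in gpu_ids.split(",")]  # e.g. '0,1' -> [0, 1]
    key = dataset.lower()
    if epochs is None:
        epochs = {"coco": 30, "cityscapes": 200, "pascal": 400}[key]
    if batch_size is None:
        batch_size = 2 * len(gpus)  # 2 images per GPU
    if lr is None:
        base_lr = {"coco": 0.1, "cityscapes": 0.01, "pascal": 0.05}[key]
        # base_lr is defined for 2 images per GPU; scale it with the real batch size
        lr = base_lr / (2 * len(gpus)) * batch_size
    return epochs, batch_size, lr
```

For example, on one GPU with the default batch_size of 2, pascal gets lr = 0.05 / 2 × 2 = 0.05; raising batch_size to 8 on the same GPU gives 0.05 / 2 × 8 = 0.2, which you may prefer to override explicitly with `--lr`.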

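Run train.py directly in PyCharm; normal training looks like this:

After each training epoch, the model is evaluated on the validation set, and the checkpoint with the best mIoU is saved; the final training results are saved in the run folder.

III. Modify the Test File

Modify demo.py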

```python
# demo.py
import argparse
import os
import time

import cv2
import numpy as np
import torch
from PIL import Image
from torchvision import transforms
from torchvision.utils import make_grid, save_image

from modeling.unet import *
from dataloaders import custom_transforms as tr
from dataloaders.utils import *


def main():
    parser = argparse.ArgumentParser(description="PyTorch Unet Testing")
    parser.add_argument('--in-path', type=str, required=True,
                        help='directory of images to test')
    parser.add_argument('--out-path', type=str, default='/home/user/U-Net/pred',
                        help='directory where the predictions are saved')
    parser.add_argument('--ckpt', type=str, default='model_best.pth.tar',  # best model obtained in training
                        help='saved model')
    parser.add_argument('--no-cuda', action='store_true', default=False,
                        help='disables CUDA training')
    parser.add_argument('--gpu-ids', type=str, default='0',  # single-GPU testing by default
                        help='use which gpu to train, must be a '
                             'comma-separated list of integers only (default=0)')
    parser.add_argument('--dataset', type=str, default='pascal',
                        choices=['pascal', 'coco', 'cityscapes', 'invoice'],
                        help='dataset name (default: pascal)')
    parser.add_argument('--crop-size', type=int, default=512,
                        help='crop image size')
    parser.add_argument('--num_classes', type=int, default=21,  # change to your own number of classes
                        help='number of classes')
    parser.add_argument('--sync-bn', type=bool, default=None,
                        help='whether to use sync bn (default: auto)')
    parser.add_argument('--freeze-bn', type=bool, default=False,
                        help='whether to freeze bn parameters (default: False)')
    args = parser.parse_args()
    args.cuda = not args.no_cuda and torch.cuda.is_available()
    if args.cuda:
        try:
            args.gpu_ids = [int(s) for s in args.gpu_ids.split(',')]
        except ValueError:
            raise ValueError('Argument --gpu_ids must be a comma-separated list of integers only')
    if args.sync_bn is None:
        if args.cuda and len(args.gpu_ids) > 1:
            args.sync_bn = True
        else:
            args.sync_bn = False

    model_s_time = time.time()
    model = Unet(n_channels=3, n_classes=args.num_classes)
    ckpt = torch.load(args.ckpt, map_location='cpu')
    model.load_state_dict(ckpt['state_dict'])
    if args.cuda:
        model = model.cuda()
    model.eval()
    model_u_time = time.time()
    model_load_time = model_u_time - model_s_time
    print("model load time is {}".format(model_load_time))

    composed_transforms = transforms.Compose([
        tr.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
        tr.ToTensor()])

    os.makedirs(args.out_path, exist_ok=True)
    for name in os.listdir(args.in_path):
        s_time = time.time()
        image = Image.open(os.path.join(args.in_path, name)).convert('RGB')
        # The transforms expect an image/label pair; a grayscale copy serves as a dummy label
        target = Image.open(os.path.join(args.in_path, name)).convert('L')
        sample = {'image': image, 'label': target}
        tensor_in = composed_transforms(sample)['image'].unsqueeze(0)
        if args.cuda:
            tensor_in = tensor_in.cuda()
        with torch.no_grad():
            output = model(tensor_in)
        # argmax over the class dimension -> class index map -> color image
        grid_image = make_grid(decode_seg_map_sequence(torch.max(output[:3], 1)[1].detach().cpu().numpy()),
                               3, normalize=False, range=(0, 9))
        # The prediction is saved under the test image's base name in --out-path
        save_image(grid_image, os.path.join(args.out_path, "{}.png".format(os.path.splitext(name)[0])))
        u_time = time.time()
        img_time = u_time - s_time
        print("image:{} time: {} ".format(name, img_time))

    print("images saved in {}.".format(args.out_path))


if __name__ == "__main__":
    main()

# Usage: python demo.py --in-path your_test_dir --out-path your_dst_dir
```

After modifying demo.py, run it from the Terminal in PyCharm:

```shell
python demo.py --in-path /home/user/U-Net/test
```

--in-path /home/user/U-Net/test is the path to your test images.

Left: original image; right: predicted image.

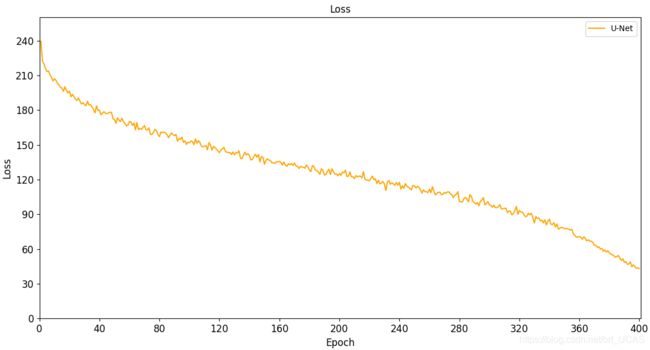

The loss curve of the Unet training process can be plotted from the loss.txt saved during training.
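A minimal sketch for reading loss.txt back, assuming the line format written by the train.py snippet (`epoch:<n> cross entropy:<loss>`); the regular expression also accepts the spelling `cross entroy`, so logs written with either spelling parse. `read_loss_log` is a hypothetical helper.

```python
import re

# Matches lines such as "epoch:3 cross entropy:0.412" (or "cross entroy:").
LOSS_LINE = re.compile(r"epoch:(\d+)\s+cross entr\w*:([-+0-9.eE]+)")


def read_loss_log(path):
    """Return (epochs, losses) parsed from loss.txt, skipping non-matching lines."""
    epochs, losses = [], []
    with open(path) as f:
        for line in f:
            m = LOSS_LINE.search(line)
            if m:
                epochs.append(int(m.group(1)))
                losses.append(float(m.group(2)))
    return epochs, losses
```

The two lists can then be passed to a plotting library, for instance `matplotlib.pyplot.plot(epochs, losses)`. Because the file is opened in append mode during training, restarting a run appends to the same file, so repeated epoch numbers indicate earlier runs.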

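IV. Compute Per-Class IoU and mIoU on the Test Set

The predictions from the test above are 24-bit (RGB) images, which cannot be compared with the corresponding 8-bit ground truth, so the per-class mIoU of the test set cannot be computed from them. We therefore improved demo.py further into demo_mIoU.py (download link); running demo_mIoU.py produces 8-bit grayscale images: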

Left: original image; right: 8-bit prediction.


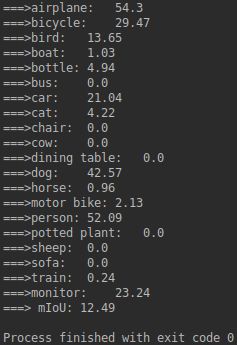
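demo_mIoU.py itself is not shown here. The conversion it needs is the inverse of colorizing: every predicted color is mapped back to its class index, which gives a single-channel map whose pixel values are class ids, like the ground truth. The pure-Python sketch below only illustrates that idea, assuming the prediction was colored exactly with a known palette (for example the rows of get_pascal_labels()); `rgb_to_index` is a hypothetical helper. It may be simpler in practice to save the argmax of the network output directly as an 8-bit image instead of colorizing it first.

```python
def rgb_to_index(rgb_rows, palette, unknown=255):
    """Map a color prediction back to class indices (an 8-bit label map).

    rgb_rows: list of rows, each a list of (r, g, b) tuples.
    palette:  list of [r, g, b] colors, where palette[k] is the color of class k.
    Colors that are not in the palette get `unknown` (255, the VOC "ignore" value).
    """
    lookup = {tuple(color): idx for idx, color in enumerate(palette)}
    return [[lookup.get(tuple(px), unknown) for px in row] for row in rgb_rows]
```

A dictionary lookup keeps the cost at one hash per pixel, independent of the number of classes.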

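Next, mIoU.py (download link) computes the per-class IoU between the test-set predictions and the ground truth. The output of mIoU.py is as follows:

In the figure above, the left column lists the class names and the right column the IoU of each class on the test set; the last row, mIoU, is the mean IoU over the test set. Because the model was only briefly trained on VOC2012 data and only 50 images were selected for the test set, the resulting test-set mIoU is low.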
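mIoU.py itself is not reproduced here. As a hedged sketch of the usual computation: accumulate a confusion matrix over all test pixels (rows = ground-truth class, columns = predicted class), compute IoU_k = TP_k / (TP_k + FP_k + FN_k) for each class k, and average over the classes to get mIoU. Pixels labeled 255 (the VOC convention for boundary/ignored pixels) are skipped. The function names are hypothetical, and the inputs are plain nested lists of class indices, such as the 8-bit maps described above.

```python
def confusion_matrix(gt, pred, n_classes, ignore=255, cm=None):
    """Add one image to an n_classes x n_classes matrix (rows = gt, cols = pred)."""
    if cm is None:
        cm = [[0] * n_classes for _ in range(n_classes)]
    for g_row, p_row in zip(gt, pred):
        for g, p in zip(g_row, p_row):
            if g == ignore:
                continue  # skip unlabeled / boundary pixels
            cm[g][p] += 1
    return cm


def per_class_iou(cm):
    """IoU_k = TP / (TP + FP + FN); None for classes absent from both gt and pred."""
    ious = []
    for k in range(len(cm)):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                 # class-k pixels predicted as another class
        fp = sum(row[k] for row in cm) - tp  # other pixels predicted as class k
        denom = tp + fp + fn
        ious.append(tp / denom if denom else None)
    return ious


def mean_iou(ious):
    """Average the IoU over classes that actually occur."""
    valid = [v for v in ious if v is not None]
    return sum(valid) / len(valid) if valid else 0.0
```

To cover the whole test set, pass the same matrix back in for every image (`cm = confusion_matrix(gt_i, pred_i, n, cm=cm)`) and compute the IoU once at the end; this weights every pixel equally instead of averaging per-image scores.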

Corrections from readers are welcome; thank you.