Setting up a kafka_2.11-2.1.1 cluster on CentOS 7 (using the bundled ZooKeeper)

1. Environment preparation

- Prepare three servers: 192.168.0.128, 192.168.0.129, and 192.168.0.130. Every step below is performed identically on all three unless stated otherwise.

-

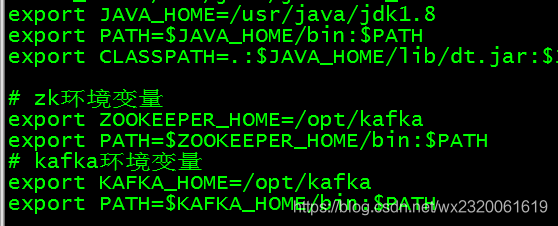

jdk1.8、zk、kafka安装并配置环境变量,我这里的zk用的kafka自带的

# JDK installation is omitted here; download Kafka

wget http://mirror.bit.edu.cn/apache/kafka/2.1.1/kafka_2.11-2.1.1.tgz

tar -zxvf kafka_2.11-2.1.1.tgz

mv kafka_2.11-2.1.1 kafka

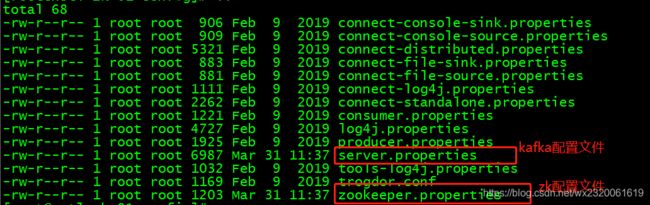

2. Check the files under config in the Kafka installation directory

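3. Create the data and log directories

Because ZooKeeper is the one bundled with Kafka, its data and log directories have to be created by hand, along with the Kafka log directory. The configuration files in the following steps point to these paths: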

/opt/kafka/zookeeper

/opt/kafka/log/zookeeper

/opt/kafka/log/kafka
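The three directories above can be created in one call. This is a minimal sketch, assuming Kafka lives under /opt/kafka as the paths suggest; the function name `create_kafka_dirs` is made up for illustration.

```shell
# Create the ZooKeeper data directory and the two log directories.
# The base directory defaults to /opt/kafka (an assumption taken from the paths above).
create_kafka_dirs() {
    base="${1:-/opt/kafka}"
    mkdir -p "$base/zookeeper" "$base/log/zookeeper" "$base/log/kafka"
}
```

Running `create_kafka_dirs` with no argument on each of the three servers should produce the layout listed above.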

4. Set up the ZooKeeper cluster first

Build the ZooKeeper cluster directly with the ZooKeeper bundled with Kafka. Edit config/zookeeper.properties:

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# the directory where the snapshot is stored.

dataDir=/opt/kafka/zookeeper

dataLogDir=/opt/kafka/log/zookeeper

# the port at which the clients will connect

clientPort=12181

# disable the per-ip limit on the number of connections since this is a non-production config

#maxClientCnxns=0

tickTime=2000

initLimit=10

syncLimit=5

server.0=192.168.0.128:2888:3888

server.1=192.168.0.129:2888:3888

server.2=192.168.0.130:2888:3888

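Note: do not leave any stray spaces in the file (trailing spaces in particular), otherwise startup fails with an error. This one is easy to trip over.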
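Stray whitespace is hard to spot by eye, so it can help to scan the file before starting ZooKeeper. The sketch below lists every line that ends in a space or tab; the function name `check_trailing_space` is hypothetical and not part of Kafka.

```shell
# Print every line of a properties file that ends in whitespace.
# Returns 0 when the file is clean, 1 when offending lines were found,
# and 2 when the file cannot be read.
check_trailing_space() {
    [ -r "$1" ] || return 2
    if grep -n '[[:space:]]$' "$1"; then
        return 1
    fi
    return 0
}
```

For example, `check_trailing_space config/zookeeper.properties` prints the numbered offending lines, if there are any.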

5. Set up the Kafka cluster

Edit config/server.properties:

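# broker.id is the unique identifier of a broker and must differ on each server; use 0, 1, and 2 respectively

broker.id=0

# Use each server's own IP address. advertised.listeners matters: it is commented out by default and must be uncommented.

listeners=PLAINTEXT://192.168.0.128:9092

advertised.listeners=PLAINTEXT://192.168.0.128:9092

# Directory where Kafka stores its message data (the commit log segments), not application logs

log.dirs=/opt/kafka/log/kafka

# Allow topics to be deleted for real

delete.topic.enable=true

# ZooKeeper connection string, identical on all three servers

zookeeper.connect=192.168.0.128:12181,192.168.0.129:12181,192.168.0.130:12181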
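Only broker.id and the two listener lines differ between servers, so the per-host edits can be scripted. The following is a sketch rather than an official tool: `set_broker_identity` is a hypothetical helper, and it assumes the three keys each appear once at the start of a line, optionally commented out with a single `#`, as they do in the stock server.properties.

```shell
# Rewrite broker.id, listeners and advertised.listeners in a server.properties file.
# Arguments: <file> <broker id> <this host's IP>
set_broker_identity() {
    file="$1"
    id="$2"
    ip="$3"
    tmp="$(mktemp)"
    # Uncomment (if needed) and overwrite the three host-specific keys.
    sed -e "s|^broker\.id=.*|broker.id=${id}|" \
        -e "s|^#\{0,1\}listeners=.*|listeners=PLAINTEXT://${ip}:9092|" \
        -e "s|^#\{0,1\}advertised\.listeners=.*|advertised.listeners=PLAINTEXT://${ip}:9092|" \
        "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Copy the content back instead of moving, to keep the original file permissions.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

On 192.168.0.129, for instance, this would be called as `set_broker_identity config/server.properties 1 192.168.0.129`.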

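6. Create the myid file that ZooKeeper needs

Step 3 created the ZooKeeper data directory, /opt/kafka/zookeeper. Create the myid file inside it. Command (this value is for 192.168.0.129, which is server.1): echo 1 > myid

Write 0, 1, and 2 into myid on the three servers respectively. Being different from each other is not enough: the value must match the N of that server's server.N line in zookeeper.properties, so 192.168.0.128 gets 0, 192.168.0.129 gets 1, and 192.168.0.130 gets 2. Then check the content of myid.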
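Writing and checking the file can be done in one step. This is a small sketch; `write_myid` is a hypothetical helper name, and the data directory is passed in rather than hard-coded.

```shell
# Write the ZooKeeper id of this host into <data_dir>/myid and print it back.
# The id should be the N of this host's server.N line in zookeeper.properties.
write_myid() {
    data_dir="$1"
    id="$2"
    printf '%s\n' "$id" > "$data_dir/myid"
    cat "$data_dir/myid"
}
```

On 192.168.0.130, for example, the call would be `write_myid /opt/kafka/zookeeper 2`.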


The configuration is now complete.

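7. Testing

Opening the firewall ports is left to you and is not covered here. With the configuration above, the ports in use are 12181, 2888, 3888, and 9092.

Start order: ZooKeeper first, then Kafka. Stop order: Kafka first, then ZooKeeper.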

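- Start ZooKeeper on all three servers. If no error is reported, it started successfully. With -daemon the output goes to the logs directory rather than the console, so look there for errors.

# Run from the Kafka directory:

bin/zookeeper-server-start.sh -daemon config/zookeeper.properties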

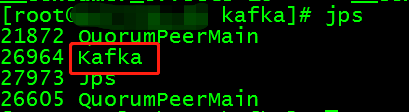

- Start Kafka

# Run from the Kafka directory:

bin/kafka-server-start.sh -daemon config/server.properties

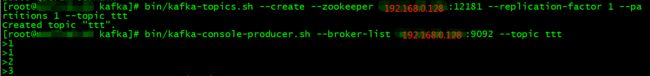
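Since both services are started with -daemon, a quick way to see whether they are listening is to probe their ports. The sketch below assumes bash and the coreutils `timeout` command are available; `port_open` and `check_cluster_ports` are hypothetical helper names, and the hosts and ports are the ones configured above.

```shell
# Return 0 if a TCP connection to host:port succeeds within 2 seconds.
# Relies on bash's /dev/tcp pseudo-device, so it is run through bash explicitly.
port_open() {
    timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Report the state of the ZooKeeper client port and the Kafka listener on every node.
check_cluster_ports() {
    for host in 192.168.0.128 192.168.0.129 192.168.0.130; do
        for port in 12181 9092; do
            if port_open "$host" "$port"; then
                echo "$host:$port open"
            else
                echo "$host:$port CLOSED"
            fi
        done
    done
}
```

An open port only shows that the process is listening. It does not prove that the ZooKeeper quorum has formed or that the brokers have registered, so the topic test that follows is still worth running.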

- Create a topic (for example, on the 128 server)

bin/kafka-topics.sh --create --zookeeper 192.168.0.128:12181 --replication-factor 1 --partitions 1 --topic test
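The topic above has a single replica, so it lives on one broker only. To exercise the whole cluster, a topic can instead be replicated across all three brokers and then inspected with --describe. This is a sketch under assumptions: `create_replicated_topic` is a hypothetical wrapper, and the choice of 3 partitions is arbitrary.

```shell
# Create a topic replicated on all three brokers, then show its partition layout.
# Arguments: <kafka home> <zookeeper host:port> <topic name>
create_replicated_topic() {
    kafka_home="$1"
    zk="$2"
    topic="$3"
    "$kafka_home/bin/kafka-topics.sh" --create --zookeeper "$zk" \
        --replication-factor 3 --partitions 3 --topic "$topic" || return 1
    # The describe output lists the leader, replicas and in-sync replicas per partition.
    "$kafka_home/bin/kafka-topics.sh" --describe --zookeeper "$zk" --topic "$topic"
}
```

A call such as `create_replicated_topic /opt/kafka 192.168.0.128:12181 test3` (the topic name test3 is only an example) should list three replicas per partition if all brokers joined the cluster.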

- Run the producer (on the 128 server), then type a few messages into the console to send them to the server.

# Run from the Kafka directory:

bin/kafka-console-producer.sh --broker-list 192.168.0.128:9092 --topic test

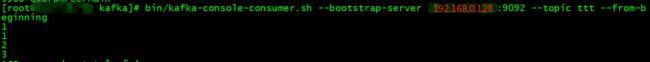

- Kafka also ships a command-line consumer (run here on the 129 server) that dumps messages to standard output.

# Run from the Kafka directory:

bin/kafka-console-consumer.sh --bootstrap-server 192.168.0.128:9092 --topic test --from-beginning